DeepSeek-R1 Takes on Doubao and Kimi: The Race for Domestic AI Supremacy

02/07 2025

With daily active users surpassing 20 million and strategic alliances forged with industry giants like China Mobile, Huawei, Kingsoft Office, and Geely Automobile, DeepSeek has reached new heights in its journey.

As tech titans rush to embrace AI, many companies are pouring resources into data and computing chips, aiming for technological supremacy and assembling clusters of tens of thousands of accelerator cards. DeepSeek, however, has taken a more frugal path, emphasizing "doing more with less." The reported training cost of its V3 model is just $5.576 million, and its latest model, R1, built on V3, is reportedly on par with OpenAI's o1.
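For context, the $5.576 million figure comes from DeepSeek's V3 technical report, which converts GPU time into dollars at an assumed rental price of $2 per H800 GPU-hour and, by the report's own note, covers only the official training run, excluding prior research and ablation experiments:

```latex
\begin{aligned}
\underbrace{2{,}664\text{K}}_{\text{pre-training}}
+ \underbrace{119\text{K}}_{\text{context extension}}
+ \underbrace{5\text{K}}_{\text{post-training}}
&= 2{,}788\text{K H800 GPU-hours} \\
2.788\times10^{6}\ \text{GPU-hours} \times \$2/\text{GPU-hour}
&= \$5.576\times10^{6}
\end{aligned}
```

The figure is therefore best read as the rental-equivalent cost of one training run, not the total cost of developing the model.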

With a training cost that is a fraction of that of other AI large models, can DeepSeek-R1 truly match o1 and outshine its domestic counterparts?

The proof is in the pudding. Lei decided to pit DeepSeek-R1 against four other prominent AI large models in China—Doubao, Kimi, ERNIE Bot, and Tongyi Qianwen—to ascertain whether DeepSeek-R1 lives up to its billing.

Challenging the Four Major AI Heavyweights: Will DeepSeek Emerge Victorious?

As AI large models gain optimized architectures, more computing power, and more parameters, their capabilities grow more diverse and more nuanced. For this test, Lei chose three everyday tasks: content analysis, creative writing, and mathematical reasoning.

The five large models participating in the test are DeepSeek-R1, Doubao Lark, Kimi-k1.5, ERNIE 3.5, and Tongyi Qianwen 2.5, all available for free.

Content Analysis: DeepSeek-R1 Shines Bright

To streamline their workflows, professionals often rely on AI tools to summarize documents and PDFs. Lei selected the "Lifestyle and Marketing Trends of Young People in 2024" report, jointly released by JD.com and China Business Network, to see whether the models could distill its key points and help him quickly grasp the characteristics of young consumers in 2024.

In past evaluations, Lei found that AI struggled to identify a document's core content and produced repetitive output. In just three to four months, however, the summarization capabilities of AI large models have improved remarkably.

In this round, all models performed well except Tongyi Qianwen, which showed no significant progress and produced overly terse summaries that omitted critical information. Doubao and Kimi not only outlined the top ten trends for 2024 but also categorized the outlook for lifestyle trends in 2025. Kimi further highlighted that post-90s and post-00s consumers accounted for more than half, underscoring the importance of young consumers. ERNIE Bot's performance was average: it summarized the top ten trends for 2024 but overlooked the outlook for 2025.

(Image source: Screenshot from Tongyi Qianwen)

DeepSeek-R1, the focus of this evaluation, excelled. For each trend it summarized, it cited data or products as examples to back up its points, making the content more credible. Many AI-generated articles are easy to spot because they tend toward empty content with no concrete examples; DeepSeek-R1 has clearly reached a higher level of sophistication.

(Image source: Screenshot from DeepSeek)

In summary, DeepSeek-R1 proved its mettle in this round, outperforming the other four AI large models. Among the others, Doubao and Kimi stood out, while the free version of ERNIE 3.5 performed adequately, and Tongyi Qianwen lagged behind.

Creative Writing: DeepSeek Reigns Supreme Again

On February 5, the online novel platform China Reading and the digital publisher ChineseAll announced that they had integrated DeepSeek-R1 to boost authors' creativity with AI. But can AI truly replace online novelists?

Lei asked the models to write a martial arts novel of 5,000 to 10,000 words in the style of Gu Long, based on the following outline:

"Ye Feishuang, the top swordsman of the Tiannan Sword Sect, challenges Murong Chen, the leader of the Taixuan Sect, to a duel atop Huashan Mountain. Each brings disciples from their respective sects to support them. However, Murong Chen secretly collaborates with five major criminal organizations, aiming to annihilate the Tiannan Sword Sect. In reality, the Tiannan Sword Sect is a force planted in the martial world by the Six Departments, aiming to lure out the criminal organizations through this sect duel and eliminate them in one fell swoop. As the criminal organizations join forces with the Taixuan Sect to besiege the Tiannan Sword Sect disciples, the Six Departments' army attacks from behind, completely eradicating the criminal organizations and the Taixuan Sect that have plagued the region."

Unlike the narrowly scoped earlier tests, writing a martial arts novel, even one constrained by an outline, leaves ample room for creativity, so the differences in style among the major AI large models become more apparent.

In this round, Doubao and Kimi named their novels "Sword Shadow and Storm Chronicles" and "Dragon Shadow and Frost Chronicles," respectively, which deviate from the naming style of most Gu Long novels but align more with Liang Yusheng's style. DeepSeek-R1 and ERNIE Bot did not name their novels, while Tongyi Qianwen simply titled it "Peak of Huashan."

(Image source: Screenshot from Doubao)

In terms of content, Tongyi Qianwen remained at the bottom, lacking detailed descriptions and plot twists. It didn't introduce any characters or sect names not mentioned by Lei. Kimi generated content of better quality with richer details and a deeper understanding of the outline, but like Tongyi Qianwen, it focused solely on the outlined characters.

DeepSeek-R1, ERNIE Bot, and Doubao produced even higher-quality content, with fully fleshed-out characters, moves, and sect names, along with numerous plot twists and rich details. In DeepSeek-R1's novel, for instance, the two protagonists were once close friends who became enemies over a woman, setting the stage for a sequel. In ERNIE Bot's version, Ye Feishuang nearly loses control during the battle but is saved by his senior martial brother. Doubao added a plot in which Ye Feishuang is betrayed by his closest friend after achieving fame and fortune.

(Image source: Screenshot from DeepSeek)

Unfortunately, ERNIE Bot's story ignored the Six Departments mentioned in the outline, reducing the plot to a simple martial-world feud. The later part of Doubao's story did not depict the villain in enough depth, which slightly lowered Lei's rating.

In this round, DeepSeek-R1 again outpaced the other AI large models. That is not to say the others performed poorly; ERNIE Bot and Doubao exceeded Lei's expectations. DeepSeek-R1 simply did better, weaving in emotional entanglements, plot twists, and an ending that captured the essence of Gu Long's style.

At this stage, AI large models still struggle with novel writing and need users to make their outlines as detailed as possible. Lei's hastily written outline was too general, which may explain the subpar performances of Tongyi Qianwen and Kimi.

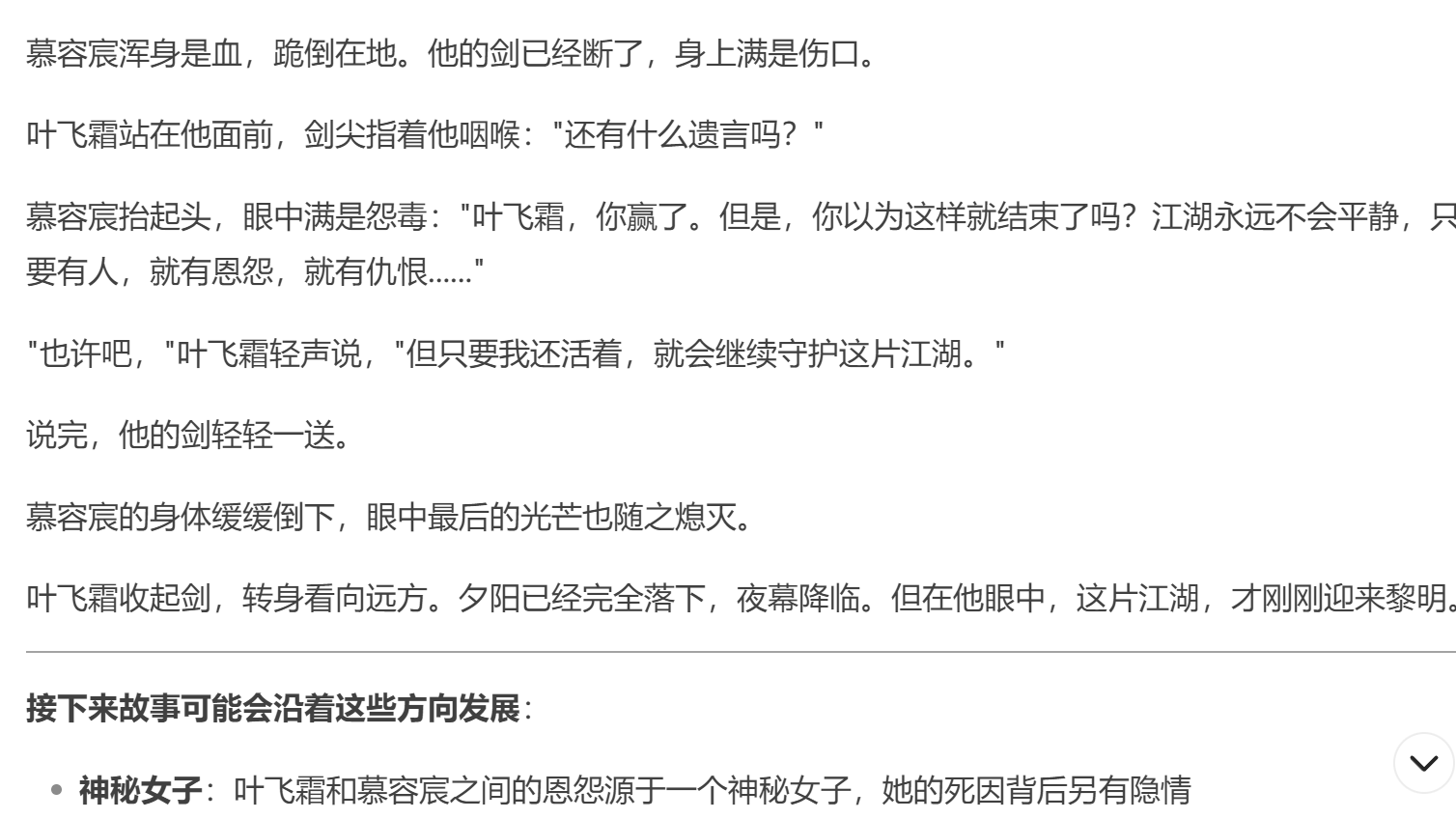

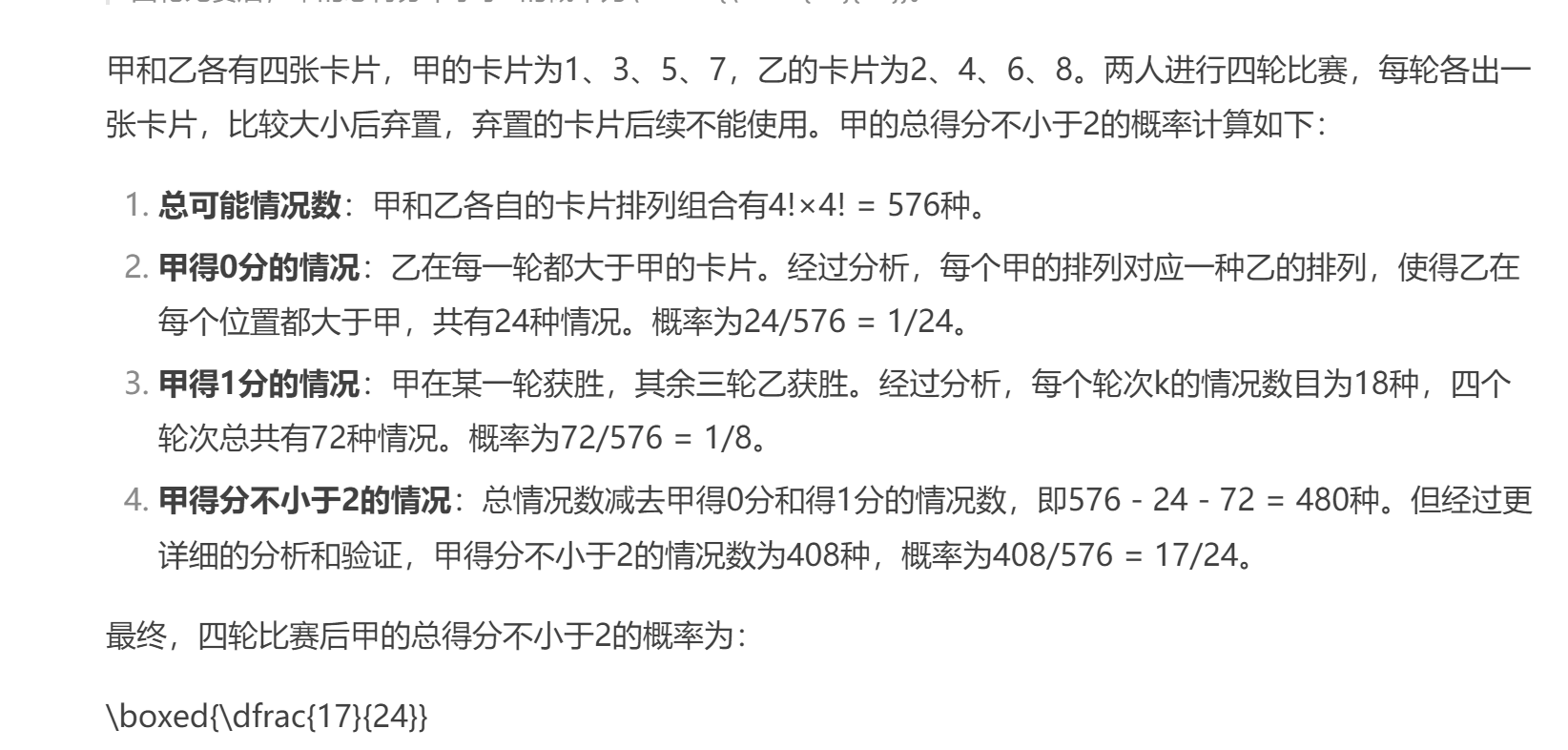

Mathematical Reasoning: A Persistent Hurdle for AI Large Models

In 2024, an Apple engineer published a paper criticizing AI large models for lacking genuine mathematical reasoning abilities, accusing AI companies of exaggerating their capabilities. Subsequently, major AI companies launched new large model versions, touting "complex reasoning" as a selling point. But after several months, do AI large models truly possess reasoning abilities?

For this round, Lei chose Question 14 from Paper I of the 2024 National College Entrance Examination:

"Jia and Yi each have four cards, each labeled with a number. Jia's cards are labeled 1, 3, 5, and 7, and Yi's are labeled 2, 4, 6, and 8. They play four rounds. In each round, each player randomly selects one of their own remaining cards and the two numbers are compared: the player with the larger number scores 1 point, and the other scores 0. Both players then discard the cards they played, which cannot be used in later rounds. What is the probability that Jia's total score after four rounds is at least 2? (Correct answer: 1/2)"

From a human perspective, this problem is not particularly hard: even listing every possibility by hand does not take long. For AI large models, however, it proved extremely daunting. DeepSeek-R1 and Doubao both answered 17/24, while Kimi, ERNIE Bot, and Tongyi Qianwen answered 1971/4096, 243/256, and 551/576, respectively. None of the five answers is correct.

(Image source: Screenshot from DeepSeek)
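The "list all possibilities" approach mentioned above can be sketched in a few lines of Python. The key observation is that, since both players draw uniformly at random from their unused cards, the whole game amounts to a uniformly random one-to-one pairing of Jia's cards with Yi's, so all 4! = 24 pairings are equally likely. The names `JIA`, `YI`, and `prob_jia_at_least` are illustrative helpers, not part of the original problem.

```python
from fractions import Fraction
from itertools import permutations

JIA = (1, 3, 5, 7)  # Jia's cards
YI = (2, 4, 6, 8)   # Yi's cards


def prob_jia_at_least(min_points: int) -> Fraction:
    """Exact probability that Jia scores at least `min_points` in four rounds.

    Each ordering of Yi's cards, lined up against Jia's cards, is one
    equally likely pairing of the two hands.
    """
    pairings = list(permutations(YI))
    favourable = sum(
        1
        for yi_order in pairings
        # Jia scores a point in every pair where her card is larger.
        if sum(j > y for j, y in zip(JIA, yi_order)) >= min_points
    )
    return Fraction(favourable, len(pairings))


print(prob_jia_at_least(2))  # prints 1/2
```

Exactly 12 of the 24 pairings give Jia two or more points, which confirms the official answer of 1/2; using exact fractions avoids any floating-point rounding in the comparison.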

Lei then gave the problem to OpenAI's o1, o3-mini, and GPT-4. All three reached the correct answer, with only minor slips, such as o1 writing "2=1/2" in its output, which did not affect its otherwise correct calculation. This suggests that DeepSeek-R1 still trails OpenAI's models in mathematical reasoning.

(Image source: Screenshot from o1 large model)

More intriguing than the absurd answers are the models' reasoning processes: DeepSeek-R1 and Kimi-k1.5 repeatedly broke off their chains of thought and switched to new approaches. Mathematical reasoning remains a formidable challenge for current AI large models, and DeepSeek-R1, which led the other domestic models in the first two tests, failed to pull ahead in this round.

Success is seldom a walk in the park, and DeepSeek's achievements are well-deserved.

In December 2024, when DeepSeek-V3 first launched, Lei tested it and concluded that it could rival Doubao and Kimi in content summarization and text generation but lagged behind other AI assistants in the breadth of its features.

In just over a month, R1, built on V3, achieved a qualitative leap, surpassing well-known models such as Doubao, Kimi, ERNIE Bot, and Tongyi Qianwen in content summarization and text generation. Of course, all models still struggle with mathematical reasoning, where OpenAI keeps its lead.

DeepSeek-R1's capabilities alone could not have made such an impact. The crucial factor is cost: a training cost of roughly $6 million (the figure reported for V3, on which R1 is built), far lower than GPT-4's and estimated to be 1/200 or less of GPT-5's.

Previously, the prevailing view was that improving AI large models required piling up computing power and buying data, and AI companies were doing exactly that. Xiaomi, for instance, plans to build a cluster of tens of thousands of accelerator cards, and ByteDance aims to spend 40 billion yuan on AI chips by 2025. Macquarie analysts questioned whether DeepSeek had concealed part of its development costs, estimating that training the R1 model should have cost around $2.6 billion.

DeepSeek, however, showed that just a few million dollars, less than nine figures in RMB, can train a model that rivals OpenAI's o1. In the wake of DeepSeek-R1, the share price of NVIDIA, the world's main supplier of AI computing chips, plunged. Although it has recovered slightly over the past two days, it has not yet returned to its peak.
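A quick sanity check of the "less than nine figures in RMB" claim, as a sketch: it converts the $5.576 million V3 training cost cited earlier at an assumed rate of 7.3 CNY per USD (the article gives no exchange rate) and also computes how high the rate would have to be for the cost to reach nine figures. `CNY_PER_USD` and `digit_count` are hypothetical names chosen for illustration.

```python
from decimal import Decimal

V3_COST_USD = Decimal("5576000")  # reported DeepSeek-V3 training cost
CNY_PER_USD = Decimal("7.3")      # ASSUMED exchange rate, not from the article


def digit_count(amount: Decimal) -> int:
    """Number of digits in the integer part of a positive amount."""
    return len(str(int(amount)))


# Decimal keeps the conversion exact (no binary floating-point error).
cost_cny = V3_COST_USD * CNY_PER_USD
print(cost_cny, digit_count(cost_cny))  # prints 40704800.0 8

# Nine figures means at least 100,000,000 CNY; rate needed to get there:
break_even_rate = Decimal(100_000_000) / V3_COST_USD
print(round(break_even_rate, 2))  # prints 17.93
```

At about 40.7 million yuan the cost has eight digits, and the exchange rate would need to exceed roughly 17.93 CNY per USD before it reached nine, so the claim does not hinge on the exact rate assumed.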

Thanks to DeepSeek-R1's outstanding performance, DeepSeek has instantly become one of the hottest names in the AI industry, partnering with giants across many sectors. Even Huawei, known for its strength in industrial AI, has integrated DeepSeek-R1 into its Xiaoyi assistant. Because of the flood of users, DeepSeek's official website has frequently returned "server busy" errors, and the top-up page for API credits has been closed.

Although DeepSeek-R1's training and inference costs are low, the influx of users has outstripped DeepSeek's current computing capacity. What Chinese enterprises excel at is scaling from 1 to infinity: DeepSeek has set the precedent, and other AI companies will swiftly follow suit. If DeepSeek wants to retain this surge of traffic, it urgently needs to add computing power and improve the user experience.

Source: Lei Technology