What are the Tests for Autonomous Driving Technology?

06/10 2025

Previously, we discussed the necessity of testing autonomous driving technology before implementation, focusing on aspects such as safety, reliability, user experience, and protection of commercial interests. Today, AIoT Auto delves deeper into the various methods employed in autonomous driving testing.

What constitutes the tests for autonomous driving technology?

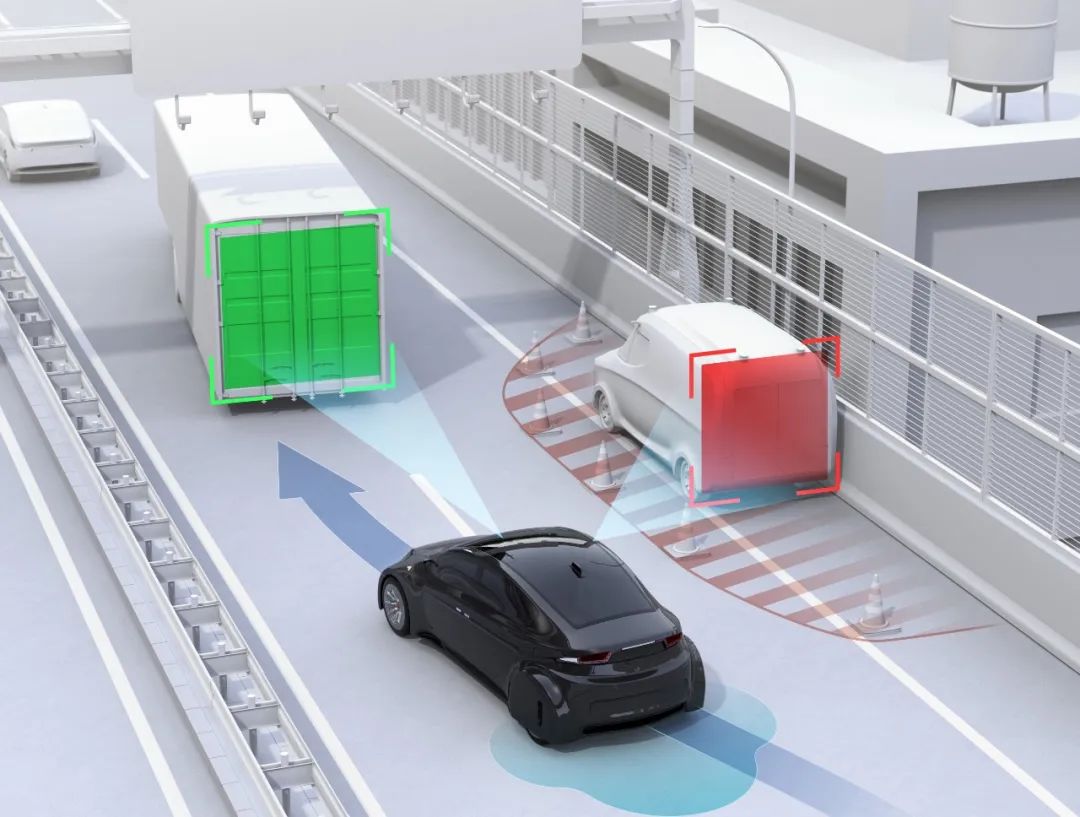

Autonomous driving systems undergo a multi-layered testing process, including simulation testing, laboratory testing, and road testing. Each level serves distinct purposes and goals, collectively forming a comprehensive testing framework.

Simulation testing serves as the initial gateway. It involves embedding algorithms into a virtual environment to validate the system using simulated sensor data and road scenarios. On the simulation platform, various traffic scenarios like merging, sudden pedestrian appearances, traffic signal changes, and complex road signs can be defined. This allows the system to perceive and respond in a "virtual world." Simulation testing's low cost and high efficiency enable teams to cover numerous scenarios quickly, identify algorithm vulnerabilities, and evaluate different strategies. Additionally, it simulates extreme weather conditions and complex road environments, allowing the system to "practice" edge scenarios before real-world testing.
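To make the idea of a defined scenario concrete, here is a minimal sketch of a "sudden pedestrian appearance" case. It is not a real simulation platform: the `PedestrianScenario` fields, the 1-D vehicle model, and the full-braking policy are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class PedestrianScenario:
    """Hypothetical scenario: a pedestrian appears in the lane ahead of the ego car."""
    ego_speed_mps: float          # initial ego speed
    pedestrian_pos_m: float       # pedestrian position, measured from the ego start
    appear_time_s: float          # time at which the pedestrian becomes visible
    max_decel_mps2: float = 6.0   # assumed braking capability


def run_scenario(s: PedestrianScenario, dt: float = 0.01) -> dict:
    """Step a 1-D ego vehicle until it stops or reaches the pedestrian."""
    pos, speed, t = 0.0, s.ego_speed_mps, 0.0
    while speed > 0.0:
        if pos >= s.pedestrian_pos_m:
            return {"collision": True, "stop_gap_m": 0.0}
        # Toy policy under test: brake fully once the pedestrian is visible.
        accel = -s.max_decel_mps2 if t >= s.appear_time_s else 0.0
        speed = max(0.0, speed + accel * dt)
        pos += speed * dt
        t += dt
    return {"collision": False, "stop_gap_m": s.pedestrian_pos_m - pos}
```

Sweeping `appear_time_s` or `ego_speed_mps` over a grid and collecting the cases where `collision` is true is one cheap way to cover many variants of the same scenario before any real vehicle is involved.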

While simulation testing effectively assesses autonomous driving capabilities, it's not identical to reality. Hardware-in-the-Loop (HIL) testing becomes the next crucial step. Here, the system's core hardware devices interact in real-time with the simulation platform. The platform sends virtual sensor signals to the actual Electronic Control Unit (ECU), which then feeds back decision results to the simulation environment, driving the virtual vehicle model or other virtual traffic participants. This setup allows testing sensor failures, signal delays, network packet losses, and other scenarios with real hardware, verifying system performance at the hardware integration level.
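The closed loop between the simulation platform and the ECU can be sketched in software. In a real HIL bench the ECU is physical hardware reached over a vehicle bus; here `ecu_stub` is a hypothetical stand-in, and the link delay and packet-loss parameters are assumptions used to illustrate fault injection.

```python
import random
from collections import deque


def ecu_stub(range_m):
    """Stand-in for the real ECU: brake when the last known range is short."""
    return -6.0 if range_m is not None and range_m < 30.0 else 0.0


def run_hil_loop(delay_steps=0, drop_prob=0.0, seed=0, dt=0.01):
    """Drive a virtual car toward an obstacle; return the remaining gap in meters.

    A result <= 0 means the virtual vehicle reached the obstacle.
    """
    rng = random.Random(seed)
    pos, speed, obstacle = 0.0, 15.0, 60.0
    in_flight = deque([None] * delay_steps)  # models link latency
    last_seen = None                         # ECU holds the last delivered value
    while speed > 0.0 and pos < obstacle:
        measurement = obstacle - pos         # virtual sensor signal
        # Fault injection: drop the message with probability drop_prob.
        in_flight.append(None if rng.random() < drop_prob else measurement)
        delivered = in_flight.popleft()
        if delivered is not None:
            last_seen = delivered
        accel = ecu_stub(last_seen)          # decision fed back to the simulation
        speed = max(0.0, speed + accel * dt)
        pos += speed * dt
    return obstacle - pos
```

With no faults the car stops roughly 11 m short of the obstacle; with `delay_steps=100` (one second of latency at `dt=0.01`) the same logic no longer stops in time, which is the kind of integration-level weakness this stage is meant to expose.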

Simulation and HIL testing alone cannot fully capture real-road complexity. Hence, closed-course and open road testing follow. Closed-course testing takes place at professional test sites that feature scenarios like sharp turns, steep slopes, circular lanes, and simulated intersections. It allows sensing performance to be assessed under varied lighting, humidity, and dust conditions. Because control of the vehicle can be retaken in real time, safety is maintained if the test vehicle encounters problems.

Open road testing is the most challenging and most valuable stage. Test vehicles drive on urban, highway, and suburban roads and interact with real traffic. Because situations are unpredictable, test teams often need to apply for licenses and assign safety officers. The goal is to expose the system to authentic real-world scenarios, verify its robustness and safety, and generate valuable data for analysis.

Beyond these mainstream stages, there are tests aimed at specific scenarios. Extreme scenario testing focuses on low-probability but high-impact events. Meteorological and dust laboratories simulate various conditions to check system robustness. Replay testing feeds historical sensor data back into the system to verify that a new version performs better in the same scenarios, which saves cost and speeds up issue resolution.
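Replay testing can be illustrated with a minimal sketch: the same recorded frames are fed to two versions of an algorithm and the results are compared against labels. The log format, the field names, and both detectors below are hypothetical.

```python
def replay(log, algorithm):
    """Feed each recorded frame to the algorithm and count disagreements with labels."""
    misses = sum(1 for f in log if f["label"] and not algorithm(f))
    false_alarms = sum(1 for f in log if not f["label"] and algorithm(f))
    return {"misses": misses, "false_alarms": false_alarms}


# Hypothetical recorded frames; "label" marks whether an obstacle was really present.
recorded_log = [
    {"lidar_range_m": 12.0, "camera_conf": 0.9, "label": True},
    {"lidar_range_m": 45.0, "camera_conf": 0.4, "label": True},
    {"lidar_range_m": 80.0, "camera_conf": 0.1, "label": False},
    {"lidar_range_m": 38.0, "camera_conf": 0.2, "label": False},
]


def detector_v1(frame):
    """Older version (assumed): range threshold only."""
    return frame["lidar_range_m"] < 40.0


def detector_v2(frame):
    """Newer version (assumed): camera confidence threshold."""
    return frame["camera_conf"] >= 0.3


print(replay(recorded_log, detector_v1))
print(replay(recorded_log, detector_v2))
```

Because the input is identical for both runs, any change in the counts can be attributed to the algorithm change rather than to differences in traffic or weather.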

Fuzz testing, or random stress testing, deliberately adds noise or erroneous data to sensor data or control commands to check that the system stays safe under adverse conditions. It exposes vulnerabilities and provides clues for optimization.
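A minimal fuzzing sketch, under stated assumptions: `brake_command` is a toy controller, the fault types in `corrupt` are illustrative, and the safety property checked is simply that the output is a finite brake effort in [0, 1].

```python
import math
import random


def brake_command(range_m: float) -> float:
    """Toy controller under test: returns brake effort in [0, 1]."""
    if not math.isfinite(range_m) or range_m < 0.0:
        return 1.0  # fail safe: treat invalid input as "obstacle close"
    return min(1.0, max(0.0, (30.0 - range_m) / 30.0))


def corrupt(value: float, rng: random.Random) -> float:
    """Return the value with one randomly chosen fault applied."""
    fault = rng.choice(["noise", "nan", "inf", "negative", "stuck_zero"])
    if fault == "noise":
        return value + rng.gauss(0.0, 5.0)
    if fault == "nan":
        return float("nan")
    if fault == "inf":
        return float("inf")
    if fault == "negative":
        return -abs(value)
    return 0.0  # stuck_zero


def fuzz(controller, trials: int = 10_000, seed: int = 0) -> list:
    """Return the (input, output) pairs that violated the safety property."""
    rng = random.Random(seed)
    failures = []
    for _ in range(trials):
        bad = corrupt(rng.uniform(0.0, 100.0), rng)
        out = controller(bad)
        # NaN fails this check too, since comparisons with NaN are False.
        if not (isinstance(out, float) and 0.0 <= out <= 1.0):
            failures.append((bad, out))
    return failures
```

Running `fuzz` against a naive controller such as `lambda r: (30.0 - r) / 30.0` returns many failing inputs, while the guarded `brake_command` returns none; the failing inputs are the "optimization clues" the text refers to.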

Human-Machine Interface (HMI) evaluation ensures user experience and ease of use. Assessments include button response times, dashboard and sound prompt intuitiveness, and passenger comfort during autonomous lane changes. This optimizes interface design and prompt priorities, ensuring both safety and a positive user experience.

The testing system is an iterative, closed-loop process. From unit testing to open road testing, each stage balances cost and benefit. Issues discovered later are fed back to earlier stages, ensuring continuous improvement.

Autonomous Driving Testing Reference Indicators

Testing evaluates safety risk through the number of collisions, emergency braking events, and driver takeovers per million kilometers. Functional performance covers perception accuracy for pedestrians, vehicles, and traffic signs, as well as detection distances for road edges and obstacles. Positioning accuracy is measured as the lateral and longitudinal error between the vehicle's actual position and its position on the high-precision map. Planning and decision-making performance is assessed through path deviation, especially in emergency scenarios. At the control level, the focus is on response delay and control error during command execution.
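Two of these indicators reduce to short calculations. The sketch below normalizes an event count to a per-million-kilometer rate and splits a position error into longitudinal and lateral components relative to the vehicle heading. The function names and the sign convention (lateral positive to the left of the heading) are assumptions.

```python
import math


def per_million_km(event_count: int, distance_km: float) -> float:
    """Normalize an event count (collisions, takeovers, ...) to events per million km."""
    return event_count * 1_000_000 / distance_km


def position_errors(ref_x, ref_y, ref_heading_rad, est_x, est_y):
    """Split the position error into (longitudinal, lateral) components.

    The reference pose is the assumed ground truth; the error vector is
    projected onto the heading direction and onto its left-hand normal.
    """
    dx, dy = est_x - ref_x, est_y - ref_y
    cos_h, sin_h = math.cos(ref_heading_rad), math.sin(ref_heading_rad)
    longitudinal = dx * cos_h + dy * sin_h
    lateral = -dx * sin_h + dy * cos_h
    return longitudinal, lateral
```

For example, 3 takeovers over 250,000 km corresponds to 12 takeovers per million kilometers. A vehicle heading north whose estimate is 1.0 m ahead and 0.2 m to the east of the reference has a longitudinal error of about 1.0 m and a lateral error of about -0.2 m under this convention.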

Extreme environment testing considers the impact of temperature and humidity on sensors and hardware. High temperatures may cause sensors to malfunction, while cold environments may frost over camera lenses. These tests check that the system remains stable across such conditions.

Future Trends in Autonomous Driving Testing

As autonomous driving technology evolves, its testing methodologies evolve with it. Testing has moved from early rule-based test scripts to today's intelligent scenario generation enhanced by machine learning, and may in future integrate deeply with digital twins and virtual reality. In the process, it is shifting from "manually crafted test cases" to "automatically generated high-risk scenarios." Backed by big data and artificial intelligence, data-driven approaches can automatically discover extreme risk scenarios in large driving data sets and generate corresponding test scripts, so that the system can "rehearse" these high-risk scenarios in a simulated environment first. This improves both testing efficiency and coverage, speeds up the identification of system vulnerabilities, and shortens development cycles.
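As a simplified illustration of mining risk scenarios from driving data, the sketch below uses a time-to-collision (TTC) threshold as the risk measure. This is a plain rule-based proxy swapped in for illustration, not the machine-learning generation described above, and the log fields and threshold are hypothetical.

```python
def time_to_collision(gap_m: float, ego_speed_mps: float, lead_speed_mps: float) -> float:
    """TTC = gap / closing speed; infinite when the ego car is not closing in."""
    closing = ego_speed_mps - lead_speed_mps
    return gap_m / closing if closing > 0 else float("inf")


def mine_scenarios(log, ttc_threshold_s: float = 2.0):
    """Turn low-TTC log records into scenario parameter sets, riskiest first."""
    scenarios = []
    for rec in log:
        ttc = time_to_collision(rec["gap_m"], rec["ego_speed_mps"], rec["lead_speed_mps"])
        if ttc < ttc_threshold_s:
            scenarios.append({
                "initial_gap_m": rec["gap_m"],
                "ego_speed_mps": rec["ego_speed_mps"],
                "lead_speed_mps": rec["lead_speed_mps"],
                "ttc_s": round(ttc, 2),
            })
    return sorted(scenarios, key=lambda s: s["ttc_s"])


# Hypothetical driving log records.
driving_log = [
    {"gap_m": 50.0, "ego_speed_mps": 20.0, "lead_speed_mps": 18.0},
    {"gap_m": 12.0, "ego_speed_mps": 20.0, "lead_speed_mps": 10.0},
    {"gap_m": 8.0, "ego_speed_mps": 15.0, "lead_speed_mps": 5.0},
    {"gap_m": 30.0, "ego_speed_mps": 10.0, "lead_speed_mps": 12.0},
]

mined = mine_scenarios(driving_log)
```

Each mined dictionary can then serve as the initial condition of a simulated test case, so the riskiest moments in the recorded data are rehearsed first.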

Final Thoughts

Autonomous driving functions need comprehensive, systematic testing before deployment for one fundamental reason: to ensure they operate safely and reliably in a complex real-world environment. Testing is more than "bug hunting"; it is a multi-faceted process encompassing technical validation, safety assurance, regulatory compliance, user experience enhancement, and protection of commercial interests. Simulation testing efficiently validates algorithm concepts, while hardware-in-the-loop testing verifies that hardware and software work together. Closed-course and open-road testing subject the system to the most authentic and rigorous assessments. Techniques like extreme scenario testing, replay testing, and fuzz testing further address special risk scenarios that conventional testing might overlook, strengthening the system's robustness across as wide a range of conditions as possible.

Autonomous driving testing resembles a meticulous movie production. From scripting to editing, from actors to set design, every aspect must be carefully executed to deliver a complete, seamless, and realistic experience to the audience when they enter the "cinema." Similarly, the testing team must put the autonomous driving system through countless rehearsals on the "stage" so that it performs reliably at its official release. Only when the system accurately recognizes "virtual pedestrians" and "virtual vehicles" on the simulation platform, robustly handles "fake obstacles" and "fake traffic lights" in a closed venue, and anticipates and safely navigates "red light chaos" and "pedestrian intrusions" on real roads, can we confidently extend the autonomous driving function to a broader audience and diverse road environments.
