AI Mobile Phone Design: SoC Architecture, Energy Efficiency, and Communications

06/12 2025

Produced by Zhineng Zhixin

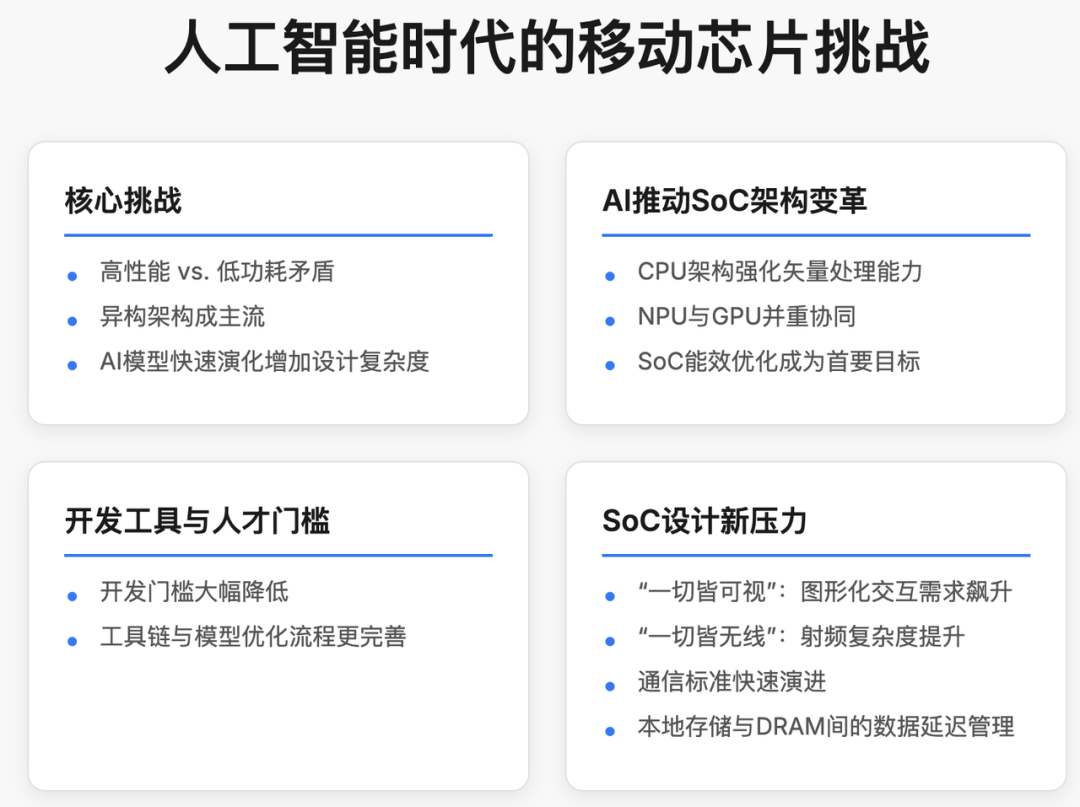

As generative AI, multimodal models, and edge intelligence advance, smartphones must handle ever-increasing computational loads.

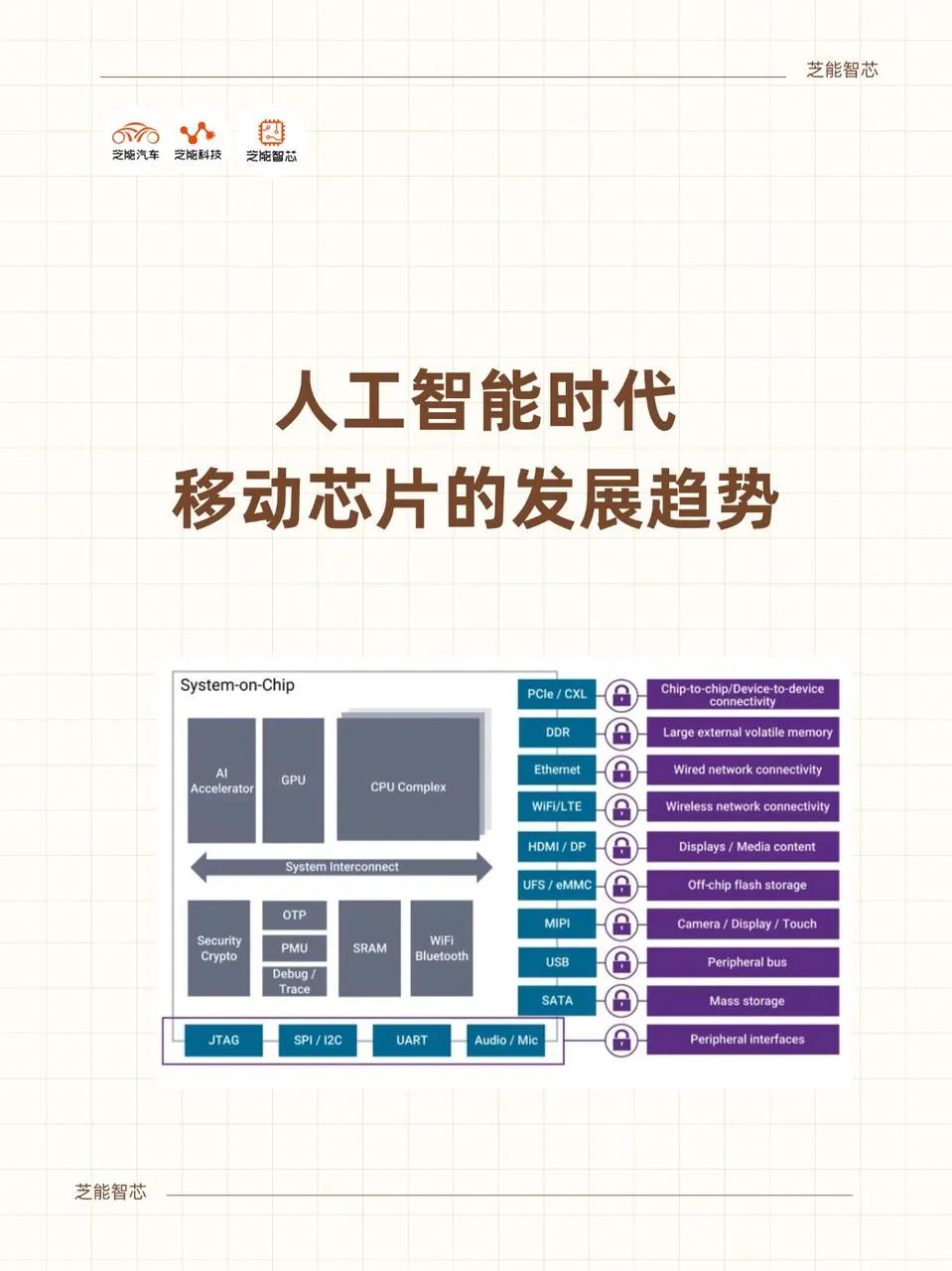

Supporting complex AI models, evolving communication protocols, and richer human-computer interaction while keeping power consumption low poses unprecedented challenges for chip design. Heterogeneous computing, dedicated AI processing units, optimized DRAM and storage interfaces, and future-proof architectures are becoming hallmarks of high-end SoCs.

This article examines the evolution and technical trends of SoC architecture and analyzes the bottlenecks and breakthroughs mobile AI faces at the hardware level.

Part 1

Reshaping AI with Heterogeneous Architectures:

Division of Labor and Collaboration among CPU, GPU, and NPU

In recent years, high-end smartphone SoCs have widely adopted heterogeneous computing architectures. The idea is not to pile on more processing units but to assign each computational task to the specialized core best suited to its characteristics.

In a typical mobile SoC, the CPU (usually built from Arm cores) handles system control and general-purpose tasks, the GPU handles graphics and some general-purpose parallel computing, and the NPU (Neural Processing Unit) specializes in AI inference.
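As a rough illustration of this division of labor, the sketch below routes tasks to a compute unit by workload type. The task categories and the routing table are hypothetical simplifications; real schedulers also weigh current load, thermals, and which operators each unit supports.

```python
from dataclasses import dataclass
from enum import Enum


class Unit(Enum):
    CPU = "cpu"
    GPU = "gpu"
    NPU = "npu"


@dataclass(frozen=True)
class Task:
    name: str
    kind: str  # hypothetical categories: "control", "graphics", "parallel", "tensor"


# Hypothetical routing table: each workload type goes to the unit built for it.
ROUTING = {
    "control": Unit.CPU,   # branchy system logic, I/O, OS services
    "graphics": Unit.GPU,  # rendering pipelines
    "parallel": Unit.GPU,  # general-purpose data-parallel compute
    "tensor": Unit.NPU,    # dense matrix/tensor math for AI inference
}


def pick_unit(task: Task) -> Unit:
    # Anything the table does not recognize falls back to the CPU.
    return ROUTING.get(task.kind, Unit.CPU)


if __name__ == "__main__":
    for t in (Task("touch_event", "control"), Task("render_frame", "graphics"),
              Task("llm_decode", "tensor"), Task("unknown_job", "misc")):
        print(f"{t.name} -> {pick_unit(t).value}")
```

Falling back to the CPU for unrecognized work mirrors its role as the general-purpose manager of the system.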

Generative AI, and Transformer-based models in particular, demands far more of the hardware than traditional algorithms do: denser matrix operations, heavier memory traffic, and higher bandwidth. Even a lightweight model like TinyLlama, despite its relatively small parameter count, needs efficient tensor processing, which is where NPUs excel.
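A back-of-the-envelope calculation shows why memory bandwidth matters as much as raw compute. During autoregressive decoding, each generated token reads roughly every weight once, so DRAM bandwidth caps the token rate. The sketch assumes about 1.1 billion parameters (TinyLlama's approximate size) and an effective DRAM bandwidth of 60 GB/s; the bandwidth is an illustrative assumption, and the estimate ignores KV-cache traffic, activations, and on-chip caching.

```python
# Bytes per weight for common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_bytes(num_params: float, dtype: str) -> float:
    """Bytes needed to hold the model weights at a given precision."""
    return num_params * BYTES_PER_PARAM[dtype]


def max_decode_tokens_per_s(num_params: float, dtype: str, dram_gb_s: float) -> float:
    """Bandwidth-bound ceiling: each decoded token streams every weight once.

    Ignores KV-cache traffic, activations, and caching, so real rates are lower.
    """
    return dram_gb_s * 1e9 / weight_bytes(num_params, dtype)


PARAMS = 1.1e9    # roughly TinyLlama's parameter count
DRAM_GB_S = 60.0  # assumed effective DRAM bandwidth, not a measured figure

if __name__ == "__main__":
    for dtype in ("fp16", "int8", "int4"):
        gb = weight_bytes(PARAMS, dtype) / 1e9
        tps = max_decode_tokens_per_s(PARAMS, dtype, DRAM_GB_S)
        print(f"{dtype}: {gb:.2f} GB of weights, at most {tps:.0f} tokens/s")
```

Under these assumptions the ceiling is roughly 27, 55, and 109 tokens/s for FP16, INT8, and INT4 weights, one reason low-bit formats and memory-efficient accelerators matter on phones.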

Large language models and multimodal models go further still: their activation functions, attention mechanisms, and vector operations are far more complex than what traditional AI accelerators were originally designed for, pushing NPUs to grow in scale and become more programmable.

GPUs are also being adapted for AI. Some vendors have added GPU units dedicated to low-bit data types such as FP8 and INT4 to improve energy efficiency.
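To make the low-bit idea concrete, here is a minimal sketch of symmetric per-tensor INT4 quantization, one common scheme among several (per-channel and group-wise variants are also widely used). It is not any vendor's implementation: each weight is stored as a 4-bit integer q in [-8, 7] plus one shared floating-point scale, and reconstructed as scale * q.

```python
def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT4 quantization: w is approximated by scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude to 7 so positive and negative ranges match.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the signed 4-bit range [-8, 7].
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int4(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from INT4 codes and the shared scale."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.7, -0.35, 0.1, -0.7, 0.02]
    q, scale = quantize_int4(w)
    w_hat = dequantize_int4(q, scale)
    err = max(abs(a - b) for a, b in zip(w, w_hat))
    print(q, f"scale={scale:.4f}", f"max_error={err:.4f}")
```

Two 4-bit codes fit in one byte, so the weights take a quarter of the space of FP16, at the cost of a rounding error of at most half the scale per weight.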

To make further use of the graphics units, some architectures integrate NPU technology into the GPU pipeline, creating a unified vector computing framework. This "AI-GPU fusion" trend improves the dynamic scheduling of compute resources and eases the cost and thermal-design pressure that comes from adding ever more dedicated die area.

The crux is the energy efficiency of the parallel architecture. At the ALU (Arithmetic Logic Unit) level, vendors are reducing the energy consumed per AI inference through careful arithmetic-engine design, dynamic voltage scaling, and optimized multithreaded pipelines.

At the application level, always-on speech recognition, camera detection, and background object recognition require the NPU to respond in real time within a power budget of milliwatts. Energy efficiency is therefore not merely a supplement to performance metrics; it is the guiding principle that steers architectural evolution.
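Why "milliwatt-level" matters can be seen with simple duty-cycle arithmetic. The sketch assumes, purely for illustration, an NPU that draws 200 mW while running a 5 ms wake-word inference every 100 ms and 1 mW while idle, plus a 5,000 mAh, 3.85 V battery; none of these numbers come from a specific chip.

```python
def energy_per_inference_mj(active_mw: float, active_ms: float) -> float:
    """Energy of one inference: mW * ms gives microjoules, so divide by 1000 for mJ."""
    return active_mw * active_ms / 1000


def average_power_mw(active_mw: float, idle_mw: float,
                     active_ms: float, period_ms: float) -> float:
    """Average power of a workload active for active_ms out of every period_ms."""
    duty = active_ms / period_ms
    return duty * active_mw + (1 - duty) * idle_mw


def daily_battery_share(avg_mw: float, capacity_mah: float, voltage_v: float) -> float:
    """Fraction of a full battery consumed by avg_mw running for 24 hours."""
    daily_mwh = avg_mw * 24
    battery_mwh = capacity_mah * voltage_v  # mAh * V = mWh
    return daily_mwh / battery_mwh


if __name__ == "__main__":
    # Illustrative assumptions, not measurements of any particular SoC.
    avg = average_power_mw(active_mw=200, idle_mw=1, active_ms=5, period_ms=100)
    print(f"energy/inference = {energy_per_inference_mj(200, 5):.2f} mJ")
    print(f"average power    = {avg:.2f} mW")
    print(f"battery per day  = {daily_battery_share(avg, 5000, 3.85):.1%}")
```

With these assumptions the task averages about 11 mW and uses roughly 1.4% of the battery per day; doubling the active power nearly doubles that figure, which is why always-on workloads are judged by energy per inference.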

Part 2

Memory, Connectivity, and Communications:

The Next Bottleneck for SoCs

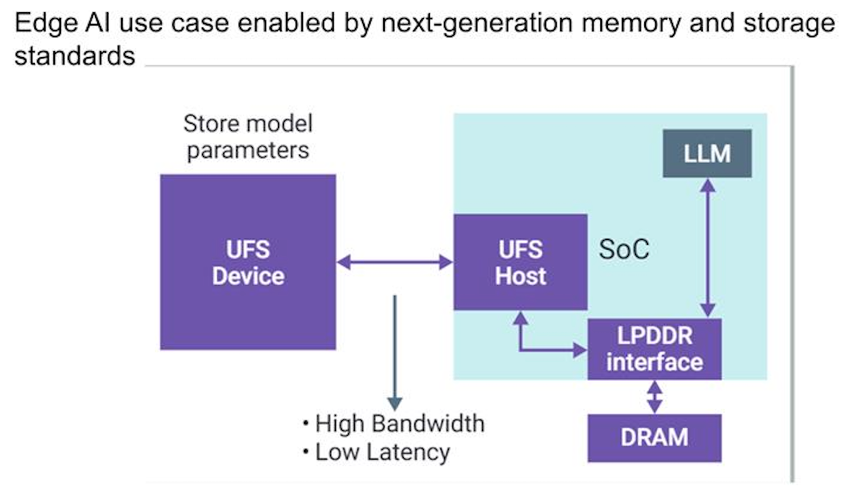

As models grow, raw compute is no longer the only concern for an SoC: memory access paths, data-loading latency, and connectivity bandwidth have become critical bottlenecks that limit the AI experience.

Generative AI inference depends on fetching model weights and context from DRAM in real time. When an LLM is deployed on a phone, for instance, it is often impossible to keep all parameters in memory at once; instead, segments are read from UFS storage into DRAM and processed, and the results are returned to the user. If these transfer delays are poorly controlled, the experience suffers even when the model itself performs well.
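The load-then-process sequence can be estimated with a simple timing model. The sketch assumes a UFS read rate of 4,200 MB/s (a peak sequential figure quoted for UFS 4.0 parts; fragmented reads are slower), 64 MB weight segments, and 10 ms of compute per segment. It compares a strictly serial schedule with double buffering, where the next segment is prefetched while the current one is processed; double buffering is a common technique added here for comparison, not something the article specifies.

```python
def load_ms(segment_mb: float, ufs_mb_s: float) -> float:
    """Time to read one segment from flash at a sustained rate, in milliseconds."""
    return segment_mb / ufs_mb_s * 1000


def serial_ms(loads: list[float], computes: list[float]) -> float:
    """Load a segment, process it, then load the next: nothing overlaps."""
    return sum(loads) + sum(computes)


def double_buffered_ms(loads: list[float], computes: list[float]) -> float:
    """Read segment i+1 into a second DRAM buffer while segment i is processed."""
    total = loads[0]  # the first segment must arrive before any compute starts
    for i in range(len(loads) - 1):
        # Compute on segment i+1 starts once compute on i and the load of i+1 finish.
        total += max(computes[i], loads[i + 1])
    return total + computes[-1]


if __name__ == "__main__":
    n = 8
    loads = [load_ms(64, 4200)] * n  # assumed 64 MB segments at 4,200 MB/s
    computes = [10.0] * n            # assumed 10 ms of NPU work per segment
    print(f"serial:          {serial_ms(loads, computes):.1f} ms")
    print(f"double-buffered: {double_buffered_ms(loads, computes):.1f} ms")
```

Under these assumptions the totals are about 202 ms versus 132 ms. Because loading is slower than compute here, flash bandwidth sets the pace, so the storage path matters even with a fast NPU.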

This raises the bar for UFS controllers and the SoC's connectivity path. The UFS 4.x specifications now being adopted target not only higher throughput but also fast wake-up from low-power states for reads and writes.

The reason is that AI model invocations generate many small, intermittent reads, which can wake the storage controller again and again and waste energy. Control strategies therefore lean toward "in-place computing" and "minimum wakeup": frequently used parts of the model are cached in DRAM, flash is accessed only when necessary, and local SRAM inside the AI inference engine is used to avoid activating the full data path.
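The "minimum wakeup" strategy can be sketched as a DRAM-resident cache of model segments that falls back to flash only on a miss, batching all misses of one request into a single flash access. LRU eviction is an assumption standing in for "frequently used parts", and `_read_from_flash` is a hypothetical stub that only counts wake-ups rather than talking to a real UFS driver.

```python
from collections import OrderedDict


class SegmentCache:
    """LRU cache of model segments kept in DRAM; misses are served from flash."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.cache: "OrderedDict[str, bytes]" = OrderedDict()
        self.flash_wakeups = 0

    def _read_from_flash(self, keys: list[str]) -> dict[str, bytes]:
        # Hypothetical stand-in for a UFS read: one wake-up serves the whole batch.
        self.flash_wakeups += 1
        return {k: f"<weights:{k}>".encode() for k in keys}

    def _put(self, key: str, data: bytes) -> None:
        self.cache[key] = data
        self.cache.move_to_end(key)  # mark as most recently used
        while len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used segment

    def get_many(self, keys: list[str]) -> list[bytes]:
        unique = list(dict.fromkeys(keys))  # de-duplicate, keep request order
        # Snapshot hits before inserting anything, so eviction cannot remove them.
        found = {k: self.cache[k] for k in unique if k in self.cache}
        missing = [k for k in unique if k not in found]
        if missing:
            found.update(self._read_from_flash(missing))  # single batched wake-up
        for k in unique:
            self._put(k, found[k])  # insert misses and refresh recency of hits
        return [found[k] for k in keys]


if __name__ == "__main__":
    cache = SegmentCache(capacity=4)
    cache.get_many(["embed", "layer0", "layer1"])  # cold start: one batched flash read
    cache.get_many(["layer0", "layer1"])           # all hits: flash stays asleep
    print(cache.flash_wakeups)                      # 1
```

Serving a whole batch of misses with one access is what keeps the controller from waking once per fragment.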

The continuing evolution of communication protocols also creates significant SoC adaptation challenges. Phones integrate radios ranging from 5G, Wi-Fi 6E, and Bluetooth 5.x to UWB and Near Field Communication (NFC), each requiring its own RF transceiver chain and antenna configuration.

High-end phones now carry more than six antennas, so interference between RF paths, noise coupling, and power-management coordination have become system-engineering issues that SoC design cannot overlook.

These wireless links no longer serve only traditional data transfer; they are part of the AI decision-making system. Some multimodal AI systems, for example, fuse input from Bluetooth headphones, real-time 5G video streams, and camera images for joint analysis.

In this kind of collaboration, the QoS (Quality of Service) of the communication modules directly affects AI performance, so the SoC must be able to sense network conditions dynamically and adjust AI processing priorities accordingly.
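One way to let measured network conditions drive AI priorities is slack-based scheduling: for each job, subtract the current link delay of its input and its compute time from its deadline, then run the job with the least slack first. This is a minimal sketch under assumed numbers; the source names, delays, and deadlines are hypothetical, and a real system would smooth link measurements over time.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Job:
    slack_ms: float                   # ordering key: least slack runs first
    name: str = field(compare=False)


def schedule(jobs: list[tuple[str, str, float, float]],
             link_delay_ms: dict[str, float]) -> list[str]:
    """Return job names in execution order.

    Each job is (name, input_source, deadline_ms, compute_ms). link_delay_ms holds
    the latest measured delay per input source; unknown sources count as local (0 ms).
    """
    heap: list[Job] = []
    for name, source, deadline_ms, compute_ms in jobs:
        arrival_ms = link_delay_ms.get(source, 0.0)
        heapq.heappush(heap, Job(deadline_ms - arrival_ms - compute_ms, name))
    return [heapq.heappop(heap).name for _ in range(len(heap))]


if __name__ == "__main__":
    jobs = [("caption_video", "5g_video", 100.0, 20.0),
            ("transcribe_audio", "bt_audio", 100.0, 20.0)]
    print(schedule(jobs, {"5g_video": 10.0, "bt_audio": 40.0}))  # healthy 5G link
    print(schedule(jobs, {"5g_video": 60.0, "bt_audio": 40.0}))  # degraded 5G link
```

With a healthy 5G link the audio job has less slack and runs first; once the 5G delay rises, the video job moves to the front, so priorities follow the network rather than a fixed order.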

At the interface-standard level, organizations such as the MIPI Alliance are also evolving their protocols to suit AI data-transmission scenarios.

New generations of MIPI interfaces must not only support higher bandwidth but also allow direct access to on-chip accelerators, shortening the data path. For example, whether camera images can be fed directly to the NPU over MIPI, rather than being routed through the CPU, is a key criterion for judging the efficiency of a system architecture.
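A quick bandwidth estimate shows why the data path matters. The sketch assumes a 3840x2160 YUV420 stream (1.5 bytes per pixel) at 30 fps and counts DRAM passes per frame: about two when the frame is written once and read once by the NPU, versus about four when the CPU also reads and rewrites it. The pass counts are illustrative assumptions, not measurements of any specific SoC.

```python
def frame_bytes(width: int, height: int, bytes_per_pixel: float) -> float:
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def dram_traffic_mb_s(width: int, height: int, bytes_per_pixel: float,
                      fps: float, passes: int) -> float:
    """DRAM traffic in MB/s when every frame crosses DRAM `passes` times."""
    return frame_bytes(width, height, bytes_per_pixel) * fps * passes / 1e6


if __name__ == "__main__":
    # Assumed pass counts: write + NPU read (2) vs write + CPU read/write + NPU read (4).
    for label, passes in (("direct to NPU", 2), ("via CPU", 4)):
        mb_s = dram_traffic_mb_s(3840, 2160, 1.5, 30, passes)
        print(f"{label:>13}: {mb_s:.0f} MB/s")
```

That is roughly 750 versus 1,500 MB/s for a single camera stream under these assumptions, and every extra pass also costs DRAM energy.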

SoC Evolution Roadmap for the Future of AI

For smartphones, AI is no longer a question of whether to deploy it but of how to deploy it efficiently. SoC vendors must deliver not only faster computation but also more flexible, programmable, and self-adaptive system architectures that can accommodate new models.

Future mobile SoCs must possess the following three core characteristics:

◎ Heterogeneous Computing: Clear division of labor among CPU, GPU, and NPU, coordinated through a unified tensor programming interface, to handle everything from traditional image AI to multimodal GenAI;

◎ Memory and Connectivity Optimization: Coordinated tuning across UFS controllers, DRAM scheduling, and on-chip cache architecture to contain the surge in power consumption caused by frequent, low-latency memory access;

◎ Standard Ecosystem Collaboration: From MIPI to UFS, from AI model standards to compiler toolchains, integrated software and hardware capabilities have become the second battlefield for SoC competition.
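As a rough picture of what a "unified tensor programming interface" could mean, the sketch below exposes a single `matmul` call and dispatches it to the first backend, in preference order, that supports the requested data type. `TensorRuntime`, `StubBackend`, and the dtype sets are hypothetical; real mobile runtimes expose their own APIs and decide placement per operator and per graph.

```python
from typing import Protocol


class Backend(Protocol):
    name: str

    def supports(self, dtype: str) -> bool: ...
    def matmul(self, a: list[list[float]], b: list[list[float]]) -> list[list[float]]: ...


def _reference_matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    # Plain-Python kernel shared by the stub backends below.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class StubBackend:
    """Hypothetical backend; a real one would call a vendor runtime."""

    def __init__(self, name: str, dtypes: set[str]) -> None:
        self.name = name
        self.dtypes = set(dtypes)

    def supports(self, dtype: str) -> bool:
        return dtype in self.dtypes

    def matmul(self, a, b):
        return _reference_matmul(a, b)


class TensorRuntime:
    """One matmul entry point that dispatches to the first capable backend."""

    def __init__(self, backends: list[Backend]) -> None:
        self.backends = backends  # in order of preference, e.g. NPU, GPU, CPU

    def matmul(self, a, b, dtype: str):
        for backend in self.backends:
            if backend.supports(dtype):
                return backend.name, backend.matmul(a, b)
        raise ValueError(f"no backend supports {dtype}")


if __name__ == "__main__":
    rt = TensorRuntime([StubBackend("npu", {"int8", "int4"}),
                        StubBackend("gpu", {"fp16", "fp8"}),
                        StubBackend("cpu", {"fp32", "fp16"})])
    print(rt.matmul([[1, 2]], [[3], [4]], "int4"))
    print(rt.matmul([[1, 2]], [[3], [4]], "fp32"))
```

Application code stays the same when a new accelerator appears: adding a backend to the preference list is enough, and unsupported types fall through to the next capable unit.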

With hardware and software working together, AI will soon stop being a badge of flagship devices and become a standard capability in mid-range and even entry-level phones. Delivering maximum performance at minimal power and weaving AI seamlessly into the user experience will determine the technical direction of the next generation of mobile devices.

Summary

As chip design tools, model compilation technologies, and AI inference frameworks mature, the flexibility and scalability of SoC design will become important drivers of AI progress. Future chip architectures will be designed not only for "hardcoded AI" but also to support "AI that has yet to be born," marking the dawn of a new era of intelligent mobile computing.