AI Glasses Revolutionizing App Ecosystem: Millions of Apps at Risk of "Collective Unemployment"?

06/12 2025

By VR Tuo Luo / Ran Qixing

The ascendancy of large AI models has profoundly transformed the way users interact with information, with a particularly significant disruption to traditional search engines.

Apple executive Eddy Cue previously disclosed that Google search queries on Apple devices declined for the first time in Q1 2025. Market research firm Gartner predicted last year that traffic to traditional search engines would fall 25% by 2026 as users shift to AI tools.

As the "premier hardware carrier for large AI models," AI glasses, which gained popularity in 2024, are also quietly changing human-computer interaction and reshaping the familiar app ecosystem.

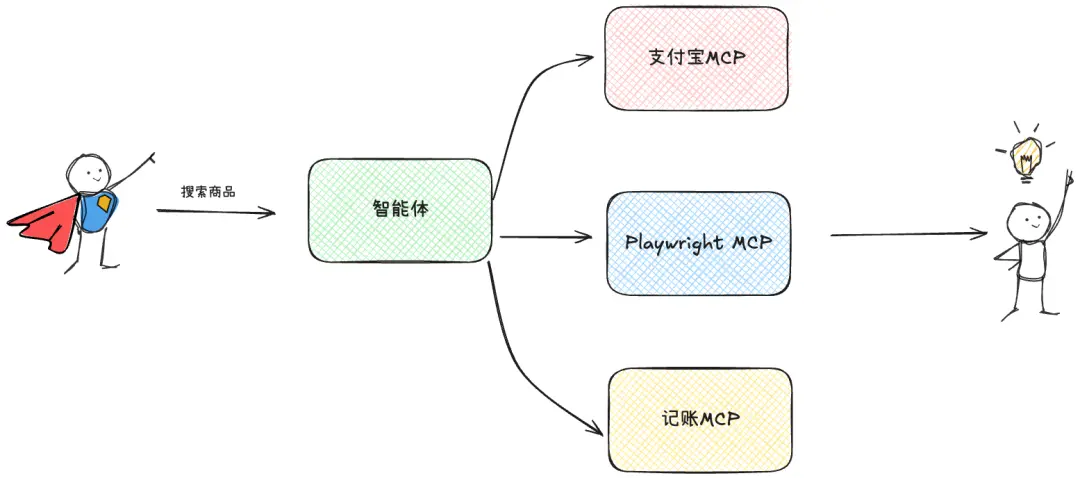

By integrating multimodal AI, AI glasses can invoke system-level functions and MCP services directly through AI Agents, bypassing traditional app stores and the process of launching standalone software. A shift this fundamental is bound to have a major impact on the existing software ecosystem.

Of course, this change will not happen overnight, nor will it affect every app equally. Games that demand top-tier visuals and heavy interaction, along with complex applications in specialized professional fields, will have a longer lifecycle, lasting until the performance, display quality, battery life, and interaction methods of AI glasses match or surpass those of mature devices such as smartphones.

Evolution from "Wearable 3D Display" to "Smart Assistant"

Looking back at the development of smart glasses, early products such as Google Glass, with its prism-based optics, were closer to notification displays. Later products such as Magic Leap 2 and HoloLens 2 delivered exceptional 3D display and spatial computing, but their high cost and bulk hindered consumer adoption.

Many tethered (split-design) products built on Birdbath optics strike a balance among cost, display quality, and wearing comfort, but they mainly serve as a "second screen" for smartphones or computers, addressing the need for a portable 3D large-screen display.

However, the real game-changer is AI, particularly the integration of multimodal large AI models. Whether combined with ordinary camera glasses or paired with AR waveguide glasses, these models have transformed the smart glasses experience and added real value.

A prime example is Ray-Ban Meta, which has surpassed 2 million units sold in the market. This collaboration between Meta and EssilorLuxottica isn't just a fashionable pair of glasses; it also incorporates Meta AI. Users can activate the AI assistant with the voice command "Hey Meta" to take photos, record videos, listen to music, make phone calls, and even perform real-time translation and visual-based Q&A.

With a simple "Hey Meta, what is this?", the glasses can recognize an object and provide information about it. This instant, contextual AI service is gradually replacing the traditional routine in which users take out their phones, unlock them, and open a specific app (such as translation or object-recognition software).

Similar trends are emerging among other companies actively deploying AI glasses, especially Chinese firms with keen business instincts, and the trend has given established AR glasses manufacturers a boost. Building on AI camera glasses, Rokid has launched Rokid Glasses, equipped with a monochrome Micro-LED display and diffractive waveguide, while INMO has introduced the INMO Air 3, an iterative AR glasses product with a large AI model. RayNeo has created the RayNeo X3 Pro, which deeply integrates multimodal large AI models with a full-color Micro-LED display and etched waveguide.

With AR display, these products keep expanding what all-in-one AI glasses can do. They typically integrate functions such as navigation, translation, information notifications, and smart assistants, and provide more efficient services through multimodal interaction methods like voice, touch, and gestures. In navigation scenarios, the glasses can project the route directly into the user's field of view; during cross-language conversations, they can display real-time translated subtitles. Both improve efficiency while reducing users' "head-down" dependence on mobile navigation or translation apps.

Tool-type Apps Bear the Brunt, AI Agents Aim to "Take Over" Everything

The first to feel the chill are undoubtedly tool-type apps with relatively simple functions and fixed interfaces. Many of these applications require little visual interaction from users in the first place.

They roughly fall into these categories:

Information Acquisition and Search: The traditional search engine experience involves users entering keywords, getting back a list of links, and filtering them manually. Large AI models have already changed this model through generative answers, and AI glasses go a step further. Using visual and voice input, combined with contextual information such as location and time, AI Agents can understand user needs more accurately and directly provide answers or perform operations. For example, a user who sees an exotic flower can simply ask "What kind of flower is this?", and the glasses retrieve the relevant information and present it through voice or AR, with no need to open a browser, take a picture, and run a separate search.

Translation and Communication: The real-time translation function of AI glasses like Ray-Ban Meta has already demonstrated its potential. More natural, lower-latency multilingual translation has been achieved, dealing a significant blow to mobile translation apps. According to Statista, the global digital translation market size continues to expand and is expected to reach $9.87 billion by 2025. However, a significant portion of this growth may be captured by more convenient and efficient new terminals like AI glasses in the future.

Navigation and Travel: Although mobile navigation apps are already very powerful, frequent phone checking is inconvenient and unsafe in scenarios like walking or cycling. AI glasses can provide navigation information through voice or directly overlay it in front of the user's field of view, offering a more intuitive AR navigation experience.

Life Assistants and Efficiency Tools: Lightweight tool apps such as schedule reminders, quick notes, unit conversions, weather inquiries, etc., can easily have their functions integrated into the resident smart assistant of AI glasses. Users can complete settings or inquiries with a single voice command, a far shorter operation path than opening a specific app.

Multimodal AI enables glasses to understand users' speech and recognize scenes in front of them. AI Agents act as "super butlers," connecting and scheduling various service resources. In this mode, users no longer need to concern themselves with which app provides weather information or navigation services; the AI Agent automatically handles everything based on commands and scenarios.
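The "super butler" idea above can be illustrated with a minimal sketch, assuming a simple keyword-based router. The `Context` fields, handler names, and keyword lists are all hypothetical; a real agent would rely on a large model to interpret intent rather than on keyword matching.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Context:
    """Hypothetical contextual signals the glasses could supply."""
    location: str
    local_time: str


# Hypothetical capability handlers; each stands in for a real service.
def weather(query: str, ctx: Context) -> str:
    return f"weather lookup for {ctx.location}"


def navigation(query: str, ctx: Context) -> str:
    return f"route starting at {ctx.location}"


def translation(query: str, ctx: Context) -> str:
    return f"translation of: {query}"


# Keyword lists are illustrative only.
ROUTES: List[Tuple[Tuple[str, ...], Callable[[str, Context], str]]] = [
    (("weather", "rain", "temperature"), weather),
    (("navigate", "directions", "route"), navigation),
    (("translate",), translation),
]


def handle(query: str, ctx: Context) -> str:
    """Pick the first capability whose keywords appear in the spoken query."""
    lowered = query.lower()
    for keywords, handler in ROUTES:
        if any(word in lowered for word in keywords):
            return handler(query, ctx)
    return "no matching capability"


ctx = Context(location="Hangzhou", local_time="08:30")
print(handle("Will it rain today?", ctx))
print(handle("Give me directions to the station", ctx))
```

The point of the sketch is that the user never names an app: the command and the context alone decide which service runs.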

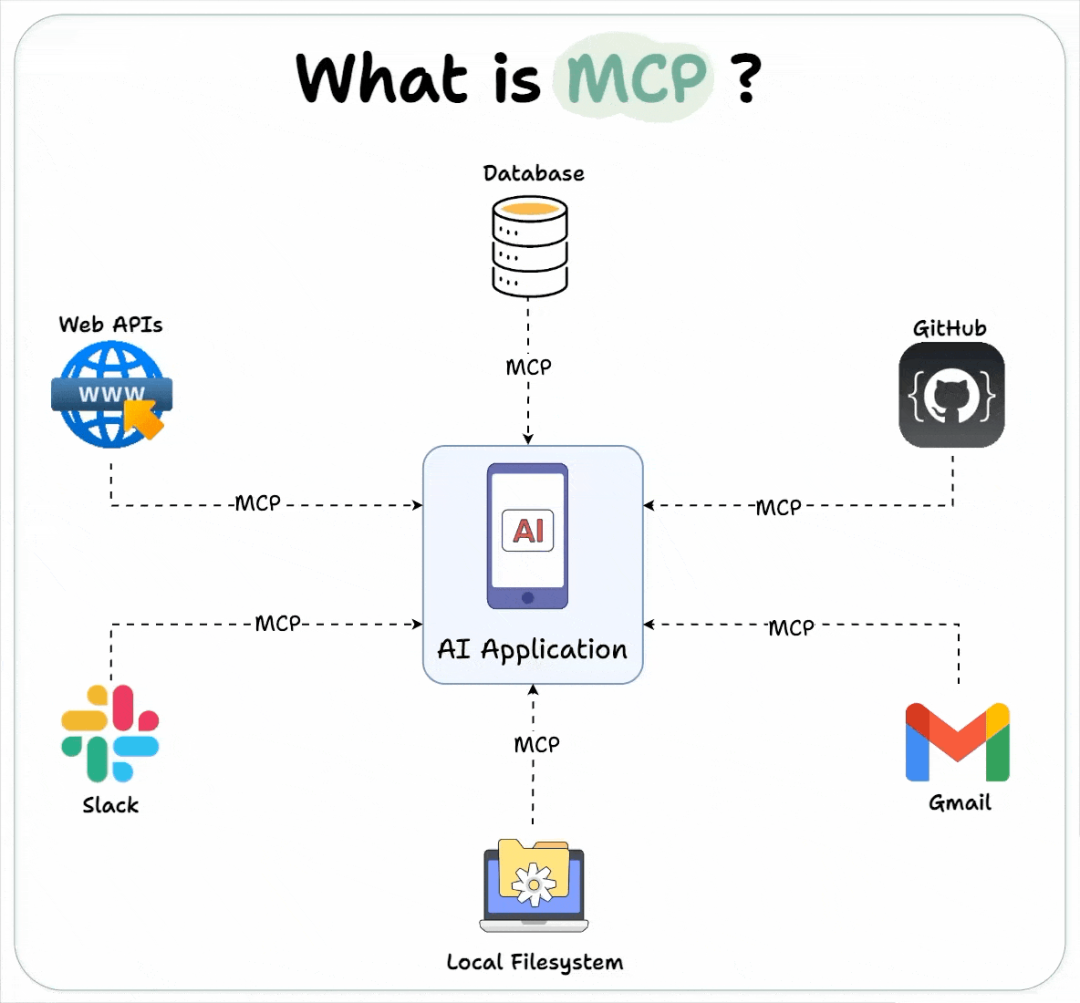

For AI Agents to invoke complex services flexibly, one key technology is involved: MCP (Model Context Protocol).

MCP is a communication protocol proposed by Anthropic and open-sourced in November 2024. It addresses the need for seamless integration between large language models (LLMs) and external data sources and tools. It can be understood as a "universal socket" through which AI Agents connect to various capabilities in the real world.

In just half a year, MCP has developed rapidly. According to May data from ModelScope, a Chinese AI open-source community, the platform has integrated over 3,000 MCP Servers, covering popular, high-frequency application areas such as search tools, location services, entertainment and multimedia, finance, and various developer tools.
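To make the "universal socket" image more concrete: MCP messages are JSON-RPC 2.0 messages, and a tool invocation uses the `tools/call` method with a tool name and arguments. The sketch below only builds such a request; the tool name `get_weather` and its `city` argument are hypothetical, and transport details are left out.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# "get_weather" and its "city" argument are made up for illustration.
request = build_tool_call(1, "get_weather", {"city": "Hangzhou"})
print(request)
```

Because every server accepts the same message shape, an agent can call a weather tool and a map tool in the same way, which is what makes the socket "universal".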

This means AI Agents are no longer just "theorists" with brains but also have "hands and feet" they can call upon. Because the training data of large AI models has a cutoff, the models alone cannot provide real-time, accurate information. Through MCP servers, AI Agents can access the latest data, such as news, stock prices, weather, train and air tickets, and maps. In the future, when users need to book restaurants or purchase movie tickets, AI Agents can also connect to the corresponding service providers through MCP.

All these operations are completed silently by the AI Agent in the background, discovering, invoking, and integrating services before presenting the results directly to the user.
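The discover, invoke, and integrate steps described above can be sketched roughly as follows. This is an in-memory illustration only: the server names, tool names, and canned replies are hypothetical stand-ins, and no real MCP server is contacted.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for MCP servers: server name -> {tool name -> callable}.
SERVERS: Dict[str, Dict[str, Callable[[str], str]]] = {
    "weather-server": {"get_weather": lambda city: f"{city}: sunny, 26 C"},
    "maps-server": {"get_route": lambda city: f"{city}: 12 min walk to the station"},
}


def discover() -> Dict[str, Callable[[str], str]]:
    """Step 1: collect the tools offered by every known server into one catalog."""
    catalog: Dict[str, Callable[[str], str]] = {}
    for tools in SERVERS.values():
        catalog.update(tools)
    return catalog


def run_task(city: str, wanted: List[str]) -> str:
    """Steps 2 and 3: invoke each wanted tool, then merge the results into one answer."""
    catalog = discover()
    results = [catalog[name](city) for name in wanted if name in catalog]
    return " | ".join(results)


summary = run_task("Hangzhou", ["get_weather", "get_route"])
print(summary)
```

The user only sees the final `summary`; which servers were consulted stays in the background.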

This MCP-based service orchestration capability enables AI glasses to break through the functional silos of individual apps, integrating capabilities that used to be scattered across different applications into coherent, intelligent "one-stop" services. Traditional service-type apps will also undergo a transformation: they can expose their core capabilities through MCP interfaces and embrace the change.

Well-known apps and services including Amap (Gaode Maps), Tencent Maps, Baidu Maps, Alipay, and China UnionPay have already implemented MCP Servers, with AI Agents becoming new traffic entry points and user touchpoints. The prosperity of the MCP ecosystem will undoubtedly accelerate the process of AI glasses "killing" traditional app forms, shifting the focus of interaction from "app-centric" to "task-and-user-intent-centric".
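What it might look like for an app to expose a core capability through an MCP-style interface can be sketched as below. This is a simplified, assumption-laden illustration in plain Python rather than an official SDK: the `km_to_miles` tool is hypothetical, and only the `tools/list` and `tools/call` methods are handled, following the JSON-RPC 2.0 message shape that MCP uses.

```python
from typing import Any, Dict

# A hypothetical "core capability" of a small unit-conversion app.
TOOLS: Dict[str, Dict[str, Any]] = {
    "km_to_miles": {
        "description": "Convert kilometers to miles (rounded to 2 decimals).",
        "inputSchema": {
            "type": "object",
            "properties": {"km": {"type": "number"}},
            "required": ["km"],
        },
        "handler": lambda args: round(args["km"] * 0.621371, 2),
    }
}


def _error(request_id: Any, code: int, message: str) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle_request(request: Dict[str, Any]) -> Dict[str, Any]:
    """Answer a single JSON-RPC request for tool listing or tool invocation."""
    request_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        tools = [
            {"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
            for n, t in TOOLS.items()
        ]
        result: Dict[str, Any] = {"tools": tools}
    elif method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, "Unknown tool")
        value = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        return _error(request_id, -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


call = {"jsonrpc": "2.0", "id": 7, "method": "tools/call",
        "params": {"name": "km_to_miles", "arguments": {"km": 10}}}
print(handle_request(call))
```

In this shape the app has no front-end at all: an agent lists the available tools, picks one, and receives plain text it can speak or overlay in AR.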

Visual Impact and Complex Interaction: The "Safe Haven" for Games and Other Apps

Despite the aggressive momentum of AI glasses and the growing MCP ecosystem, not all types of apps will "surrender" in the short term.

This is especially true of games that demand high visual quality, immersion, and complex controls, and of productivity tools in specialized professional fields (such as complex video editing, 3D modeling, and programming software). Their development on AI glasses still faces many practical bottlenecks.

Currently, the entire AI glasses industry still faces three challenges: display, performance, and wearing comfort. Although the Micro-LED microdisplay + waveguide solution has seen some breakthroughs in recent years, it has not yet achieved a display effect comparable to smartphones and computers. Pixels per degree (PPD), rainbow artifacts, and light transmittance all need further improvement.

In terms of performance, low-power SoCs often cannot provide strong performance, while more powerful chips draw far more power than the 200-300 mAh batteries of AI glasses can sustain. Larger batteries conflict with the lightweight, ergonomic design that glasses require, so the technical bottlenecks constrain one another.

Given these performance and battery constraints, current human-computer interaction on AI glasses relies mainly on voice and touch. More natural and precise "hand-eye interaction" is still a long way from being implemented. Even once it arrives, in scenarios such as office work and gaming the overall experience will still struggle to match smartphone touchscreens, physical controllers, or keyboards and mice.

Therefore, for a considerable period to come, games that pursue top-tier visuals and complex interaction, as well as professional applications with deep functionality, will still rely primarily on smartphones, tablets, PCs, and VR headsets. AI glasses may serve as auxiliary displays for these experiences or provide certain lightweight companion functions, but they are unlikely to replace those devices entirely.
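The tension between chip power and battery size can be checked with rough arithmetic: energy in watt-hours is capacity (Ah) times voltage, and runtime is energy divided by average power draw. In the sketch below, the 3.85 V nominal cell voltage and both power figures are illustrative assumptions, not measurements of any product; only the 200-300 mAh range comes from the text.

```python
def runtime_hours(capacity_mah: float, nominal_voltage: float, avg_power_w: float) -> float:
    """Estimate runtime as battery energy (Wh) divided by average power draw (W)."""
    if avg_power_w <= 0:
        raise ValueError("avg_power_w must be positive")
    energy_wh = capacity_mah / 1000 * nominal_voltage
    return energy_wh / avg_power_w


# Assumed values for illustration: a 250 mAh cell at 3.85 V nominal,
# compared under a low-power draw and a heavier compute-and-display draw.
for label, power_w in (("low-power draw", 0.3), ("heavy draw", 1.5)):
    hours = runtime_hours(250, 3.85, power_w)
    print(f"{label} ({power_w} W): about {hours:.1f} h")
```

Under these assumptions the cell holds just under 1 Wh, so a sustained draw of even a watt or two would empty it in well under an hour, which is why heavier chips and small glasses batteries pull against each other.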

App "Morphological Reshaping" Under the AI Glasses Frenzy

When VR Tuo Luo speaks of "killing app forms," the phrase is better understood this way: AI glasses are "killing" the traditional app interaction mode in which users must actively search for, download, install, learn, and frequently switch between apps, especially for tasks that can be completed through simple commands and contextual awareness.

The app ecosystem will not disappear entirely; it is more likely to go through "morphological reshaping" and settle into "ecological coexistence". Some tool-type app functions will be "absorbed": basic tool functions will be integrated into operating-system-level AI Agents or into the system functions of AI glasses, so users no longer need to download standalone apps for single functions.

"MCPization" of apps will be a major trend, with more apps likely to encapsulate their core capabilities as MCP services for AI Agents to invoke. At the same time, the focus of app developers may shift from polishing standalone front-end interfaces to providing efficient and secure back-end services.

The "glasses end" becomes a new entry point and interface. For some applications, AI glasses may offer a more convenient way in and a more convenient way to interact. For example, map apps can provide augmented-reality navigation on the glasses, and social apps can provide more immediate notifications and AR-style interactions.

As a potential next-generation computing platform, AI glasses have a structural impact on the current form of apps. They herald a more natural, proactive, and contextualized interaction paradigm. Under this paradigm, simple tool-type apps will bear the brunt: their functions will be modularized and turned into services until powerful AI Agents eventually make them "invisible". That is the general trend. For applications that emphasize immersive experiences and complex interactions, such as games and heavy content, the hardware bottlenecks of AI glasses mean they still have a longer window for independent development.

The format of apps is not likely to fade away easily; instead, it is destined to metamorphose into a novel form amidst the wave of an era ushered in by large AI models and AI glasses. Currently, this "metamorphosis" is merely at its inception.