AI Large Model Adoption in Enterprise Scenarios in 2025

06/23 2025

Enterprise AI deployment has moved beyond experimental projects to become a strategic imperative. Budgets have normalized, model selection has diversified, procurement processes have become standardized, and AI applications are being integrated systematically. Despite the fragmentation of industry and enterprise needs, this is the direction enterprises are taking. Key vendors are emerging, and enterprises increasingly opt for off-the-shelf applications to speed up implementation.

The market landscape increasingly mirrors traditional software, but the pace and complexity of change are distinctly different: this is the unique cadence of AI.

Source: a16z

Translation: Industry Insider

Where do AI large models stand in enterprise scenarios in 2025?

Over the past year, AI's role in enterprises has undergone a fundamental transformation. It is no longer confined to innovation labs or a mere "new toy" for technology departments; it has genuinely permeated core business systems, becoming an indispensable part of IT and operational budgets.

This evolution has been quiet yet rapid: AI models have diversified, procurement processes have become rigorous, and enterprises are no longer reinventing the wheel. Instead, they select, deploy, and evaluate AI services methodically, much as they would traditional software. Technology leaders have matured: they understand that different models suit different tasks, that use-case fragmentation is the norm, and that high-quality AI-native applications are rapidly overtaking traditional software vendors.

Recently, a16z released a research report titled "AI Technology in Enterprise Scenarios," which offers a comprehensive review of how enterprises deploy, procure, integrate, and plan for AI in 2025, based on in-depth interviews with over 20 enterprise buyers and a survey of 100 CIOs.

This report presents a new perspective: AI is no longer a question of "whether it's worth trying" but a realistic challenge of "how to scale implementation."

How exactly is AI being implemented in enterprise scenarios? How should it be implemented, and how can it be implemented better? This report is both a survey and a mirror, reflecting how enterprises around the world are putting AI to work.

Let's delve into this report together.

Below is the original text of the report (with readability adjustments):

A year ago, we summarized 16 changes enterprises faced in building and procuring generative AI (Gen AI). The situation has since changed significantly, so we revisited over 20 enterprise buyers and surveyed 100 CIOs across 15 industries to help entrepreneurs understand how enterprise customers use, procure, and plan for AI in 2025 and beyond.

The AI world is always evolving, but over the past year the market landscape has shifted even more than we expected:

1. Enterprise AI budgets continue to exceed expectations, graduating from pilot projects into core IT and business budgets.

2. Enterprises have matured in using "multi-model combinations," focusing on balancing performance and cost. OpenAI, Google, and Anthropic dominate the closed-source market, while Meta and Mistral are popular in the open-source camp.

3. AI model procurement increasingly resembles traditional software procurement: evaluations are more rigorous, hosting decisions are more deliberate, and standardized benchmarks carry more weight. Meanwhile, complex AI workflows are raising the cost of switching models.

4. The AI application ecosystem is gradually taking shape: standardized applications are replacing custom development, and AI-native third-party applications are experiencing explosive growth.

This report focuses on the latest enterprise trends across four dimensions: budget allocation, model selection, procurement processes, and application usage, helping entrepreneurs understand enterprise customers' key focus areas in detail.

I. Budget: AI expenditures exceed expectations and continue to grow

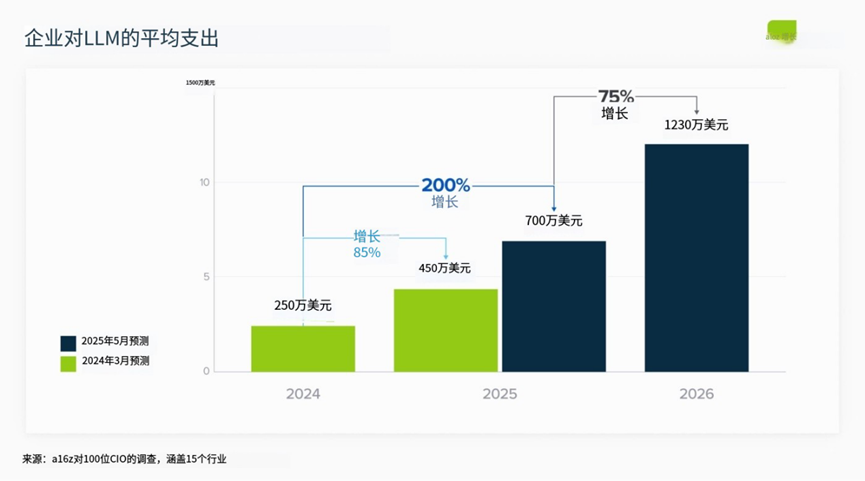

1. AI budget growth far exceeds expectations and shows no signs of slowing down

Enterprise investment in large language models (LLMs) has significantly outpaced last year's already high budget expectations and is expected to keep growing, by an average of about 75%. As one CIO put it, "What I spent over all of 2023, I now spend in a week."

Two factors drive the increase. First, enterprises keep discovering new internal use cases, which is driving broad adoption among employees. Second, more enterprises are deploying customer-facing AI applications; at tech-forward companies in particular, investment in these applications is growing exponentially. A large technology company stated, "Last year, we focused on internal efficiency improvements, but this year, we will shift to customer-facing Gen AI, with significantly increased investments."

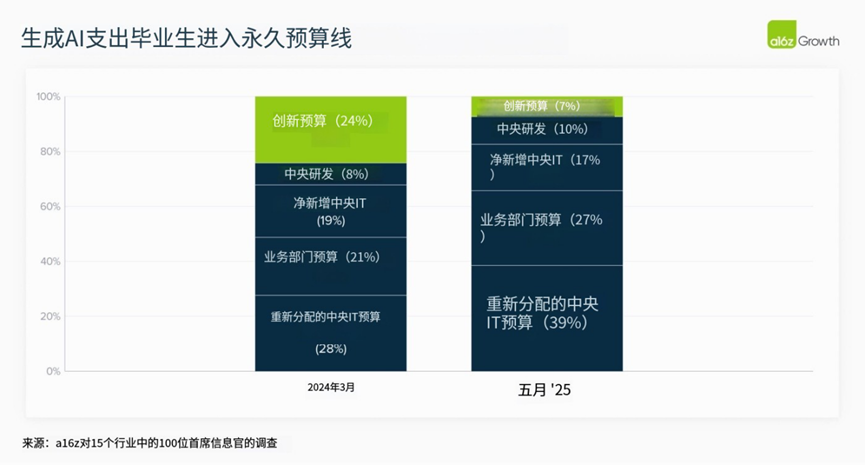

2. AI is officially included in core budgets, ending the "experimental period"

A year ago, about 25% of enterprises' LLM spending still came from dedicated innovation budgets; today, that share has dropped to 7%. Enterprises now generally fund AI models and applications from regular IT and business-unit budgets, a sign that AI is no longer an exploratory project but operational "infrastructure."

A CTO pointed out, "Our products are gradually integrating AI features, and related expenses are naturally rising." This suggests that the shift of AI spending into mainstream budgets will accelerate further.

II. Models: Multi-model strategies become mainstream, and three major vendors take an early lead

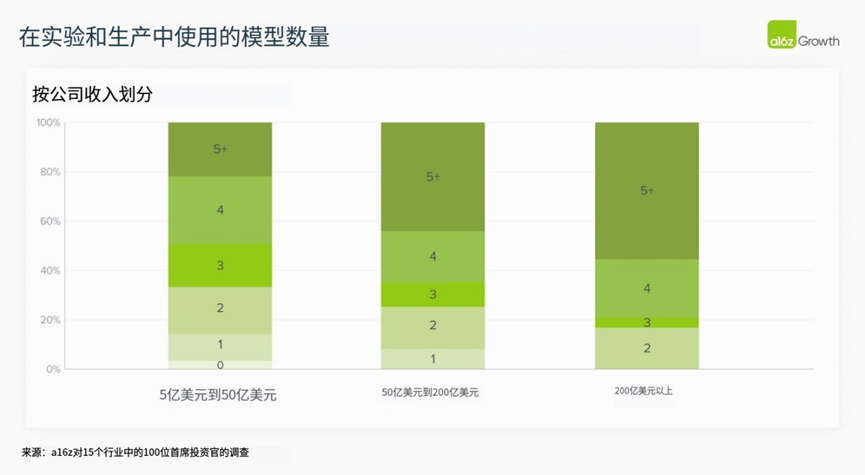

3. Multi-model deployment has become the norm, driven by "differentiation" rather than "homogeneity"

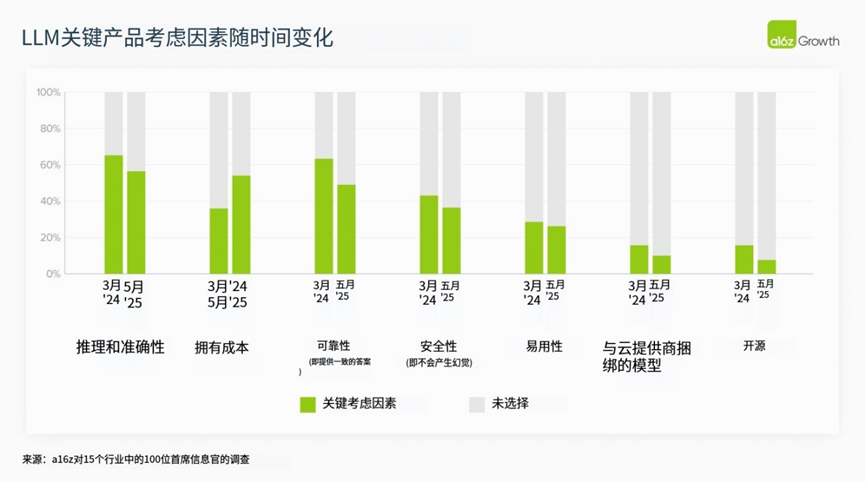

Many high-performing LLMs are now on the market, and enterprises are running several of them in production. Avoiding vendor lock-in matters, but the more fundamental driver is that performance differences between models across use cases are becoming increasingly significant.

In this year's survey, 37% of enterprises use five or more models, a significant increase from 29% last year.

Although models score similarly on general benchmarks, enterprise users have found that their real-world differences cannot be ignored. For example, Anthropic's Claude excels at fine-grained code completion, while Google's Gemini is better suited to system design and architecture. In text-based tasks, users report that Anthropic's models are stronger in language fluency and content generation, while OpenAI's models are better suited to complex question answering.

These differences are pushing enterprises toward multi-model "best practices" that optimize performance while reducing dependence on any single vendor. We expect this strategy to keep dominating how enterprises deploy models.

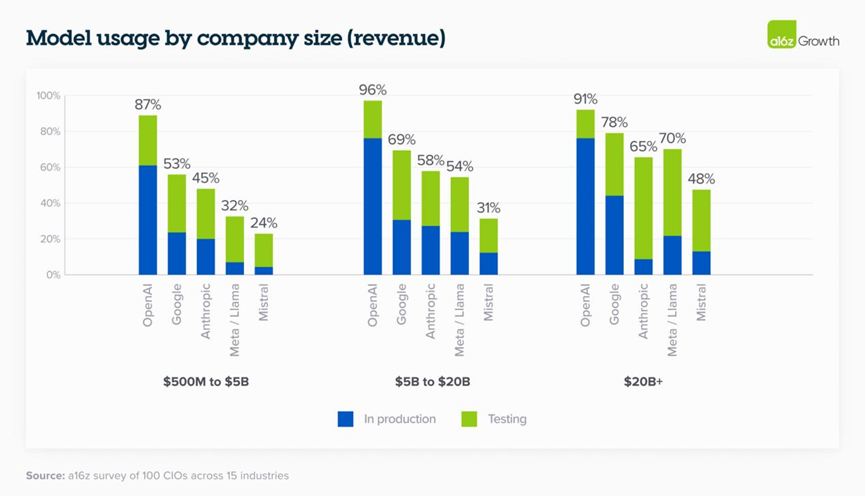

4. The model landscape remains fiercely competitive, but three major vendors have taken an early lead

Although enterprises continue to trial multiple models in experiments and production, three leaders have emerged: OpenAI maintains its market share lead, while Google and Anthropic have rapidly caught up over the past year.

Specifically:

(1) OpenAI: Its model portfolio is widely used, with GPT-4 the most commonly deployed model in production environments and the reasoning model o3 also attracting significant attention. 67% of OpenAI users have deployed non-frontier models in production, a much higher proportion than for Google (41%) or Anthropic (27%).

(2) Google: Stronger among large enterprises, benefiting from its GCP customer base and brand trust. Gemini 2.5 offers not only a top-tier context window but also strong cost-effectiveness: Gemini 2.5 Flash costs $0.26 per million tokens, far below GPT-4.1 mini's $0.70.

(3) Anthropic: Highly favored by tech-forward enterprises (such as software companies and startups). It performs outstandingly on coding tasks, and its models are the core engine behind the fastest-growing AI coding applications.
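The per-million-token prices quoted for Gemini 2.5 Flash and GPT-4.1 mini above translate directly into budget terms. The short Python sketch below treats each figure as a single blended rate (an assumption: providers typically price input and output tokens separately) and applies it to a hypothetical workload of 2 billion tokens per month. The dictionary keys are illustrative labels, not API model identifiers.

```python
# Per-million-token prices (USD) as quoted in the report. Treating each one
# as a single blended rate is an assumption; real price lists usually quote
# input and output tokens separately.
PRICE_PER_MILLION_TOKENS = {
    "gemini-2.5-flash": 0.26,
    "gpt-4.1-mini": 0.70,
}


def monthly_cost(model: str, tokens_per_month: int) -> float:
    """Estimated monthly spend in USD for a given token volume."""
    return PRICE_PER_MILLION_TOKENS[model] * tokens_per_month / 1_000_000


# Hypothetical workload: 2 billion tokens per month.
volume = 2_000_000_000
flash = monthly_cost("gemini-2.5-flash", volume)
mini = monthly_cost("gpt-4.1-mini", volume)

print(f"Gemini 2.5 Flash: ${flash:,.2f} per month")
print(f"GPT-4.1 mini:     ${mini:,.2f} per month")
# Cost is linear in tokens here, so the ratio does not depend on volume.
print(f"Flash costs {flash / mini:.0%} of GPT-4.1 mini")
```

At that hypothetical volume the sketch prints $520.00 for Gemini 2.5 Flash and $1,400.00 for GPT-4.1 mini, a ratio of about 37%. Because cost scales linearly with tokens under this simple model, the ratio holds at any volume, which is why a price gap of this size weighs heavily on high-volume, low-risk workloads.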

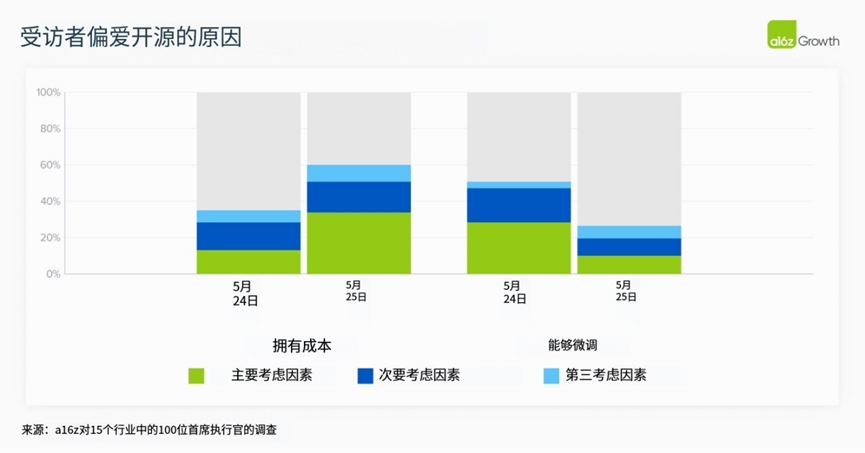

Additionally, open-source models such as Llama and Mistral are favored by large enterprises for data security, compliance, and customizability. The Grok models from newcomer xAI are also drawing wide attention, and the market remains unsettled.

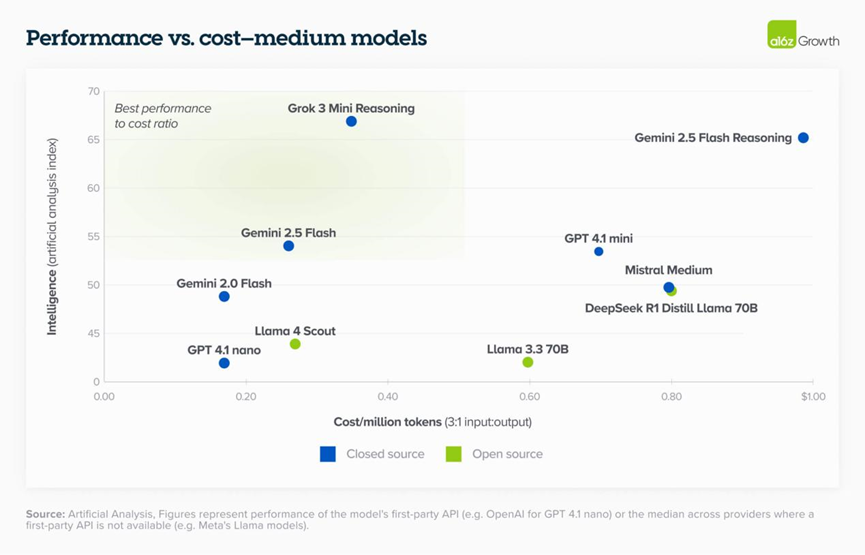

5. For small and medium-sized models, the cost-performance advantage of closed-source models is becoming increasingly apparent

Model costs are falling significantly every year, and as they do, the performance/cost ratio of closed-source models (especially small and medium-sized ones) is becoming increasingly attractive.

Currently, xAI's Grok 3 mini and Google's Gemini 2.5 Flash lead this segment. Some customers prefer closed-source models for their cost and the convenience of ecosystem integration.

As one customer candidly admitted, "The current pricing is already very attractive, and we are deeply embedded in the Google ecosystem, using everything from G Suite to databases. Their experience in enterprise services is valuable to us." Another customer summarized more bluntly, "Gemini is cheap."

This suggests that closed-source models are steadily winning low- to mid-cost scenarios.

6. As model capabilities enhance, the importance of fine-tuning is declining

With major gains in model intelligence and context-window length, enterprises have found that strong performance no longer depends on fine-tuning so much as on effective prompt engineering.

One enterprise observed, "We no longer need to extract training data to fine-tune the model; just putting it into a sufficiently long context window yields almost equally good results."

This shift has two important impacts:

(1) Reducing usage costs: prompt engineering costs much less than fine-tuning;

(2) Reducing vendor lock-in risks: Prompts can be easily migrated to other models, while fine-tuned models often face migration difficulties and high upfront investments.

However, fine-tuning is still indispensable in some highly specific use cases. For example, a streaming media company fine-tuned an open-source model to handle domain-specific language for query augmentation in video search.

Additionally, if new methods like reinforcement fine-tuning gain widespread application outside the lab, fine-tuning may also experience a new round of growth in the future.

Overall, most enterprises have lowered their ROI expectations for fine-tuning in routine scenarios and are more inclined to choose open-source models in cost-sensitive scenarios.

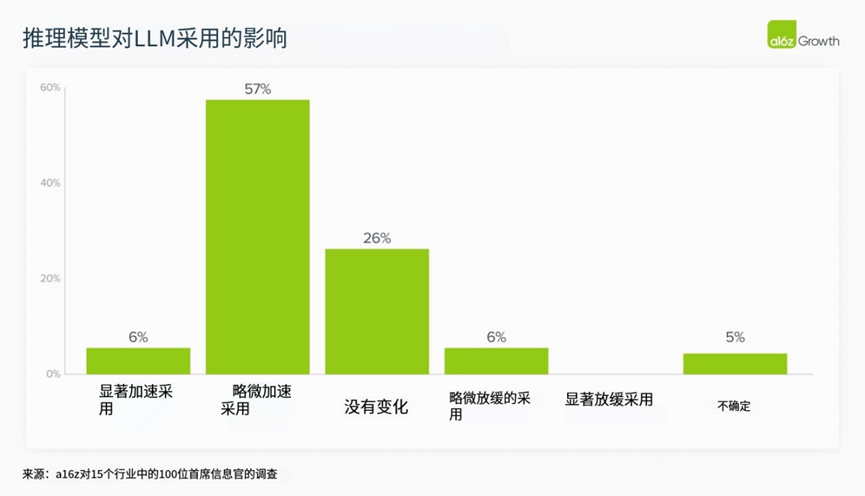

7. Enterprises are optimistic about the prospects of "reasoning models" and are actively preparing for large-scale deployment

Reasoning models enable large language models to perform more complex tasks accurately, significantly expanding LLMs' applicable scenarios. Although most enterprises are still testing and have not officially deployed them, they are generally optimistic about their potential.

One executive said, "Reasoning models can help us solve more novel and complex task scenarios, and I expect their usage to increase significantly soon. We are just in the early testing phase right now."

Among early adopters, OpenAI's reasoning models have gained the most traction. DeepSeek has also attracted considerable attention, but OpenAI holds a clear lead in production deployment: in this survey, 23% of enterprises have used OpenAI's o3 model in production, compared with only 3% for DeepSeek. DeepSeek's adoption is higher among startups, while its penetration of the enterprise market remains low.

As reasoning capabilities are gradually integrated into the main workflows of enterprise applications, their influence is expected to rapidly amplify.

III. Procurement: Enterprise AI procurement is maturing and borrowing heavily from traditional software procurement

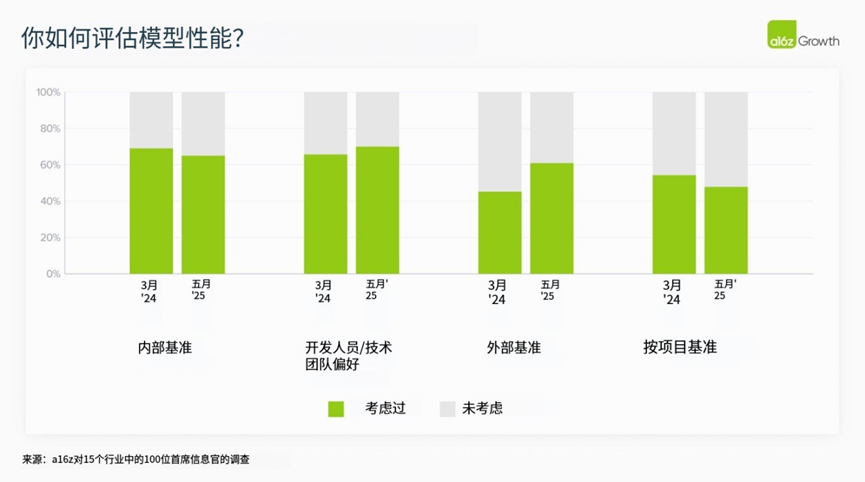

8. Model procurement processes are becoming more standardized, and cost sensitivity is increasing

Currently, enterprises generally adopt systematic evaluation frameworks when selecting models. In our interviews, security and cost have become core considerations in model procurement, alongside accuracy and reliability. As one enterprise leader said, "Nowadays, most models have sufficient basic capabilities, and price has become an even more important factor."

Additionally, enterprises are becoming increasingly sophisticated at matching models to use cases:

(1) For critical scenarios or tasks with high performance requirements, enterprises tend to choose top models with strong brand endorsements;

(2) For internal or low-risk tasks, enterprises make more "cost-oriented" decisions.
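The two-tier matching described above can be expressed as a simple routing rule. The following Python sketch is illustrative only: the model names, quality scores, and prices are hypothetical placeholders, and the rule itself (best model for critical tasks, cheapest model above a quality floor for low-risk tasks) is one plausible reading of the practice, not a method prescribed by the report.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    HIGH = "high"  # critical or performance-sensitive tasks
    LOW = "low"    # internal or low-risk tasks


@dataclass(frozen=True)
class ModelOption:
    name: str                 # hypothetical model identifier
    quality: float            # internal eval score from 0 to 1 (assumed)
    price_per_million: float  # USD per million tokens (assumed)


# Hypothetical catalog; names, scores, and prices are placeholders.
CATALOG = [
    ModelOption("frontier-model", quality=0.92, price_per_million=5.00),
    ModelOption("mid-tier-model", quality=0.85, price_per_million=0.70),
    ModelOption("small-model", quality=0.78, price_per_million=0.26),
]


def pick_model(risk: Risk, quality_floor: float = 0.75) -> ModelOption:
    """Route a task to a model according to its risk tier."""
    if risk is Risk.HIGH:
        # Critical work: take the best-scoring model regardless of price.
        return max(CATALOG, key=lambda m: m.quality)
    # Low-risk work: take the cheapest model that clears the quality floor.
    eligible = [m for m in CATALOG if m.quality >= quality_floor]
    if not eligible:
        raise ValueError(f"no model meets quality floor {quality_floor}")
    return min(eligible, key=lambda m: m.price_per_million)


print(pick_model(Risk.HIGH).name)       # frontier-model
print(pick_model(Risk.LOW).name)        # small-model
print(pick_model(Risk.LOW, 0.80).name)  # mid-tier-model
```

Raising the quality floor for low-risk tasks from 0.75 to 0.80 moves the choice from the small model to the mid-tier one, which is one way a team might tune the cost/quality trade-off per use case.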

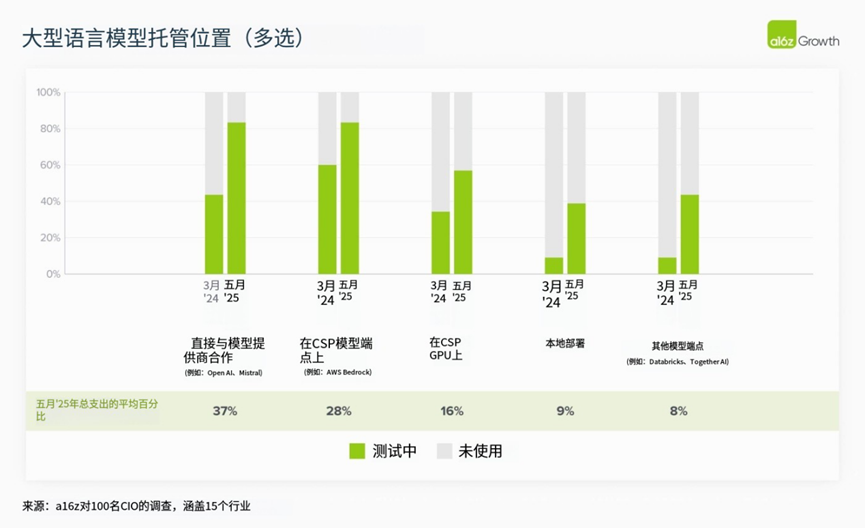

9. Enterprises' trust in model vendors has significantly increased, and hosting strategies have become more diverse

Over the past year, trust between enterprises and model vendors has grown markedly. While some enterprises still prefer to access models through established cloud relationships, such as using OpenAI models via Azure, a growing number work directly with model providers or use platforms like Databricks, particularly when their main cloud vendor does not host the model.

As one respondent noted, "We strive to adopt the latest and most powerful models promptly, and preview versions matter just as much." This move toward working directly with providers marks a significant departure from last year, when enterprises aimed to route model access through their main cloud vendor whenever possible.

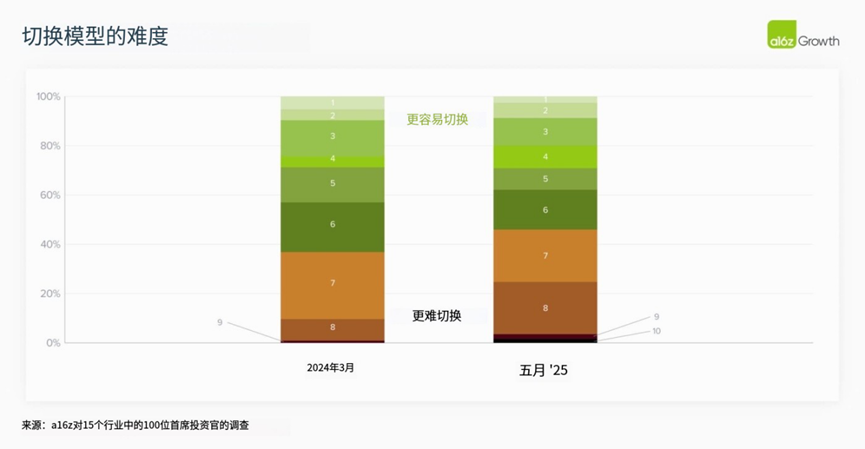

10. As tasks grow more complex, model switching costs rise rapidly

Last year, many companies deliberately minimized switching costs in AI application design, envisioning models that could be "freely added or removed." However, with the rise of "agent-based workflows," this approach began to falter. Agent-based workflows often involve multi-step collaboration, and model replacements within these workflows can have far-reaching consequences. After significant investments in constructing prompts, designing guardrails, and verifying quality, companies are even more reluctant to replace models.

A CIO summarized it succinctly: "All our prompts are tailored for OpenAI, each with its own specific structure and details. Switching to another model would mean not only reworking every prompt but also potentially jeopardizing the workflow's stability."

11. External evaluation benchmarks increasingly serve as the "first filter" for model procurement

With the proliferation of models, corporate buyers are increasingly relying on external evaluation systems, such as Gartner's Magic Quadrant and LM Arena, for initial screening references in model procurement. While internal benchmarks, gold-standard datasets, and developer feedback remain crucial, external indicators are becoming the "first hurdle." However, companies emphasize that external benchmarks are only one part of the evaluation, with actual trials and employee feedback being the true deciding factors.
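A minimal sketch of this two-stage screening in Python, assuming each model has a numeric external leaderboard rating (in the style of LM Arena) and an internal trial score; all model names and numbers are hypothetical. Stage one shortlists by the external rating, and stage two decides by the internal trial.

```python
def shortlist(external_scores: dict[str, float], top_k: int) -> list[str]:
    """Stage 1: keep the top_k models by external leaderboard rating."""
    ranked = sorted(external_scores, key=external_scores.get, reverse=True)
    return ranked[:top_k]


def select_model(
    external_scores: dict[str, float],
    internal_scores: dict[str, float],
    top_k: int = 3,
) -> str:
    """Stage 2: among the shortlist, pick the best internal trial score."""
    candidates = shortlist(external_scores, top_k)
    return max(candidates, key=lambda name: internal_scores[name])


# Hypothetical numbers: an Arena-style rating and an internal eval pass rate.
external = {"model-a": 1300, "model-b": 1280, "model-c": 1250, "model-d": 1100}
internal = {"model-a": 0.71, "model-b": 0.84, "model-c": 0.79, "model-d": 0.90}

print(shortlist(external, 3))            # ['model-a', 'model-b', 'model-c']
print(select_model(external, internal))  # model-b
```

In this made-up data, model-d scores best internally but never reaches the trial because it misses the external shortlist; widening the shortlist (`top_k=4`) would select it. This illustrates why companies treat external benchmarks as a first filter rather than the final word.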

IV. Applications: AI application deployment accelerates as enterprises shift from "building" to "buying"

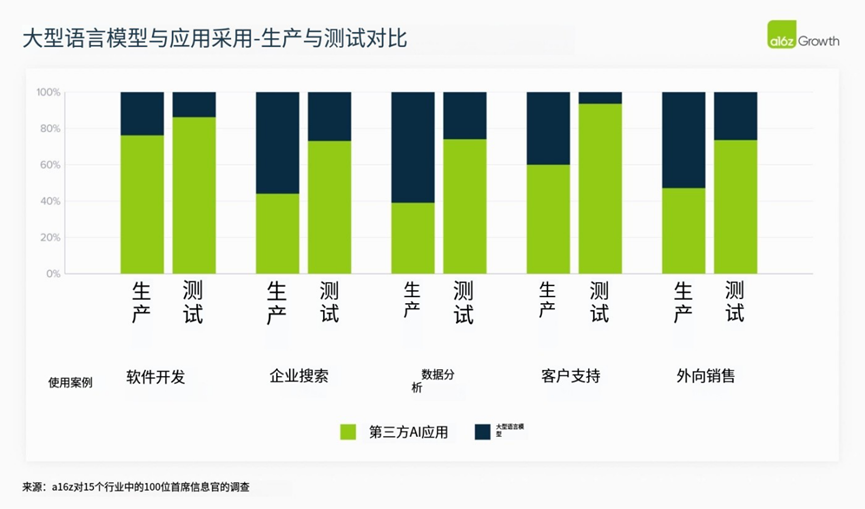

12. A notable shift from "building in-house" to "buying off-the-shelf"

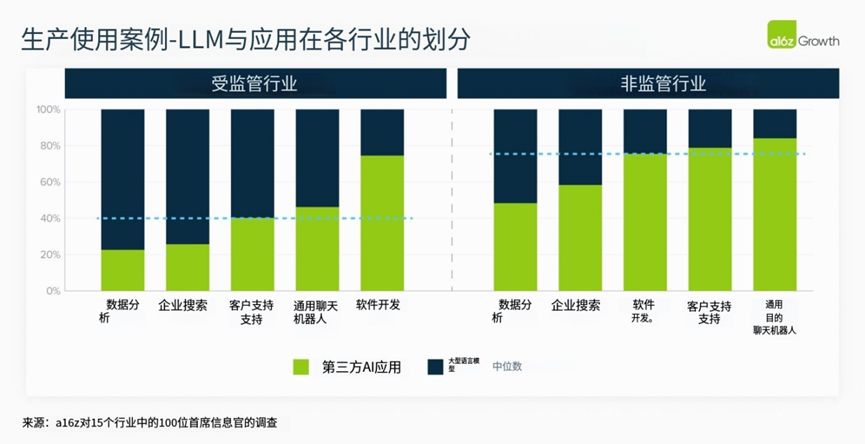

The AI application ecosystem has rapidly matured. Over the past year, the transition from "self-building" to "procuring professional third-party applications" has become evident.

Two primary factors drive this shift:

(1) Performance and cost shift constantly across models, demanding continuous evaluation and tuning that specialized vendors are generally better placed to provide than internal teams.

(2) AI evolves so quickly that in-house tools are hard to sustain over the long run, and they may not provide a competitive edge, which weakens the cost-effectiveness of building.

For instance, in customer support, over 90% of surveyed CIOs said they are testing third-party applications. One publicly listed fintech company tried to build its customer service system in-house but ultimately bought a mature solution. This trend has yet to fully take hold in high-risk industries like healthcare, where data privacy and compliance remain paramount.

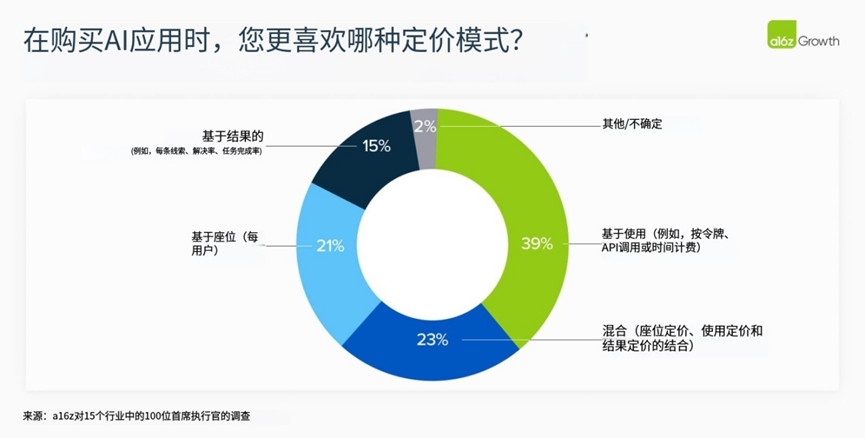

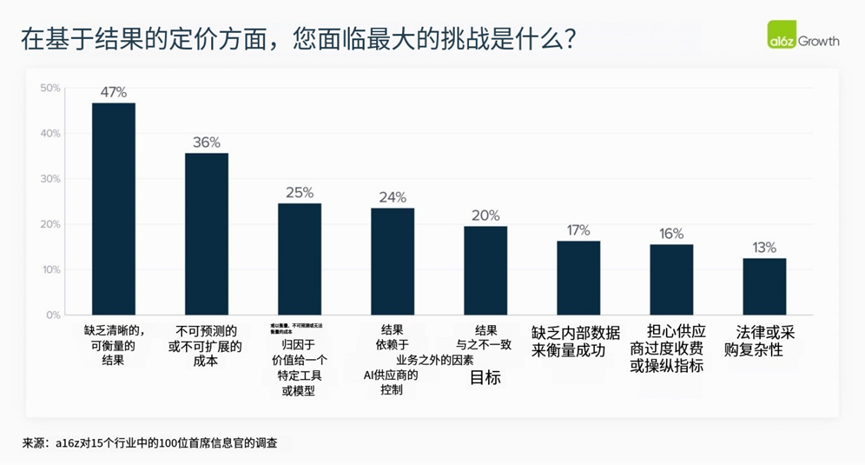

13. CIOs have yet to widely embrace "pay-per-result" pricing

Despite widespread discussion of "pay-per-result" pricing, companies have numerous practical concerns, including vague definitions of results, attribution challenges, and unpredictable costs. Most CIOs prefer usage-based pricing because it is intuitive, predictable, and controllable.

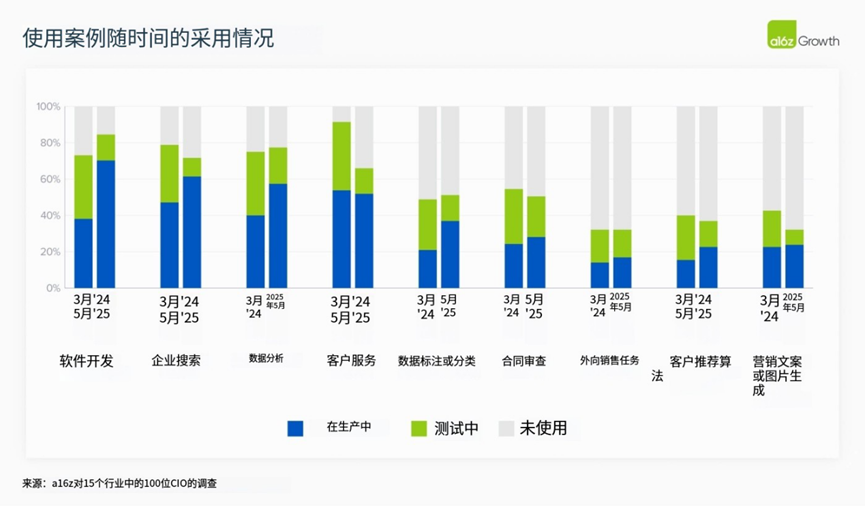

14. Software development has emerged as the leading "killer" use case for AI

While AI has been deployed across many areas, including internal search, data analysis, and customer service, its takeoff in software development stands out, thanks to three advantages:

(1) Substantial improvement in model capabilities;

(2) Exceptionally high quality of off-the-shelf tools;

(3) Directly observable ROI and broad applicability across industries.

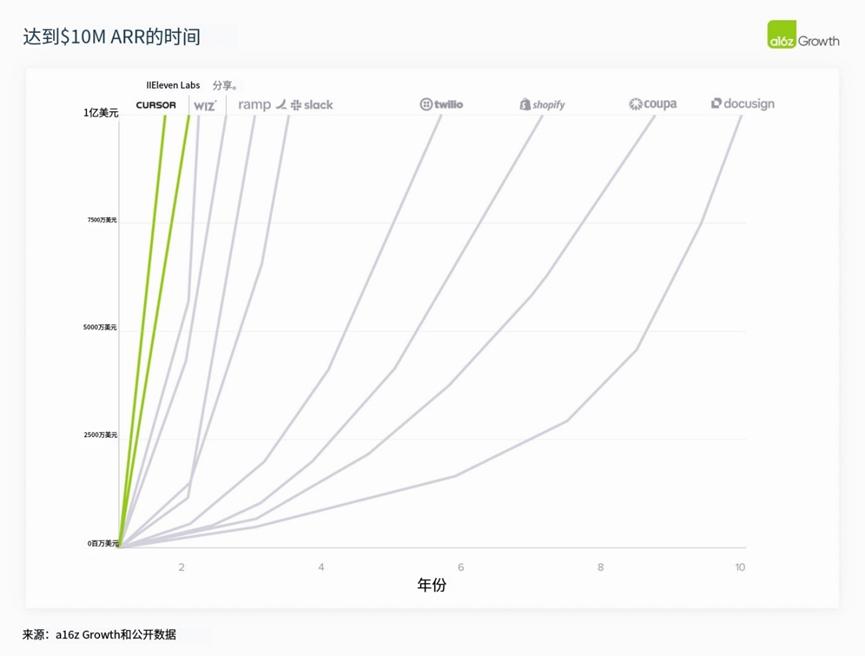

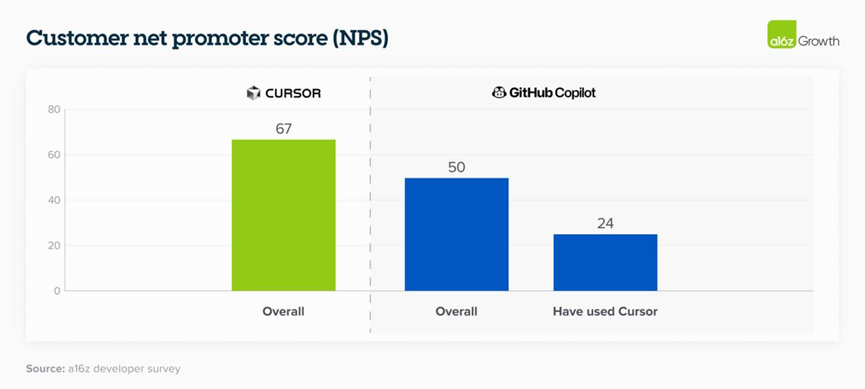

The CTO of a high-growth SaaS company said that nearly 90% of their code is now generated by Cursor and Claude Code, up from only 10-15% a year ago when they used GitHub Copilot. Such rapid adoption is still at the leading edge, but it may signal where enterprises more broadly are heading.

15. The prosumer market drives early application growth

Once again, strong consumer brands are influencing corporate procurement decisions.

ChatGPT serves as a prime example: Many CIOs stated that they purchased the enterprise version of ChatGPT because "employees are accustomed to it, like it, and trust it." This natural extension from the consumer market to the enterprise side accelerates the growth of new-generation AI applications.

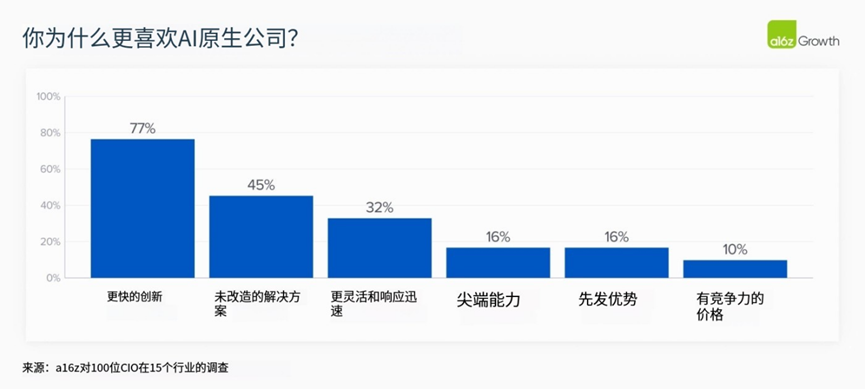

16. AI-native applications are outpacing traditional giants in speed and quality

Although traditional vendors enjoy channel advantages and brand trust, AI-native companies are beginning to surpass them in product quality and iteration speed. In coding tools, for instance, users are growing dissatisfied with first-generation tools such as GitHub Copilot and favoring tools built specifically for AI, like Cursor.

A CIO in the public safety industry noted, "There is a stark difference between the first and second generations of AI coding tools. The new generation of native products is smarter and more practical."

Looking Ahead: The "Experimental Era" of Enterprise AI is Over

Deploying AI in enterprises is no longer an experimental endeavor but a strategic initiative. Budgets have normalized, model choices have diversified, procurement processes have standardized, and AI applications are beginning to be systematically implemented. Despite the fragmentation of use cases, this is the direction enterprises are embracing. Key vendors are standing out, and enterprises are increasingly opting for ready-made applications to accelerate deployment.

The market landscape increasingly mirrors traditional software, but the pace and complexity of change are entirely distinct: this is the unique cadence of AI.