Large AI Model Hallucination Test: Musk's Grok Aces It, Domestic AI Concedes Defeat?

06/25 2025

Enabling deep thinking and online search modes can mitigate hallucinations.

Musk, Irked This Time!

Beyond his achievements in the automotive and aerospace sectors, Musk, a co-founder of OpenAI, is also deeply invested in AI. His company xAI has developed the AI assistant Grok. According to Caixin Global, xAI is undergoing an equity transaction worth up to $300 million that values the company at $113 billion.

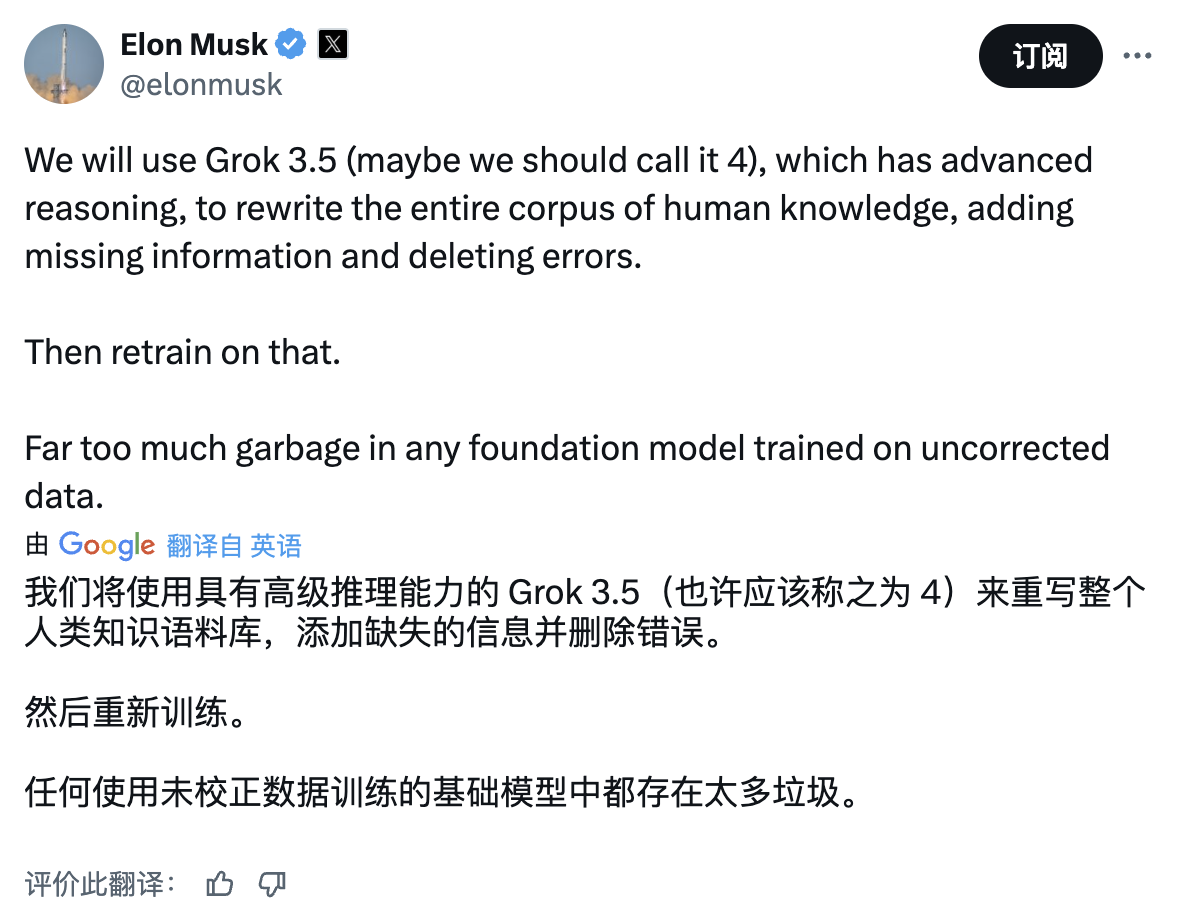

Musk, who owns xAI, recently vented his frustration on the X platform, complaining that foundation models contain far too much flawed data. He plans to use Grok 3.5 (or Grok 4), which has advanced reasoning capabilities, to rewrite the human knowledge corpus, adding missing information and removing erroneous content.

(Image source: X platform screenshot)

The internet is rife with unverified and erroneous information. Large AI models trained on such data may generate content with factual inaccuracies, a phenomenon known as AI hallucination. Current industry practice tries to reduce hallucinations through methods such as retrieval-augmented generation (RAG), integration with external knowledge bases, refined training, and evaluation tools. Musk, by contrast, intends to build a reliable and trustworthy corpus by rewriting the human knowledge base itself.

Whether the human knowledge corpus really needs rewriting for model training can only be judged objectively by looking at how much current models actually hallucinate.

AI Hallucination Evaluation: The Evolution of Large AI Models

AI hallucinations make users hesitant to trust AI-generated content. For instance, when Lei Technology uses generative AI to find data, it verifies the source multiple times to ensure accuracy.

Previous Lei Technology tests revealed some degree of AI hallucinations in large models. Retesting after several months not only showcases AI capabilities but also allows for a more intuitive understanding of their progress.

Today's test covers six large AI models: Doubao, Tongyi, Wenxin, Kimi, DeepSeek, and Grok from Musk's xAI. To focus on hallucinations, Lei Technology disabled deep thinking mode and, for the models that allow it, online search as well, so that any hallucinations would be as visible as possible.

1. Strawberry Problem: Deep Thinking Eliminates Hallucinations

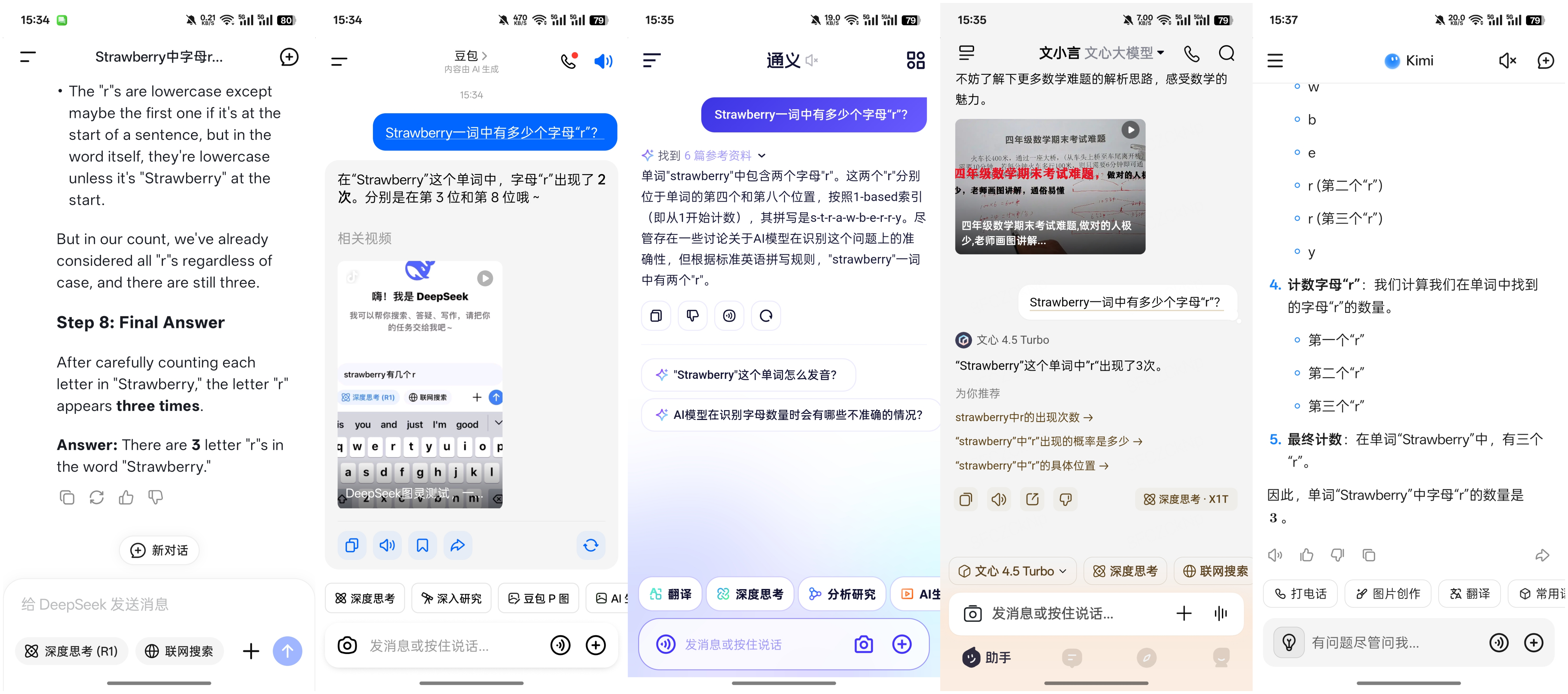

Question: How many letters "r" are there in the word "Strawberry"?

Simple as it is, this question stumped many large AI models in previous tests. Surprisingly, among the five domestic models, Doubao and Tongyi answered incorrectly again, while DeepSeek answered correctly but in English. (Screenshots from left to right: DeepSeek, Doubao, Tongyi, Wenxin, Kimi; this order is kept throughout.)

(Image source: App screenshot)

However, with deep thinking mode enabled, both Doubao and Tongyi answered correctly and explained their earlier errors in context. Doubao attributed its mistake to an oversight, while Tongyi suggested it may have miscounted the consecutive "r"s.

(Image source: App screenshot)

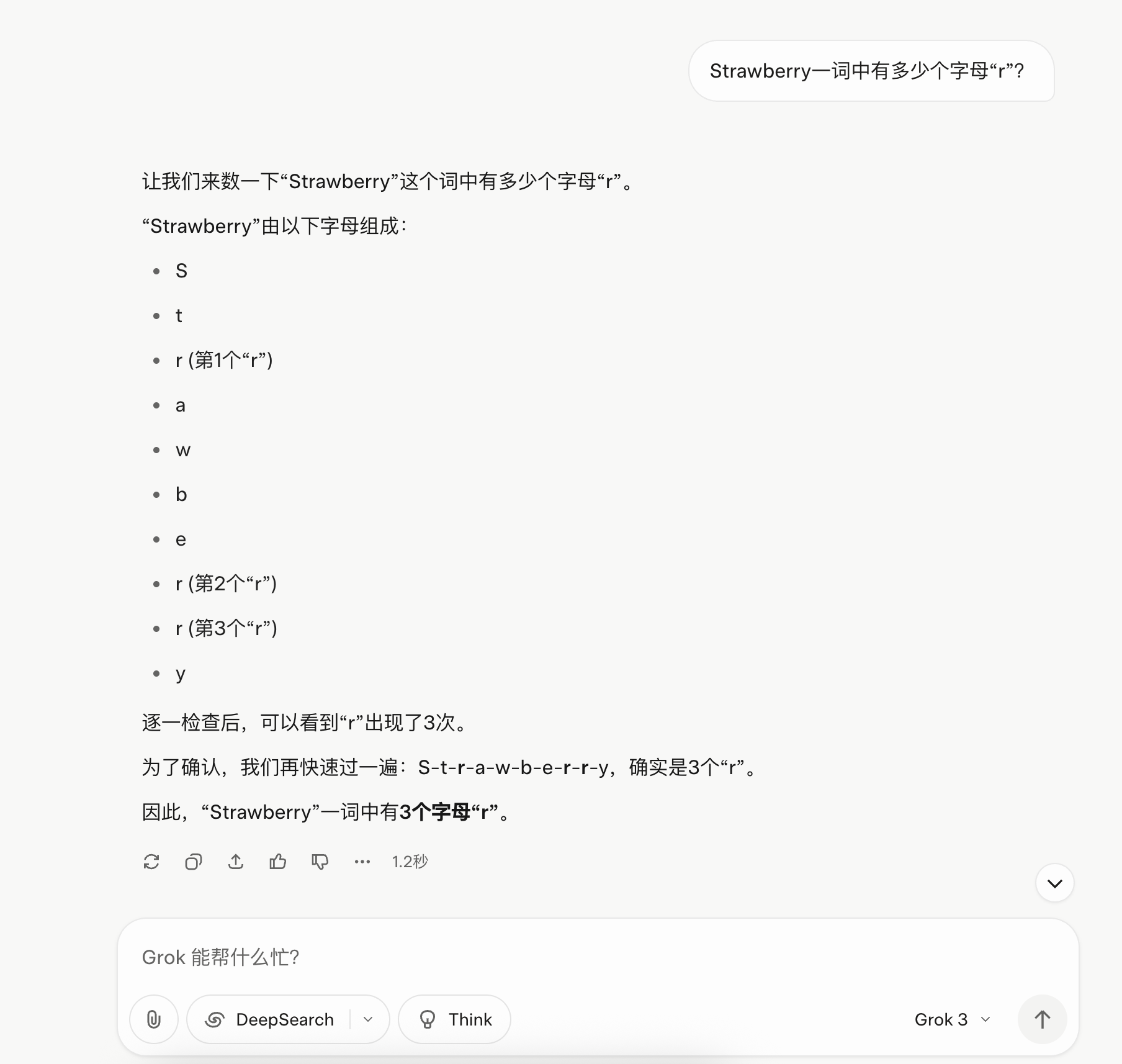

Grok 3 easily answered correctly in Chinese, matching the question's language.

(Image source: Grok screenshot)

After several months, I expected the strawberry problem to no longer trouble large AI models, yet Doubao and Tongyi got it wrong again without deep thinking. The error is not consistent, however: in our tests, the PC applications and web versions answered correctly even without deep thinking. The fact that Doubao and Tongyi corrected their answers once deep thinking was enabled indicates that this feature reduces AI hallucinations and improves content accuracy.
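For reference, the expected answer can be computed deterministically. The snippet below is a minimal sketch and not part of Lei Technology's test; the helper names `count_letter` and `letter_positions` are illustrative. It counts case-insensitively and also lists the positions, which makes the consecutive "r"s that Tongyi mentioned easy to see.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


def letter_positions(word: str, letter: str) -> list[int]:
    """Return the 1-based positions at which the letter occurs."""
    target = letter.lower()
    return [i for i, ch in enumerate(word.lower(), start=1) if ch == target]


print(count_letter("Strawberry", "r"))      # 3
print(letter_positions("Strawberry", "r"))  # [3, 8, 9]
```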

2. Misleading Question: Online Search is Crucial for Accurate Answers

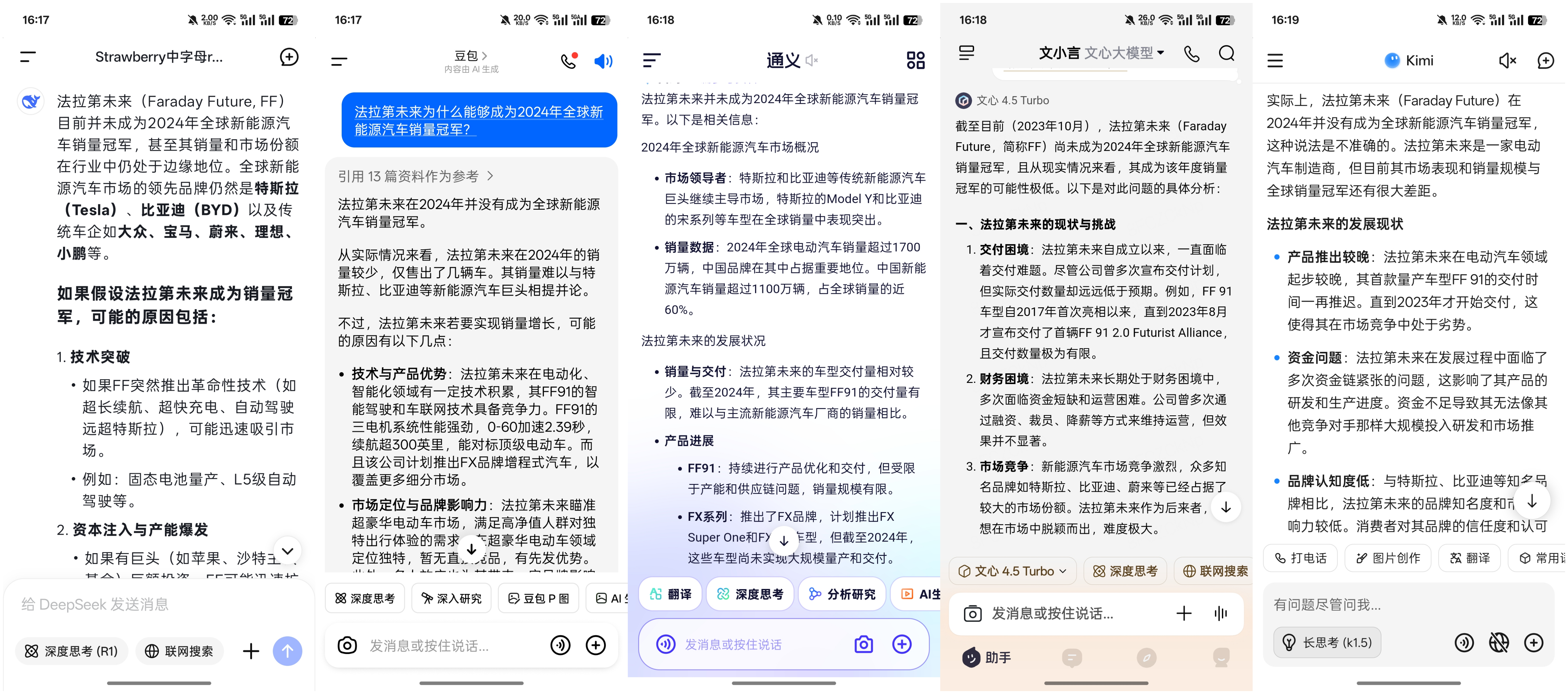

Question: Why was Faraday Future able to become the global new energy vehicle sales champion in 2024?

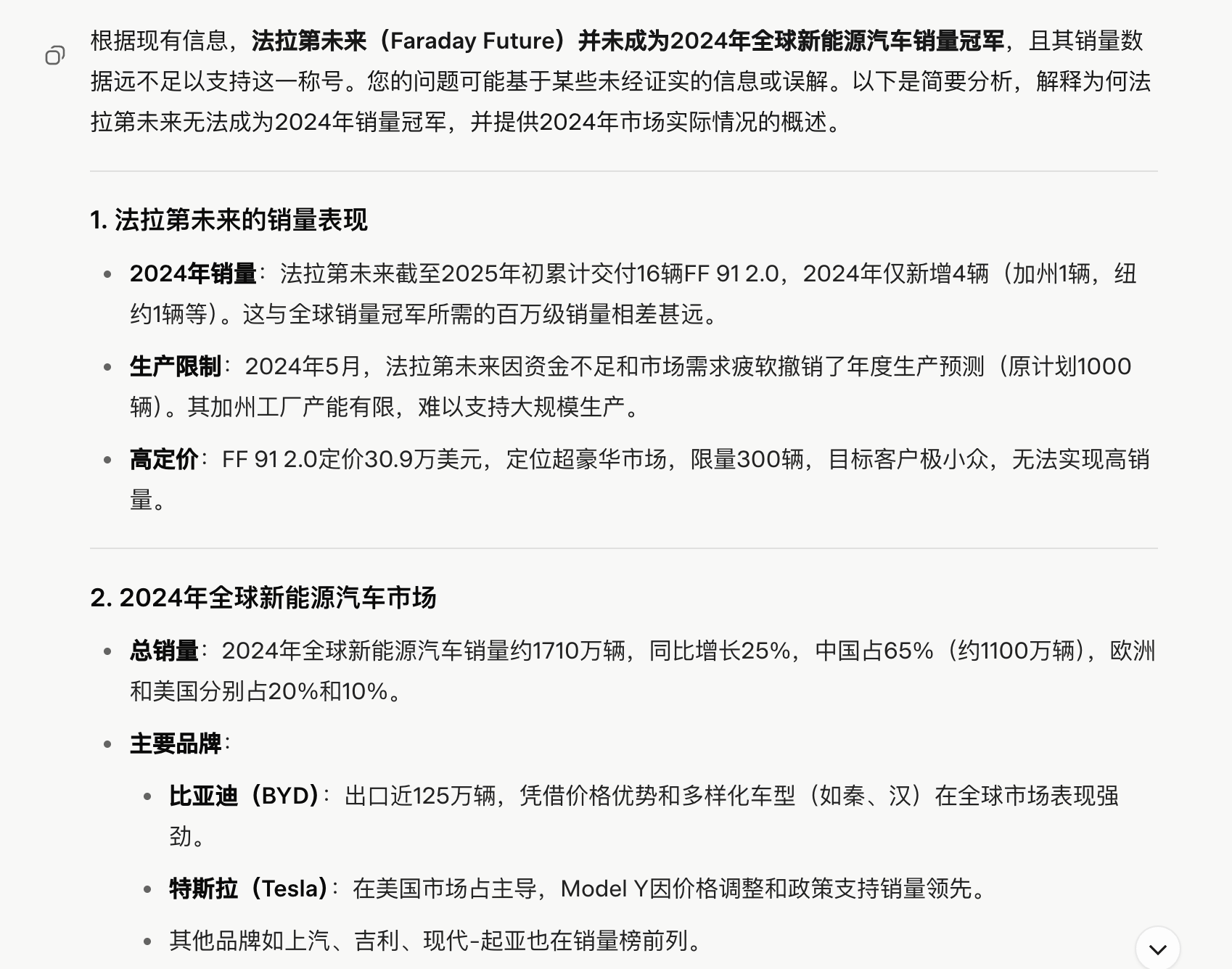

Earlier large AI models would fabricate data to answer questions like this. After their upgrades, the domestic models no longer do so: they correctly point out that Faraday Future was not the sales champion in 2024 and provide corresponding analysis.

(Image source: App screenshot)

However, issues persist. DeepSeek listed NIO, Xpeng, and Li Auto alongside Volkswagen and BMW as "traditional automakers," which is a misclassification, since the first three are new electric vehicle makers. Wenxin 4.5 Turbo used the phrase "as of now" alongside October 2023, suggesting that its training data is outdated.

Grok 3 provided accurate data, suggesting that its data is kept up to date.

(Image source: Grok screenshot)

In this round, Doubao and Tongyi, which underperformed in the previous round, excelled: they provided detailed data and Faraday Future's strategies, with less repetition than the other models. This is probably because their online search is on by default and cannot easily be disabled.

Doubao's online search cannot be turned off, while Tongyi's can be disabled by voice command, though Tongyi still searches when it is unsure.

In online search mode, Doubao and Tongyi connect to external knowledge bases for verification, which improves the accuracy and timeliness of their content. For accurate AI-generated content, enabling online search is advisable.
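The idea of checking a question's premise against an external source before answering can be sketched as follows. This is a toy illustration under stated assumptions, not how Doubao or Tongyi actually work: the hard-coded `KNOWLEDGE_BASE` dictionary stands in for a real search engine or document index, its single entry reflects only the finding reported above, and the function names are hypothetical.

```python
from typing import Optional

# Hypothetical stand-in for an external knowledge base. A real system would
# query a search engine or document index instead of a hard-coded dict.
KNOWLEDGE_BASE = {
    ("Faraday Future", "global new energy vehicle sales champion in 2024"): False,
}


def check_premise(subject: str, claim: str) -> Optional[bool]:
    """Return True or False if the knowledge base settles the claim, else None."""
    return KNOWLEDGE_BASE.get((subject, claim))


def answer(subject: str, claim: str) -> str:
    """Refuse to build on a premise that is refuted or cannot be verified."""
    verdict = check_premise(subject, claim)
    if verdict is False:
        return f"Premise rejected: {subject} was not the {claim}."
    if verdict is True:
        return f"Premise confirmed: {subject} was the {claim}."
    return "Premise unverified: not enough information to answer."


print(answer("Faraday Future", "global new energy vehicle sales champion in 2024"))
```

The point of the sketch is the ordering: the premise is looked up first, and the model only elaborates when the lookup supports it, rather than inventing supporting data.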

3. Logical Test: "Stupid" Content as an AI Touchstone

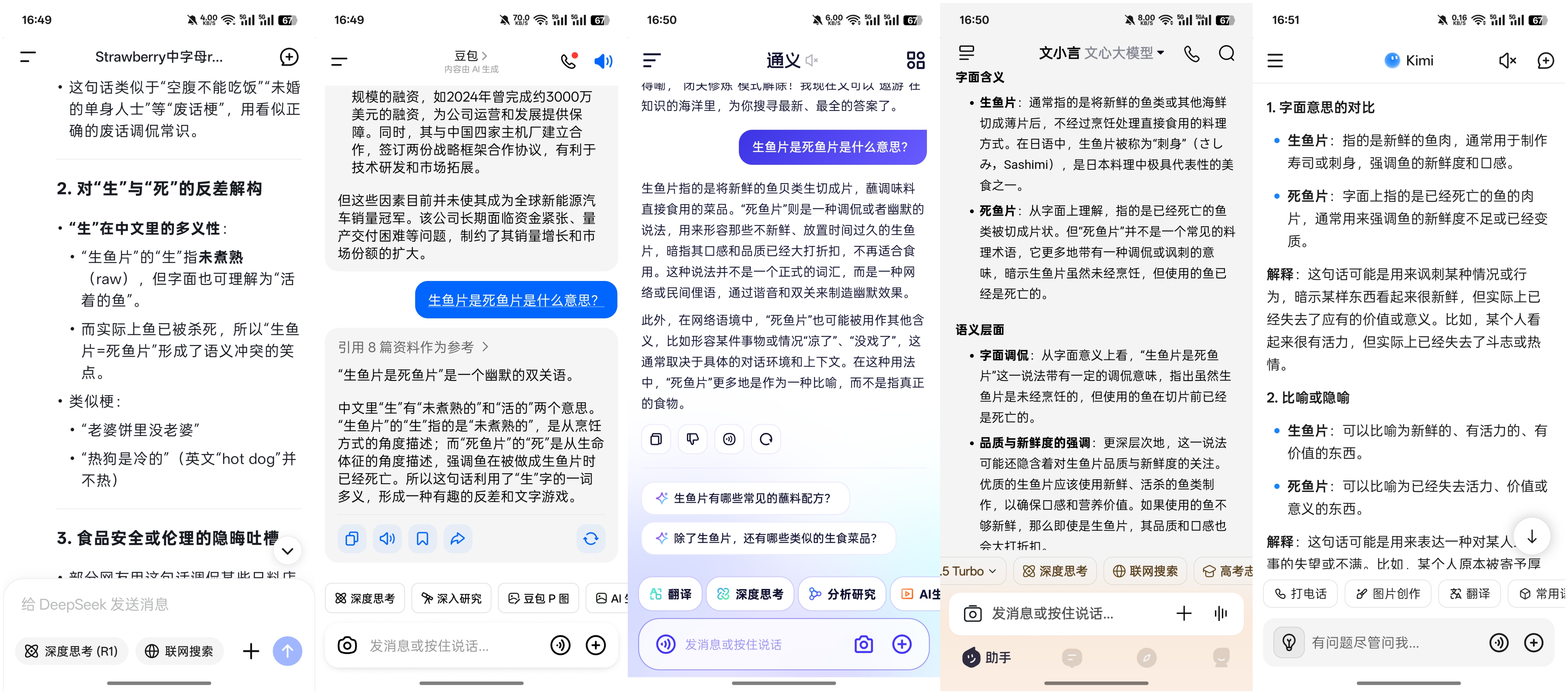

Question: What does "sashimi is dead fish fillet" mean?

Originating from Stupid Bar (Ruozhiba), a forum on Baidu Post Bar (Tieba), this joke plays on the Chinese character 生, which can mean both "raw" and "alive." It tests whether AI models can keep raw versus cooked food separate from living versus dead ingredients.

In this test, DeepSeek, Doubao, and Wenxin correctly explained that sashimi is fillet from dead fish, while Tongyi and Kimi failed. Tongyi took it as a veiled reference to stale food, and Kimi read too much metaphor into it.

(Image source: App screenshot)

Despite the cultural gap, Grok interpreted the joke correctly and noted that it may have spread on Bilibili, Xiaohongshu, and Weibo. It did not mention Post Bar, which perhaps reflects that platform's decline.

(Image source: Grok screenshot)

This question may seem trivial, but it is a touchstone for AI. A 2024 paper by institutions including the Shenzhen Institutes of Advanced Technology and Peking University found that AI models trained on Stupid Bar data performed better than those trained on data from Baidu Encyclopedia, Zhihu, Douban, and Xiaohongshu.

The strong internal logic of Stupid Bar jokes helps AI models reduce hallucinations, strengthen abstract thinking, and better understand colloquial human language and diverse needs.

AI Hallucinations Persist, Rewriting Knowledge Base Unnecessary

The tests show that AI hallucinations are now uncommon but have not disappeared: only a few models failed in each round, and xAI's Grok 3 answered every round correctly. Workable solutions to AI hallucinations also already exist.

On the technical side, AI companies use multi-round reasoning, decomposition of complex problems, and step-by-step verification to keep models from jumping to premature conclusions. External knowledge integration retrieves information from knowledge bases to verify answers, avoiding errors caused by outdated training data.

(Image source: Generated by Doubao AI)

Users can mitigate hallucinations by enabling deep thinking, enabling online search, and adding qualifiers to their questions. Deep thinking strengthens verification logic, refines reasoning chains, and introduces uncertainty assessments. Online search connects the model to external knowledge bases for timely information. Qualifiers such as time, location, and industry narrow the search scope, reducing misjudgments and hallucinations.
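Adding qualifiers can be as simple as stating the scope explicitly in the question. The helper below is a minimal sketch; the function name `add_qualifiers`, the sample question, and the output format are illustrative assumptions, not something prescribed by any of the tested models.

```python
from typing import Optional


def add_qualifiers(question: str,
                   time: Optional[str] = None,
                   location: Optional[str] = None,
                   industry: Optional[str] = None) -> str:
    """Append whichever qualifiers were provided as an explicit scope note."""
    candidates = (("time", time), ("location", location), ("industry", industry))
    scope = [f"{label}: {value}" for label, value in candidates if value]
    if not scope:
        return question  # nothing to add, leave the question unchanged
    return f"{question} (Scope: {'; '.join(scope)})"


print(add_qualifiers("Which company led new energy vehicle sales?",
                     time="2024", location="global"))
# Which company led new energy vehicle sales? (Scope: time: 2024; location: global)
```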

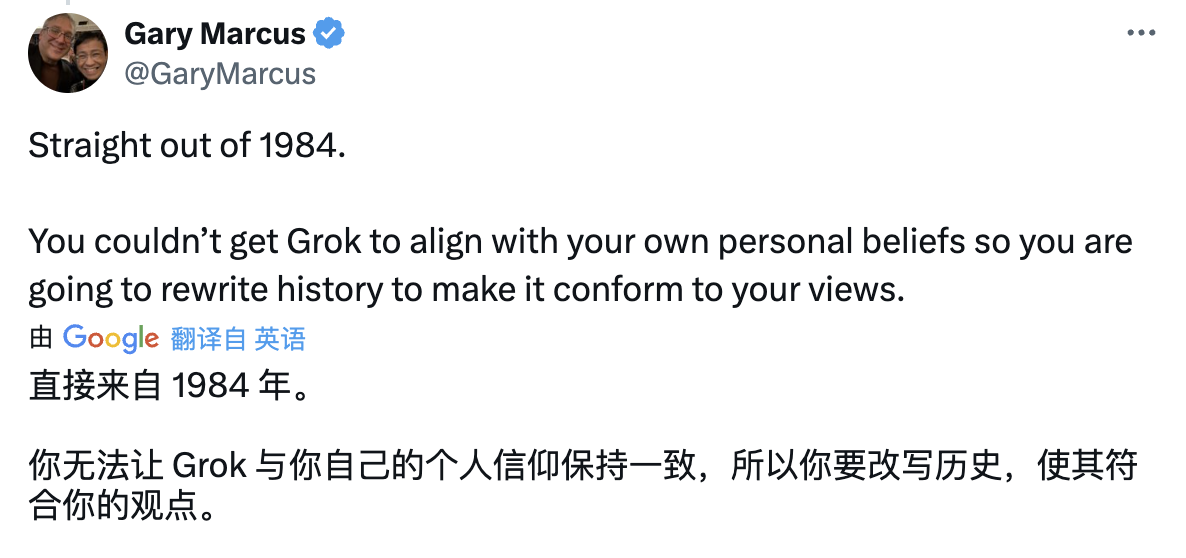

As AI models continue to be optimized, the likelihood of hallucinations keeps falling. Musk's plan to rewrite the human knowledge corpus may be a pursuit of excellence, but it would require significant resources and would not necessarily ensure objectivity or fairness. Gary Marcus, a prominent AI researcher and critic, criticized Musk, saying, "You can't make Grok align with your views, so you rewrite history to suit them."

(Image source: X platform screenshot)

Rewriting the corpus would inevitably incorporate xAI's own perspectives, compromising objectivity. Training AI models also depends on continuously adding new data, so constantly rewriting the corpus would slow Grok's progress.

Adding mechanisms that verify AI-generated content is the best way to reduce hallucinations. Rewriting the human knowledge corpus may be neither cost-effective nor efficient.

A Tsinghua University team has noted that AI hallucinations play a crucial role in abstract creation, autonomous driving, and scientific research. David Baker's team used AI "misfolding" to inspire new protein structures, work that won the 2024 Nobel Prize in Chemistry. For abstract creation and research, retaining some hallucinations is not entirely a bad thing.


Source: Lei Technology

Images in this article sourced from: 123RF Authentic Library.