2018–2025: A Detailed Examination of NVIDIA's Strategic Deployments in Embodied AI Robotics

06/25 2025

NVIDIA CEO Jensen Huang has repeatedly said that "the next wave of AI will be Embodied AI." Acting on this view, NVIDIA has been rolling out its Embodied AI strategy since 2018, aiming to build a complete technology loop and a robust underlying development ecosystem.

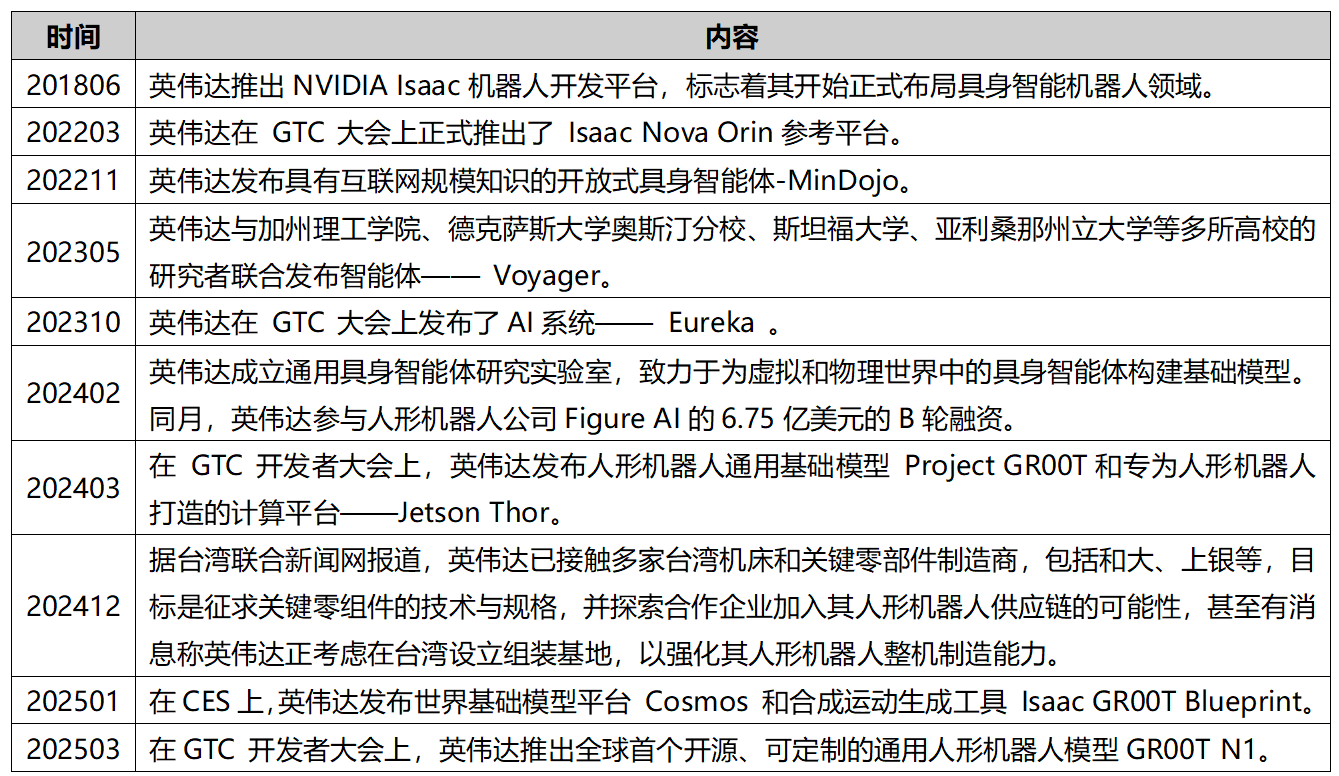

NVIDIA's Strategic Deployments in the Field of Embodied AI (Table by The Age of Machine Awakening)

2018: Laying the Foundation for Embodied AI - Initial Construction of Robot Development Platform

1. In June 2018, NVIDIA introduced the NVIDIA Isaac Platform.

June 2018 marked the launch of the NVIDIA Isaac Robot Development Platform, comprising hardware (Jetson Xavier computing platform) and a suite of software tools (including Isaac SDK, Isaac IMX, and Isaac Sim), thereby setting the stage for robot development, training, and validation.

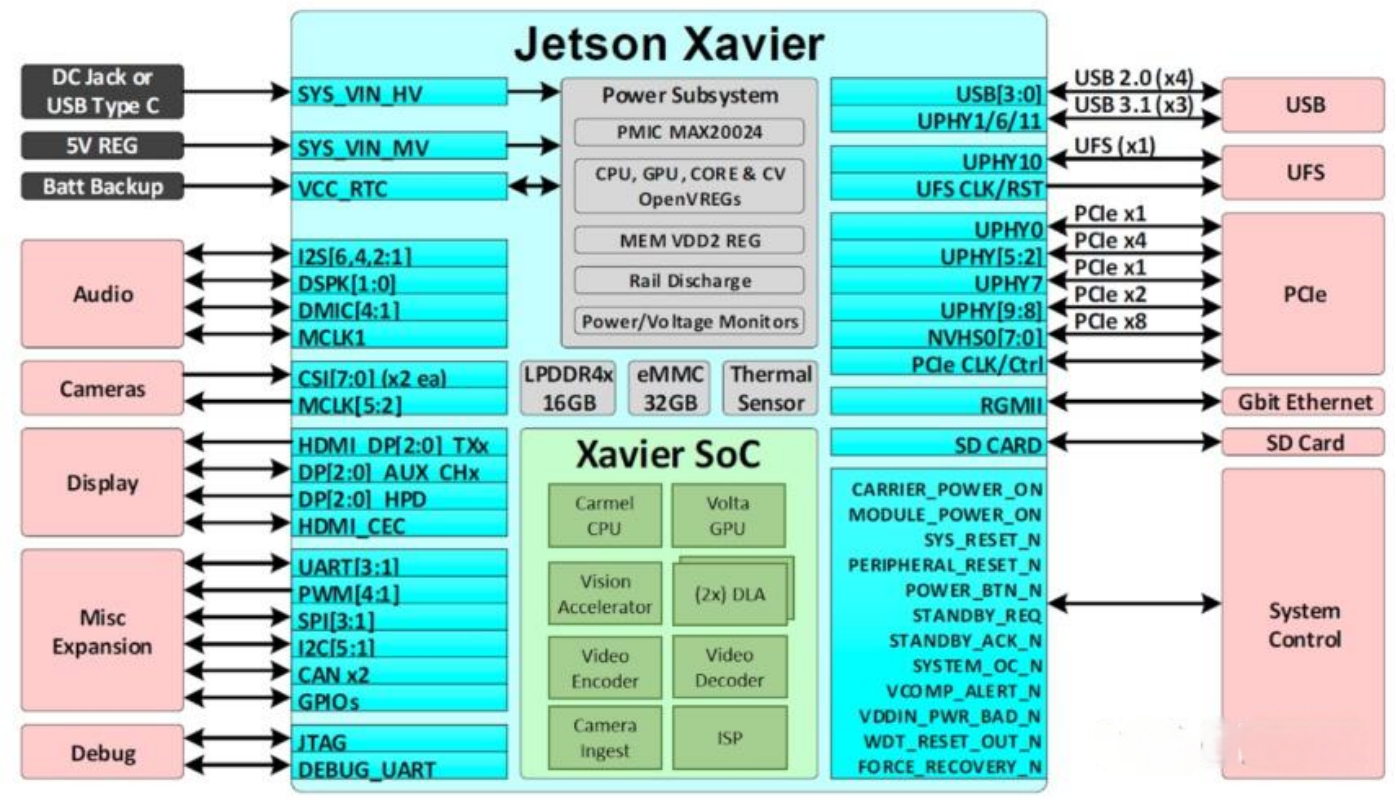

1) Hardware

Jetson Xavier: A computing platform built for robots, centered on the Xavier SoC, which has more than 9 billion transistors and delivers over 30 trillion operations per second (30 TOPS). The chip integrates six types of high-performance processors: a Volta architecture GPU with Tensor Cores, an eight-core ARM64 CPU, two deep learning accelerators (DLAs), an image processor, a vision accelerator, and video encoders and decoders. With this configuration, Jetson Xavier can run multiple algorithms concurrently in real time, covering sensor processing, odometry, localization and mapping, visual perception, and path planning.

Jetson Xavier Architecture Diagram (Source: NVIDIA)

2) Software

NVIDIA offers a comprehensive set of robot learning software tools for Jetson Xavier, covering the entire spectrum from simulation to training, validation, and deployment. These include:

Isaac SDK: A runtime framework equipped with APIs and tools, along with fully accelerated libraries for developing robot algorithm software.

Isaac IMX (Intelligent Machine Acceleration): A collection of pre-developed and optimized algorithm software for robot development, covering diverse areas such as sensor processing, vision and perception, localization, and mapping.

Isaac Sim: Provides developers with a highly realistic virtual environment for training autonomous machines and supports hardware-in-the-loop testing with Jetson Xavier.

Isaac Working Principle (Source: NVIDIA)

Note: From 2018 to 2019, Isaac Sim was independent of Omniverse and developed based on the Unreal/Unity engine. Since then, NVIDIA has gradually migrated Isaac Sim to the Omniverse platform. For instance, the Omniverse Isaac Sim released in 2021 is fully integrated with the Omniverse architecture, replacing the earlier Unreal/Unity engine and incorporating Omniverse's core technologies such as the PhysX 5 physics engine, RTX rendering, and OpenUSD scene description.

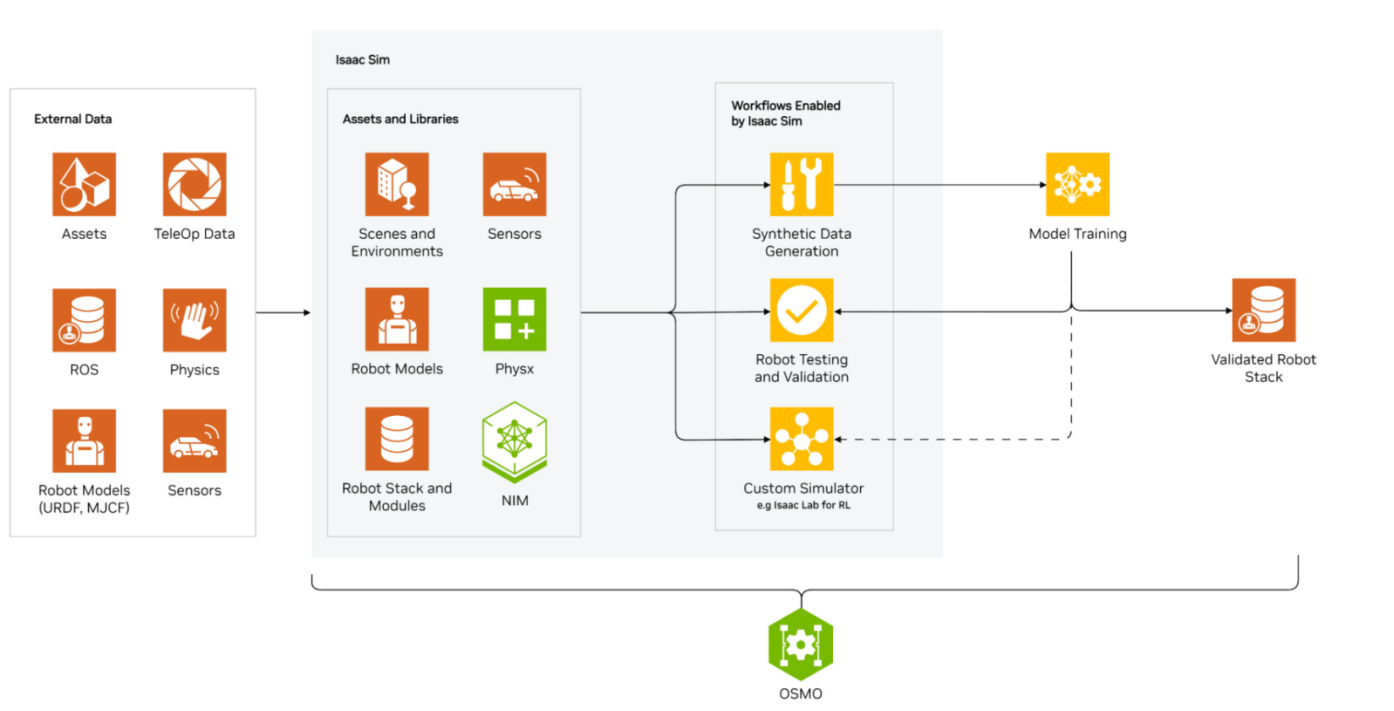

Today, Isaac Sim is a comprehensive robot simulation platform built on NVIDIA Omniverse™ that delivers high-fidelity simulation through accurate physics and realistic rendering. Focused on synthetic data generation (SDG) and on software-in-the-loop and hardware-in-the-loop (SIL/HIL) testing and validation, it serves as a customizable template for building robot simulators.

References

1. NVIDIA Isaac Sim Working Principle

https://developer.nvidia.cn/isaac/sim

2022: Technological Iteration and Ecosystem Expansion

2. In March 2022, NVIDIA unveiled the Isaac Nova Orin Reference Platform.

At GTC in March 2022, NVIDIA officially introduced the Isaac Nova Orin Reference Platform, a compute and sensor platform designed specifically for autonomous mobile robots (AMRs). It combines up to two Jetson AGX Orin computers (up to 550 TOPS combined) with a sensor suite for next-generation AMRs, with the aim of accelerating AMR development and deployment.

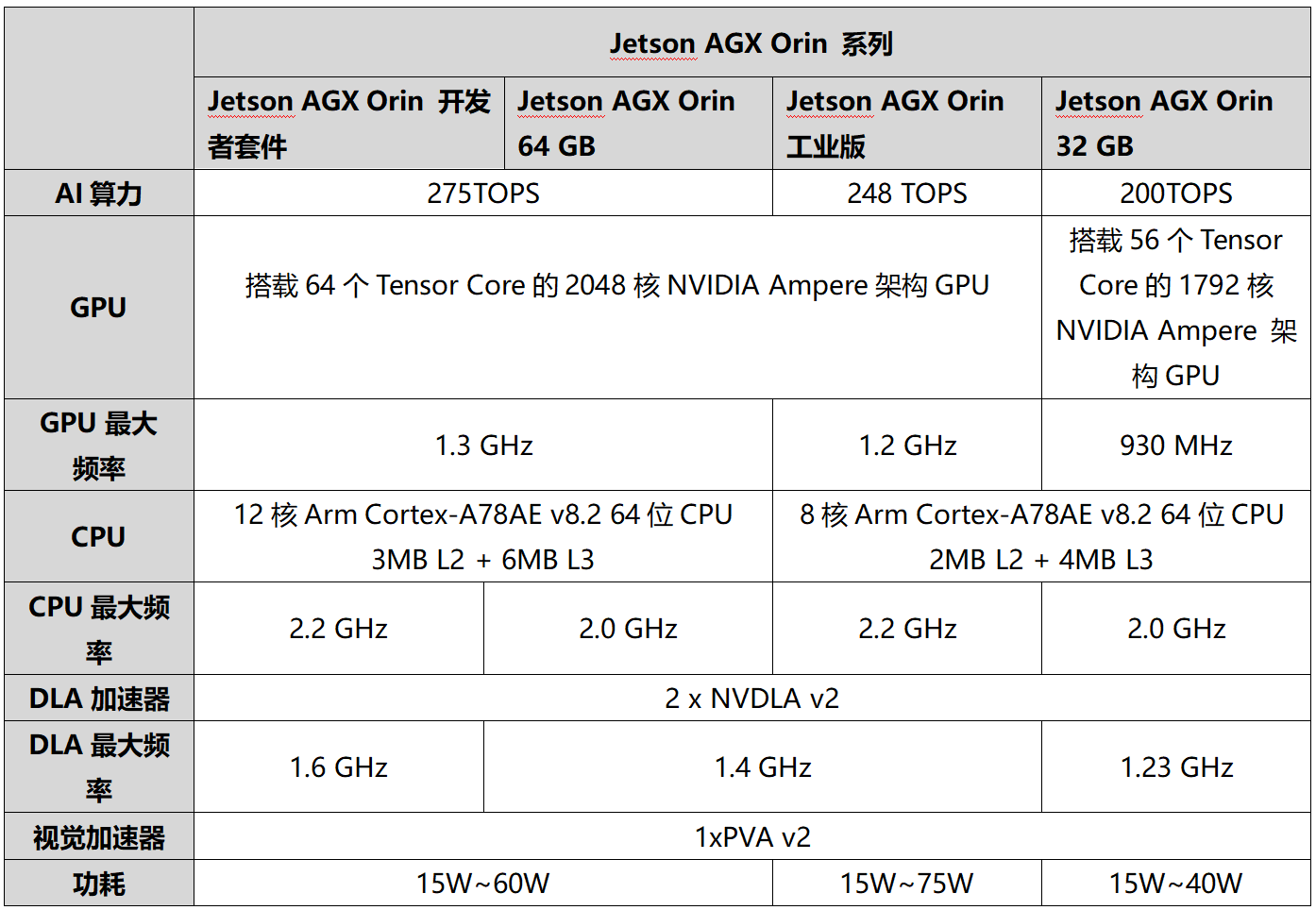

The Jetson AGX Orin is equipped with an NVIDIA Ampere architecture GPU and an Arm Cortex-A78AE CPU, along with a new generation of deep learning and vision accelerators.

Basic Parameter Information of the Jetson AGX Orin Series (Table by The Age of Machine Awakening)

The sensor suite includes 6 cameras (2 depth cameras and 4 wide-angle cameras), 3 LiDARs (2 2D LiDARs for navigation and 1 optional 3D LiDAR for mapping), and 8 ultrasonic sensors.

Note: Subsequent expansion: in March 2024, NVIDIA and Ninebot released the Nova Orin Developer Kit, which is optimized for the Nova Carter AMR platform and comes pre-installed with the Isaac Perceptor stack, further simplifying downstream development.

3. In November 2022, NVIDIA launched the embodied agent framework MineDojo.

On November 22, 2022, NVIDIA introduced MineDojo, a framework for building open-ended embodied agents with internet-scale knowledge. Built on the game Minecraft, it offers a new foundation for embodied agent research.

MineDojo provides three key ingredients for embodied agents: a multi-task, goal-oriented simulation environment, a large-scale multimodal knowledge base, and a flexible, scalable agent architecture. Together these give embodied agent research and development a solid foundation.

Note: Minecraft is a sandbox game developed by Mojang Studios, a subsidiary of Microsoft.

References

1. NVIDIA Isaac Nova Orin

https://zhuanlan.zhihu.com/p/555258658

2023: Integration of Generative AI and Robotics

4. In May 2023, NVIDIA introduced the Voyager agent.

In May 2023, NVIDIA, together with researchers from Caltech, The University of Texas at Austin, Stanford University, Arizona State University, and other institutions, unveiled Voyager.

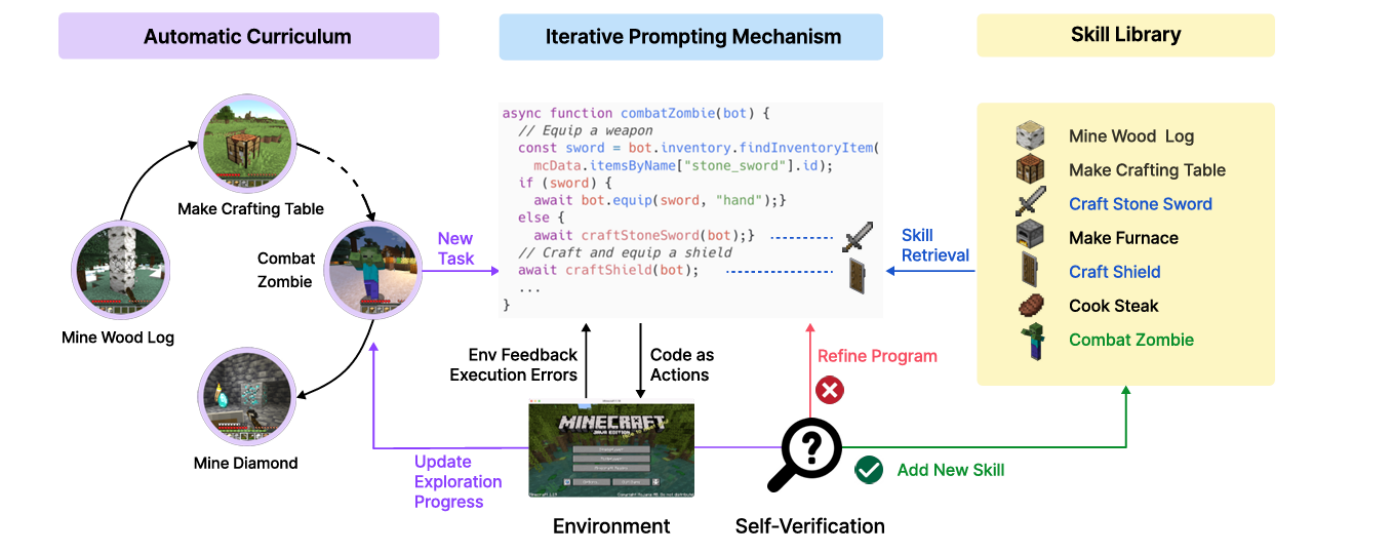

The Voyager agent has three core components: an automatic curriculum, an iterative prompting mechanism, and a skill library.

Voyager Agent Working Principle

Voyager is an LLM-driven embodied agent capable of lifelong learning, demonstrating that large language models can guide an agent to explore and learn complex tasks on its own. In the Minecraft environment, Voyager autonomously explores, proposes tasks based on its surroundings and current state, continuously acquires new skills, and stores them in its skill library as reusable programs. In doing so it exhibits the defining traits of an embodied agent: it interacts with its environment and improves through learning.
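The loop described above (automatic curriculum, iterative prompting with environment feedback, and a growing skill library) can be illustrated with a minimal Python sketch. It is hypothetical throughout: the LLM, the Minecraft environment and the self-verification critic are replaced by stub functions (`fake_llm`, `fake_env`, `fake_critic`), and skill retrieval uses simple word overlap instead of learned embeddings. None of these names come from the Voyager codebase.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class SkillLibrary:
    """Verified skills stored as executable code, keyed by task description."""
    skills: Dict[str, str] = field(default_factory=dict)

    def add(self, task: str, code: str) -> None:
        self.skills[task] = code

    def retrieve(self, task: str, k: int = 2) -> List[str]:
        # Toy retrieval by word overlap with the new task description.
        words = set(task.split())
        ranked = sorted(self.skills.items(),
                        key=lambda kv: len(words & set(kv[0].split())),
                        reverse=True)
        return [code for _, code in ranked[:k]]


def next_task(curriculum: List[str], library: SkillLibrary) -> Optional[str]:
    # Toy automatic curriculum: propose the first task not yet mastered.
    for task in curriculum:
        if task not in library.skills:
            return task
    return None


def iterative_prompting(task: str,
                        write_code: Callable[[str, List[str], str], str],
                        execute: Callable[[str], Tuple[bool, str]],
                        verify: Callable[[str, str], bool],
                        library: SkillLibrary,
                        max_rounds: int = 4) -> bool:
    feedback = ""
    for _ in range(max_rounds):
        code = write_code(task, library.retrieve(task), feedback)
        ok, log = execute(code)
        if ok and verify(task, log):
            library.add(task, code)  # only verified skills enter the library
            return True
        feedback = log  # execution errors are fed back into the next prompt
    return False


# --- Hypothetical stand-ins for the LLM, the game, and the critic ---
def fake_llm(task: str, retrieved: List[str], feedback: str) -> str:
    name = task.replace(" ", "_")
    # The first attempt is "buggy"; the stub repairs it after seeing an error.
    return f"{name}(fixed=True)" if "error" in feedback else f"{name}()"


def fake_env(code: str) -> Tuple[bool, str]:
    ok = "fixed=True" in code
    return ok, "ok" if ok else "error: missing argument"


def fake_critic(task: str, log: str) -> bool:
    return log == "ok"


lib = SkillLibrary()
curriculum = ["mine wood", "craft table", "craft wooden pickaxe"]
for _ in range(10):  # bounded outer loop
    task = next_task(curriculum, lib)
    if task is None:
        break
    iterative_prompting(task, fake_llm, fake_env, fake_critic, lib)
print(sorted(lib.skills))
```

The design choice worth noting is that a skill only enters the library after it both executes and passes verification, so later tasks retrieve and reuse code that is known to work.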

5. In October 2023, NVIDIA released Eureka.

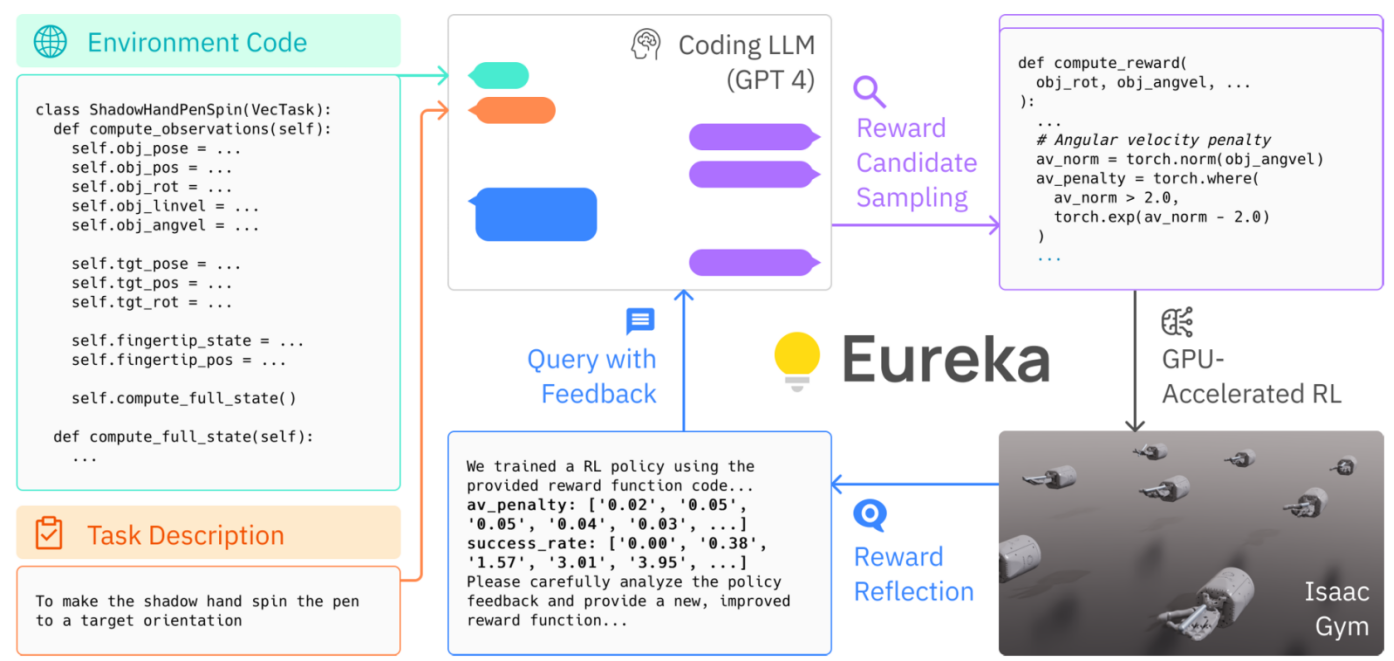

In October 2023, NVIDIA Research introduced Eureka, an AI system for robot training. Combining generative AI with reinforcement learning, Eureka automatically writes and refines reward functions, improving both the efficiency of robot training and the performance of the resulting robots.

Eureka Working Principle (Source: NVIDIA)

Working Principle: Eureka is powered by the GPT-4 large language model and uses a hybrid-gradient architecture: in the outer loop, GPT-4 refines the reward function without gradients, while in the inner loop, reinforcement learning trains the robot controller. As the diagram shows, Eureka takes the unmodified environment source code and a natural-language task description as context and uses the LLM's code-writing ability to generate executable reward functions zero-shot. It then iterates over three steps, namely reward sampling, GPU-accelerated reward evaluation, and reward reflection, progressively improving the quality of the reward function.
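The outer/inner loop structure can be seen in a toy Python sketch. Everything here is an assumption for illustration: the task is a 1-D point that should reach a target, the "LLM" is a random sampler that first guesses and then mutates two reward weights (`dist`, `effort`), the "RL" inner loop is a grid search over a controller gain, and "reflection" is reduced to conditioning the next round on the best candidate so far. It mirrors the shape of the search (sample several reward candidates, train under each, score with a task metric, keep the best), not Eureka's actual code or prompts.

```python
import random
from typing import Dict, List, Tuple

TARGET, DT, STEPS = 1.0, 0.1, 30
GAINS = [0.1, 0.5, 1.0, 2.0, 5.0]  # inner-loop policy search space


def rollout(gain: float) -> List[Tuple[float, float]]:
    """Simulate a 1-D point moving toward TARGET under a clipped P-controller."""
    x, traj = 0.0, []
    for _ in range(STEPS):
        a = max(-1.0, min(1.0, gain * (TARGET - x)))
        x += a * DT
        traj.append((x, a))
    return traj


def make_reward(w: Dict[str, float]):
    """Stands in for an LLM-written reward function with two weights."""
    return lambda x, a: -w["dist"] * abs(TARGET - x) - w["effort"] * a * a


def inner_loop(reward) -> float:
    """Toy 'RL': pick the gain with the highest return under this reward."""
    return max(GAINS, key=lambda g: sum(reward(x, a) for x, a in rollout(g)))


def fitness(gain: float) -> float:
    """Ground-truth task metric (higher is better): negative final distance."""
    return -abs(TARGET - rollout(gain)[-1][0])


def llm_sample(rng: random.Random, parent):
    """Stub for the LLM: fresh guesses first, then mutations of the best so far."""
    if parent is None:
        return {"dist": rng.uniform(0, 1), "effort": rng.uniform(0, 1)}
    return {k: max(0.0, v + rng.gauss(0, 0.3)) for k, v in parent.items()}


def eureka_search(iterations: int = 5, samples_per_iter: int = 8, seed: int = 0):
    rng = random.Random(seed)
    best_w, best_fit = None, float("-inf")
    for _ in range(iterations):
        parent = best_w  # crude "reflection": next round builds on the best candidate
        for _ in range(samples_per_iter):
            w = llm_sample(rng, parent)
            f = fitness(inner_loop(make_reward(w)))  # train, then score
            if f > best_fit:
                best_w, best_fit = w, f
    return best_w, best_fit


best_w, best_fit = eureka_search()
print(best_w, best_fit)
```

The key point the sketch preserves is that candidate rewards are judged by a separate task metric (`fitness`), not by their own returns, which is what lets the outer loop reject reward functions that are easy to maximize but do not produce the desired behavior.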

Application Scenarios: Eureka is primarily used for training robots on complex tasks, particularly those requiring precise control and advanced skills, such as dexterous manipulation and learning of intricate movements.

Reference Papers

1. Voyager: An Open-Ended Embodied Agent with Large Language Models

2. Eureka: Human-Level Reward Design via Coding Large Language Models

2024: NVIDIA Unveils General-Purpose Foundation Model GR00T

6. In February 2024, NVIDIA established the Generalist Embodied Agent Research (GEAR) Lab.

In February 2024, NVIDIA founded the Generalist Embodied Agent Research (GEAR) Lab, co-led by Jim Fan and Yuke Zhu. The lab develops foundation models for embodied agents in both virtual and physical worlds, focusing on four research areas: multimodal foundation models, generalist robot models, agents in virtual worlds, and simulation and synthetic data.

7. In March 2024, NVIDIA announced Project GR00T, a general-purpose foundation model for humanoid robots.

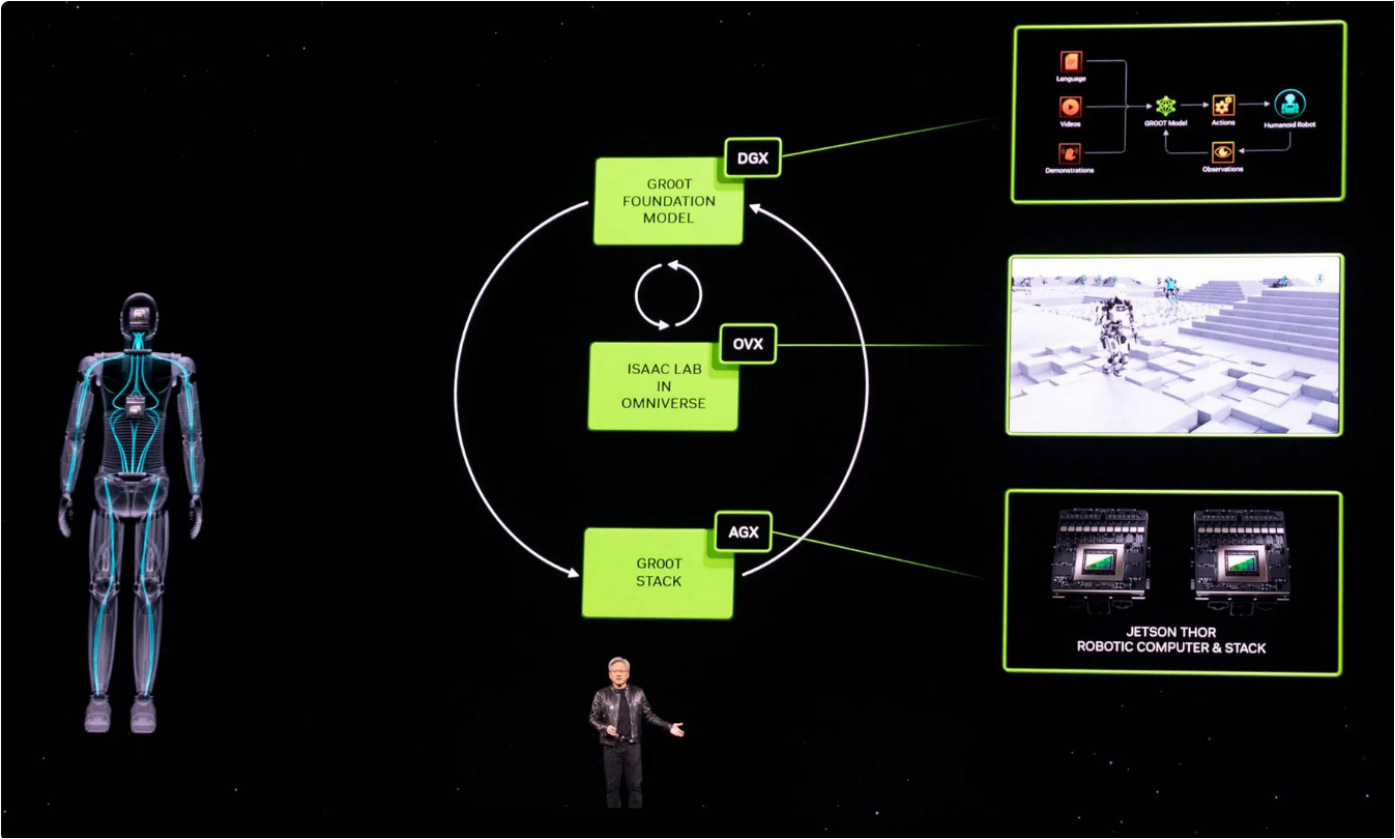

At the GTC Developer Conference in March 2024, NVIDIA introduced Project GR00T, a general-purpose foundation model for humanoid robots. By understanding natural language (text or speech) and learning through imitation from videos of human behavior and real-world demonstrations, GR00T is intended to help humanoid robots quickly learn and coordinate a wide range of skills so they can adapt to and interact with the real world.

NVIDIA Releases Universal Foundation Model Project GR00T for Humanoid Robots (Source: NVIDIA)

Furthermore, NVIDIA has announced collaborations with multiple humanoid robot companies, including 1X Technologies, Agility Robotics, Apptronik, Boston Dynamics, Figure AI, Sanctuary AI, Unitree Robotics, Fourier Intelligence, and XPeng Robotics, to jointly develop the "GR00T" project.

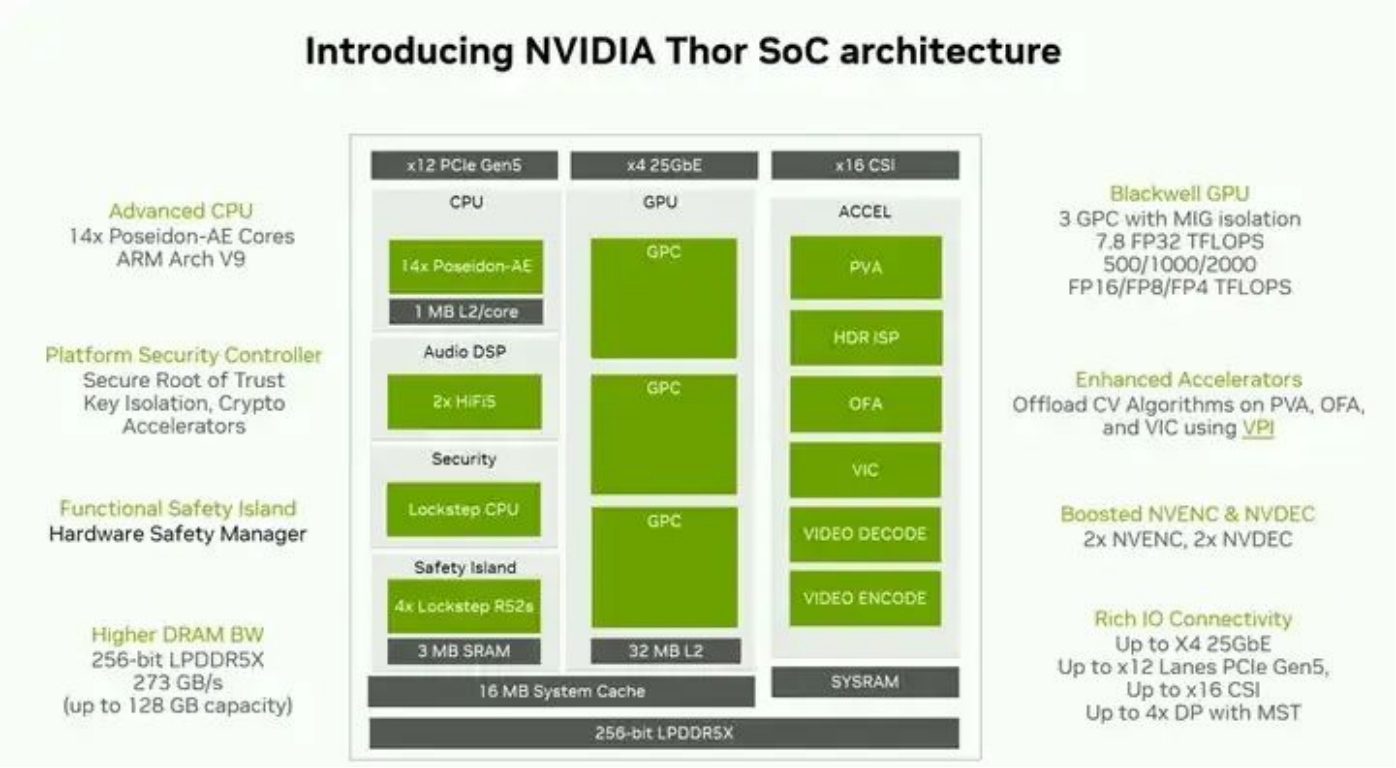

At the same conference, NVIDIA also introduced Jetson Thor, a computing platform designed specifically for humanoid robots that can run multimodal AI models (such as vision, speech, and motion planning) in parallel.

The Thor SoC features a Blackwell-architecture GPU with an integrated Transformer Engine that natively supports FP4 (4-bit floating point) and FP8 (8-bit floating point) operations, significantly reducing the inference power consumption and latency of large Transformer models (such as GPT and BERT). The GPU is also partitioned into three independent clusters, and MIG (Multi-Instance GPU) technology allows compute resources to be allocated flexibly for multitasking and resource isolation.

On the CPU and memory side, the Thor SoC has a 14-core Arm Neoverse-V3AE CPU (the "AE" suffix denotes Arm's Automotive Enhanced cores), offering 2.6x the performance of its predecessor and strengthening real-time control tasks such as motor drive and sensor fusion. Memory capacity has doubled to 128 GB, with 273 GB/s of bandwidth, enabling very large models to be loaded locally and supporting high-speed data throughput.
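To make the link between FP4/FP8 support, 128 GB of memory and "local loading of ultra-large models" concrete, here is a back-of-envelope calculation in Python. It is a rough sketch under stated assumptions: only weights are counted (no KV cache, activations, or OS reservation), GB are decimal, the model sizes are hypothetical, and the token-rate figure is an upper bound for memory-bound, single-stream decoding in which every generated token reads all weights once. Real throughput will be lower.

```python
# Back-of-envelope sizing for Jetson Thor (illustrative assumptions only):
# weights only (no KV cache, activations, or OS), decimal GB, batch size 1.
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}
MEMORY_GB = 128        # memory capacity quoted in the text
BANDWIDTH_GB_S = 273   # memory bandwidth quoted in the text


def weight_footprint_gb(params_billion: float, fmt: str) -> float:
    """Memory needed for the weights: 1e9 params * bytes/param / 1e9 bytes/GB."""
    return params_billion * BYTES_PER_PARAM[fmt]


def decode_tokens_per_s_upper_bound(params_billion: float, fmt: str) -> float:
    """If decoding is memory-bound, every token streams all weights once,
    so bandwidth / footprint caps the single-stream token rate."""
    return BANDWIDTH_GB_S / weight_footprint_gb(params_billion, fmt)


for size in (8, 70):  # hypothetical model sizes, in billions of parameters
    for fmt in BYTES_PER_PARAM:
        gb = weight_footprint_gb(size, fmt)
        print(f"{size}B @ {fmt}: {gb:6.1f} GB, fits={gb <= MEMORY_GB}, "
              f"<= {decode_tokens_per_s_upper_bound(size, fmt):5.1f} tokens/s")
```

Under these assumptions, a 70B-parameter model's weights need 140 GB at FP16 and do not fit, while FP8 (70 GB) and FP4 (35 GB) do; each halving of bytes per parameter also doubles the bandwidth-bound token rate.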

Moreover, Jetson Thor integrates a functional safety island and various traditional accelerators such as ISP, video codecs, a vision processing engine (PVA), an optical flow accelerator (OFA), etc. It provides a unified development framework called Vision Programming Interface (VPI) across accelerators (PVA/OFA), simplifying design and integration efforts.

NVIDIA Thor SoC Chip Architecture Block Diagram (Image Source: NVIDIA)

Additionally, NVIDIA announced significant upgrades to the Isaac robot development platform:

1) Launch of new base models and related tools

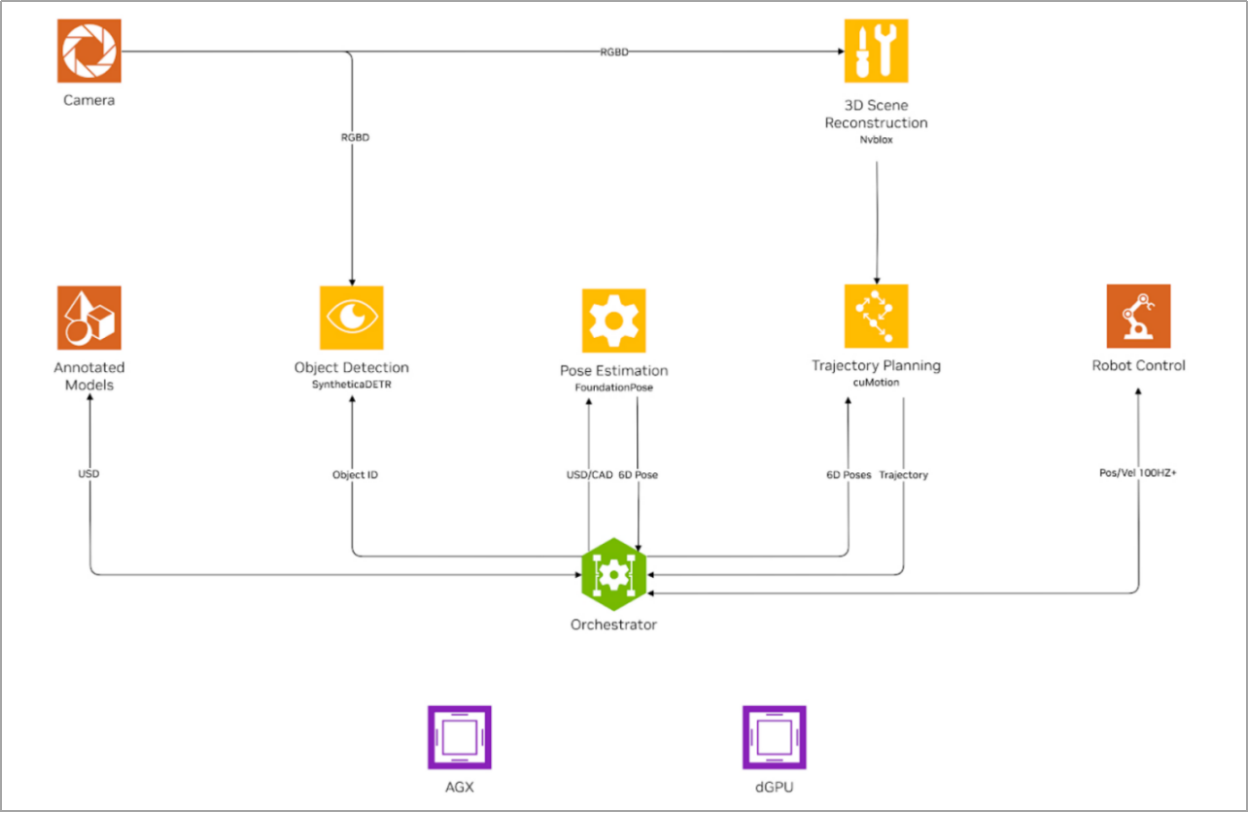

Isaac Manipulator: Built on Isaac ROS, it comprises NVIDIA CUDA acceleration libraries, AI models, and reference workflows for robot developers. It aims to assist robot software developers in creating AI robotic arms or manipulators capable of perceiving, understanding, and interacting with their environments, supporting functions such as motion planning, object detection, pose estimation, and tracking.

NVIDIA Isaac Manipulator Working Principle (Image Source: NVIDIA)

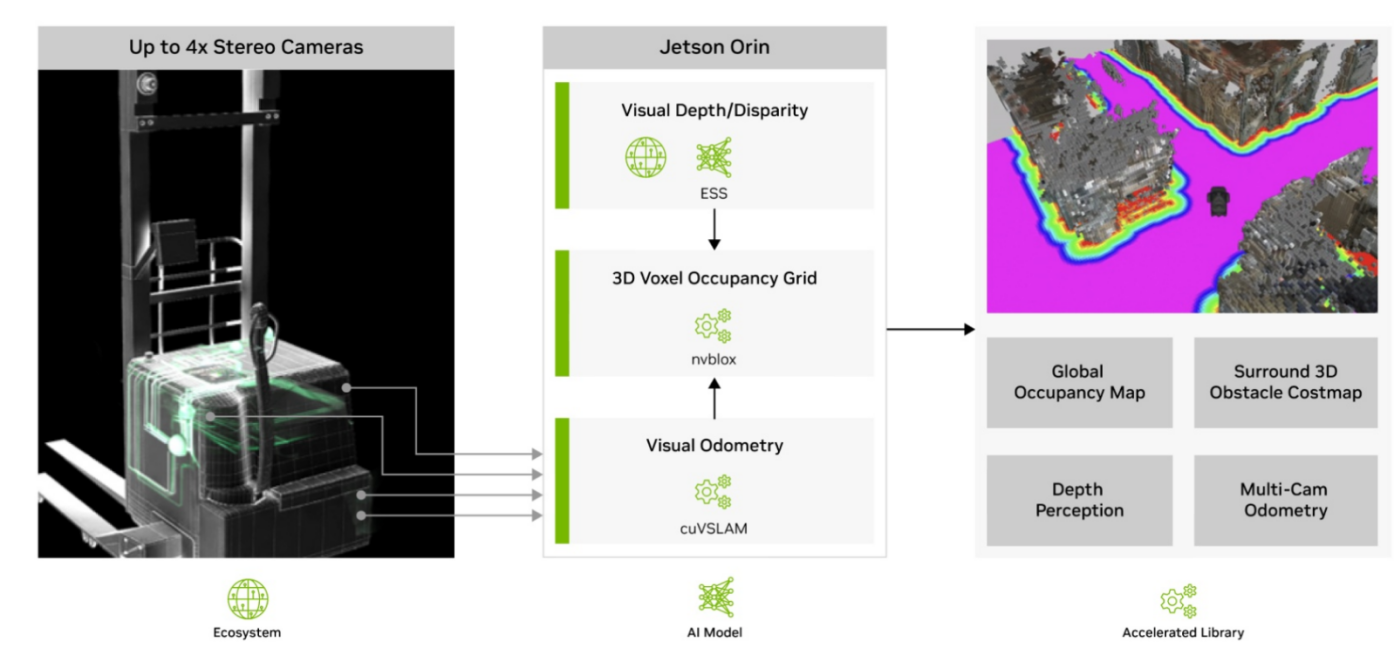

Isaac Perceptor: Built on Isaac ROS, it is a collection of NVIDIA CUDA acceleration libraries, AI models, and reference workflows for developing Autonomous Mobile Robots (AMRs). It provides support for reliable visual odometry and 360-degree surround vision for obstacle detection and occupancy mapping. Its objective is to aid AMRs in perception, localization, and operation in unstructured environments such as warehouses, factories, and outdoor settings.

NVIDIA Isaac Perceptor Working Principle (Image Source: NVIDIA)
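Occupancy mapping, one of the functions listed for Isaac Perceptor, is commonly built on a log-odds grid update. The Python sketch below shows that textbook technique for a single 2-D range beam; it is not Isaac Perceptor's implementation, and the sensor-model increments `L_OCC` and `L_FREE` are assumed values. Cells the beam passes through become more likely free, and the cell where it ends becomes more likely occupied.

```python
import math
from typing import List, Tuple

# Assumed inverse sensor model, expressed as log-odds increments.
L_OCC, L_FREE = 0.85, -0.4


def bresenham(x0: int, y0: int, x1: int, y1: int) -> List[Tuple[int, int]]:
    """Grid cells traversed by a straight ray from (x0, y0) to (x1, y1)."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


class OccupancyGrid:
    def __init__(self, width: int, height: int):
        # Log-odds 0.0 corresponds to probability 0.5, i.e. "unknown".
        self.logodds = [[0.0] * width for _ in range(height)]

    def update_beam(self, origin: Tuple[int, int], hit: Tuple[int, int]) -> None:
        """Cells along the beam: more likely free; end cell: more likely occupied."""
        ray = bresenham(*origin, *hit)
        for x, y in ray[:-1]:
            self.logodds[y][x] += L_FREE
        hx, hy = ray[-1]
        self.logodds[hy][hx] += L_OCC

    def probability(self, x: int, y: int) -> float:
        """Convert log-odds back to an occupancy probability (logistic function)."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.logodds[y][x]))


grid = OccupancyGrid(10, 10)
for _ in range(3):  # three consistent readings hitting an obstacle at (5, 0)
    grid.update_beam((0, 0), (5, 0))
print(round(grid.probability(5, 0), 2), round(grid.probability(2, 0), 2),
      grid.probability(0, 5))
```

Working in log-odds turns repeated Bayesian updates into additions, which is why evidence from many beams and frames can be fused cheaply per cell.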

2) Enhanced Simulation Capabilities

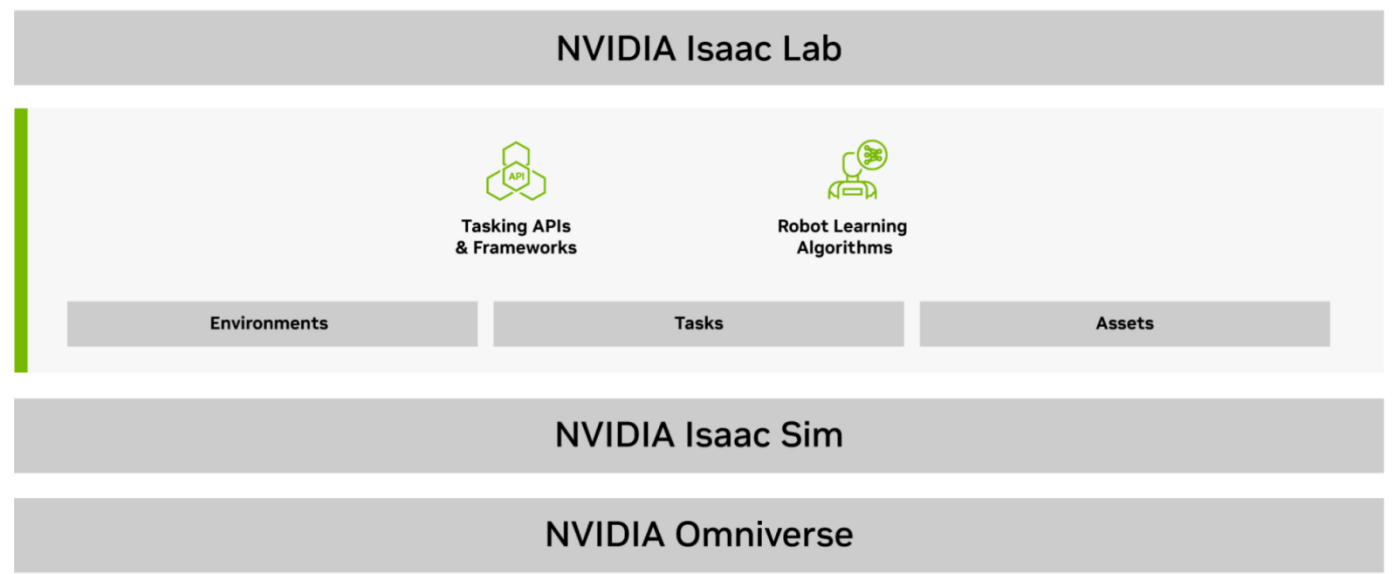

Isaac Lab: A lightweight open-source framework built on Isaac Sim, utilizing NVIDIA PhysX and RTX rendering for high-fidelity physics simulations. It bridges the gap between high-fidelity simulations and perception-based robot training. Optimized for robot learning workflows, it aims to simplify common tasks in robot research, including reinforcement learning, imitation learning, and motion planning.

NVIDIA Isaac Lab Architecture Block Diagram (Image Source: NVIDIA)

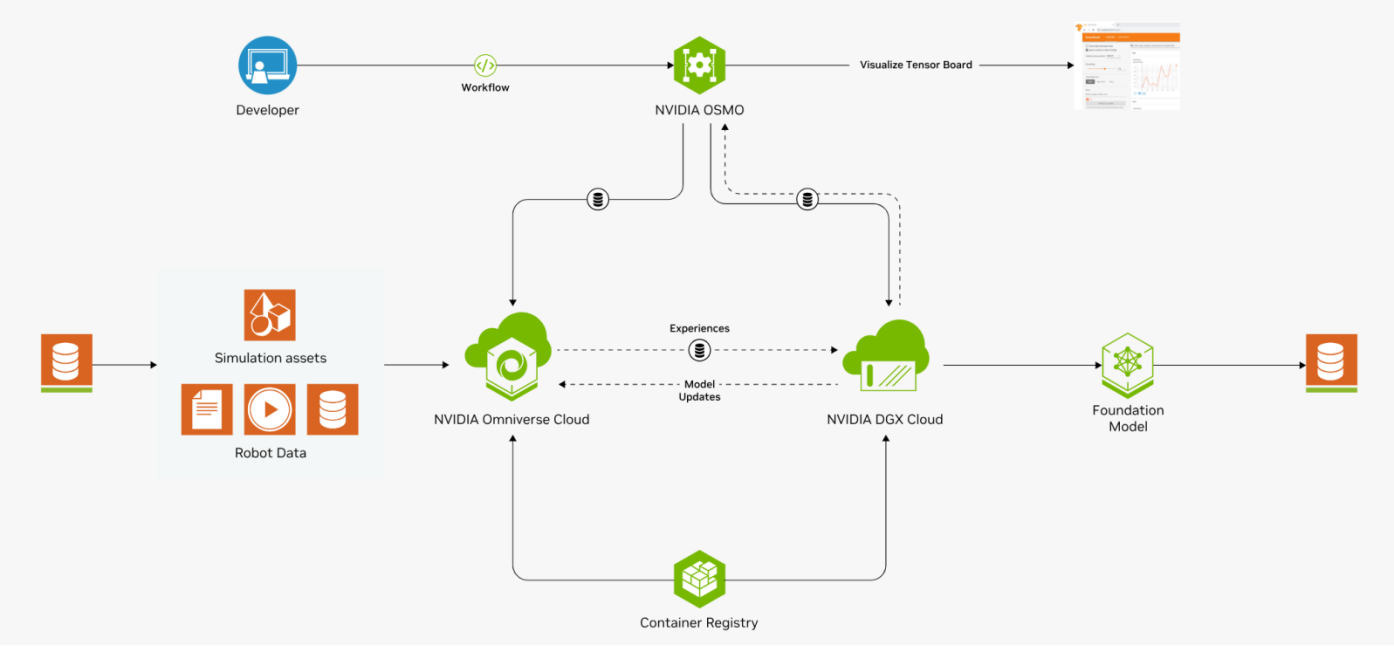

OSMO: A cloud-native workflow orchestration platform for scaling complex, multi-stage, and multi-container robot workloads locally, in private clouds, and public clouds. It helps users orchestrate, visualize, and manage a range of robot development tasks on the Isaac platform, encompassing synthetic data generation, model training, reinforcement learning, and software-in-the-loop testing for humanoid robots, AMRs, and industrial manipulators.

NVIDIA OSMO Working Principle (Image Source: NVIDIA)
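The core job of a workflow orchestrator like OSMO is resolving which stages depend on which and running independent stages concurrently. The Python sketch below illustrates only that dependency ordering, using the standard library's `graphlib`; it does not use OSMO's actual workflow format or API, and the stage names (`sdg_shard_0`, `train_policy`, and so on) are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical multi-stage robot workflow: each stage maps to its prerequisites.
workflow: Dict[str, Set[str]] = {
    "sdg_shard_0": set(),                          # synthetic data generation
    "sdg_shard_1": set(),
    "train_policy": {"sdg_shard_0", "sdg_shard_1"},
    "rl_finetune": {"train_policy"},               # reinforcement learning
    "sil_test": {"rl_finetune"},                   # software-in-the-loop testing
}


def schedule_waves(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group stages into waves; stages within one wave can run concurrently."""
    ts = TopologicalSorter(graph)
    ts.prepare()  # also raises CycleError if the dependencies form a cycle
    waves = []
    while ts.is_active():
        ready = sorted(ts.get_ready())
        waves.append(ready)
        ts.done(*ready)  # here every stage in a wave is assumed to succeed
    return waves


for i, wave in enumerate(schedule_waves(workflow)):
    print(f"wave {i}: {wave}")
```

In a real deployment each wave's stages would be dispatched to separate containers or nodes, and `done()` would be called only as each job actually finishes.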

References

1. Deep Dive into NVIDIA's Third-Generation Computer Jetson Thor

https://mp.weixin.qq.com/s/XnONhdCEjN3YC1Y9guSH1w

2. NVIDIA Isaac AI Robot Development Platform

https://developer.nvidia.cn/isaac

2025: An Open-Source Foundation Model for Humanoid Robots

In January 2025, NVIDIA unveiled Cosmos and Isaac GR00T Blueprint at CES.

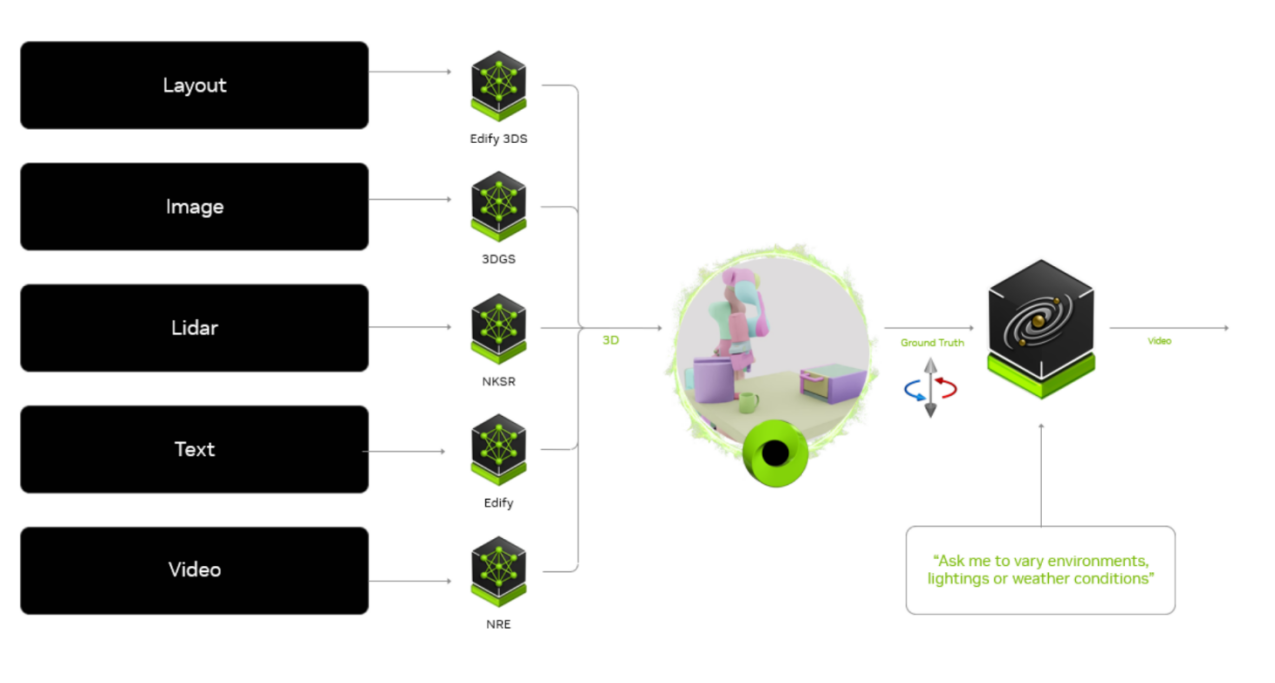

1) World Foundation Model Platform: Cosmos

NVIDIA Cosmos is a generative world foundation model platform that brings together generative world foundation models (WFMs), advanced tokenizers (Cosmos Tokenizer), a guardrail system, and an accelerated video processing pipeline (NeMo Curator). It helps developers generate large amounts of physics-based synthetic data, reducing reliance on real-world data.

Cosmos accepts text, image, or video prompts and generates highly realistic virtual world states, accelerating physical AI development for autonomous driving and robotics. Its guardrails screen inputs and outputs to support safe and compliant use, and developers can fine-tune Cosmos models into custom models tailored to specific applications.

Pre-trained on 20 million hours of robotics and autonomous driving data, these world foundation models generate physics-based world states. The platform includes a family of pre-trained multimodal models that developers can use out of the box for world generation and reasoning, or retrain to build specialized physical AI models.

Cosmos Predict: A general-purpose model that generates virtual world states from multimodal inputs such as text, images, and video. Built on a Transformer-based architecture, it supports multi-frame generation and, given a start image and an end image, can predict the intermediate behavior or motion trajectory between them. The model was trained on 9,000 trillion tokens of robotics and autonomous driving data and is designed for post-training.

Cosmos Transfer: Generates controllable and realistic video outputs from structured visual or geometric data inputs, such as segmentation maps, depth maps, LiDAR scans, pose estimation maps, and trajectory maps. It employs the ControlNet architecture, leveraging spatio-temporal control maps to dynamically align synthetic and real-world representations, ensuring precise spatial alignment and scene composition. This world-to-world transfer model bridges the perceptual gap between simulated and real-world environments.

Cosmos Reason: A fully customizable multimodal reasoning model with spatio-temporal understanding. It uses chain-of-thought reasoning to interpret video data and predict the outcomes of interactions in order to plan responses. Cosmos Reason is trained in three stages: pre-training, general supervised fine-tuning (SFT), and reinforcement learning (RL). Together these stages strengthen its ability to reason, predict, and respond in real-world scenarios.

Application cases for NVIDIA Cosmos include synthetic data generation (SDG), policy model initialization, policy model evaluation, and multi-view generation.

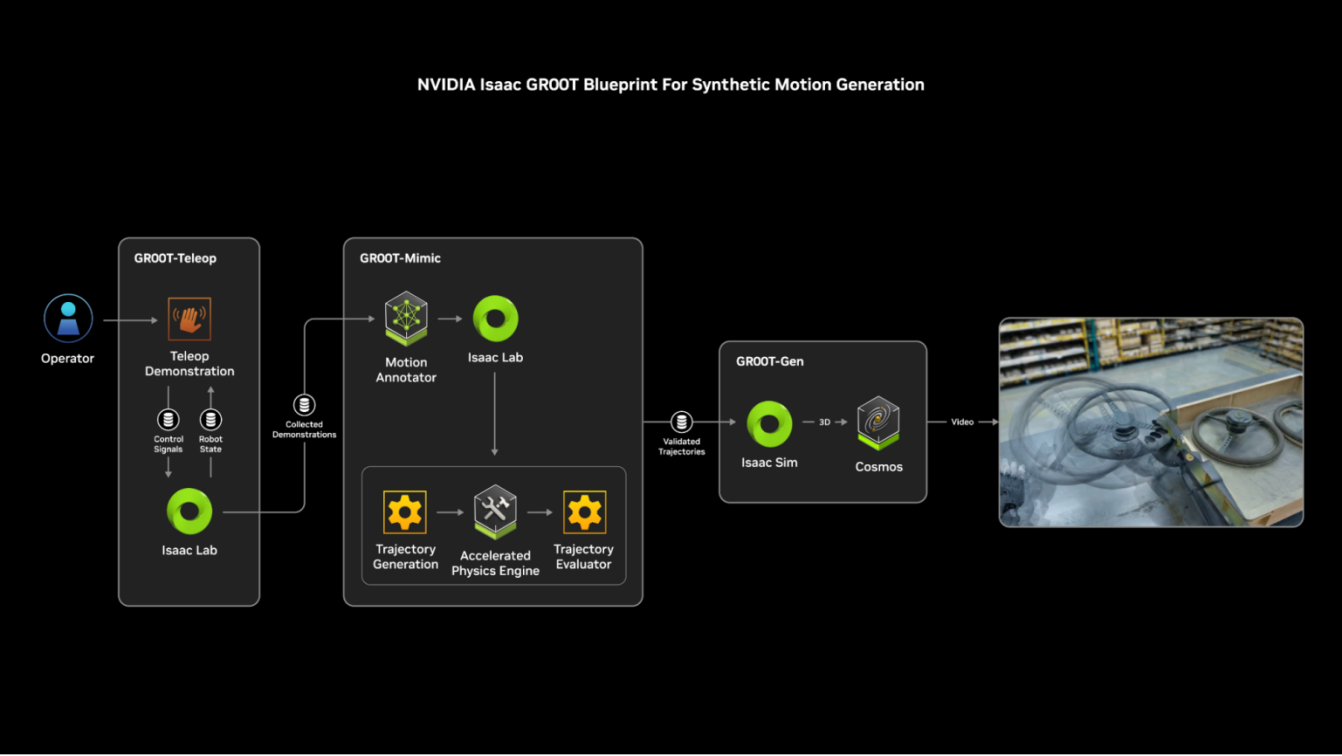

2) Synthetic Motion Generation Tool: Isaac GR00T Blueprint

Isaac GR00T Blueprint is an end-to-end solution comprising a robot foundation model, a data pipeline, and a simulation framework, providing a digital-twin training environment for general-purpose robots. It helps developers generate large volumes of synthetic motion data for training robots through imitation learning.

In March 2025, at the GTC Developer Conference, NVIDIA introduced GR00T N1, which it describes as the world's first open-source, customizable foundation model for generalist humanoid robots.

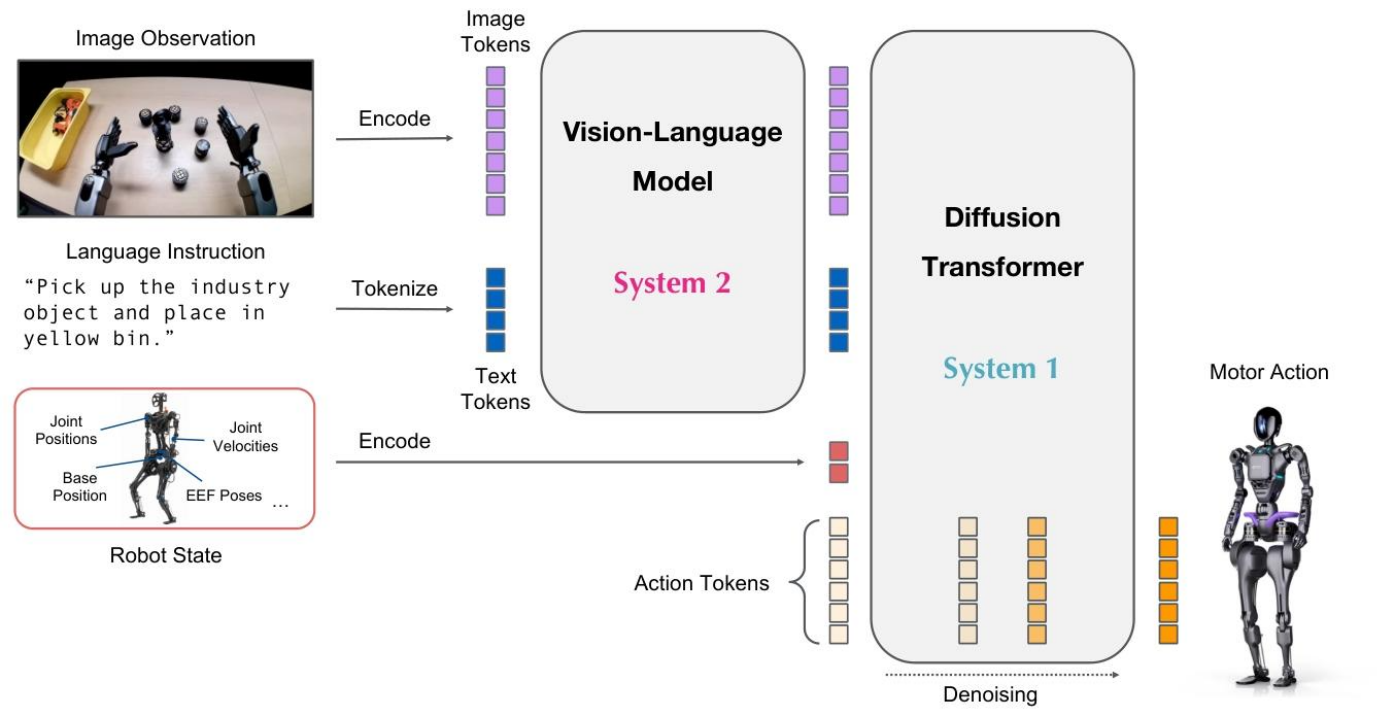

GR00T N1 is a Vision-Language-Action (VLA) model with a dual-system architecture. "System 1" is an action module based on a Diffusion Transformer (DiT) that attends to the output tokens of the Vision-Language Model (VLM) through cross-attention. It uses embodiment-specific encoders and decoders to handle state and action vectors whose dimensions vary from robot to robot, and generates motor actions at a higher frequency (120Hz).

"System 2" is the reasoning module based on the Vision-Language Model (VLM), running at 10Hz on NVIDIA L40 GPUs. It processes the robot's visual perception and language instructions to interpret the environment and understand task goals.

Both "System 1" and "System 2" are neural networks built on Transformers, tightly coupled and jointly optimized during training to achieve efficient collaboration between reasoning and execution.
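The timing relationship between the two systems can be illustrated with a minimal Python sketch. It is hypothetical and deliberately simplified: the VLM (`system2`) and the DiT action module (`system1`) are replaced by tiny linear stubs, cross-attention is replaced by averaging the VLM tokens, and both systems run synchronously in one loop. What it does reflect from the text is the 10 Hz / 120 Hz split, the fact that System 1 always acts on the most recent System 2 output, and per-embodiment encoders/decoders that map different state and action sizes to and from a shared width (`HIDDEN`, an assumed value).

```python
from typing import Callable, Dict, List

HIDDEN = 4                  # shared embedding width (assumed)
BASE_HZ, VLM_HZ = 120, 10   # System 1 / System 2 rates quoted in the text


def make_encoder(state_dim: int) -> Callable[[List[float]], List[float]]:
    """Embodiment-specific encoder: maps a state of any size to HIDDEN dims."""
    w = [[((i + j) % 3 - 1) * 0.1 for j in range(state_dim)] for i in range(HIDDEN)]
    return lambda s: [sum(a * b for a, b in zip(row, s)) for row in w]


def make_decoder(action_dim: int) -> Callable[[List[float]], List[float]]:
    """Embodiment-specific decoder: maps HIDDEN dims to this robot's action size."""
    w = [[((i * j) % 3 - 1) * 0.1 for j in range(HIDDEN)] for i in range(action_dim)]
    return lambda h: [sum(a * b for a, b in zip(row, h)) for row in w]


def system2(tick: int) -> List[List[float]]:
    """Stub for the VLM: returns a few 'output tokens' for scene + instruction."""
    return [[float(tick % 5)] * HIDDEN for _ in range(2)]


def system1(tokens: List[List[float]], z: List[float],
            decode: Callable[[List[float]], List[float]]) -> List[float]:
    """Stub action module: pools VLM tokens (in place of cross-attention),
    fuses them with the encoded state, and decodes an action."""
    context = [sum(t[k] for t in tokens) / len(tokens) for k in range(HIDDEN)]
    return decode([zk + ck for zk, ck in zip(z, context)])


def run_dual_system(seconds: int, state_dim: int, action_dim: int) -> Dict[str, int]:
    encode, decode = make_encoder(state_dim), make_decoder(action_dim)
    ratio = BASE_HZ // VLM_HZ  # System 2 refreshes every 12 System 1 ticks
    tokens: List[List[float]] = []
    token_tick = 0
    state = [0.0] * state_dim
    log = {"vlm_calls": 0, "action_calls": 0, "max_staleness": 0, "action_dim": 0}
    for tick in range(seconds * BASE_HZ):
        if tick % ratio == 0:  # slow path, 10 Hz
            tokens, token_tick = system2(tick), tick
            log["vlm_calls"] += 1
        action = system1(tokens, encode(state), decode)  # fast path, 120 Hz
        log["action_calls"] += 1
        log["action_dim"] = len(action)
        log["max_staleness"] = max(log["max_staleness"], tick - token_tick)
        state = [s + 0.01 for s in state]  # toy state evolution
    return log


print(run_dual_system(1, state_dim=7, action_dim=7))
```

In one simulated second this yields 10 System 2 calls and 120 System 1 calls, and the VLM context used for any action is at most 11 fast ticks old; a real system would run the two modules asynchronously rather than in a single loop.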

The data used for pre-training the GR00T N1 model includes real robot trajectories, synthetic data, and human videos.

Collaboration Cases: Robot companies such as 1X Technologies, Agility Robotics, Boston Dynamics, and Fourier have integrated GR00T N1, using the foundation model and its supporting toolchain to develop next-generation robot products and deploy them across diverse application scenarios.

References

2. Paper: GR00T N1: An Open Foundation Model for Generalist Humanoid Robots

Conclusion

NVIDIA's strategy in embodied intelligence is reshaping the embodied robotics landscape by positioning the company as both "the driver of underlying compute" and "the builder of the development ecosystem." The Jetson series of chips provides high-performance edge computing; combined with the Isaac/Omniverse development platforms and the GR00T general-purpose foundation model, it forms a full-stack technology loop from hardware to software.

At the strategic level, NVIDIA has moved early into the humanoid robot sector through investments in Figure AI and collaborations with leading companies such as Boston Dynamics. Jensen Huang's vision that "all moving machines will eventually become autonomous" is steadily being realized through deep technological integration. For instance, NVIDIA combines Omniverse digital twin technology, the Cosmos world foundation models, and the Isaac Sim simulation framework into a comprehensive physical AI system. This system lets robots validate behaviors and iterate on capabilities in virtual environments before transferring them to the real world (sim-to-real), significantly improving the development efficiency and adaptability of embodied intelligent robots.

At the commercial level, NVIDIA combines standardized hardware with open software ecosystems to foster industry-wide collaboration and accelerate the establishment of industry standards. From optimizing logistics scenarios for Amazon and manufacturing scenarios for Toyota to investing in and collaborating with Microsoft and OpenAI, NVIDIA is steadily consolidating its position as a core infrastructure provider for the era of embodied intelligence through a "computing power + models + tools" trinity.