Samsung Is Poised for a Major Leap! Local Large Model Set to Debut on S26—Is the On-Device AI Era Dawning?

12/22 2025

The optimal approach for mobile AI still lies in edge-cloud synergy.

Over the past year, smartphone manufacturers have ramped up their AI efforts. Honor's YOYO has expanded its integration with third-party services, bridging AI capabilities between the system and application layers. Huawei's Xiaoyi assistant can navigate across apps with a single command, streamlining task completion.

Despite these increasingly powerful capabilities, a closer look reveals a practical limitation: these AI functions still fundamentally depend on an internet connection. In essence, mobile AI remains in the edge-cloud collaboration phase and has yet to achieve a further breakthrough.

Recently, X user Semi-retired-ing claimed that Samsung plans to equip its upcoming Galaxy S26 series with a local large model capable of running most AI functions directly on the device. The model reportedly even has elevated system privileges that allow it to clear memory when necessary, so that it can respond to user requests at any time.

(Image source: Oneleaks)

In fact, Samsung unveiled a local large model named 'Gauss' back in 2023, and it was rumored to come pre-installed on the Galaxy S25 series. For reasons that remain unclear, however, Samsung went on to heavily promote Google's Gemini while rarely mentioning Gauss, and talk of a local large model only resurfaced recently.

Why is Samsung attempting to embed models directly into phones when most manufacturers still depend on cloud-based solutions? Is this an effort to 'leapfrog the competition,' or has the mobile ecosystem reached a stage where local large model deployment is viable? Regardless of the answer, one thing is certain: a new chapter in mobile AI is about to unfold.

Smartphone makers won't abandon edge-cloud synergy

If Samsung truly deploys large models locally, does this signal a shift away from edge-cloud collaboration toward purely on-device solutions? In reality, such a transition is unlikely in the near term.

Edge-cloud collaboration remains close to the ideal solution for mobile AI today. The cloud handles model scaling, complex reasoning, and rapid iteration, and its abundant computing resources make model updates, unified management, and security reviews easier. The device, meanwhile, processes the initial stages of a user command, such as wake-word detection, speech recognition, and basic intent recognition, before offloading complex requests to the cloud.
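To make this division of labor concrete, here is a minimal Python sketch of the routing decision, assuming the device has already classified the user's intent. The intent names, the `ON_DEVICE_INTENTS` set, and the `needs_reasoning` flag are hypothetical illustrations, not any manufacturer's actual API.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    DEVICE = "device"
    CLOUD = "cloud"


# Hypothetical set of lightweight intents the phone can resolve on its own.
ON_DEVICE_INTENTS = {"wake", "set_alarm", "toggle_wifi", "open_app"}


@dataclass
class Request:
    intent: str            # result of on-device intent recognition
    needs_reasoning: bool  # e.g. multi-step planning or open-ended questions


def route(req: Request) -> Target:
    """Keep simple, known intents local; offload anything needing heavy reasoning."""
    if req.intent in ON_DEVICE_INTENTS and not req.needs_reasoning:
        return Target.DEVICE
    return Target.CLOUD


print(route(Request("set_alarm", False)))         # Target.DEVICE
print(route(Request("summarize_webpage", True)))  # Target.CLOUD
```

The rule is deliberately crude: anything the device does not explicitly recognize goes to the cloud, which mirrors the "device first, cloud for the hard parts" split described above.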

This division of labor suits casual AI users well. A delay of a second or two when retrieving information does not noticeably degrade the experience, and because the approach places little load on the device, it works even on lower-performance phones. Samsung's plan to embed a large model in the Galaxy S26 series, by contrast, likely won't extend to older models, and that is the key distinction.

(Image source: Samsung)

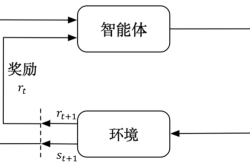

The problem emerges as AI is used more frequently. As mobile AI evolves, manufacturers want it to go beyond 'answering questions' to 'completing actions.' AI is shifting from a conversational interface toward understanding screen content, breaking down tasks, planning execution paths, and running complete AI agent workflows.

Once AI enters high-frequency, continuous, system-level interaction, the limitations of edge-cloud collaboration become evident. For example, slow cloud responses on a weak network cause noticeable interruptions, and a network drop in the middle of a multi-step command can halt the entire process, leaving the user with a poor experience.

This explains why manufacturers are increasingly discussing 'on-device large models.' However, this doesn't imply abandoning the cloud entirely but rather keeping more immediate judgments and critical decisions on the device itself. Edge-cloud collaboration remains the optimal solution for this stage.
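The idea of keeping critical steps on the device can be illustrated with a minimal Python sketch of a multi-step task that prefers the cloud but falls back to an on-device model when the connection drops mid-task. The handler functions and example steps are hypothetical; a real assistant would also need to manage state, permissions, and retries.

```python
from typing import Callable, List

# A handler turns one step of a plan into a result. The cloud handler may
# raise ConnectionError when the network drops.
Handler = Callable[[str], str]


def run_plan(steps: List[str], cloud: Handler, local: Handler) -> List[str]:
    """Run each step in the cloud, falling back to the on-device model on network failure."""
    results: List[str] = []
    for step in steps:
        try:
            results.append(cloud(step))
        except ConnectionError:
            # Instead of aborting the whole multi-step command, let the
            # (smaller) on-device model handle this step locally.
            results.append(local(step))
    return results


def flaky_cloud(step: str) -> str:
    # Hypothetical cloud handler that simulates a network drop on one step.
    if step == "fill in address":
        raise ConnectionError("network unavailable")
    return f"cloud:{step}"


def on_device(step: str) -> str:
    # Hypothetical on-device handler.
    return f"local:{step}"


plan = ["open food app", "fill in address", "confirm order"]
print(run_plan(plan, flaky_cloud, on_device))
# ['cloud:open food app', 'local:fill in address', 'cloud:confirm order']
```

Without the fallback branch, the `ConnectionError` on the second step would end the whole task, which is exactly the failure mode of a cloud-only design.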

What makes on-device large models challenging to implement?

Given these drawbacks of edge-cloud collaboration, why are local large models so difficult to deploy on smartphones? It's not that manufacturers are unwilling to try; the constraints are simply too severe.

First, hardware limitations. Memory, computing power, and energy efficiency are the three core requirements for on-device AI. Even a moderately sized model running continuously in the background strains system resources, a constraint that pushed Apple to increase the memory in its iPhones.

Second, stability and maintenance costs. Cloud models can be iterated quickly and their errors fixed immediately, whereas local models can only be improved through system updates. For system-level AI, that means higher risk and higher testing costs.

(Image source: Oneleaks)

However, 2025 has brought significant progress: chip capabilities have improved dramatically, bringing purely on-device large models close to feasibility.

Take Qualcomm's Snapdragon 8 Elite Gen 5 as an example. Its Hexagon NPU achieves roughly 200 tokens/s in local generative tasks, fast enough for continuous, natural language generation, which is a prerequisite for AI to handle complex interactive commands.

Similarly, MediaTek's Dimensity 9500 adopts a more aggressive power-efficiency design in its NPU 990. According to MediaTek, it improves generation efficiency on 3B-parameter on-device models while significantly reducing overall power consumption, which suggests that on-device models can now run more sustainably in the background.
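Some back-of-the-envelope arithmetic shows why these figures matter. The Python sketch below estimates the memory needed just for the weights of a 3B-parameter model at several precisions (taking 1 GB = 10^9 bytes) and how long a reply takes at roughly 200 tokens/s. The precision levels and the 300-token reply length are illustrative assumptions, not figures from Qualcomm or MediaTek; real deployments also need memory for the KV cache and runtime, so actual footprints are larger.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone (no KV cache or runtime overhead)."""
    return num_params * bits_per_weight / 8 / 1e9


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate a reply at a constant decoding speed."""
    return num_tokens / tokens_per_second


for bits in (16, 8, 4):
    print(f"3B model at {bits}-bit: {weight_memory_gb(3e9, bits):.1f} GB")
print(f"300-token reply at 200 tokens/s: {generation_seconds(300, 200):.1f} s")
# 3B model at 16-bit: 6.0 GB
# 3B model at 8-bit: 3.0 GB
# 3B model at 4-bit: 1.5 GB
# 300-token reply at 200 tokens/s: 1.5 s
```

Even at 4-bit precision, the weights alone take about 1.5 GB that must stay resident if the model runs in the background, which is consistent with the memory pressure described above.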

New devices equipped with the latest flagship chips are leveraging these computational gains to introduce various AI interaction features. For instance, Honor's YOYO agent on the Magic8 Pro supports automatic task execution across over 3,000 scenarios.

Yet, even with these advancements, relying solely on on-device AI for complex tasks remains challenging.

Even the Galaxy S26, rumored to ship with a local large model, reportedly needs to clean up system resources periodically to keep the model running. This shows that relying entirely on on-device models for complex AI tasks remains unrealistic in the short term.

On-device AI won't 'disrupt' but will define flagship differentiation

From the current strategies of mainstream manufacturers, edge-cloud collaboration remains the safest approach.

Take Huawei as an example: Xiaoyi remains the most comprehensive system-level AI assistant in China, covering voice interaction, system control, and cross-device collaboration. Its core architecture, however, still relies on edge-cloud collaboration, with the device handling perception and basic understanding while the cloud handles complex reasoning.

This isn't because manufacturers 'can't implement on-device solutions' but rather a pragmatic trade-off. As AI deeply integrates into system and service layers, stability, efficiency, and resource control take precedence over aggressive deployment.

Meanwhile, the most notable development this year is AI's attempt to take over 'operation rights,' that is, to operate the phone on the user's behalf. Doubao Mobile Assistant, for example, has moved large model capabilities into the interaction layer, so that AI not only answers questions but also understands screen content, plans actions, and even simulates user behavior across apps. This approach has generated considerable excitement across the industry.

(Image source: Doubao Mobile Assistant)

Doubao Mobile Assistant, Huawei Xiaoyi, Honor YOYO, Xiaomi's Super XiaoAi, and other 'self-driving' mobile AI assistants represent a forward-looking direction. As noted earlier, the ability to complete actions rather than merely answer questions is becoming a must-have for next-generation AI phones.

Regardless, on-device large models won't drastically alter the trajectory of mobile AI anytime soon. Whether it's Samsung, Huawei, or other major Chinese manufacturers, the current preference remains edge-cloud collaboration.

After all, smartphones weren't designed for large models, so manufacturers must balance performance, power efficiency, stability, and security. Once AI deeply intervenes in system operations, the user experience cannot be compromised, which is why manufacturers are reluctant to follow suit rashly.

From this perspective, on-device large models may not become the 'headline feature' of smartphone launches, but they will quietly raise the technical bar for flagship devices. This will open a gap in AI functionality between phones with on-device capabilities and those that rely solely on the cloud, and that divide may arrive sooner than expected.

Source: Leitech

Images from: 123RF Licensed Library