Technology Insight | Xiaomi YU7 Elevates Sentinel Mode: From Monitoring to Comprehension with Large Models

06/27 2025

Produced by ChiNeng Tech

At the recent Qualcomm Automotive Summit, Xiaomi Motors unveiled the 'Enhanced Sentinel Mode,' which we had the privilege to discuss in detail with the engineers.

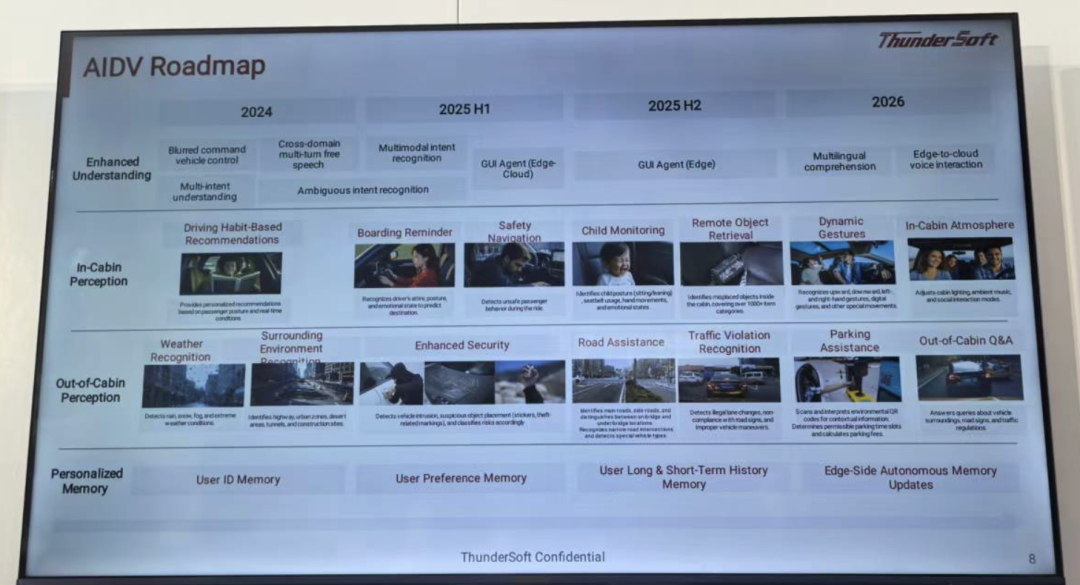

The unveiling shows how quickly large models are being integrated into smart cabins. Sentinel Mode, a core feature of smart-car security systems, is getting a substantial upgrade.

The Enhanced Sentinel Mode goes beyond traditional distance sensing and timed recording by adding behavior recognition and event summarization driven by large models. This makes alarms more accurate and the information they carry more useful, and it also raises new questions about privacy protection, compute requirements, and edge deployment strategies.

Below we look at how the mode works, what it requires of the model, what the chip must support, and how the user experience improves.

01

Sentinel Mode: Evolution Logic and Technical Nuances

The conventional Sentinel Mode relies on static physical triggers. For example, when someone comes within 5 centimeters of the car and lingers for more than 10 seconds, the system records a three-minute security video. This provides basic alarm capability, but it produces many false alarms, and the resulting footage is long and unfiltered.
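The rule described above can be sketched in a few lines. This is a minimal illustration, not Xiaomi's implementation: the thresholds are the figures quoted in the article, while `ProximitySample` and `first_trigger_time` are hypothetical names introduced here.

```python
from dataclasses import dataclass

# Thresholds as quoted in the article for the conventional mode.
TRIGGER_DISTANCE_CM = 5.0
DWELL_SECONDS = 10.0
CLIP_SECONDS = 180.0  # length of the recorded clip (three minutes)


@dataclass
class ProximitySample:
    """One hypothetical sensor reading."""
    t: float            # timestamp in seconds
    distance_cm: float  # distance from the car to the nearest person


def first_trigger_time(samples):
    """Return the timestamp at which recording would start, or None.

    Recording starts once someone has stayed within the trigger distance
    for more than DWELL_SECONDS without stepping back out.
    """
    entered_at = None
    for s in samples:
        if s.distance_cm <= TRIGGER_DISTANCE_CM:
            if entered_at is None:
                entered_at = s.t
            if s.t - entered_at > DWELL_SECONDS:
                return s.t
        else:
            entered_at = None  # person moved away, so reset the dwell timer
    return None


# Someone stands next to the car, sampled once per second for 12 seconds.
samples = [ProximitySample(t=float(i), distance_cm=3.0) for i in range(13)]
print(first_trigger_time(samples))  # 11.0
```

The rule only sees distance and time, so a person waiting next to the car and a person scratching the paint look identical to it. That is one way to read the false alarm problem the article describes.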

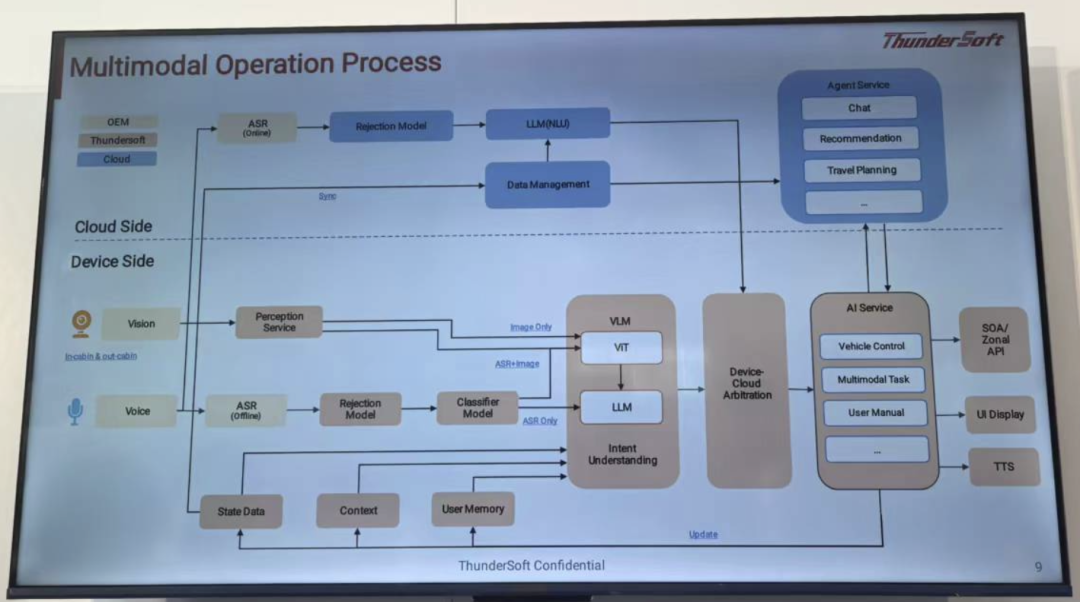

The Enhanced Sentinel Mode's core innovation lies in integrating 'large models' into the monitoring process. Shifting away from rule-based triggers, this mode employs large models with visual comprehension and language generation capabilities to dynamically analyze the external environment.

When it detects specific actions such as scratching, pulling door handles, kicking, or deflating a tire, the system triggers an event summarization module.

Each summary captures the key moments of the event and describes what happened in natural language, completing a closed loop of 'external abnormal behavior → event abstraction → structured presentation'.
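To make the three stages of that loop concrete, here is a small sketch under stated assumptions. The behavior labels come from the article; the `Detection` and `EventSummary` types, the confidence threshold, and the template sentence are hypothetical. In particular, the template stands in for text that the article says a large model generates.

```python
from dataclasses import dataclass

# Behavior labels named in the article; the detector itself is out of scope.
ALERT_BEHAVIORS = {"scratching", "pulling_door_handle", "kicking", "tire_deflation"}


@dataclass
class Detection:
    """Hypothetical output of the behavior recognition stage."""
    label: str
    start_s: float
    end_s: float
    confidence: float


@dataclass
class EventSummary:
    """Hypothetical structured record shown to the owner."""
    behaviors: list
    start_s: float
    end_s: float
    description: str


def summarize(detections, min_confidence=0.6):
    """Abstract alert-worthy detections into one structured event, or None."""
    hits = [d for d in detections
            if d.label in ALERT_BEHAVIORS and d.confidence >= min_confidence]
    if not hits:
        return None
    hits.sort(key=lambda d: d.start_s)
    labels = []
    for d in hits:
        if d.label not in labels:  # keep first-seen order, drop repeats
            labels.append(d.label)
    start = hits[0].start_s
    end = max(d.end_s for d in hits)
    # A fixed template stands in for the language model's generated text.
    description = "Detected {} between {:.0f}s and {:.0f}s.".format(
        ", ".join(label.replace("_", " ") for label in labels), start, end)
    return EventSummary(labels, start, end, description)


detections = [
    Detection("walking_past", 0, 3, 0.9),   # ignored: not an alert behavior
    Detection("kicking", 12, 14, 0.8),
    Detection("scratching", 5, 9, 0.7),
]
print(summarize(detections).description)
# Detected scratching, kicking between 5s and 14s.
```

The point of the structure is that the owner receives a short record with labels, a time span, and a sentence, rather than an unfiltered three-minute clip.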

This depends on the model's semantic understanding and scene analysis capabilities. Behavior recognition requires analyzing image sequences over time, while event summarization requires composing language from that understanding, which makes it a generative task.

Models capable of such tasks typically have at least 5 to 6 billion parameters, and that size sets the compute threshold for running them locally on the vehicle.
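One reason parameter count matters for on-vehicle execution is the memory needed just to hold the weights. The rough arithmetic below is an illustration only: the model sizes are ones the article mentions, but the 16-, 8-, and 4-bit precisions are assumptions, and the estimate ignores activations, caches, and runtime overhead.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights * bits / 8 bytes, divided by 1e9,
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_weight / 8


# Model sizes mentioned in the article; precisions are assumed for illustration.
for params in (2, 6, 7, 14):
    row = ", ".join(
        f"{bits}-bit: {weight_memory_gb(params, bits):.1f} GB"
        for bits in (16, 8, 4)
    )
    print(f"{params}B parameters -> {row}")
```

Under these assumptions a 7 billion parameter model needs about 3.5 GB for weights at 4 bits per weight and 14 GB at 16 bits, which gives a sense of why model size and on-device feasibility are tied together.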

02

Chip Support and the Evolution of Local Deployment Capabilities

The Enhanced Sentinel Mode's implementation hinges not only on model capabilities but also on the computing platform's ability to support its operations.

Current mainstream cabin chips such as the 8295, despite offering approximately 200 TOPS of computing power, are benchmarked for models of 2 billion parameters or fewer, which is insufficient for behavior recognition and summarization. This function therefore needs a higher-end chip for local deployment.

The Qualcomm 8797 chip marks a pivotal shift. It supports large models of 7 billion or even 14 billion parameters and has significantly more NPU compute, reaching an on-device inference speed of 40 tokens per second (TPS) with a target of 50 to 60 TPS. This is enough to clear the basic thresholds for response speed and concurrency in generative tasks, enabling real-time image recognition, behavior classification, event organization, and summarization on the vehicle.
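To put the throughput figures in perspective, the sketch below converts them into the time needed to generate one event summary. It reads TPS as tokens per second, assumes a hypothetical summary length of 80 tokens, and ignores the time spent processing the input before the first token appears, so the numbers are rough lower bounds rather than measurements.

```python
def generation_seconds(summary_tokens, tokens_per_second):
    """Time to emit a summary at a steady decoding rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return summary_tokens / tokens_per_second


SUMMARY_TOKENS = 80  # hypothetical length of one event summary

# 40 is the current figure quoted in the article; 50 and 60 are the stated goal.
for tps in (40, 50, 60):
    seconds = generation_seconds(SUMMARY_TOKENS, tps)
    print(f"{tps} tokens/s -> {seconds:.2f} s per summary")
```

At 40 tokens per second the assumed summary takes 2 seconds to generate, and reaching 60 tokens per second would cut that to about 1.3 seconds.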

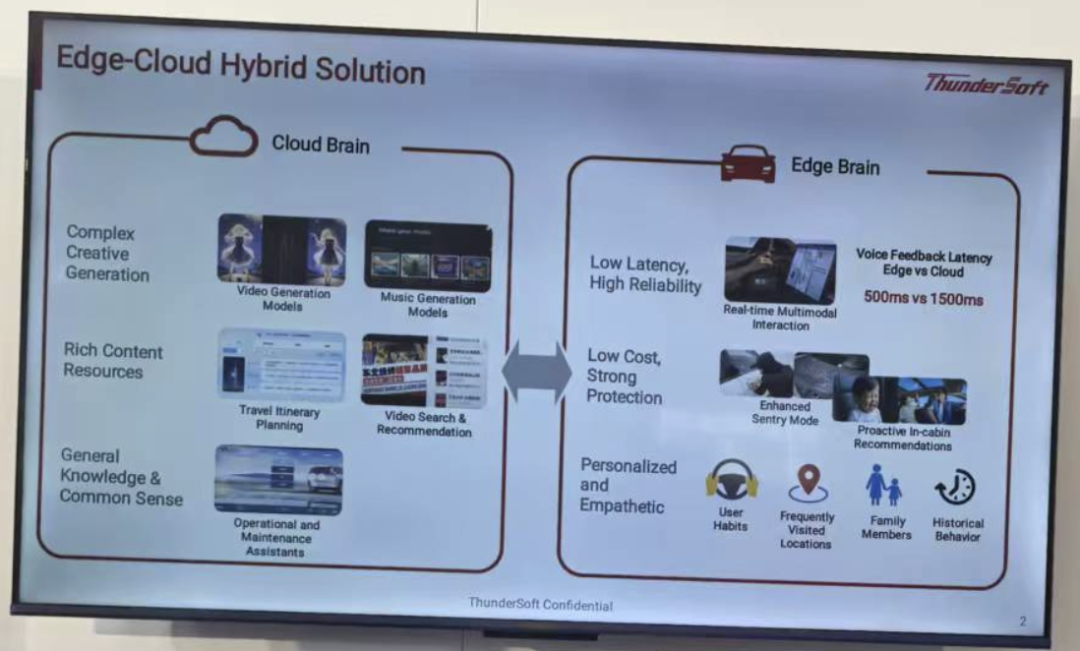

Local deployment on the Qualcomm 8797 improves response times and also strengthens privacy. All model inference, user behavior recognition, and in-car conversation processing happen locally, which reduces the data leakage risks associated with traditional cloud solutions.

For instance, the system can analyze in-car voice through a locally running 14 billion parameter model to generate user profiles (like 'Mr. Wang enjoys classical music and frequents Hongqiao Airport'), enabling personalized voice commands such as 'open Mr. Wang's window'.

Compared to cloud solutions, local processing reduces the average response time from 1-2 seconds to approximately 0.2 seconds, significantly enhancing the interaction experience.

More broadly, deploying large models locally does more than improve the Sentinel Mode: it also gives in-car systems a unified multimodal perception framework.

For example, the 8397 chip supports 7-8 billion parameter models in high-end cabins, offering multimodal fusion functions including DMS (Driver Monitoring System) and OMS (Occupant Monitoring System), establishing a more comprehensive cabin AI capability.

Summary

The Enhanced Sentinel Mode marks smart cars' transition from 'recording the scene' to 'comprehending the scene.' Using edge-deployed large models, it identifies threatening behaviors and presents events as structured summaries, so users get the relevant information faster and see fewer false alarms.

Core technological breakthroughs focus on two fronts: local inference of 5-14 billion parameter models through advanced chip computational power, and efficient collaboration between visual understanding and language generation models to establish automated event summarization capabilities. The local processing approach ensures data privacy and compliance, laying the groundwork for advanced security applications.