Is NVIDIA, the Universe's Top Stock, Reporting Another Stellar Performance?

09/05 2025

If there's one topic that has Wall Street's AI analysts nodding in unanimous agreement, it's undoubtedly the breakneck speed of AI development. Proving it requires no intricate charts or figures; one need only look at the growth in NVIDIA's financial statements. As the cornerstone of global AI development, NVIDIA's expansion is a microcosm of the entire AI era.

On the morning of August 28, NVIDIA released its financial report for the second quarter of fiscal year 2026 (the three months ended July 2025). Once again, NVIDIA delivered a 'blockbuster' performance.

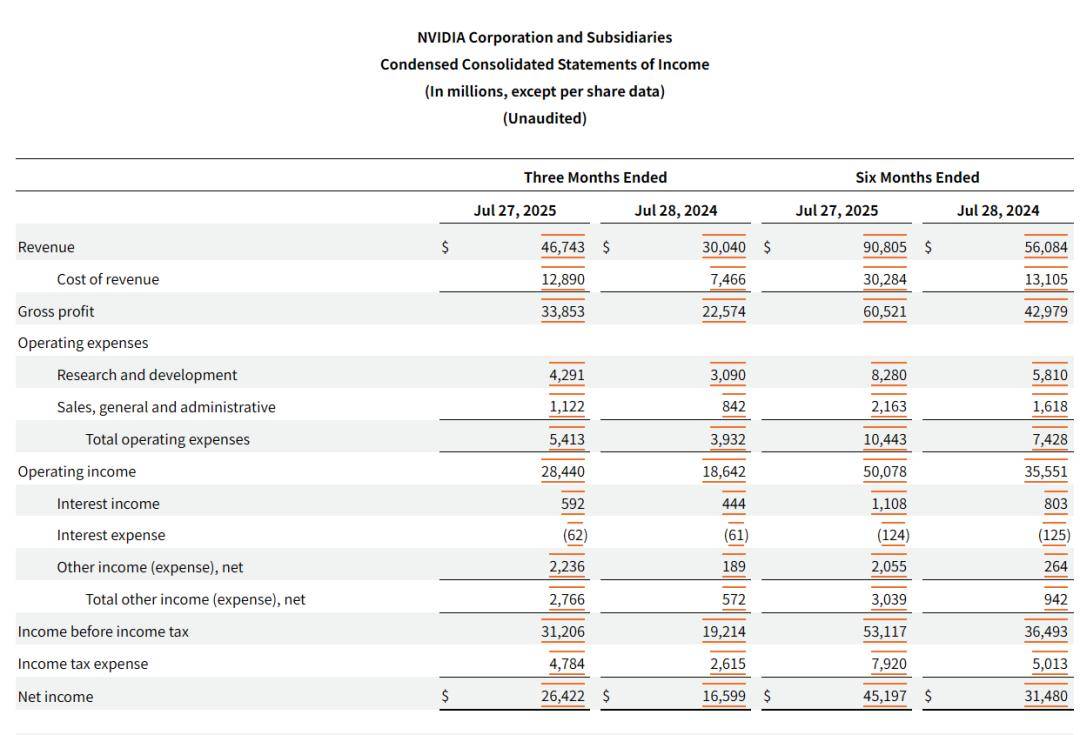

On the headline figures, NVIDIA's total revenue for the quarter reached $46.7 billion, up $2.7 billion quarter-over-quarter. The adjusted (non-GAAP) gross margin was 72.7%, slightly above market expectations. Net profit (GAAP) was $26.4 billion, up 57% year-over-year.

The primary driver of NVIDIA's sustained growth remains its data center business, the segment most closely tied to AI. Data center revenue for the quarter hit $41.1 billion, up 56.4% year-over-year and contributing nearly 90% of total revenue. Notably, this was achieved despite the adverse impact of the restrictions on H20 sales to China.

Looking ahead to the next quarter, NVIDIA anticipates revenue of $54 billion (excluding expected H20 sales to China), representing a quarter-over-quarter increase of $7.3 billion, with a gross margin (GAAP) of 73.3%, both indicating further growth.
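As a quick check on these figures, the sketch below backs out the prior quarter's revenue from the reported $2.7 billion increase and computes the quarter-over-quarter change implied by the $54 billion outlook. It uses only the rounded numbers in this article; the helper name `qoq_change` is illustrative and does not come from any NVIDIA source.

```python
# Figures in billions of US dollars, rounded as quoted in the article.
Q2_FY26_REVENUE = 46.7   # reported revenue, Q2 FY2026
QOQ_INCREASE = 2.7       # reported quarter-over-quarter increase
Q3_FY26_OUTLOOK = 54.0   # revenue outlook for Q3 FY2026


def qoq_change(previous: float, current: float) -> tuple[float, float]:
    """Return (absolute change, percentage change) between two quarters."""
    delta = current - previous
    return delta, delta / previous * 100


# Back out the prior quarter from the reported increase.
q1_fy26_revenue = Q2_FY26_REVENUE - QOQ_INCREASE

q2_delta, q2_pct = qoq_change(q1_fy26_revenue, Q2_FY26_REVENUE)
q3_delta, q3_pct = qoq_change(Q2_FY26_REVENUE, Q3_FY26_OUTLOOK)

print(f"Q1 FY26 revenue (implied): ${q1_fy26_revenue:.1f}B")
print(f"Q2 vs Q1: +${q2_delta:.1f}B ({q2_pct:.1f}%)")
print(f"Q3 outlook vs Q2: +${q3_delta:.1f}B ({q3_pct:.1f}%)")
```

With these rounded inputs, the outlook implies roughly 15.6% sequential growth, compared with about 6.1% in the quarter just reported.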

NVIDIA's confidence in its growth trajectory stems primarily from heavy investment by cloud service providers. Since 2023, the four U.S. giants (Google, Meta, Microsoft, and Amazon), along with Chinese counterparts such as Alibaba, Tencent, and Baidu, have ramped up their AI capital expenditures, especially aggressively in 2025. Google, Microsoft, and Meta all stated explicitly on their earnings calls that they would keep increasing investment in the second half of the year, a fundamental catalyst for NVIDIA's short-term performance.

However, NVIDIA is clearly not resting on the laurels of its data center business. The company is also exploring growth beyond computing power for AI training and inference.

In terms of new growth avenues, NVIDIA has set its sights on smart cars and embodied AI. These two domains are precisely where China excels.

Since this year's GTC Summit in Paris, NVIDIA CEO Jensen Huang has been highly active. Just last month, Huang lauded Chinese companies on various occasions and secured a series of collaborations in intelligent driving and embodied AI.

NVIDIA has collected numerous accolades in the AI era. Yet it has always recognized that it is not invincible.

How Impressive Can the Performance of the Universe's Top Stock Be?

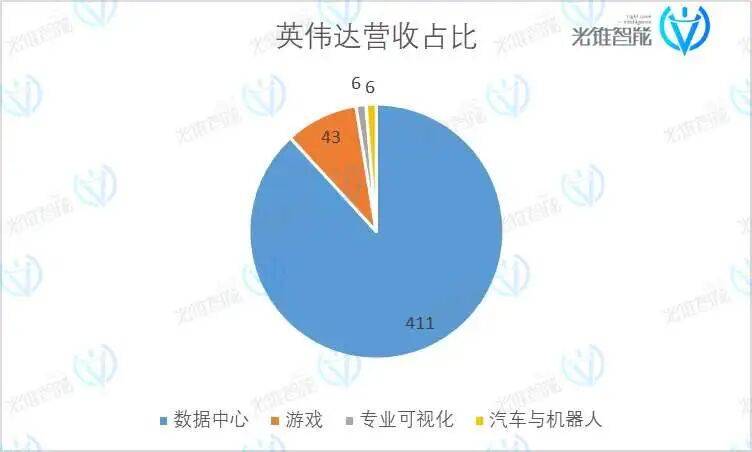

NVIDIA's business is primarily segmented into four major areas: data centers, gaming, professional visualization, and automotive and robotics.

The data center business (Data Center) encompasses NVIDIA's computing cards (including GPUs like H20 and A100), InfiniBand (network solutions), and the CUDA platform. Revenue for the quarter reached $41.1 billion, a year-over-year increase of 56.4%, accounting for 88% of total revenue. Over the past three years, driven by the boom in AI large models and IT infrastructure construction, the data center segment has been the absolute linchpin of NVIDIA's revenue and growth.

The gaming business (Gaming) primarily covers RTX gaming graphics cards and cloud gaming platforms; gaming is the business on which NVIDIA was built. This year, NVIDIA launched its latest generation of 50-series graphics cards, driving overall segment growth. Revenue for the quarter was $4.29 billion, up 48.9% year-over-year, accounting for 9% of total revenue.

For comparison, NVIDIA's 'longtime rival' AMD also grew its gaming business this quarter, with revenue of $1.1 billion, up 73% year-over-year. Even so, NVIDIA retains a significant advantage thanks to its RTX 50-series graphics cards. Among high-end gamers, AMD's graphics cards have fallen further out of favor this year: a typical high-end gaming PC build today pairs an AMD 'X3D' gaming CPU with an RTX 5070 Ti or higher.

Professional visualization (Professional Visualization) mainly encompasses NVIDIA's 3D rendering and simulation software, such as Omniverse used for 'digital twins' in the industrial sector. Revenue for the quarter was $600 million, a year-over-year increase of 32%, accounting for 1% of total revenue. Overall, this is an area where NVIDIA still has significant potential for development. Some core clients include animation companies like Pixar and Disney.

Finally, there is the automotive and robotics segment (Automotive). Its main products are NVIDIA DRIVE AGX Orin, DRIVE AGX Thor, and NVIDIA Jetson Thor. Revenue for the quarter was $590 million, up 69% year-over-year, accounting for 1% of total revenue. Within the auto industry, NVIDIA's chips meet most of the demand for high-level intelligent driving in smart cars. Many automakers also use NVIDIA's higher-compute Thor chips as the hardware platform for their next-generation intelligent driving systems built on VLA (vision-language-action) large models. In addition, as high-level intelligent driving spreads to more vehicles, many models equipped with Orin N chips have been released this year.

However, overall, the automotive business remains just a 'tiny speck' in NVIDIA's 'empire.'
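To see how lopsided the revenue mix is, the following sketch computes each segment's share of total revenue from the rounded figures quoted in this section. The dictionary layout and the `revenue_mix` function are illustrative; the small remainder is assumed to reflect rounding and any revenue reported outside these four segments.

```python
# Q2 FY2026 segment revenue in billions of US dollars, as quoted above.
SEGMENTS = {
    "Data Center": 41.1,
    "Gaming": 4.29,
    "Professional Visualization": 0.60,
    "Automotive and Robotics": 0.59,
}
TOTAL_REVENUE = 46.7  # company-wide revenue for the quarter


def revenue_mix(segments: dict[str, float], total: float) -> dict[str, float]:
    """Return each segment's share of total revenue, in percent."""
    return {name: rev / total * 100 for name, rev in segments.items()}


mix = revenue_mix(SEGMENTS, TOTAL_REVENUE)
for name, share in mix.items():
    print(f"{name:<28} {share:5.1f}%")

# Assumed to be rounding plus revenue outside the four listed segments.
residual = TOTAL_REVENUE - sum(SEGMENTS.values())
print(f"Unallocated remainder: ${residual:.2f}B")
```

The shares come out to about 88% for data center and 9% for gaming, with the other two segments near 1% each, in line with the percentages above.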

NVIDIA sustained high-speed growth throughout the second quarter of fiscal year 2026, while also maintaining exceptionally high earnings quality.

The financial report shows that NVIDIA's gross margin (GAAP) for the quarter was 72.4%, up 12 percentage points from the previous quarter and back at NVIDIA's traditionally robust level. The lower gross margin in the previous quarter was primarily due to H20 inventory write-downs and the production ramp of the latest Blackwell computing cards.

NVIDIA's gross margin of over 70% is attributed to its position as a fabless company in the semiconductor industry. Although GPUs are NVIDIA's main products, the company does not manufacture them itself. In essence, NVIDIA sells software and solutions, so its profit margins cannot be compared directly with those of IDM (integrated device manufacturer) companies such as Intel. Measured against the world's dominant software companies, NVIDIA's margins are at a normal level.

In terms of expenses, NVIDIA's research and development (R&D) expense ratio for the quarter was 9.2%. The company's R&D investment increased by $300 million quarter-over-quarter, with the overall R&D expense ratio remaining relatively stable. The sales and administrative expense ratio was 2.4%, maintaining a normal and stable level.
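The expense ratios can be turned back into approximate dollar amounts. This back-of-envelope sketch assumes the prior quarter's revenue equals $46.7 billion minus the reported $2.7 billion increase, and treats the 9.2% and 2.4% ratios as exact; since both are rounded, the results are estimates, not reported line items.

```python
# Inputs from the article: billions of US dollars, ratios as fractions.
REVENUE_Q2 = 46.7
REVENUE_Q1 = REVENUE_Q2 - 2.7   # implied by the reported QoQ revenue increase
RD_RATIO_Q2 = 0.092             # R&D expense / revenue, Q2
SGA_RATIO_Q2 = 0.024            # sales and administrative expense / revenue, Q2
RD_INCREASE = 0.3               # QoQ increase in R&D spending

# Implied dollar amounts (estimates derived from rounded ratios).
rd_q2 = RD_RATIO_Q2 * REVENUE_Q2
sga_q2 = SGA_RATIO_Q2 * REVENUE_Q2
rd_q1 = rd_q2 - RD_INCREASE

# Prior-quarter R&D ratio, to test the "relatively stable" description.
rd_ratio_q1 = rd_q1 / REVENUE_Q1

print(f"Implied Q2 R&D: ${rd_q2:.2f}B, implied Q2 S&A: ${sga_q2:.2f}B")
print(f"R&D ratio: Q1 {rd_ratio_q1:.1%} vs Q2 {RD_RATIO_Q2:.1%}")
```

The implied prior-quarter R&D ratio lands near 9.1%, against 9.2% this quarter, which fits the "relatively stable" characterization.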

It should be noted that NVIDIA's performance this quarter was still affected by the H20 ban. However, overall, NVIDIA continued to maintain high growth. According to the earnings call, NVIDIA anticipates revenue of $54 billion for the next quarter (excluding expected H20 sales to China), representing a quarter-over-quarter increase of $7.3 billion.

Under the PEG valuation method, which treats a PEG ratio (the P/E ratio divided by the expected annual earnings growth rate in percent) of about 1 as fair value, NVIDIA's trailing-twelve-month (TTM) P/E of 50 implies an expected growth rate of about 50%. However, because NVIDIA finds it difficult to provide complete guidance, we can only roughly conclude, based on AI industry trends, that NVIDIA's current market capitalization of about $4 trillion is supported by the computing power demand from cloud providers (its main revenue source) plus AI applications, intelligent driving, and embodied AI (secondary sources).
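The PEG reasoning can be made explicit in a few lines. This is a minimal sketch assuming the common rule of thumb that a PEG of about 1 marks fair value; the 40% and 60% growth rates in the usage lines are hypothetical inputs, not forecasts.

```python
def peg_ratio(pe: float, growth_pct: float) -> float:
    """PEG = P/E divided by expected annual earnings growth, in percent."""
    if growth_pct <= 0:
        raise ValueError("PEG is undefined for non-positive growth")
    return pe / growth_pct


def implied_growth(pe: float, target_peg: float = 1.0) -> float:
    """Growth rate (in percent) at which the PEG equals target_peg."""
    return pe / target_peg


PE_TTM = 50  # trailing P/E cited in the article

print(implied_growth(PE_TTM))                          # growth needed for PEG = 1
print(peg_ratio(PE_TTM, 40), peg_ratio(PE_TTM, 60))   # hypothetical growth rates
```

At a P/E of 50, a PEG of 1 requires 50% expected growth; if growth came in at 40% the PEG would rise to 1.25, and at 60% it would fall to about 0.83.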

After reviewing this quarter's financial report, one cannot help but marvel at NVIDIA's status as the universe's top stock. NVIDIA not only generates high-quality profits but also does so at an increasingly rapid pace.

Riding the AI Rocket, Data Center Revenue Soars

Three years ago, NVIDIA was still a 'small player' with annual revenue of less than $50 billion and a market capitalization less than one-tenth of its current value, struggling to hold its own among tech giants.

What transformed NVIDIA into the highest-valued company in human history? Undoubtedly, it was the heavy reliance of AI large models, centered around Transformer technology, on GPUs for training and inference.

Put simply, today's AI large models have 'overturned' most earlier AI research paradigms. Driven by the Scaling Law, AI needs only sufficient computing power and data to quickly handle the traditional technical problems that AI researchers used to study separately, such as pattern recognition, denoising, computer vision (CV), and natural language processing (NLP). In the GPT era, older-generation AI researchers can look to the new generation like Kong Yiji, the pedantic scholar in Lu Xun's short story who prides himself on knowing four ways to write the character 'hui' (茴). Large models have ushered AI into an era of industrial-scale production.

During the three years when AI large models have 'dominated the charts,' the absolute growth in NVIDIA's gaming, visual design, and smart car businesses has not been significant. However, the data center business, most closely tied to AI, grew from $14.6 billion in Q2 of fiscal year 2023 to $41.1 billion today, causing the revenue structure to appear almost entirely dominated by the data center segment.

Over the past two years, NVIDIA has also made numerous improvements to its data center business.

At the software level, NVIDIA has continued to lower the technical barriers to AI software development. For example, one feature of this year's CUDA 13.0 release lets developers define blocks of data and specify the operations to perform on those blocks; put simply, AI code can now be 'built with blocks.' In addition, NVIDIA released the Nemotron model family, whose main purpose is to round out NVIDIA's comprehensive AI 'toolbox' and help enterprises customize and deploy their own AI agents.
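The 'define blocks, then specify operations on blocks' idea can be illustrated with a tiled matrix multiplication. This is a plain-Python sketch of the general tiling concept under our own assumptions, not the CUDA 13.0 API: the function name, tile size, and loop structure are hypothetical, and real GPU tile programming runs the per-tile work in parallel rather than in nested loops.

```python
def tiled_matmul(a, b, tile=2):
    """Multiply a (n x k) by b (k x m), working one tile x tile block at a time."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    # Outer loops pick which blocks of A, B, and C to work on.
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # Block operation: C block (i0, j0) += A block (i0, k0) @ B block (k0, j0).
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] = acc
    return c


a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
print(tiled_matmul(a, b))  # [[58.0, 64.0], [139.0, 154.0]]
```

The result does not depend on the tile size; tiling only changes how the work is grouped, which is what makes it a convenient unit for a programmer to reason about.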

At the hardware level, the Blackwell architecture NVIDIA introduced last year continued its philosophy of making GPU core chips 'bigger and stronger.' On the sales side, NVIDIA stated on its Q1 fiscal year 2026 earnings call that Blackwell is the fastest-ramping product in the company's history, contributing 70% of data center compute revenue and having successfully taken over from the previous-generation Hopper architecture products.

'H100 is sold out, H200s are sold out. Large CSPs [cloud service providers] are coming out, renting capacity from other CSPs. And so the AI native startups are really scrambling to get capacity so that they could train their reasoning models. And so the demand is really, really high.'

As Jensen Huang summarized, NVIDIA's products are once again in extremely high demand.

The global tech giants are the ones putting their money where their mouth is to support NVIDIA. Reviewing the earnings reports and market capitalization performances of tech giants over the past year, AI capital expenditures (Capex) have become a key factor influencing company market capitalizations.

Recently, U.S. AI companies announced another round of computing infrastructure plans. In July, Mark Zuckerberg said Meta would invest hundreds of billions of dollars to build several large-scale AI data centers. In August, Google and OpenAI each announced new data center projects. As Jensen Huang predicted, 'We see $3 trillion to $4 trillion in AI infrastructure spend by the end of the decade.'

On the Chinese company front, affected by the H20 incident, companies like ByteDance, Tencent, and Alibaba have not recently publicly disclosed specific information about their expansion of computing hardware. However, we can still analyze from the perspective of related products that China is also in the expansion phase of AI computing infrastructure. According to recent IDC forecasts, China's spending on generative AI-related network hardware (primarily used for interconnecting computing cards) will continue to accelerate, increasing from 6.5 billion yuan in 2023 to 33 billion yuan in 2028, with a compound annual growth rate of 38.5%.
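The IDC growth rate can be reproduced from its endpoints with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes five compounding years (2023 to 2028); the `cagr` helper is our own illustration.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


# IDC forecast cited above, in billions of yuan.
rate = cagr(6.5, 33.0, 2028 - 2023)
print(f"{rate:.1%}")
```

It yields about 38.4%, consistent with IDC's 38.5% once rounding of the endpoint figures is taken into account.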

Why are AI giants willing to continuously pay for AI computing hardware? Primarily because AI can generate tangible business value.

For example, Microsoft said on its latest earnings call that of the 39% year-over-year growth in its cloud computing business, 16 percentage points came from AI. Meanwhile Tencent, which has long been reluctant to discuss AI, 'uncharacteristically' highlighted the role of AI in its advertising, gaming, and cloud computing businesses on its recent earnings call, and later announced that it would disclose daily active users (DAU) for Yuanbao, its AI assistant, this year. This signals that AI revenue in China has also reached the stage where it can be publicly disclosed and quantified.

'The more you buy, the more you make.'

That is how Jensen Huang sums up the relationship between buying AI computing hardware and generating business revenue. Overall, NVIDIA's GPU business still has considerable support for its near-term performance. As Huang said this year at the advanced manufacturing event of the third China International Supply Chain Expo in Beijing, 'Artificial intelligence is the next major technological revolution, but it has only just begun.'

NVIDIA's Ambitions Extend Far Beyond AI Computing Hardware

Objectively speaking, NVIDIA's GPUs hold significant global dominance.

When artificial intelligence (AI) first began attracting widespread attention, many tech giants set out to develop custom computing chips for AI workloads, notably Google's TPU (Tensor Processing Unit) and Tesla's Dojo supercomputer. Recent reports, however, indicate that Tesla is scaling back its Dojo effort, while Google continues to place orders with NVIDIA. These developments underscore how quickly AI keeps evolving, in both core technology and hardware architecture, and highlight the broad appeal and value of NVIDIA's GPUs.

Jensen Huang put it succinctly: 'Accelerated computing diverges from general-purpose computing. You don't merely write software and compile it into a processor.'

On the other hand, as AI enters an era of surging inference demand, many companies are still trying to carve out a niche in the computing chip market, hoping to displace NVIDIA's GPUs in scenarios with relatively well-defined use cases.

For instance, Amazon's management stated explicitly at its re:Invent event in December 2024 that the share of self-developed chips used for AI training and inference would rise significantly. In China, companies including Huawei Ascend, Biren, MetaX, Enflame Technology, Moore Threads, Baidu Kunlunxin, and Yuntian Lifei have launched edge inference products or training-and-inference all-in-one machines that support DeepSeek models.

When it comes to applications in niche scenarios, NVIDIA has also made attempts to directly engage, but the outcomes have been rather underwhelming.

In March of this year, Jensen Huang announced a collaboration with General Motors to build an autonomous driving fleet. He projected that, with further collaborations with automakers such as Toyota and Mercedes-Benz, NVIDIA's autonomous driving business would bring in $5 billion in revenue by 2026. However, recent media reports quoted General Motors executives describing NVIDIA's assisted driving solution as 'rather alarming.'

Even a powerhouse like NVIDIA can only cater to a fraction of the market's demands.

So when NVIDIA's market value reached $4 trillion, making it the world's most valuable company, Jensen Huang was not overly elated. Instead, he visited China and lavished 'praise' on its smart car and embodied intelligence companies.

'Following the artificial intelligence (AI) boom, robotics is poised to become another major growth engine for chip manufacturers.'

So said Jensen Huang at NVIDIA's annual shareholder meeting in June 2025. Over the past two years, Huang has repeatedly championed embodied intelligence and smart cars. At the GTC summit in Paris in June 2025, he also remarked, 'The next decade will belong to autonomous driving and robotics... In the not-so-distant future, everything that moves will be robotic, and the next frontier will be automobiles.'

This year, NVIDIA amalgamated its automotive and robotics businesses in its financial report, with second-quarter revenue soaring to $590 million, marking a 69% year-over-year increase. A substantial portion of this remarkable growth rate can be attributed to the development of China's smart car and embodied intelligence industries.

From an industry vantage point, most Chinese models capable of urban NOA (Navigate on Autopilot) utilize NVIDIA's Orin X chips. The latest NVIDIA Thor chip has already been deployed in models from Li Auto and Zeekr.

On the embodied intelligence front, although the industry has yet to reach a consensus on the 'brain' computing power chip, according to Guangzhui Intelligence, renowned humanoid robot companies such as PM Robotics, Galaxy Man, UBTECH, and Unitree have already adopted NVIDIA's recently unveiled Jetson Thor chip (which can be regarded as the embodied intelligence version of the automotive Thor chip).

Since the dawn of this year, NVIDIA has significantly bolstered its cooperation with Chinese companies.

This reflects not only NVIDIA's recognition of China's AI large model capabilities but also China's 'absolute advantage' in smart cars and embodied intelligence. On revenue expectations for the Chinese market this year, Jensen Huang stated, 'I've estimated the China market to present about a $50 billion opportunity for us this year.'

In the smart car sector, the competition for high-level intelligent driving has once again entered a 'computing power race' this year. Under pressure from automakers developing their own high-compute chips, Li Auto is working at the algorithm and data levels to get the most out of the NVIDIA Thor chip's computing power. Intelligent driving suppliers such as SenseTime are also developing solutions based on NVIDIA Thor. Beyond these, leading intelligent driving players including BYD, Yuanrong Qixing, GAC Group, IM Motors, WeRide, Xiaomi, Zeekr, and Zhuoyu are actively embracing DRIVE AGX Thor.

Image: The NVIDIA Thor chip on display at SenseTime's booth at the 2025 Auto Show

On the robotics front, at this year's WRC (World Robot Conference), NVIDIA collaborated with over a dozen Chinese companies, including Zhongjian Technology, Fourier Intelligence, Beijing Humanoid Robot Innovation Center, LimX Dynamics, and UBTECH. These collaborations span scenarios such as industrial manufacturing, logistics, and retail. NVIDIA aspires to construct an infrastructure network of 'computing power-simulation-data' with embodied intelligence companies, mirroring the model established in automotive autonomous driving.

In the era of AI applications, NVIDIA finds customers everywhere.