Domestic Implementation of '100,000 GPU' Clusters Accelerates

06/29 2025

In the rapid evolution of artificial intelligence, computing power has become a core competitive factor. The amount of compute a company can field, which depends on how many accelerator cards it can deploy, is one of the most important determinants of large-model performance. A cluster of 10,000 NVIDIA A100 chips is widely regarded as the entry threshold for training a competitive large AI model.

In 2024, construction of intelligent computing centers in China moved onto a fast track, and the most notable trend was the accelerated rollout of 10,000 GPU cluster projects. A 10,000 GPU cluster is a high-performance computing system built from 10,000 or more dedicated AI accelerator chips such as GPUs and TPUs. It integrates high-performance GPU computing, high-speed network communication, large-capacity parallel file storage, and an intelligent computing platform, turning the underlying infrastructure into a single, extremely powerful 'computing beast.' Such clusters make it possible to train models with hundreds of billions or even trillions of parameters efficiently, which shortens the model iteration cycle and speeds up the evolution of AI technology.

However, as the AGI concept has gained momentum, the industry's demand for computing power has kept growing. The '10,000 GPU cluster' is no longer enough to keep up with this explosive growth, and the 'arms race' in computing power has escalated. Today, 100,000 GPU clusters have become a must-have for the world's top large-model companies. International giants such as xAI, Meta, and OpenAI have already made their moves, and domestic enterprises are not far behind in joining this competition.

01

Enormous Challenges for 100,000 GPU Clusters

Globally, leading technology companies such as OpenAI, Microsoft, xAI, and Meta are racing to build clusters of more than 100,000 GPUs. Behind these plans lies a staggering financial investment, with server costs alone exceeding $4 billion. Limited space and insufficient power supply in data centers also stand in the way of these projects.

In China, GPU procurement alone for a 10,000 GPU cluster costs up to several billion yuan, which is why only a handful of major companies such as Alibaba and Baidu were originally able to deploy clusters at that scale. The investment required for a 100,000 GPU cluster is far larger still.

Apart from financial costs, the construction of 100,000 GPU clusters also faces numerous technical challenges.

First is the extreme test of power delivery and heat dissipation. A cluster of 100,000 H100 GPUs requires approximately 150 MW for critical IT equipment alone, far more than a single data center building can carry. The cluster therefore has to be distributed across multiple buildings on a campus, which in turn means dealing with voltage fluctuations and power stability. The cooling system must also match the enormous thermal load: if the heat generated by high-density GPUs is not removed promptly, equipment goes down. The energy consumption and maintenance cost of the cooling solution have to be optimized at the same time. GPUs are sensitive hardware, and even day-to-day temperature swings can affect their failure rate. Moreover, the larger the cluster, the higher the probability that something fails. When Meta trained Llama 3 on a cluster of about 16,000 GPUs, it saw a failure roughly every 3 hours on average.
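To make the scale effect concrete, the Llama 3 data point can be extrapolated to a 100,000 GPU cluster. The following is a rough sketch, not a reliability model: it assumes failures are independent and that every GPU fails at the same constant rate, so the cluster-wide failure rate grows linearly with GPU count. The function name is illustrative.

```python
def cluster_failure_interval_hours(ref_gpus, ref_interval_hours, target_gpus):
    """Scale the mean time between failures from a reference cluster to a target size.

    Assumption: failures are independent and each GPU has the same constant
    failure rate, so the cluster failure rate is proportional to GPU count.
    """
    per_gpu_mtbf_hours = ref_gpus * ref_interval_hours  # implied MTBF of a single GPU
    return per_gpu_mtbf_hours / target_gpus


# Reference point from the text: 16,000 GPUs, one failure every 3 hours.
interval = cluster_failure_interval_hours(16_000, 3.0, 100_000)
print(f"Expected interval at 100,000 GPUs: {interval * 60:.0f} minutes")
```

Under these assumptions, a 100,000 GPU cluster would see a failure about every half hour, which is why checkpointing and fast fault recovery become central concerns at this scale.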

Furthermore, unlike the largely serial workloads of traditional CPU clusters, large-model training requires all GPUs to take part in parallel computation at the same time, which places far greater demands on network capacity. If a fat-tree topology is used to give every GPU full high-bandwidth connectivity, the hardware cost of the resulting four-tier switching fabric rises steeply. The 'computing island' model is therefore often adopted: high bandwidth within an island ensures communication efficiency, while reduced bandwidth between islands keeps costs under control. This, however, requires carefully balancing how communication is allocated under different parallelism modes such as tensor parallelism and data parallelism, so that the topology itself does not become a bandwidth bottleneck. Especially when models exceed a trillion parameters, front-end network traffic increases sharply as sparsity techniques are applied, which calls for a fine-grained trade-off between latency and bandwidth.
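The reason a fully connected fat-tree ends up with four switching tiers at this scale can be checked with the standard capacity formula for a non-blocking fat-tree built from identical switches: with radix k and L tiers, it supports at most 2 * (k/2)^L endpoints. The sketch below assumes 64-port switches and one network port per GPU; both are assumptions for illustration, not figures from the article.

```python
def fat_tree_max_endpoints(radix, tiers):
    """Maximum endpoints of a non-blocking fat-tree of identical switches.

    Switches below the top tier use half their ports downward and half upward,
    which yields 2 * (radix / 2) ** tiers endpoints.
    """
    return 2 * (radix // 2) ** tiers


def tiers_needed(radix, endpoints):
    """Smallest number of tiers whose capacity covers the requested endpoints."""
    tiers = 1
    while fat_tree_max_endpoints(radix, tiers) < endpoints:
        tiers += 1
    return tiers


RADIX = 64  # assumed switch port count

for gpus in (10_000, 100_000):
    print(gpus, "GPUs ->", tiers_needed(RADIX, gpus), "tiers")
```

With 64-port switches, three tiers top out at 65,536 endpoints, so 10,000 GPUs fit in three tiers while 100,000 GPUs push the design into a fourth. That extra tier of switches and optics is the cost the 'computing island' approach tries to avoid.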

Finally, compared with their American counterparts, Chinese large-model companies face a unique challenge. Because of export restrictions on advanced chips, domestic enterprises cannot simply adopt an all-NVIDIA solution as Musk's xAI has, and must instead use heterogeneous chips, including domestic GPUs. This means that even with the same 100,000 cards, domestic enterprises struggle to match American enterprises in total computing power.

Computing power is the core of large-model development, but scaling it is no longer a simple linear matter of adding cards. Building a 100,000 GPU cluster brings not only more computing power but also new technical and operational challenges, and managing such a cluster is fundamentally different from managing a 10,000 GPU cluster.

02

Domestic '100,000 GPU' Clusters Implementation Accelerates

'There's actually no need to worry about chip issues. Using methods such as stacking and clustering, the calculation results are comparable to the most advanced levels.' These remarks by Huawei founder Ren Zhengfei not only boosted confidence across society in China's AI development but also highlighted the key role of cluster computing in AI R&D and applications. From the earlier entry-level requirement of a '10,000 GPU cluster' to the new goal of a '100,000 GPU cluster,' the construction of domestic intelligent computing centers continues to reach new heights.

In September last year, the second phase of the 'Supercomputing Sea Plan,' a construction plan targeting a single ultra-large-scale cluster of 100,000 GPUs, was announced and launched. The name evokes 'the sea that takes in all rivers' and 'a tower built from grains of sand,' and the plan aims to establish a large-scale single cluster for model training. The second phase was reportedly initiated by Beijing Parallel Technology Co., Ltd. (hereinafter referred to as Parallel Technology). Partners taking part in the launch ceremony included Beijing Zhipu Huazhang Technology Co., Ltd., Beijing Mianbi Intelligence Technology Co., Ltd., Wuhan Branch of China Mobile Communications Group Hubei Co., Ltd., Wuhan Branch of China United Network Communications Co., Ltd., Wuhan Branch of China Telecommunications Corporation, Wuhan University Information Center, and Inner Mongolia Xindong Jitai Technology Co., Ltd.

In Helinger, Inner Mongolia, construction of the first phase of the 'Supercomputing Sea Plan,' which covers more than 50 acres, began in May this year. The project plans for 4,000 high-power intelligent computing cabinets of 20 kW each, enough to support a single intelligent computing cluster of up to 60,000 GPUs. The second phase has been planned and launched less than 100 meters from this site. It will rely on a single large cluster under unified management and scheduling, accommodating up to 100,000 GPUs.
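The first-phase figures can be sanity-checked with simple arithmetic. The sketch below uses only the numbers quoted above (4,000 cabinets, 20 kW each, up to 60,000 GPUs) and assumes, for illustration, that GPUs are spread evenly across cabinets and that the full cabinet rating is available to them; the function name is hypothetical.

```python
def site_budget(cabinets, cabinet_kw, gpus):
    """Derive total IT power and per-cabinet density from the planning figures.

    Assumes GPUs are spread evenly across cabinets (an illustrative simplification).
    """
    gpus_per_cabinet = gpus / cabinets
    return {
        "total_power_mw": cabinets * cabinet_kw / 1_000,
        "gpus_per_cabinet": gpus_per_cabinet,
        "kw_per_gpu": cabinet_kw / gpus_per_cabinet,
    }


budget = site_budget(cabinets=4_000, cabinet_kw=20, gpus=60_000)
for name, value in budget.items():
    print(f"{name}: {value:.2f}")
```

This works out to 80 MW of cabinet capacity, 15 GPUs per cabinet, and roughly 1.3 kW of cabinet power per GPU, which is in the same range as the 1.5 kW per GPU implied by the 150 MW estimate for a 100,000 H100 cluster cited earlier.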

At the end of July 2024, Gansu Yisuan Intelligent Technology Co., Ltd. invested 307 million yuan in Qingyang to build China's first domestic 10,000 GPU inference cluster. In June this year, Gansu Yisuan and its ecosystem partners announced plans to invest 5.5 billion yuan in a 'domestic 100,000 GPU computing power cluster,' providing no less than 2.5 petaflops of computing power services. It is expected to be completed and put into use by December 30, 2027. The planned cluster in Qingyang will use domestic chips and independent architectures, combining Qingyang's energy advantages with the technological strength of the Yangtze River Delta. The goal is a nationwide linkage of 'western computing power + eastern intelligence' and an open computing power platform that provides a 'Chinese foundation' for large-model training and scientific computing.

ByteDance's plans in intelligent computing are equally ambitious. In 2024, its capital expenditure reached 80 billion yuan, close to the combined total of Baidu, Alibaba, and Tencent (BAT), which was about 100 billion yuan. The figure is expected to double to 160 billion yuan in 2025, with 90 billion yuan going to AI computing power procurement and 70 billion yuan to data center infrastructure and supporting hardware. According to third-party estimates that use a 400 TFLOPS (FP16) AI computing card as the unit, ByteDance's current training demand is equivalent to approximately 267,300 cards and its text inference demand to approximately 336,700 cards. Future inference demand is expected to exceed 2.3 million cards.
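Because these estimates are expressed in normalized cards, they convert directly into aggregate compute. The sketch below multiplies the card counts quoted above by the 400 TFLOPS (FP16) standard; it reports peak theoretical throughput only and says nothing about achieved utilization. The function name is illustrative.

```python
TFLOPS_PER_CARD = 400  # FP16, the normalization standard quoted in the text


def cards_to_eflops(cards, tflops_per_card=TFLOPS_PER_CARD):
    """Convert a normalized card count to peak EFLOPS (1 EFLOPS = 1,000,000 TFLOPS)."""
    return cards * tflops_per_card / 1_000_000


demand_cards = {
    "training (current)": 267_300,
    "text inference (current)": 336_700,
    "inference (future)": 2_300_000,
}

for label, cards in demand_cards.items():
    print(f"{label}: {cards_to_eflops(cards):.2f} EFLOPS")
```

On this basis, current training and text inference demand together come to about 604,000 cards, or roughly 242 EFLOPS of peak FP16 compute.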

03

Domestic AI Chip Companies Benefit

In this wave of enthusiasm, domestic AI chip companies capable of building 100,000 GPU clusters will also benefit.

At the Huawei Developer Conference 2025 (HDC 2025) held on June 20, Zhang Ping'an, Executive Director and CEO of Huawei Cloud, announced the full launch of a new generation of Ascend AI cloud services based on CloudMatrix384 super-nodes, providing abundant computing power for large-model applications. By cascading 432 super-nodes, a supercomputing cluster of up to 160,000 cards can be built, meeting the training needs of large models with up to ten trillion parameters and breaking through the scaling limits of traditional architectures.

Each CloudMatrix384 super-node connects 384 Ascend NPUs and 192 Kunpeng CPUs in a fully peer-to-peer fashion over the new high-speed MatrixLink network, forming one super 'AI server' and lifting single-card inference throughput to 2,300 tokens/s.
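The '160,000' figure follows from the per-node counts. A minimal check, using only the numbers quoted above (432 super-nodes, each with 384 NPUs and 192 CPUs); the function name is illustrative:

```python
def cascade_totals(super_nodes, npus_per_node, cpus_per_node):
    """Total NPUs and CPUs when identical super-nodes are cascaded into one cluster."""
    return super_nodes * npus_per_node, super_nodes * cpus_per_node


npus, cpus = cascade_totals(super_nodes=432, npus_per_node=384, cpus_per_node=192)
print(f"{npus:,} NPUs and {cpus:,} CPUs")
```

The product is 165,888 NPUs, which the announcement rounds to 160,000 cards.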

The super-node architecture is better suited to inference for mixture-of-experts (MoE) large models, making 'one card, one expert' possible: a single super-node can host 384 experts running in parallel, which significantly improves efficiency. The super-node can also support 'one card, one computing task,' allocating resources flexibly, increasing task parallelism, reducing waiting time, and raising effective compute utilization (MFU, model FLOPs utilization) by more than 50%. In addition, the super-node supports integrated deployment of training and inference, such as 'inference by day, training by night,' so that capacity can be shifted between the two to help customers optimize resource utilization.
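The 'one card, one expert' idea can be illustrated with a toy placement: each MoE expert is pinned to its own card, and every token is dispatched to the cards that hold the experts its router selected. This is a conceptual sketch only; the function names and the identity placement are assumptions for illustration and do not describe Huawei's actual scheduler.

```python
from collections import Counter

NUM_CARDS = 384  # NPUs in one super-node, per the text


def place_experts(num_experts, num_cards=NUM_CARDS):
    """Map expert id -> card id, one expert per card (hypothetical identity placement)."""
    if num_experts > num_cards:
        raise ValueError("more experts than cards: one-card-one-expert is not possible")
    return {expert: expert for expert in range(num_experts)}


def card_load(token_expert_choices, placement):
    """Count how many token-to-expert dispatches land on each card."""
    load = Counter()
    for experts in token_expert_choices:
        for expert in experts:
            load[placement[expert]] += 1
    return load


placement = place_experts(384)
# Three tokens, each routed to its top-2 experts (made-up routing decisions).
choices = [(0, 5), (5, 383), (0, 383)]
print(card_load(choices, placement))
```

Because no two experts share a card, an expert's compute never contends with another expert's, and the per-card load is simply the number of tokens routed to that expert.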

Moreover, Baidu's Baige 4.0 has achieved efficient management of 100,000 GPU clusters through a series of product and technology innovations, including its HPN high-performance network, automated hybrid-parallel training partitioning strategies, and a self-developed collective communication library.

Tencent also announced last year the comprehensive upgrade of its self-developed Xingmai high-performance computing network. Xingmai Network 2.0, equipped with fully self-developed network equipment and AI computing power network cards, can support large-scale networking of over 100,000 GPUs, with network communication efficiency improved by 60% compared to the previous generation, increasing large model training efficiency by 20%.

Alibaba has also said that Alibaba Cloud can achieve efficient collaboration across chips, servers, and data centers, supports scalable clusters of up to 100,000 GPUs, and already serves half of the country's large-model companies.

04

Computing Power Internet and East-to-West Data Transmission Project Address Market Bottlenecks

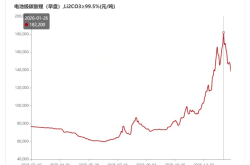

China currently faces a pronounced shortage of intelligent computing power, with large models' demand for compute growing far faster than the performance of individual AI chips. Relevant reports show that in 2023 China's demand for intelligent computing power reached 123.6 EFLOPS while supply was only 57.9 EFLOPS, a clear supply-demand gap. Using cluster interconnection to compensate for the performance shortcomings of single cards may be the approach most worth exploring at this stage to ease the shortage of AI computing power.
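The size of the gap follows directly from the two reported figures. A minimal sketch, with an illustrative function name:

```python
def supply_gap(demand_eflops, supply_eflops):
    """Return the absolute shortfall and the fraction of demand that supply covers."""
    gap = demand_eflops - supply_eflops
    coverage = supply_eflops / demand_eflops
    return gap, coverage


gap, coverage = supply_gap(demand_eflops=123.6, supply_eflops=57.9)
print(f"Shortfall: {gap:.1f} EFLOPS, supply covers {coverage:.1%} of demand")
```

In other words, the 2023 shortfall was about 65.7 EFLOPS, with supply meeting a little under half of demand.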

Once '100,000 GPU clusters' are built, the urgent question is how to realize their full value in suitable scenarios such as AI training and big data analysis while preventing resources from sitting idle or going to waste. Building intelligent computing centers is only the beginning; what matters more is using them effectively afterwards. In other words, the key lies in resolving market bottlenecks. Against this backdrop, the computing power internet and the East-to-West Data Transmission Project have been proposed and have received widespread attention.

The computing power internet is not a new network but an enhancement of the existing internet that interconnects computing resources dispersed across different locations. Using standardized computing power identifiers and protocol interfaces, it connects resources across domains so that heterogeneous computing power anywhere on the network can be sensed, discovered in real time, and acquired on demand. In essence, it is a network for moving computing power: it improves the interconnection and interoperability of resources, puts idle capacity back to work, raises utilization, lowers costs, and ultimately gives users a better experience.

On May 17, the China Academy of Information and Communications Technology and the three major telecom operators jointly announced the launch of the 'Computing Power Internet Test Network' and unveiled the 'Computing Power Internet Architecture 1.0.' The initiative aims to interconnect the operators' computing power with computing resources dispersed across society nationwide, covering general-purpose, intelligent, and supercomputing resources as well as public cloud, edge, and device-side resources, so that users can easily 'find, allocate, and use' computing power. In the future, users are expected to be able to buy computing power by the 'card-hour,' much as they buy electricity by the 'kilowatt-hour,' paying only for what they use.
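The 'find, allocate, and use' flow with card-hour billing can be pictured with a toy example. Everything in the sketch below is hypothetical: the `Offer` structure, provider names, and prices are invented for illustration and do not reflect the actual Computing Power Internet Architecture 1.0 interfaces.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    provider: str               # hypothetical provider name
    kind: str                   # "general", "intelligent", or "super"
    free_cards: int
    price_per_card_hour: float  # hypothetical price in yuan


def find_offers(offers, kind, cards):
    """'Find': offers of the requested kind with enough free cards, cheapest first."""
    matches = [o for o in offers if o.kind == kind and o.free_cards >= cards]
    return sorted(matches, key=lambda o: o.price_per_card_hour)


def card_hour_cost(offer, cards, hours):
    """'Use': pay only for the card-hours actually consumed."""
    return cards * hours * offer.price_per_card_hour


offers = [
    Offer("provider-a", "intelligent", free_cards=64, price_per_card_hour=12.0),
    Offer("provider-b", "intelligent", free_cards=16, price_per_card_hour=9.0),
    Offer("provider-c", "super", free_cards=128, price_per_card_hour=5.0),
]

best = find_offers(offers, kind="intelligent", cards=8)[0]
print(best.provider, card_hour_cost(best, cards=8, hours=10))
```

The point of the analogy with electricity is visible in `card_hour_cost`: the bill depends only on cards multiplied by hours, not on which provider's hardware happens to serve the job.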

The East-to-West Data Transmission Project (东数西算, also rendered 'East Data, West Computing'), for its part, aims to build a new, integrated computing power network system spanning data centers, cloud computing, and big data. It seeks to direct computing demand from the east to the west in an orderly manner, optimize the layout of data center construction, and foster coordinated development between the two regions. In February 2022, China began building national computing power hub nodes in eight regions (Beijing-Tianjin-Hebei, the Yangtze River Delta, the Guangdong-Hong Kong-Macao Greater Bay Area, Chengdu-Chongqing, Inner Mongolia, Guizhou, Gansu, and Ningxia) and planned 10 national data center clusters, marking the official, full-scale start of the project. Its core objective is for computing resources in the west to better support the processing of data from the east, thereby empowering digital development. This not only eases energy constraints in the east but also opens up new avenues of development in the west.

The synergistic advancement of the computing power internet and the East-to-West Data Transmission Project is expected to address market bottlenecks, optimize the allocation of computing power resources, and propel the sustained and healthy growth of China's AI industry. On one hand, the computing power internet facilitates the cross-regional and cross-industry circulation of computing resources, enhancing resource utilization efficiency. On the other hand, the East-to-West Data Transmission Project leverages the energy and land resource advantages of the west to reduce computing power costs while easing the pressure on data center construction in the east. These two initiatives complement each other, collectively offering solutions to the imbalance between supply and demand of intelligent computing power in China.

If 2024 marked the inaugural year of China's 10,000 GPU clusters, 2025 will witness the advent of 100,000 GPU clusters.