ByteDance, Tencent, Alibaba: The Epic Battle for AI Coding Supremacy

07/25 2025

Major tech giants are converging on the most promising Product-Market Fit (PMF) frontier: AI coding.

From July 21st onwards, ByteDance, Tencent, and Alibaba successively unveiled updates to their AI coding offerings: ByteDance's existing AI coding product Trae launched the Solo version, integrating context engineering to boost platform intelligence; Tencent introduced a comprehensive suite of tools for product deployment, effectively creating an 'AI full-stack engineer'; Alibaba, meanwhile, unveiled the large programming model Qwen3-Coder, directly challenging Claude 4 and focusing on model layer advancements.

Following Cursor's decision to stop offering models from Anthropic, OpenAI, Google, and others in the Chinese market, domestic tech heavyweights aim not only to replace Cursor but also to elevate the development experience. They want AI to move beyond its role as a programmer's 'co-pilot', handle the entire 'product design and research' process, and directly deliver finished products.

Compared to earlier this year, when AI served primarily as an assistant, AI coding has now achieved a significant leap, evolving from assisting programmers in code generation to independently completing tasks.

Over three consecutive days, ByteDance, Alibaba, and Tencent introduced tools/models enabling AI to seamlessly transition from coding to finished products.

With this wave of concentrated updates, AI is poised to take over coding tasks, and it is now expected to deliver not just high-quality code but also deployed, finished products.

Tencent's and ByteDance's coding product updates focus primarily on the product side. The premise is that a substantial gap separates engineering output from a showroom-ready product, and product capabilities aim to bridge it.

On July 21st, ByteDance announced the Solo version of Trae. Unlike the 1.0 stage, which centered on 'code generation', the new version advances to the 2.0 stage, empowering AI to independently complete 'software delivery'.

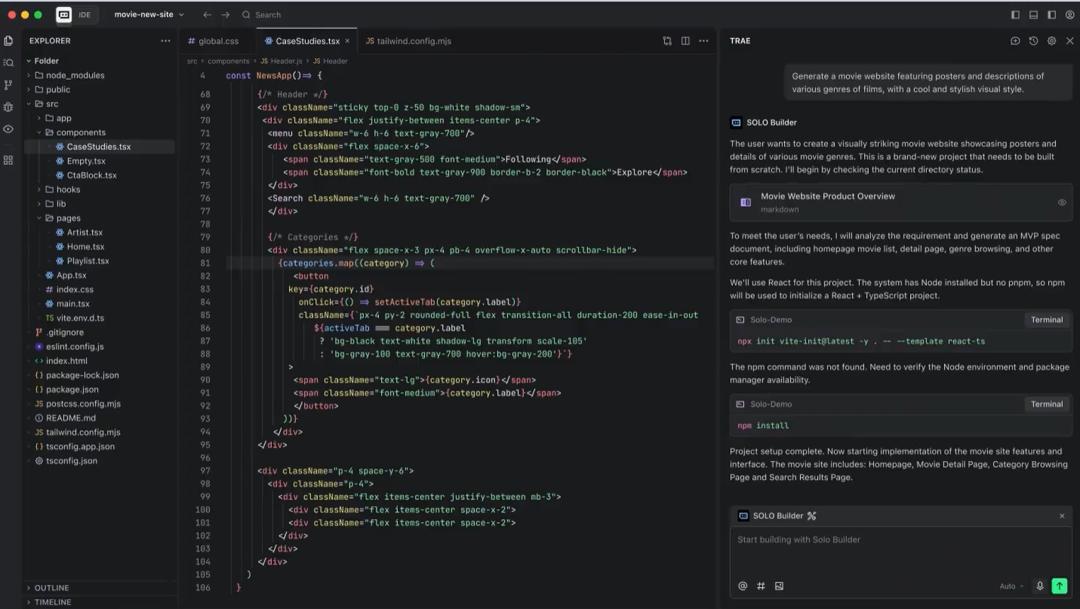

The previous version of Trae featured programming within an IDE interface, offering Build mode and Chat mode. Build mode mirrored a traditional IDE, where users input prompts on the right, and AI automatically generated code segments. Chat mode, on the other hand, facilitated code modification and issue resolution through conversation, making it ideal for fine-tuning.

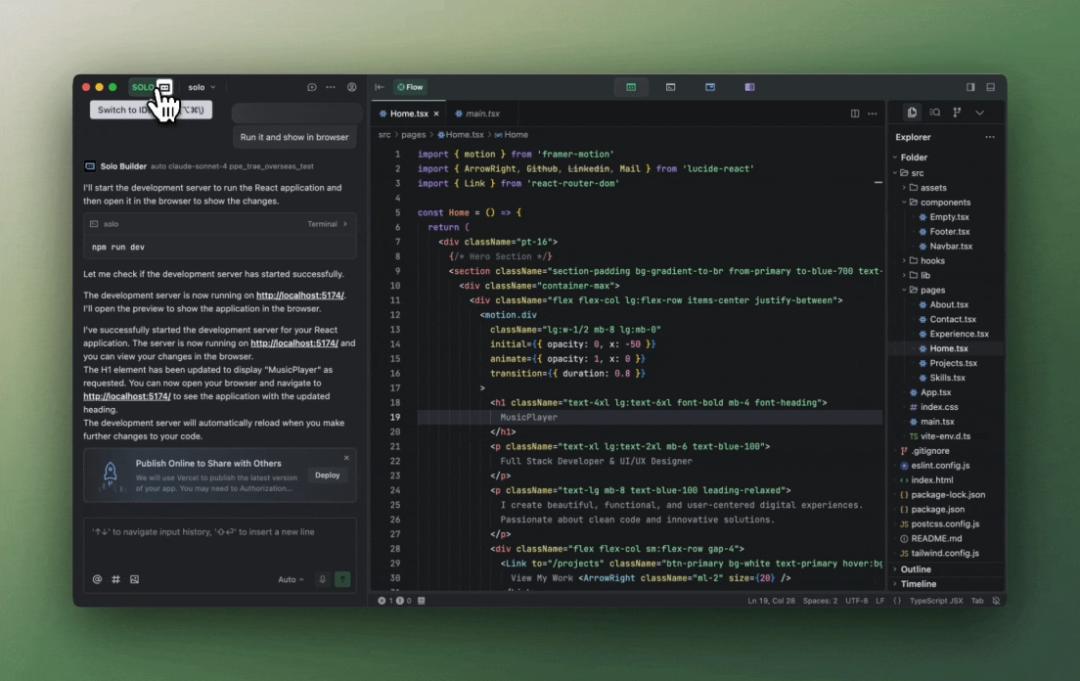

Trae 2.0 introduces a mode switch in the top-left corner, allowing users to toggle between the old IDE mode and Solo mode. In Solo mode, the AI dialog box is positioned on the left, while the right side displays AI-generated documents and coding information.

The integrated interface seamlessly blends Chat mode and Build mode. In the official demonstration, AI first analyzed requirements and generated a product document, which it then further disassembled. Based on the detailed requirements, AI proceeded to write the code.

Trae also needs to deploy the code and deliver a finished product. Hence, the new version includes a suite of web development tools, such as PRD (Product Requirement Document) documentation, UI design, and deployment, all integrated into the AI Builder in Solo mode.

This marks a significant shift from previous AI assistants that merely aided programmers in writing code. The new version of Trae enables developers with no programming experience to develop a complete product using the platform.

Beyond integrating the interface and development tools, Trae optimizes the Agent function's Context (context engineering) to bolster product capabilities and address the challenge of 'accurately understanding requirements'.

Reviews of Trae 1.0 were polarized: some found it enhanced efficiency, while others criticized the quality of AI responses. The code ran but was overly long and hard to work with, making bug detection time-consuming.

Past users often found that the generated code did not match their requirements after they entered a prompt. For instance, generating a women's clothing e-commerce website requires settling details that a short prompt rarely states, such as the target age group and whether a login interface is needed.

Context engineering addresses this issue by gathering the documents relevant to a task from previously uploaded requirements documents, code, configuration information, and other content. It picks the needed information out of a vast database and supplies it as context for AI to reference when generating content.
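The retrieval step described above can be illustrated with a minimal sketch. It is not how Trae implements context engineering, which the article does not detail; it assumes a toy word-overlap score in place of whatever retrieval a production system would use, and the names `select_context`, `tokenize`, and the sample documents are hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def select_context(requirement, documents, top_k=2):
    """Rank stored documents by word overlap with the requirement.

    Toy scoring for illustration only; documents with no overlap are dropped.
    """
    query = Counter(tokenize(requirement))
    scored = []
    for name, body in documents.items():
        words = Counter(tokenize(body))
        score = sum(min(count, words[word]) for word, count in query.items())
        if score > 0:
            scored.append((score, name))
    # Highest score first; ties broken by file name for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_k]]


# Hypothetical project files previously uploaded by the user.
docs = {
    "prd.md": "Target users are women aged 25 to 35. A login page is required.",
    "config.yaml": "payment provider sandbox keys and deployment region",
    "notes.txt": "Team lunch schedule for next week",
}

picked = select_context("Build a women's clothing store with a login page", docs)
# The selected documents are concatenated and passed to the model as context.
prompt_context = "\n\n".join(f"# {name}\n{docs[name]}" for name in picked)
print(picked)
```

Here the requirements document is selected because it shares words such as "women" and "login" with the request, so the model would see the target age group and the login requirement without the user restating them.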

Coincidentally, Tencent updated its coding assistant CodeBuddy the next day, introducing an internal beta IDE mode, emphasizing its position as the 'first AI full-stack engineer'.

Tencent optimized CodeBuddy so that users in product, design, and development roles can create a product with AI, regardless of their coding knowledge.

Beyond updating functions like PRD generation and deployment, CodeBuddy IDE focused on tool deployment details, making it more user-friendly for non-coders. It supports one-click conversion of Figma design drafts into websites and integrates with the backend deployment tool Supabase.

As development tools beyond coding are integrated into AI coding platforms, the target audience for ByteDance's and Tencent's coding assistants is evolving. The products once served programmers; now they let users generate usable code simply by describing requirements, with tooling that covers both the front end and the back end.

While ByteDance's and Tencent's updates compete for the 'domestic Cursor' status, Alibaba's latest open-source effort directly targets the foundation on which coding tools run: models.

Current AI coding products, including Cursor, ByteDance's Trae, and Tencent's CodeBuddy, offer multiple models for user selection. For instance, Trae's international version supports Claude 3.7 and GPT-4, while its domestic version supports DeepSeek-V3, DeepSeek-R1, and Doubao 1.5 Pro. Tencent's CodeBuddy includes Claude 3.7, Claude 4 Sonnet, the Gemini series, and GPT-4.

By integrating various models, platforms can earn revenue from API calls to popular models.

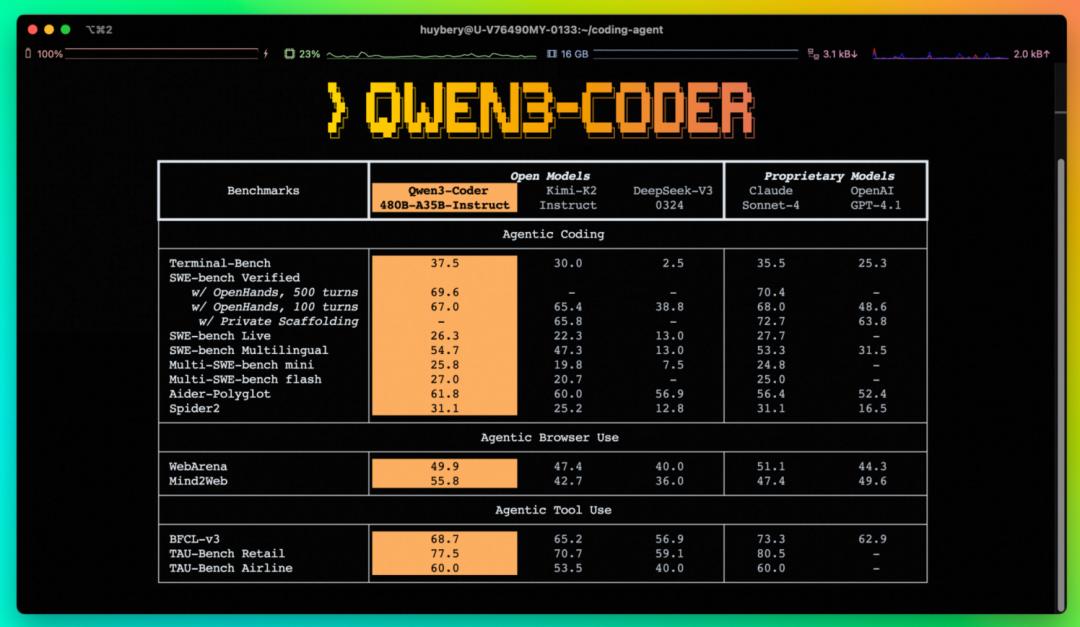

On July 23rd, Alibaba open-sourced its self-developed model Qwen3-Coder and released two closed-source models, Qwen3-Coder-Plus and Qwen3-Coder-Plus-2025-07-22. In performance comparisons, Qwen3-Coder directly challenged Claude 4, currently the most capable overseas model series for coding.

While not matching Opus's top-tier performance, Alibaba's open-source model keeps pace with and even surpasses Claude 4 Sonnet in multiple benchmark tests, such as Terminal-Bench and SWE-Bench. In terms of context length, Qwen3-Coder natively supports 256k Tokens and can be expanded to 1M, compared to Claude 4 Sonnet's 200k upper limit.

Alibaba recognizes that 'models are products.' While enhancing AI programming capabilities, it also focuses on improving Agent capabilities. This enables AI to output products at the model layer, like creating a rotating earth model or an AI mini-game, though complete product capabilities still rely on platform coordination and optimization.

In terms of performance, Qwen3-Coder is a viable replacement. On pricing, Alibaba bills by 'context length interval' in a bid to drive prices down. Under this tiered billing model, the cheapest tier costs only 4 yuan per million input tokens and 16 yuan per million output tokens. Even in the 128k-256k tier, which corresponds to Claude's maximum input length, its price is 50% lower per million input tokens and over 60% lower per million output tokens.
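To make the tiered billing concrete, here is a minimal sketch of how a per-request cost could be computed. Only the cheapest tier's prices (4 and 16 yuan per million tokens) come from the figures above; the `Tier` and `request_cost` names and the 32,000-token upper bound are assumptions for illustration, and the higher tiers are left out because their prices are not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    max_input_tokens: int  # upper bound of the context-length interval
    input_price: float     # yuan per million input tokens
    output_price: float    # yuan per million output tokens


# Prices of the cheapest tier are from the article.
# The 32_000-token bound is an assumed placeholder, not a confirmed figure.
TIERS = [Tier(max_input_tokens=32_000, input_price=4.0, output_price=16.0)]


def request_cost(input_tokens, output_tokens, tiers=TIERS):
    """Return the cost of one request in yuan.

    The tier is chosen by input length, mirroring billing by
    context-length interval.
    """
    for tier in sorted(tiers, key=lambda t: t.max_input_tokens):
        if input_tokens <= tier.max_input_tokens:
            total = (input_tokens * tier.input_price
                     + output_tokens * tier.output_price)
            return total / 1_000_000
    raise ValueError("input length exceeds every configured tier")


# 20,000 input tokens and 2,000 output tokens in the cheapest tier.
print(request_cost(20_000, 2_000))
```

Under these assumptions, a request with 20,000 input tokens and 2,000 output tokens costs about 0.112 yuan. Adding further `Tier` entries would extend the calculation to longer contexts.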

The competition spans products and models. With these clustered releases by tech giants, the AI coding war sends a signal: the favored AI coding track has proven its value and entered a stage where big tech companies are ready to 'reap the fruits'.

Unlike Agents, still in their initial 'pioneering' stage this year, the decisive battle for AI coding will be fought in the second half of 2025.

In March, OpenAI's Chief Product Officer Kevin Weil predicted that by the end of 2025, AI coding would achieve 99% automation. Anthropic's CEO Dario Amodei went further, boldly predicting that within 3 to 6 months, AI would write 90% of code, and within 12 months, AI would take over almost all coding.

Recent developments suggest these aggressive predictions are not baseless. Unlike Agents still being refined within enterprises, AI coding products are already being tested.

Anthropic's Claude Code stands out, with AI handling up to 80% of internal coding work. The company shared cases of Claude Code being used by 10 teams, covering code generation, debugging, refactoring, and testing. Tencent reports that CodeBuddy IDE, currently in internal beta, has an 85% usage rate among its internal product, design, and development teams.

These large-scale internal applications signal that AI coding has moved beyond experimentation and is genuinely being used, presenting significant earning potential.

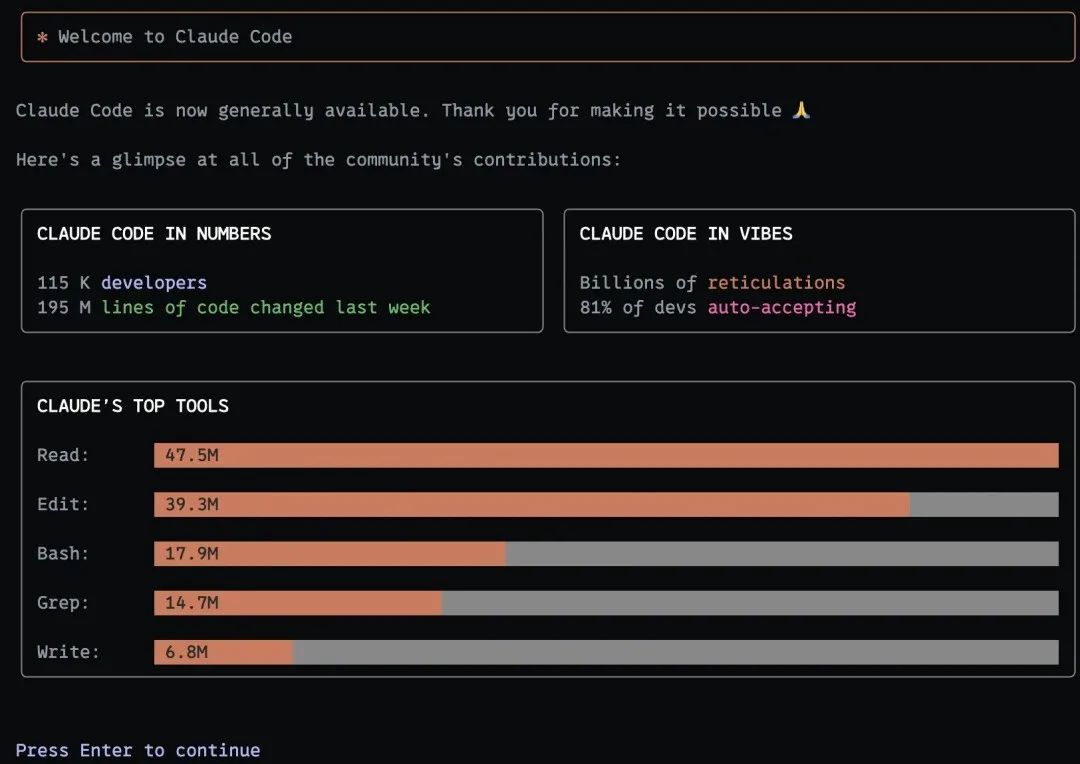

Anthropic's Claude Code attracted 115,000 developer users in four months, with an estimated annual revenue of $130 million. ByteDance's Trae surpassed 1 million monthly active users within six months of launch.

In one week, Claude Code processed 195 million lines of code.

Tech giants without a presence in this area are either developing solutions or acquiring existing ones. OpenAI attempted to acquire Windsurf, an AI programming assistant with 800,000 users, for $3 billion. Although the deal fell through and Windsurf's CEO was poached by Google, the attempt underscores the AI coding field's attractiveness and the giants' desire to seize the opportunity.

With significantly improved usability, domestic AI coding software aims to expand its user base beyond professional programmers, reaching a broader audience.

However, whether non-programmer developers will truly embrace these products remains uncertain. For users without a programming foundation, coding errors or output that does not match the requirements can stand in the way of a satisfactory product.

David Heinemeier Hansson, creator of the open-source framework Ruby on Rails, shared in a podcast, "The ability to edit and correct code is built on whether you have creative abilities. Just like someone who edits a book usually also needs to have writing abilities."

Yet it is clear that AI coding now competes on more than quality improvement; tool integrations significantly reduce the time developers spend.

In the impending AI coding battle, model manufacturers and cloud providers will undoubtedly be the biggest beneficiaries. Code generation and optimization consume substantial computing resources and model inference capacity, creating lucrative opportunities for big tech companies with cloud infrastructure and for model developers that sell API access.

The heavy token consumption of coding makes it a profitable business. For instance, Anthropic revealed to investors that Claude Code's current annualized revenue exceeds $200 million, contributing over $16.7 million per month. This underscores AI coding's immense commercial potential.

Domestic manufacturers are also entering the fray to seize market share.

Alibaba's strategy focuses on both the foundation and the product, further strengthening its say in the market. In the era where 'models are Agents,' Alibaba's release of its self-developed large model Qwen3-Coder underscores this. With performance comparable to Claude 4 Sonnet at half the price, and with geopolitical considerations in its favor, Alibaba aims to become the 'replacement' model of choice for domestic coding products.

Tencent and ByteDance cleverly employ the 'free' card as a competitive strategy. One of the key selling points of Tencent's newly launched CodeBuddy IDE mode is its offer to let users utilize the Claude 4 model for free. Similarly, ByteDance's Trae has supported free access to Claude 3.7 in its international version since its inception.

However, given the high cost of these models, limited open access remains the standard practice. Consequently, utilizing invitation codes and similar methods to create exclusivity has become a widespread strategy employed by major companies to broaden their user base.

Even if it means investing heavily, these companies are willing to make a splash. So, who will truly emerge as the domestic leader in this field, earning the title of "domestic Cursor"?