Designing an Autonomous Driving Perception System for Optimal Safety

07/25 2025

As autonomous driving technology matures, the design and implementation of the perception system have become paramount in ensuring driving safety. This system not only gathers environmental information but also determines the vehicle's ability to respond to emergencies and its overall safety margin. To achieve high reliability, availability, and robustness in a diverse and dynamic real-world road environment, it is essential to harmonize technical architecture, hardware deployment, software algorithms, system redundancy, and safety management across multiple levels and aspects to construct a resilient perception system.

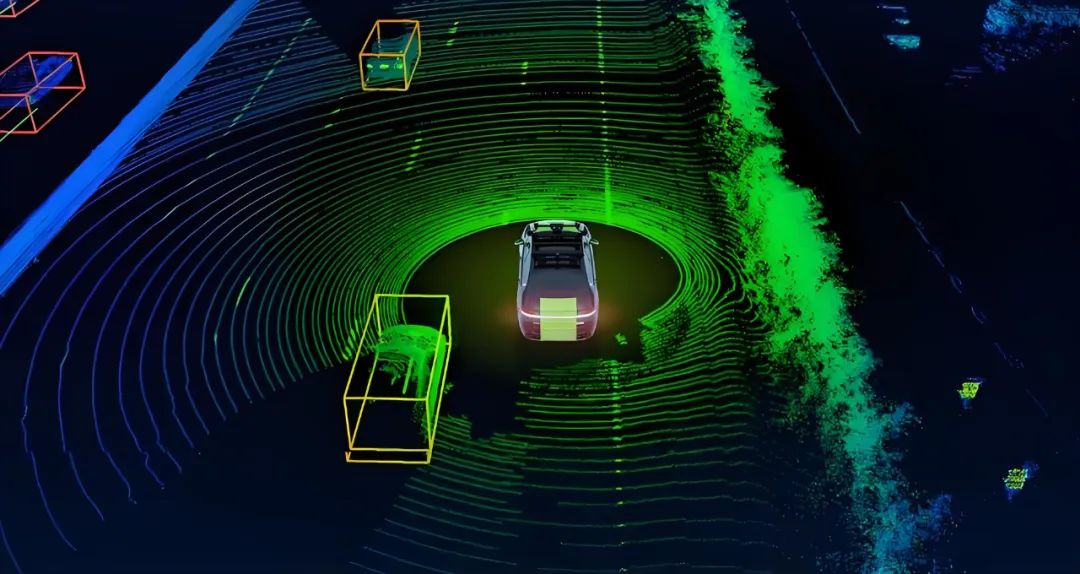

The crux of the autonomous driving perception system lies in "seeing" the traffic environment, which requires sensors based on different physical principles to work in tandem. High-resolution LiDARs provide centimeter-level 3D point cloud data, accurately capturing the shapes and distances of surrounding objects. Millimeter-wave radars penetrate water mist and dust, enabling stable target detection in adverse weather such as rain, snow, and fog. Vision cameras use rich color and texture information for semantic recognition, helping to distinguish traffic signs, lane lines, pedestrian clothing colors, and traffic-light states. Ultrasonic sensors play a vital role in low-speed parking and close-range obstacle detection, while Inertial Measurement Units (IMUs) and high-precision GNSS positioning (RTK/PPP) continuously output the vehicle's attitude and precise position, furnishing reliable priors for target tracking and prediction. Distributing these heterogeneous sensors across the vehicle's front, sides, rear, and roof not only enables 360-degree environmental monitoring but also provides redundancy if any single sensor fails.

To keep a single point of failure from bringing down the system, critical components must be designed with redundancy and isolated from one another in power supply and communication. For instance, primary and backup LiDARs can operate in parallel. When the primary device malfunctions due to physical collision or other issues, the system can switch to the backup device within milliseconds, maintaining 3D perception capability. Similarly, cameras should be arranged around the front, rear, left, and right of the vehicle with overlapping fields of view, so that when any one camera fails, its neighbors can cover part of the lost field of view. Between the control unit and sensors, dual network channels or a star-ring hybrid topology should be established, because the cross-connected links tolerate local link breaks and communication congestion. All connectors, cables, and housings used by sensors and controllers must meet industrial-grade or even aerospace-grade standards for vibration resistance, high and low-temperature tolerance, dustproofing, and waterproofing, ensuring stability and reliability in extreme environments.
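
The primary/backup switchover described above can be sketched as a simple heartbeat check. This is a minimal illustration, not a production design: the `SensorChannel` class, the `select_active` function, and the 50 ms timeout are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorChannel:
    """One redundant sensor channel (hypothetical structure)."""
    name: str
    last_frame_time: float  # time of the most recent valid frame, in seconds

    def is_alive(self, now: float, timeout_s: float) -> bool:
        # A channel counts as alive if it delivered a frame recently enough.
        return (now - self.last_frame_time) <= timeout_s


def select_active(primary: SensorChannel, backup: SensorChannel,
                  now: float, timeout_s: float = 0.05) -> Optional[str]:
    """Prefer the primary channel; fall back to the backup; else report None."""
    if primary.is_alive(now, timeout_s):
        return primary.name
    if backup.is_alive(now, timeout_s):
        return backup.name
    return None  # both channels silent: the caller should trigger degradation


primary = SensorChannel("lidar_primary", last_frame_time=10.00)
backup = SensorChannel("lidar_backup", last_frame_time=10.09)
active = select_active(primary, backup, now=10.10)
```

Returning `None` rather than a stale channel name leaves the decision about what to do when both devices are silent to the degradation logic instead of hiding the failure.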

However, even with reliable hardware, poor spatial or temporal alignment between sensors lets fusion errors accumulate and degrades the final perception result. Online calibration and data preprocessing are therefore indispensable. During vehicle startup and operation, the system continuously corrects drift in the extrinsic parameters between sensors using retroreflective road signs, lane markings, and static obstacle features, keeping point clouds, images, and radar data precisely aligned within a common coordinate system. Voxel filtering and statistical outlier removal denoise the raw point clouds, while morphological and depth-based filtering of images suppresses interference such as raindrops and glare. Sensor data is stamped with microsecond-level timestamps from a high-precision clock source, which mitigates misalignments such as images arriving ahead of point clouds or radar lagging behind cameras. As a result, the downstream multi-modal fusion module can work from high-quality, consistently aligned data.
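
Two of the preprocessing steps above, voxel filtering and timestamp alignment, can be sketched in a few lines of plain Python. The function names, the voxel size, and the tolerance are assumptions made for illustration; a real pipeline would normally use an optimized point cloud library.

```python
from bisect import bisect_left
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same voxel with their centroid."""
    buckets = defaultdict(list)
    for x, y, z in points:
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        buckets[key].append((x, y, z))
    return [
        tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for pts in buckets.values()
    ]


def nearest_frame(timestamps_us, query_us, tolerance_us):
    """Index of the timestamp closest to query_us, or None if none is
    within tolerance_us. timestamps_us must be sorted in ascending order."""
    if not timestamps_us:
        return None
    i = bisect_left(timestamps_us, query_us)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps_us)]
    best = min(candidates, key=lambda j: abs(timestamps_us[j] - query_us))
    if abs(timestamps_us[best] - query_us) <= tolerance_us:
        return best
    return None


cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.0, 0.0)]
reduced = voxel_downsample(cloud, voxel_size=1.0)

camera_stamps_us = [1000, 2000, 3000]
match = nearest_frame(camera_stamps_us, query_us=2100, tolerance_us=200)
```

Rejecting matches outside the tolerance, rather than always taking the nearest frame, keeps a delayed sensor from being silently paired with stale data.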

Multi-modal data fusion is the core strength of the perception system and the key technology for achieving robust perception. Fusion typically occurs at three levels: raw data, feature, and decision. At the raw data level, using the results of extrinsic and temporal calibration, the LiDAR point cloud is rigidly registered to the camera image to generate RGB-D images that carry both spatial depth and visual texture, laying the groundwork for subsequent target detection and segmentation. At the feature level, deep learning models extract multi-scale features from the bird's-eye view (BEV) of the point cloud and from the front-view RGB image. A cross-attention mechanism within the network lets features from the different sources interact, yielding richer and more stable scene representations. At the decision level, the target lists and confidence values output by each channel are weighted and combined using Bayesian estimation or Dempster-Shafer evidence combination to determine each target's position, speed, and category together with an overall confidence. This process not only improves detection accuracy for static and dynamic obstacles but also lets the remaining channels compensate, in proportion to their confidence, when one channel's data is abnormal, keeping the system's output safe.
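
As a rough illustration of decision-level Bayesian fusion, the sketch below combines per-sensor position estimates by inverse-variance weighting and per-sensor existence confidences by summing log-odds. It assumes independent Gaussian measurement errors and conditionally independent detectors, which real sensors only approximate; the function names and the 0.5 prior are choices made for this example.

```python
import math


def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of (value, variance) pairs.

    Returns the fused value and its variance, assuming independent
    Gaussian errors on each input.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


def fuse_confidences(confidences, prior=0.5, eps=1e-6):
    """Naive-Bayes fusion of per-sensor existence probabilities."""
    def logit(p):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        return math.log(p / (1.0 - p))

    log_odds = logit(prior) + sum(logit(c) - logit(prior) for c in confidences)
    return 1.0 / (1.0 + math.exp(-log_odds))


# Range to the same target from LiDAR (low variance) and radar (higher variance).
distance, variance = fuse_estimates([(10.0, 0.04), (10.6, 0.25)])
agree = fuse_confidences([0.8, 0.8])     # two channels agree
conflict = fuse_confidences([0.8, 0.2])  # two channels disagree
```

The fused distance lands closer to the lower-variance LiDAR reading, and agreeing channels raise the combined confidence while conflicting ones cancel out, which is the compensation behavior the paragraph describes.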

Alongside high-precision perception, the system must also satisfy the dual constraints of real-time performance and resource utilization. Onboard computing platforms in autonomous vehicles are often limited in power consumption, heat dissipation, and space, so algorithm design must minimize computation and memory usage while maintaining accuracy. Perception algorithms therefore often adopt a two-stage network architecture. In the first stage, a lightweight neural network or a traditional image/point cloud processing method rapidly prescreens the entire scene and picks out candidate regions of interest (ROIs). In the second stage, large-scale deep networks or hierarchical refinement networks are run only on these ROIs to produce high-precision detection results. At the same time, the utilization, temperature, and power consumption of the onboard hardware are monitored to drive dynamic scheduling, which prioritizes perception accuracy in low-speed and complex environments and reduces model complexity in high-speed scenarios to shorten response time. Combined with model compression techniques such as network pruning, weight quantization, and knowledge distillation, large models with hundreds of millions of parameters can run with acceptable accuracy and latency on limited computing platforms.
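
The two-stage cascade and the load-aware scheduling can be sketched as follows. Everything here is illustrative: the tier names, the thresholds, and the `prescreen_score` and `refine` callables stand in for real models and are not taken from any particular system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlatformState:
    speed_mps: float
    gpu_utilization: float  # fraction in [0, 1]
    temperature_c: float


def select_model_tier(state, high_speed_mps=22.0, max_util=0.9, max_temp_c=85.0):
    """Pick a model tier from vehicle speed and hardware load (assumed limits)."""
    if state.gpu_utilization > max_util or state.temperature_c > max_temp_c:
        return "light"   # protect the platform first
    if state.speed_mps >= high_speed_mps:
        return "medium"  # trade some accuracy for shorter latency
    return "full"        # low speed: prioritize accuracy


def run_cascade(candidates, prescreen_score, refine, threshold=0.3):
    """Stage 1 scores every candidate cheaply; stage 2 refines only the ROIs."""
    rois = [c for c in candidates if prescreen_score(c) >= threshold]
    return [refine(c) for c in rois]


refined_calls = []

def expensive_refine(candidate):
    refined_calls.append(candidate)  # record how often stage 2 runs
    return ("object", candidate)

detections = run_cascade([0.1, 0.5, 0.9], prescreen_score=lambda c: c,
                         refine=expensive_refine)
tier = select_model_tier(PlatformState(speed_mps=30.0, gpu_utilization=0.6,
                                       temperature_c=70.0))
```

In this toy run the expensive stage is invoked for two of the three candidates, which is where the compute saving of a cascade comes from.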

Hardware and software redundancy and optimization alone cannot fully guarantee safety. The system must also have comprehensive health monitoring and active degradation capabilities, akin to "self-check" and "first aid" mechanisms for the vehicle. At the sensor level, indicators such as LiDAR laser emission power, camera lens cleanliness, and radar noise levels can be monitored in real time. At the data level, systematic errors can be detected promptly by comparing measurements of the same target across different sensors or by matching radar/LiDAR ranging against high-precision maps. At the algorithm level, key performance indicators such as target tracking loss rate, confidence distribution fluctuation, and anomaly detection rate are monitored. Once any health indicator crosses its preset safety threshold, the system automatically triggers a degradation strategy: reducing the maximum autonomous driving speed, limiting the maximum braking or steering amplitude, prompting the driver to take over, or even executing an emergency stop in extreme cases. Such rigorous health monitoring and active emergency response provide multiple layers of defense for the perception system against hardware failures, software anomalies, and external interference.
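
A threshold-based health monitor with escalating degradation levels might look like the sketch below. The indicator names, the limit values, and the escalation rule are invented for illustration; real limits would come from the safety analysis of the specific platform.

```python
from enum import IntEnum


class DegradationLevel(IntEnum):
    NOMINAL = 0
    REDUCED_SPEED = 1
    TAKEOVER_REQUEST = 2
    EMERGENCY_STOP = 3


# indicator -> (warning limit, critical limit, higher_is_worse); assumed values
LIMITS = {
    "lidar_emission_power_ratio": (0.8, 0.5, False),
    "camera_lens_cleanliness": (0.7, 0.4, False),
    "radar_noise_level": (0.3, 0.6, True),
    "track_loss_rate": (0.05, 0.2, True),
}


def _breach(value, warn, crit, higher_is_worse):
    """Return 0 (healthy), 1 (warning) or 2 (critical) for one indicator."""
    if value is None:
        return 2  # a missing reading is treated as critical (fail-safe)
    if higher_is_worse:
        return 2 if value >= crit else 1 if value >= warn else 0
    return 2 if value <= crit else 1 if value <= warn else 0


def assess(indicators):
    """Map the current indicator readings to a degradation level."""
    levels = [_breach(indicators.get(name), *limits)
              for name, limits in LIMITS.items()]
    criticals = levels.count(2)
    if criticals >= 2:
        return DegradationLevel.EMERGENCY_STOP
    if criticals == 1:
        return DegradationLevel.TAKEOVER_REQUEST
    if 1 in levels:
        return DegradationLevel.REDUCED_SPEED
    return DegradationLevel.NOMINAL


healthy = {"lidar_emission_power_ratio": 0.95, "camera_lens_cleanliness": 0.9,
           "radar_noise_level": 0.1, "track_loss_rate": 0.01}
dirty_lens = dict(healthy, camera_lens_cleanliness=0.6)
level = assess(dirty_lens)
```

Each indicator carries its own direction flag because some indicators are unsafe when they drop (emission power, lens cleanliness) and others when they rise (noise, track loss).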

A perception system that relies solely on onboard sensors may struggle to maintain stable performance in tunnels, under overpasses, and in heavily occluded areas. High-precision maps and precise positioning can supply valuable prior knowledge in these cases. High-precision maps usually contain semantic elements such as lane geometry, traffic sign locations, and intersection topology. These maps are built from data collected by crowdsourced vehicles and periodically corrected offline so that their content stays aligned with the actual road. When the vehicle is in motion, the fusion of IMU and RTK/PPP GNSS offers centimeter-level positioning accuracy. By matching the current pose against the map, the target search area can be narrowed and deviations caused by multi-sensor fusion errors can be corrected. In scenarios with sudden light changes or severe local occlusion, the prior information from high-precision maps acts as a "virtual sensor", compensating for the perception system's blind spots and significantly improving reliability at tunnel entrances, beneath bridges, and behind large vehicles that block the view.
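
One way to picture the map acting as a "virtual sensor" is to transform mapped landmarks into the vehicle frame and keep only those the sensors should currently see, which narrows the search area. The sketch below works in 2D and assumes a pose of `(x, y, yaw)` in the map frame; the landmark names, range, and field of view are made up for the example.

```python
import math


def world_to_vehicle(px, py, pose):
    """Transform a map-frame point into the vehicle frame (x forward, y left)."""
    x, y, yaw = pose
    dx, dy = px - x, py - y
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * dx + s * dy, -s * dx + c * dy)


def expected_landmarks(map_landmarks, pose, max_range_m=80.0,
                       half_fov_rad=math.radians(60)):
    """Return (name, x, y) for landmarks expected inside the forward sensor cone."""
    visible = []
    for name, (px, py) in map_landmarks.items():
        vx, vy = world_to_vehicle(px, py, pose)
        rng = math.hypot(vx, vy)
        bearing = math.atan2(vy, vx)
        if rng <= max_range_m and abs(bearing) <= half_fov_rad:
            visible.append((name, vx, vy))
    return visible


hd_map = {
    "sign_ahead": (0.0, 50.0),
    "sign_behind": (0.0, -50.0),
    "sign_far": (0.0, 200.0),
}
pose = (0.0, 0.0, math.pi / 2)  # vehicle at the origin, heading along +y
priors = expected_landmarks(hd_map, pose)
```

A detector can then search only around the returned positions, and a mapped landmark that is expected but never detected can itself serve as a hint of occlusion or sensor degradation.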

Achieving near-complete, all-weather perception coverage through real-road testing alone is extremely costly and risky, so a verification framework that combines physical road testing with simulation is needed. On the road, vehicles must undergo repeated testing on highways, urban blocks, rural roads, and in tunnels, and in adverse weather such as rain, snow, ice, and fog. This testing focuses on perception capabilities and emergency responses in edge scenarios, such as pedestrians crossing, non-motor vehicles changing lanes arbitrarily, occlusion by large vehicles, and complex intersections and ramps. In simulation, digital twin technology and hardware-in-the-loop (HIL) platforms can generate thousands of extreme and rare scenarios in batches, allowing rapid iteration to verify algorithm robustness and the effectiveness of defect fixes. For each scenario type, quantitative indicators such as detection rate, false alarm rate, latency, and completeness must be defined, and a continuous regression testing system must be established to ensure that each software upgrade does not introduce new risks. Only by extensively covering typical and edge scenarios can the perception system's laboratory performance be translated into reliable performance on real roads.
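
The per-scenario indicators and the regression gate can be sketched as below. Definitions of "false alarm rate" vary between teams; this example assumes it means the share of reported detections that are false, and the tolerance and latency budget are placeholder values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScenarioResult:
    true_positives: int
    false_positives: int
    false_negatives: int
    latencies_ms: tuple


def detection_rate(r):
    """Share of real targets that were detected (recall)."""
    total = r.true_positives + r.false_negatives
    return r.true_positives / total if total else 1.0


def false_alarm_rate(r):
    """Share of reported detections that were false (assumed definition)."""
    total = r.true_positives + r.false_positives
    return r.false_positives / total if total else 0.0


def passes_regression(current, baseline, tolerance=0.01, max_latency_ms=100.0):
    """A new build passes only if no indicator is worse than the baseline
    by more than the tolerance and the worst-case latency stays in budget."""
    return (
        detection_rate(current) >= detection_rate(baseline) - tolerance
        and false_alarm_rate(current) <= false_alarm_rate(baseline) + tolerance
        and max(current.latencies_ms, default=0.0) <= max_latency_ms
    )


baseline = ScenarioResult(95, 5, 5, (42.0, 55.0))
candidate = ScenarioResult(96, 4, 4, (40.0, 60.0))
regressed = ScenarioResult(90, 5, 10, (40.0, 60.0))
ok = passes_regression(candidate, baseline)
```

Running such a gate per scenario type, rather than on pooled results, keeps a regression in a rare scenario from being averaged away by the common ones.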

In addition to integrating the above technical elements, the design and development of the perception system must be embedded within a functional safety lifecycle management framework. Following the ISO 26262 "Road Vehicles – Functional Safety" standard, the process starts with Hazard Analysis and Risk Assessment (HARA), which identifies the hazardous events that the perception system's failure modes may trigger and assigns safety goals and development processes according to the risk level, expressed as an Automotive Safety Integrity Level (ASIL). During the architecture design phase, safety requirements are translated into hardware redundancy, software degradation, and monitoring strategies, and the correctness of the design is verified through means such as model checking, static code analysis, and automated testing. In the integration testing and verification phase, unit testing, HIL simulation, road scenario testing, and other methods are combined to confirm that the safety requirements are fully met. Finally, during mass production and operation, sensor performance and system health are monitored continuously, and security patches and configuration improvements are pushed promptly through OTA update mechanisms to keep pace with changes in road environments and regulatory requirements. Through this closed-loop management process, the perception system can maintain the necessary safety and reliability throughout the product lifecycle.
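
In a HARA, each hazardous event is rated for severity (S1 to S3), exposure (E1 to E4), and controllability (C1 to C3), and the combination determines the ASIL. The sketch below uses the commonly cited shortcut of summing the three class numbers to reproduce the standard's determination table; it is an illustration only, and any real classification should be checked against the table in ISO 26262 itself. The function name is hypothetical, and the S0, E0, and C0 classes (which lead to no ASIL) are deliberately left out.

```python
def determine_asil(severity, exposure, controllability):
    """Return "QM", "A", "B", "C" or "D" for S1-S3, E1-E4, C1-C3.

    Uses the sum-of-classes shortcut as an assumed stand-in for the
    standard's lookup table.
    """
    if severity not in (1, 2, 3):
        raise ValueError("severity must be 1..3")
    if exposure not in (1, 2, 3, 4):
        raise ValueError("exposure must be 1..4")
    if controllability not in (1, 2, 3):
        raise ValueError("controllability must be 1..3")
    total = severity + exposure + controllability
    return {10: "D", 9: "C", 8: "B", 7: "A"}.get(total, "QM")


# Hypothetical hazardous event: a missed pedestrian at urban speed, rated as
# life-threatening (S3), high exposure (E4), hard to control (C3).
missed_pedestrian = determine_asil(3, 4, 3)
```

The resulting level then drives how strict the redundancy, monitoring, and verification measures for the corresponding safety goal need to be.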

The safety design of the autonomous driving perception system is a systems engineering endeavor requiring coordinated efforts across multiple dimensions, including hardware, software, algorithms, testing and verification, and safety management. By leveraging the redundancy and complementarity of multi-modal sensors, online calibration and high-quality preprocessing, layered multi-modal fusion, efficient real-time algorithm scheduling, comprehensive health monitoring and active degradation, high-precision map and positioning assistance, simulation testing encompassing typical and edge scenarios, and full ISO 26262 safety lifecycle management, a truly reliable and sustainable perception platform can be realized. Facing increasingly complex and dynamic road environments and stricter safety regulations in the future, the perception system design team must maintain a focus on both technological innovation and engineering rigor, continually optimizing and iterating to provide a solid safety foundation for the implementation of autonomous driving.
