7 Revolutionary AI Products at WAIC 2025: Beyond Hype, Just Real Impact

07/29 2025

The WAIC stage beckons innovators with bold visions.

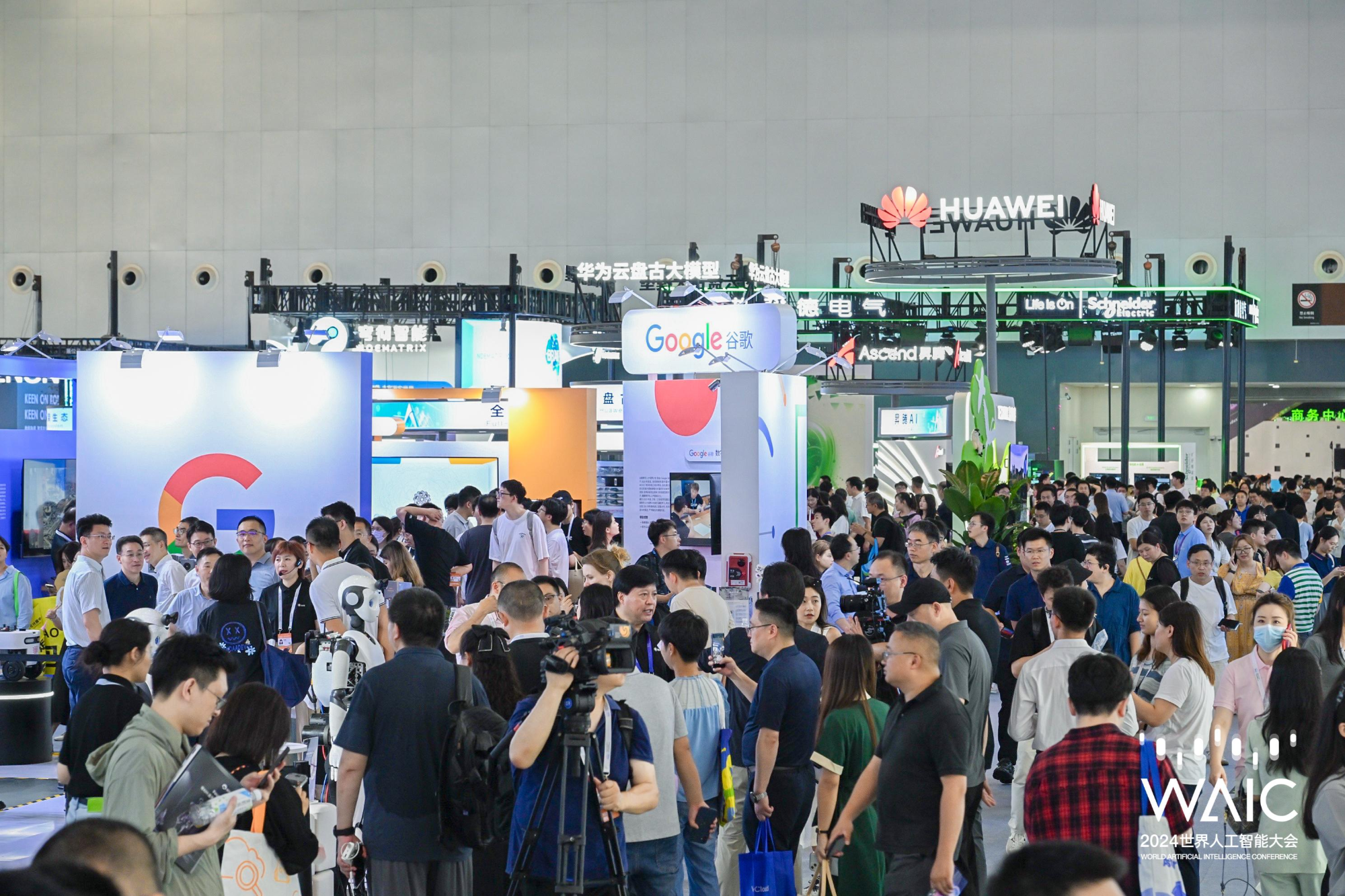

If there was a tech hotspot this past week, it was undoubtedly the Shanghai World Expo Center, where WAIC 2025 (World Artificial Intelligence Conference) unfolded.

On July 28, the closing ceremony of WAIC 2025 capped a three-day showcase of the AI industry. The exhibition spanned China's entire AI industry chain, with global players presenting everything from computing chips to large models, algorithm platforms, and smart terminals.

Photo/WAIC

What set this year's WAIC apart was not just the model competitions but the diverse array of AI-powered devices: glasses, robots, virtual humans, and exoskeletons all made their mark.

AI glasses were ubiquitous, with Rokid Glasses leading the pack. Rokid's founder and CEO Zhu Mingming revealed that sales of the new Rokid Glasses have surpassed 300,000 units. "This year, sales are not our concern; production is the priority," he said. As AI+AR glasses, Rokid Glasses can display speech notes in the wearer's view, translate in real time, take photos, record, and give navigation prompts, making them an always-on AI assistant.

At Tencent's booth, visitors were greeted not by physical robots but by lifelike "Wonderful Digital Humans" on screens. The system offers over 3,000 public digital human avatars that can hold natural dialogue, present products, interact with viewers, and take on customized personas and styles. For businesses, they are cost-effective "anchors"; for technologists, they represent the evolution of embodied intelligence from physical bodies to digital avatars.

Photo/Tencent

WAIC 2025 also saw numerous product launches. Quark AI glasses debuted with features such as scan-to-pay and voice translation, all packed into a frame weighing less than 50 grams. Other highlights included the humanoid robot Fourier GR-3, designed for elderly care and emotional companionship; Ausar Intelligence's consumer-grade exoskeleton VIATRIX; the MiniMax Agent platform; and Jieyue Xingchen's Step 3 large model.

Behind these innovative products lies a clear signal: AI is evolving from merely "talking" to "acting," from "helping you think" to "acting on your behalf." While WAIC 2025 may not have been the most competitive in terms of models, it was undoubtedly the most lively and tangible edition.

Among the many new products at WAIC 2025, Alibaba's surprise launch of Quark AI glasses stood out in the smart-terminal category.

As Alibaba's first wearable device, Quark AI glasses prioritize practical daily scenarios such as walking navigation, scan-to-pay, product price comparison, and voice assistance. These needs are met with a nearly zero-operation experience: no need to pull out your phone or open an app; just look up and speak.

Photo/Alibaba

Technologically, Quark AI glasses adopt a dual-chip architecture pairing the Qualcomm AR1 with the Bestechnic BES2800, an approach similar to Xiaomi's, and run on the Tongyi Qianwen large model. Rather than trying to be "super hardware," Quark builds its product logic around Alibaba's ecosystem, supporting navigation via Gaode Maps, price comparison on Taobao, travel reminders via Fliggy, and payment via Alipay.

While other AI glasses emphasize perception and recording, Quark takes a different path, positioning itself as a "smarter life executor," an AI tool woven into daily life. It may not be the coolest, but it is the closest to daily use and the best fit for the word "usable," which is precisely what consumer-grade AI wearables need to achieve.

In the humanoid robot race, the ability to "move" is common; what's rare is a clear sense of whom the robot moves for. Fourier Intelligence's GR-3, unveiled at WAIC 2025, is a prime example of "de-industrialization." Instead of moving bricks or working on production lines, GR-3 wears a soft shell and enters hospitals, wellness centers, and senior living facilities.

Photo/Fourier

GR-3, Fourier's new generation of humanoid robot, differs vastly from traditional counterparts. It has neither cold mechanical arms nor "Terminator"-like strength; instead it adopts warm-toned colors and flexible materials that give it the look of a friendly companion. GR-3 focuses on "emotional companionship" and "rehabilitation assistance," and is equipped with a full-sensory interaction system that enables voice communication, physical interaction, cognitive training, and rehabilitation guidance.

Fourier emphasizes that GR-3 doesn't compete on traditional parameters like speed, strength, or balance but addresses overlooked yet essential needs in society, such as emotional care for dementia patients, daily guidance for rehabilitation patients, and extra support where healthcare resources are insufficient. This positioning sets it apart from experimental humanoid robots.

Exoskeletons were once confined to rehabilitation centers, military training camps, or industrial assembly lines: expensive, complex systems with little relevance to ordinary people. However, Ausar Intelligence's VIATRIX, introduced at WAIC 2025, turns the exoskeleton into an accessible everyday technology product.

Photo/Ausar

This consumer-grade powered exoskeleton, worn around the waist and thighs, assists with walking, squatting, climbing stairs, and more. It adopts the FLOAT 360 floating hip joint architecture, balancing powered assistance with a natural gait. Its dual motors deliver a combined output of up to 46 N·m, improving movement efficiency while minimizing the feeling of wearing a foreign object.

VIATRIX's biggest breakthrough isn't in "performance" but in "target audience": it's not for elderly rehabilitation patients or manual laborers but for sports enthusiasts, fitness buffs, or anyone needing to walk outdoors for extended periods. Ausar positions VIATRIX as an "enhancement tool," not a therapeutic device, allowing users to "walk longer, jump more stably, and train harder."

Entering the consumer-grade exoskeleton market is challenging, with high costs, bulkiness, and limited uses hindering many attempts. Ausar's latest effort redefines the category's "entry threshold," relying on enhancement rather than pain or illness to spark demand. Whether it can truly integrate into daily life depends on its long-term wear experience.

Over the past six months, the AI industry has explored intelligent agents, and MiniMax is no exception, showcasing its intelligent agent platform MiniMax Agent at WAIC 2025. Based on the self-developed M1 model, MiniMax Agent is a full-stack development platform that breaks down requests into multiple sub-tasks, with multiple sub-agents (Researcher, Coder, Designer, PM, etc.) collaborating to complete them.

Photo/MiniMax

Simply input a prompt like "build a travel recommendation website," and MiniMax Agent automatically schedules tools to generate the UI design, code logic, and content copy, and even deploys the result online. Unlike the traditional "you ask, I answer" logic of AI chat, MiniMax Agent is an "executive employee" that needs no step-by-step supervision and doesn't get tired. AI is no longer just a chatbot but a combination of product manager, junior engineer, and visual designer.
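The decomposition described here, one request split into sub-tasks that role-specific sub-agents complete, can be illustrated with a minimal Python sketch. This is not MiniMax's implementation: the `plan` function, the `AGENTS` registry, and the stub agents are hypothetical stand-ins, and a real system would call a model at each step instead of returning canned strings.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SubTask:
    role: str         # which sub-agent should handle this step
    instruction: str  # what that sub-agent is asked to do


# Hypothetical sub-agents: each is just a function from instruction to output.
def researcher(instruction: str) -> str:
    return f"[research notes] {instruction}"


def designer(instruction: str) -> str:
    return f"[ui mockup] {instruction}"


def coder(instruction: str) -> str:
    return f"[source code] {instruction}"


AGENTS: Dict[str, Callable[[str], str]] = {
    "Researcher": researcher,
    "Designer": designer,
    "Coder": coder,
}


def plan(request: str) -> List[SubTask]:
    """Stand-in for the planning ('PM') step: a fixed template here,
    where a real system would ask a model to produce the task list."""
    return [
        SubTask("Researcher", f"collect content for: {request}"),
        SubTask("Designer", f"draft page layout for: {request}"),
        SubTask("Coder", f"implement site for: {request}"),
    ]


def run(request: str) -> List[str]:
    """Dispatch each sub-task, in order, to the agent registered for its role."""
    results = []
    for task in plan(request):
        agent = AGENTS[task.role]
        results.append(agent(task.instruction))
    return results


if __name__ == "__main__":
    for line in run("build a travel recommendation website"):
        print(line)
```

The point of the sketch is the shape, not the content: the user supplies one request, and the planner, not the user, decides which roles are involved and in what order.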

In scenarios like webpage building, content generation, and advertising creativity, MiniMax Agent demonstrates astonishing production efficiency and low trial-and-error costs. While its stability and accuracy in real business processes remain to be seen, one thing is clear: agents are no longer just a concept; execution is the new AI battleground.

Amidst rapid model iteration this year, Jieyue Xingchen's answer isn't "another large model" but a foundation model focused on "practicality."

Step 3, Jieyue Xingchen's new large model, adopts the MoE (Mixture of Experts) architecture with 321 billion parameters, of which only about 38 billion are activated per token, significantly reducing costs while maintaining large-model capabilities. It supports native multimodal inputs and has reasoning abilities across text, images, mathematical logic, and other domains, achieving SOTA scores on multiple benchmarks like MathVision, MMMU, and LiveCodeBench.

What stands out most about Step 3 is its focus on actual deployment and industrial application. The model is not only open-source but also adapted to domestic AI chip platforms such as Huawei Ascend, Muxi, Biren, Cambricon, and Tianshuzhixin; together with these vendors, Jieyue Xingchen launched the "Model-Chip Ecosystem Innovation Alliance." On domestic platforms, its inference efficiency can be up to three times that of DeepSeek R1.
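To see why an MoE model can hold many parameters yet use only a fraction of them per step, consider this toy sketch of generic top-k expert routing. It is not Step 3's actual router: the four scalar "experts," the router logits, and `k=2` are illustrative assumptions chosen to keep the example small.

```python
import math
from typing import Callable, List, Tuple


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(x: float,
                experts: List[Callable[[float], float]],
                router_logits: List[float],
                k: int) -> float:
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Toy experts: each scalar function stands in for a full feed-forward block.
EXPERTS = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

if __name__ == "__main__":
    logits = [0.0, 2.0, 0.0, 2.0]  # this router prefers experts 1 and 3
    print(top_k_route(logits, k=2))
    print(moe_forward(1.0, EXPERTS, logits, k=2))
```

With `k=2`, two of the four experts are never evaluated for this input, which is the source of the cost saving: total parameters grow with the number of experts, while compute per token grows only with `k`.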

Photo/Jieyue Xingchen

In the current competition among models for users, Step 3 stands out as a "general intelligent base" for enterprise developers, aiming to serve companies that genuinely deploy models rather than those hyping model concepts. This shift from the parameter battlefield to deployment value might be the beginning of true differentiation for domestic large models.

While humanoid robots and AI assistants compete in intelligence, Tencent Advertising showcased another "pragmatism-first" AI application: the "Wonderful Digital Humans" system for merchants focused on marketing.

Unlike most digital humans emphasizing interactive experience or virtual companionship, "Wonderful Digital Humans" were born to sell. They're not about chatting or showing off technology but being 24/7 live-streaming "shopping anchors." The system offers over 3,000 digital human avatars, covering different ages, styles, and industry roles. Merchants can also customize exclusive digital humans for short video promotion and e-commerce live streaming.

More importantly, the underlying platforms, "Miaosi" and "Miaobo," can generate videos, write copy, analyze live chat interactions in real time through "Live Brain," and respond by voice, automating the live-streaming process. The voice can be a 1:1 clone of a real person's and is combined with phrases common to the industry for a highly realistic live-streaming experience. Data shows these digital human live-streaming rooms have longer viewing times and higher interaction rates, and can reduce merchants' live-streaming costs by 90%.

In other words, Tencent isn't creating a "show-off" digital human but reshaping the entire marketing process from content generation to persona creation, live streaming execution to data analysis, enabling small and medium-sized businesses to have their own "AI anchor team."

The joint booth of Shenyuan AI and COSMOPlat, Haier Group's industrial internet platform, also attracted attention with Shenyuan AI's self-developed L4-level intelligent agent mother system, MasterAgent. Unlike consumer-grade intelligent agents, MasterAgent focuses on architectural-level agent construction, suited for complex collaboration scenarios at the industrial and enterprise levels.

MasterAgent, the "mother system," supports multi-agent collaboration, automatic task assignment, and decision optimization, with a highly scalable scheduling structure. Its applications target manufacturing, logistics, and energy scenarios, coordinating multiple sub-agents (planning, scheduling, perception, and so on) to raise the system's overall capacity for intelligent execution rather than delivering a single standalone application.

Its emergence represents an upgrade of the domestic intelligent agent ecosystem from "consumer-level trials" to "industry-level system integration." MasterAgent is not just a toolkit but a new type of AI infrastructure that can mobilize multiple agents for coordination and execution in complex industrial processes. The logic of this L4-level "mother system" indicates that intelligent agents will move towards "integrated control and intelligent scheduling" in future industrial deployments.
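As a rough illustration of what "automatic task assignment" across sub-agents can mean, here is a small Python sketch that gives each task to the least-loaded agent with the required skill. It makes no claim about how MasterAgent works internally: the `SubAgent` class, the skill names, and the `"UNASSIGNED"` fallback are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class SubAgent:
    name: str
    skills: Set[str]
    queue: List[str] = field(default_factory=list)  # task ids already assigned


def assign(tasks: List[Tuple[str, str]], agents: List[SubAgent]) -> Dict[str, str]:
    """Give each (task_id, required_skill) to the least-loaded capable agent."""
    assignment: Dict[str, str] = {}
    for task_id, skill in tasks:
        capable = [a for a in agents if skill in a.skills]
        if not capable:
            # No agent has the skill; flag the task instead of guessing.
            assignment[task_id] = "UNASSIGNED"
            continue
        target = min(capable, key=lambda a: len(a.queue))
        target.queue.append(task_id)
        assignment[task_id] = target.name
    return assignment


if __name__ == "__main__":
    agents = [
        SubAgent("planner", {"planning"}),
        SubAgent("scheduler_a", {"scheduling"}),
        SubAgent("scheduler_b", {"scheduling"}),
        SubAgent("sensor", {"perception"}),
    ]
    tasks = [
        ("t1", "planning"),
        ("t2", "scheduling"),
        ("t3", "scheduling"),
        ("t4", "perception"),
        ("t5", "inspection"),
    ]
    for task_id, owner in assign(tasks, agents).items():
        print(task_id, "->", owner)
```

Even this simple rule shows the difference from a single-purpose agent: the coordinating layer tracks who can do what and how busy each sub-agent is, and the sub-agents themselves stay narrow.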

If MiniMax Agent serves as an "execution AI" tailored to end-user demand scenarios, MasterAgent takes on a more pivotal role as the "background brain" in large-scale, multi-agent system collaborations. This allows AI to transcend mere task processing, embracing task management and process control.

If the new products showcased at WAIC 2025 were condensed into a single image, it would be hard to tell at first glance what category they share: some are glasses worn on the face, others are devices worn on the legs, still others are "conversational" digital humans or "operational" software agents, not to mention a robot that nods and bows gracefully. Placed in the context of AI's evolution, however, they reveal a striking consensus: AI is moving beyond the model stage and embracing its own moment of "embodiment".

From Rokid and Quark's AI glasses to Ausar's wearable exoskeleton and Fourier's humanoid robot, this year's WAIC AI offerings clearly come in a greater variety of "physical forms". They are no longer confined to language models or image generators residing on screens but have entered users' living spaces in more intuitive ways: wearable, attachable, and interactive.

Particularly in the realm of AI glasses, the product evolution has shifted from Meta's photo-taking social applications, through Rokid's focus on visual assistance, to Quark's integration into daily life execution. These glasses have transformed from "novelty toys" into "practical tools".

Image/Alibaba

Furthermore, the wave of agents is spreading beyond the technical domain, permeating the product layer. MiniMax Agent has evolved beyond being a traditional "question-and-answer AI", possessing system capabilities for task scheduling and collaborative execution. It is now capable of delivering complex content such as webpages, scripts, and advertisements. Meanwhile, products like GR-3 and VIATRIX exemplify how AI is beginning to "move its body", with robots no longer merely demonstrating balance but learning to provide healthcare companionship, and exoskeleton devices transitioning from medical aids to fitness accessories.

Whether performing mental tasks or physical actions, AI is shifting from "talking logic" to "doing real things". Jieyue Xingchen's Step 3 is also illustrative, emphasizing not parameter count but the ability to run on domestic chip platforms such as Ascend and Tianshuzhixin and to serve real systems cost-effectively.

Models are no longer a "capability center" sitting above everything else but basic modules integrated into products and services. From this vantage point, AI is transitioning from the platform era to the "system collaboration era", which means the future core of AI development will increasingly lie in systemization capabilities.

Source: Lei Technology