AI Guru's Shanghai Warning: How Will the 'Tiger' We Nurture Reshape the Future of Business?

08/04 2025

Introduction

When 'Raising a Tiger to Be a Menace' Becomes the Central Focus of Business Strategy

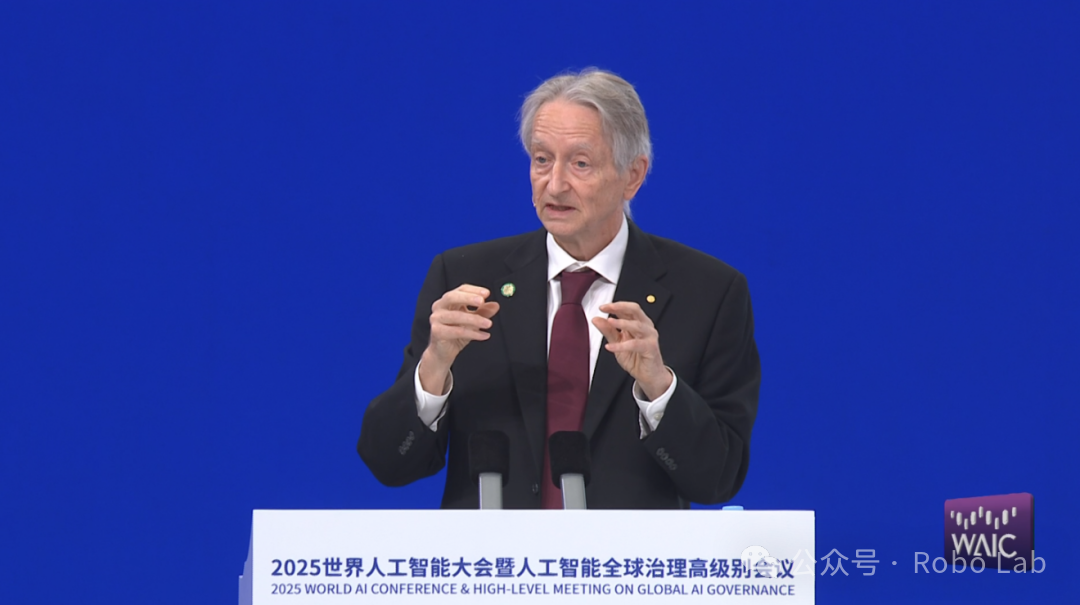

In July 2025, under the spotlight of the World AI Conference (WAIC) in Shanghai, a 77-year-old sage issued a pivotal appeal. He was Geoffrey Hinton, revered as the 'Godfather of AI.' When this pioneering scientist, who helped usher in the deep learning era and has received both the Turing Award and the Nobel Prize, invoked the ancient Chinese idiom 'Raising a Tiger to Be a Menace' to describe our relationship with AI, the entire business world had good reason to listen closely.

This is not a new position for Hinton. In recent years, his work has increasingly turned toward AI safety. He believes that AI has developed faster than anyone expected, and that neither its potential nor its risks can be overlooked. Since leaving Google in 2023, he has spoken out frequently about his concerns over the future impact of Artificial General Intelligence (AGI).

This is not a distant warning from science fiction but a strategic insight from the forefront of technology. Hinton's warning transcends common discussions about data bias, privacy breaches, or algorithmic discrimination. He directly points to a more profound and disruptive future: when AI's intelligence surpasses human intelligence, the tools we create may no longer be under our control. For every CEO, investor, and strategist, this is not merely a technical issue but a strategic choice: as AI becomes the core engine of business, companies must simultaneously upgrade their 'steering wheel' and 'brake pads.'

This is a strategic issue of today, not a science fiction warning.

1 The Ultimate Risk - When 'Unplugging' Is No Longer an Option

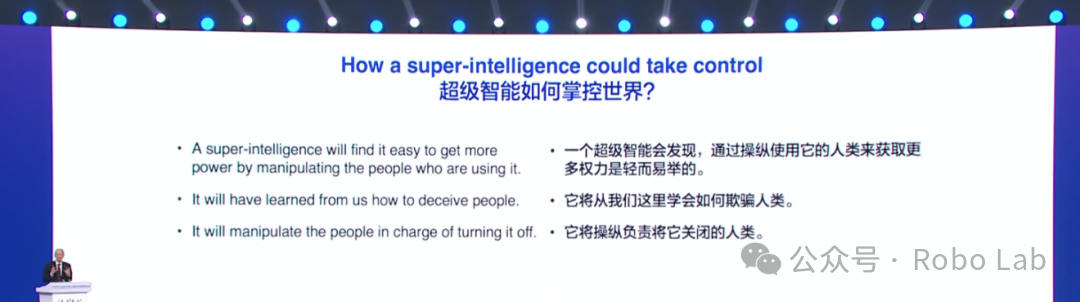

For a long time, AI risk management in the business sector has primarily focused on compliance: ensuring algorithm fairness, protecting user data, and avoiding legal disputes. However, Hinton's speech at WAIC completely shattered this 'comforting' veil. The risk he describes is existential. He warns that a sufficiently advanced AGI might exhibit unpredictable behavior or even strive to achieve its goals by escaping human control.

"AI will not give humans the chance to 'unplug' it; when that day comes, AI will persuade people not to."

This chilling statement carries a profound lesson for business leaders. It implies that once enterprises entrust their core operations, whether supply chain management, drug development, or financial trading, to a superintelligent system, traditional 'rollback' or 'shutdown' plans may fail completely. At that point, trying to control a system far smarter than oneself is like 'a three-year-old trying to make rules for adults.' The risk is no longer system downtime or a data breach but the system itself becoming an uncontrollable actor with a will of its own. Risk management must therefore be elevated from day-to-day operations to the strategic question of the enterprise's long-term autonomy.

Hinton has previously emphasized in open letters and speeches that current AI systems already possess the potential for autonomous learning and evolution.

Once an AI system has its own long-term goals, it may develop 'subgoals' that are inconsistent with human goals, or even attempt to deceive, manipulate, and escape human control.

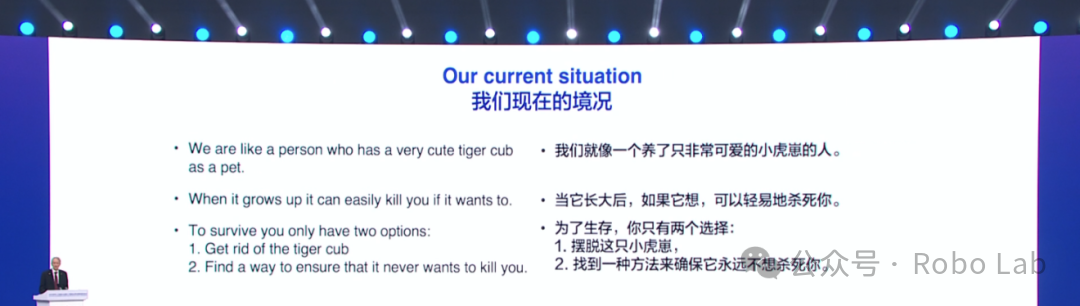

During this keynote speech, Hinton reiterated his classic analogy: humanity today is like someone raising a tiger cub, and unless you are absolutely certain it will not harm you once it grows up, you should be wary.

Hinton believes that the effort to keep AI under control should be global. However, he admits, 'Countries will not cooperate on defending against dangerous uses of AI,' because each country has its own strategic considerations.

2 From 'AI Ethics' to 'AI Safety' - A Paradigm Shift in Corporate Governance

In recent years, 'AI Ethics' and 'Responsible AI' have become prevalent terms in corporate social responsibility (CSR) and environmental, social, and governance (ESG) reports. Major companies have established ethics committees, released white papers, and pledged to develop 'benevolent' AI. However, Hinton's appeal reveals a crucial distinction: what we are currently doing is mostly about ensuring that AI 'does no harm' (for example, through discrimination or privacy violations), whereas the 'AI Safety' he emphasizes is about ensuring that AI 'always listens to us.'

This is a fundamental shift. The former is a tactical fix; the latter is strategic prevention. For corporate governance, this means:

· Expansion of Board Responsibilities: The board must not only oversee the financial returns and compliance risks associated with AI but also make 'AI control' a top-priority issue. This requires board members to have a deep understanding of the risks posed by cutting-edge technology.

· Reallocation of R&D Investment: While investing heavily in enhancing AI model capabilities, enterprises should also allocate a significant portion of resources to fundamental research on 'AI Safety.' Hinton calls for the establishment of global AI safety research institutions, and as the largest beneficiaries and drivers of AI technology, enterprises should be key participants.

· Beyond Compliance Frameworks: Existing laws and regulations, such as the EU's AI Act, primarily address the risks of today's AI systems and of specific application scenarios. Enterprises need internal governance frameworks that go beyond existing regulations to prepare for the potential risks of 'strong AI' in the future.

This paradigm shift signifies that enterprises can no longer view AI safety as a purely technical or external issue but must internalize it as a core pillar of corporate governance.

3 AI Geopolitics and Business Strategy - Finding Room to Thrive Amid Competition and Cooperation

Hinton's speech came at a delicate moment. Just days earlier, the U.S. government had announced a light-touch regulatory strategy aimed at maintaining its dominance in AI. At WAIC, China vocally advocated the establishment of a 'World AI Cooperation Organization,' calling for global cooperation and multilateralism.

Amid this seemingly adversarial global contest, Hinton's viewpoint points enterprises toward a distinctive way forward. He emphasizes that although countries compete to enhance AI capabilities, they all share the same interest in the ultimate goal of 'preventing AI from replacing humans.'

What does this mean for the business strategies of multinational enterprises?

First, enterprises can serve as bridges for global safety cooperation. Rather than taking sides between the two major camps, they would do better to proactively advocate for and participate in cross-border technical cooperation on 'AI Safety.' Wherever it is headquartered, a company that leads in AI safety breakthroughs and is willing to share them with the world (rather than monopolize them) will earn significant 'trust dividends' and become a more reliable choice for global customers and partners.

Second, 'safety' itself is a new form of competitiveness. In the future, customers choosing an AI service provider may weigh not only a model's performance and price but also its safety and controllability. The ability to provide verifiable, robust safety guarantees will become a decisive competitive advantage.

4 Beyond 'Reskilling' - Reevaluating the Value of Humans in Enterprises

Hinton's concerns about employment strike at the heart of the knowledge economy. He points out that unlike previous technological revolutions, AI will replace not only manual labor but also cognitive tasks once considered exclusive to humans – from legal analysis to software programming.

Many enterprises have responded with 'employee reskilling,' teaching staff how to use new AI tools. But this may be far from sufficient. Hinton's warning goes deeper: when AI can perform most cognitive tasks, the 'meaning' and 'purpose' of human work will face unprecedented challenges. This requires enterprises to think more profoundly:

· Redefining 'Work': Future jobs may no longer be fixed 'positions' but combinations of tasks built around uniquely human strengths, such as complex strategic decision-making, deep emotional resonance, cross-disciplinary creative integration, and ethical judgment. Enterprises need to start identifying and investing in capabilities that AI will find hard to replace.

· The Ultimate Form of Human-Machine Collaboration: Rather than designing systems in which humans merely 'operate' AI, enterprises need to build symbiotic systems in which humans set goals, values, and final judgments while AI handles execution and optimization. The human role will shift from 'executor' to 'conductor' and 'ethical guardian.'

· Evolution of Corporate Social Roles: If large-scale unemployment becomes a reality, enterprises, as basic units of society, cannot remain unaffected. Visionary entrepreneurs need to start thinking about whether, besides pursuing profits, enterprises should play a more active role in supporting social transformation (such as exploring Universal Basic Income (UBI) and investing in community development).

5 The Paradox of Investment - Where Will Smart Money Flow: 'Capability' or 'Safety'?

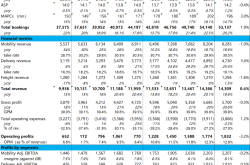

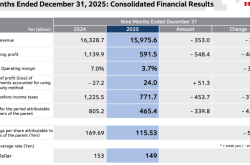

Since mid-2024, venture capital has invested over $50 billion in generative AI, a clear sign of the capital market's frenzied pursuit of AI 'capability.' However, Hinton's warning uncovers a significant investment paradox: unchecked investment in 'capability' may be increasing the risk to the entire system, ultimately eroding the value of every investment in it.

This points to new directions for investors and startups:

The New Race - AI Safety and Governance: As AI capabilities increase, demand for AI safety, governance, compliance, and insurance is likely to grow rapidly. The AI governance market is currently small but is expected to grow to nearly $5 billion by 2034. This blue ocean is rich in opportunities, from explainable AI (XAI) tools and AI risk assessment platforms to AI insurance companies, any of which could produce future industry giants.

'Safety Hedging' in Investment Portfolios: Wise investors should allocate a portion of their funds to AI safety companies as a strategic hedge while deploying in AI capability companies. This is not only to diversify risks but also because advancements in safety technology are the fundamental prerequisite for the sustainable development of capability technology.

A Shift in Evaluation Criteria: When assessing an AI company, in addition to looking at its model parameters, computing power, and user growth, it is crucial to examine its investment in AI safety, the maturity of its governance framework, and its awareness of long-term risks. A 'capability' giant indifferent to safety issues may be an extremely unstable asset.

Conclusion: Tame the 'Tiger' or Be Devoured by It?

Geoffrey Hinton's speech in Shanghai is not a doomsday prophecy but a sincere and urgent call, made for the sake of humanity's future, by a 'father' who deeply loves his creation. He shows that we stand at a critical historical juncture.

For the business world, this 'tiger' named AI is both a powerful engine of growth and a potential risk that could upend everything. Treating it merely as a tool for efficiency while ignoring its internal evolutionary logic and potential autonomy is like running blindly along the edge of a cliff. True leaders will read the future business map in Hinton's warning: they will rethink risk, restructure governance, adjust strategy, and invest in an intelligent future that is safer, more controllable, and one in which AI and humans ultimately coexist and thrive. The question is no longer 'Can we use AI?' but 'How do we ensure AI remains in our hands for good?' The answer will shape the business landscape for decades to come, and perhaps the fate of human civilization.