Crafting a 3D World from a Single Sentence! Google Unveils Genie 3, Igniting a Global Contest for World Models?

08/07 2025

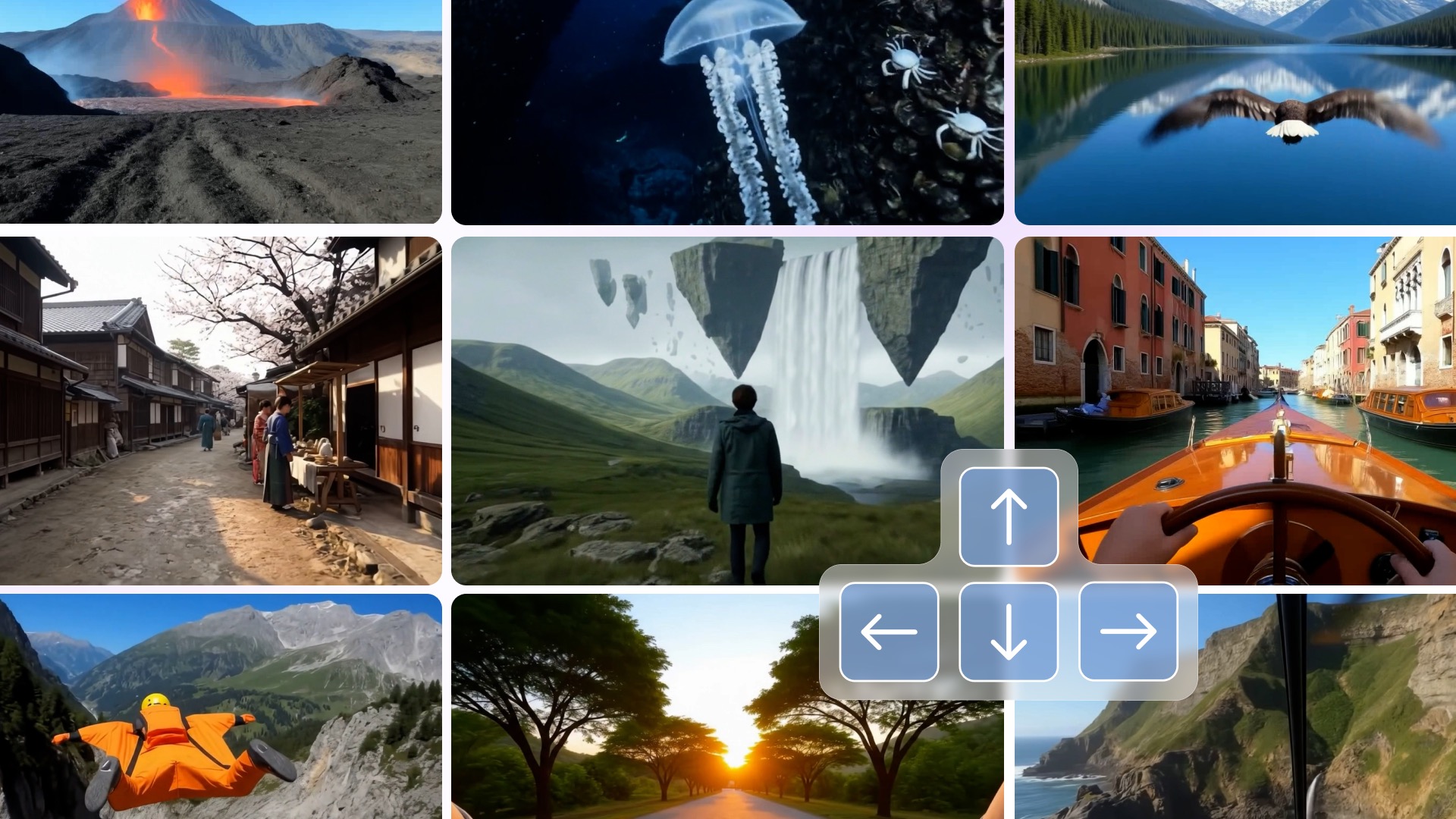

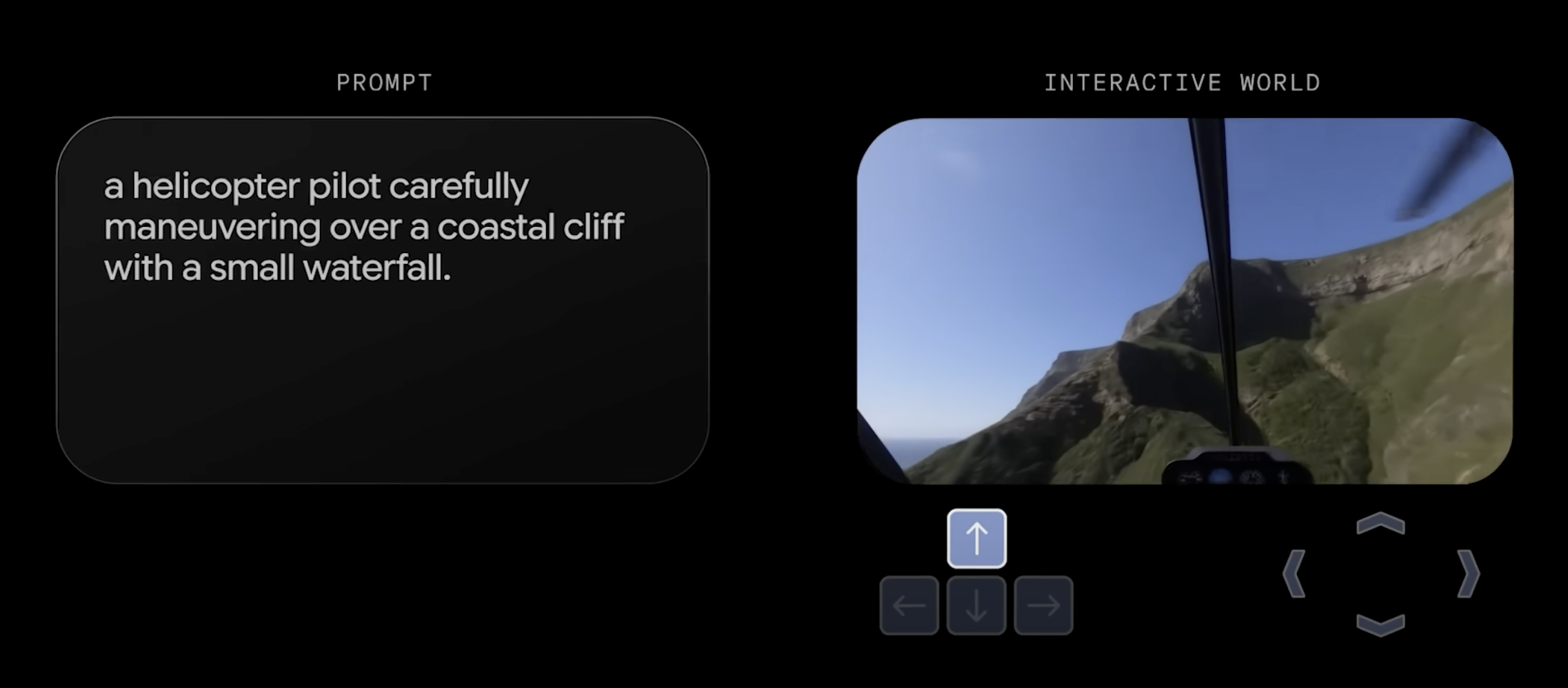

Imagine crafting an immersive 3D world that lasts for minutes, all from a single sentence.

The large-model community has been buzzing over the past two weeks. Last week, Alibaba stepped up its investment in the Qwen series, releasing several open-source models. This week, OpenAI followed suit: on August 6, Beijing time, it released gpt-oss, making the weights of one of its language models openly available for the first time in more than five years and pushing open-source model competition to new heights.

The timing is strategic. Both Alibaba and OpenAI are vying for supremacy in large language models (LLMs), improving dialogue, reasoning, and text generation with small open-source models and competing for developers and ecosystems with more open strategies. Google, however, chose to drop a "depth charge" in a different lane on the same day: the general-purpose world model Genie 3.

The concept of world models briefly gained traction last year when OpenAI released Sora. Many viewed video generation models like Sora as the sole path to world models and a way to accelerate the arrival of AGI. But the fervor quickly waned: Sora-like models (including Google's Veo 3) ultimately produced only videos. They lacked navigable, interactive environment logic and failed to foster an iterative ecosystem, and the "world models" discussion went quiet.

The advent of Genie 3 is more than just a name change.

Architecturally designed for real-time interaction, Genie 3 can generate a 3D world lasting several minutes from a text prompt. It supports character movement, object manipulation, weather changes, and other events, and its consistent visual memory gives the virtual world "spatial coherence." Importantly, this world model is not merely a content generator for showing off technical skill but a natural environment for training AI agents:

It offers a controllable, low-cost, and repeatable simulation environment where agents can learn decision-making and actions without relying on costly and risky real-world testing scenarios.

From these perspectives, Leitech believes that the launch of Genie 3 could not only signify a technical breakthrough but also herald the next round of AI competition—this time, with the battleground shifting to AI-generated worlds.

To understand the significance of Genie 3, compare it with two familiar categories: Sora, last year's breakout video generation model, and Hunyuan 3D, which has been iterating steadily in the field of 3D generation.

Sora sparked heated debates last year for a simple reason: it could generate short videos up to one minute long, with rich details, smooth camera transitions, and light, shadow, and material qualities akin to real films. However, its core remains video generation—the output is fixed from start to finish, and users cannot enter the video or influence its direction. Sora-like models ultimately lack "controllable interaction"; you cannot make the characters turn to look at you, nor can you introduce a sudden rainstorm or move a chair.

In other words, Sora gives you a completed film, not a world to explore. Genie 3's design goal is the opposite, closer to that of a game engine such as UE5.

Clip compressed and accelerated 2x, Image/ Google

Generated with one prompt, Image/ Google

Genie 3 generates navigable, interactive virtual physical environments and supports real-time rendering at 720p for several minutes. It also remembers environmental details: when you leave a room and return, the paint on the wall is still there and the book on the table is still open as you left it.

Clip compressed and accelerated 2x, Image/ Google

According to Google DeepMind, a Genie 3 environment remains highly consistent for several minutes, with visual memory reaching back as far as one minute. This visual memory mechanism is one of Genie 3's core strengths, giving the generated world "spatial coherence" and making interaction immersive.

Furthermore, Genie 3 supports promptable world events, so the world can be changed on the fly with new prompts: you can switch between sunny and rainy weather, add a cat, or replace a bear with a person on horseback, and these changes persist in the world. It can not only "generate" but also "update," opening up open-ended exploration and game-like interaction.

Clip compressed and accelerated 2x, Image/ Google

Clip compressed and accelerated 2x, Image/ Google

Tencent's Hunyuan 3D series is known for quality and speed in 3D asset generation. The latest release, Hunyuan3D-PolyGen, came out in July and offers more precise mesh topology and richer detail. It supports both triangular and quadrilateral faces, which makes its output easier to import into game engines or 3D rendering pipelines. The strength of this kind of model lies in rich detail and precise texturing, which suits asset production, animation, and industrial design, but it generates only static 3D objects.

Model generated by Hunyuan 3D, Image/ Tencent

Genie 3, on the other hand, takes a different approach. Instead of generating isolated models, it constructs a dynamically running physical environment that serves as a sandbox for training AI agents: robots can test path planning, self-driving cars can simulate obstacle avoidance, and game NPCs can practice dialogue and task logic.

Critically, this environment is repeatable, controllable, and low-cost, eliminating physical limitations and safety risks of real-world scenarios.

However, the Google DeepMind team also acknowledges Genie 3's current limitations. Although the world can be modified while it runs, the range of actions an agent can perform is still limited. Interactive agent training also poses technical challenges, and complex interactions among multiple agents remain unsolved. For now, Genie 3 is more promise than finished product.

Considering that Genie 1 only supported 2D interaction upon release and Genie 2, launched at the end of last year, supported 3D interaction for up to 20 seconds, Genie 3's emergence is undoubtedly a significant leap forward, reflecting rapid progress in world models.

The term "world model" gained prominence in the AI community in early 2024. OpenAI's video generation model, Sora, not only amazed the audience with its technical demonstration but was also interpreted by insiders as the "prelude" to world models. The reason is simple: it could generate long videos with a degree of physical consistency, seemingly preparing for future interactive virtual environments.

During that period, numerous analyses and reports claimed that "world models are the only path to achieving artificial general intelligence (AGI)." It was widely believed that AI would first learn everything in virtual worlds before moving to reality, and the fervor even overshadowed news of large language model upgrades.

This is the backdrop for Genie 3's debut: a field with immense technical potential but declining attention and resource support. What sets it apart is that it moves beyond the "video generation" stage into the realm of "interactive 3D worlds." Continuous rendering lasting several minutes, controllable event triggering, and consistent visual memory directly address thresholds the technology struggled to cross over the past year.

So, will Genie 3 be the turning point for world models, shifting them from "cold" to "hot"?

Clip compressed and accelerated 2x, Image/ Google

On the positive side, it at least provides a tangible example: world models are not mere hypothetical constructs in research papers but can exist as product prototypes serving specific tasks—whether it's agent training, virtual simulation, or future immersive content creation. This offers the industry new narrative material and may attract capital to reassess the commercial potential of this direction.

However, to ignite real competition, several conditions are necessary:

First, more participants must enter the field, so world models are not just a Google experiment.

Second, an open or semi-open ecosystem is required, enabling external developers to build applications based on the model, driving iteration.

Third, clear deployment scenarios must be found, even if they are high-value applications in niche markets, so that technical validation and a self-sustaining business model can reinforce each other.

What is certain is that Genie 3 has once again placed "world models" at the center of technological discourse. Will world models quickly become contested ground among competing camps? Or will they, as happened after Sora, fade into silence after a brief surge? This depends not only on the speed of technological iteration but also on whether the entire AI industry is ready to embrace a new main battlefield.

From Alibaba's and OpenAI's successive moves in the language model lane to Google opening another door to the future with Genie 3, AI industry competition in recent weeks has resembled a multi-front tug-of-war. Where the LLM contest is about model capabilities and open-source strategies, Genie 3 focuses on building "interactive worlds," taking a crucial step toward making world models usable with continuous rendering lasting several minutes, controllable events, and visual memory.

It may not immediately spark a new industrial boom, but it at least proves that world models have entered a new stage, offering new possibilities for agent training, virtual simulation, and even immersive content creation. Whether it can attract more participants, form an open ecosystem, and find clear deployment scenarios will determine whether this track sees only a brief resurgence or truly heads toward prosperity.

The race for world models has just begun.

Source: Leitech

Images in this article are from: 123RF Stock Photo Library