GPT-5 Makes Its Debut: Is the "PhD-Level Expert" Truly an Agent?

08/11 2025

“It’s not a giant step, but a steady footfall on a new rung.”

By Wang Xian

Produced by JiXin

At the launch event, OpenAI CEO Sam Altman described GPT-5 as a “PhD-level expert on standby” and suggested that “software generated on demand” will become the core capability of this generation of models.

Perhaps GPT-5 is no longer merely a stronger language model but a pivotal node on the path to a general Agent.

01 New Technical Highlights

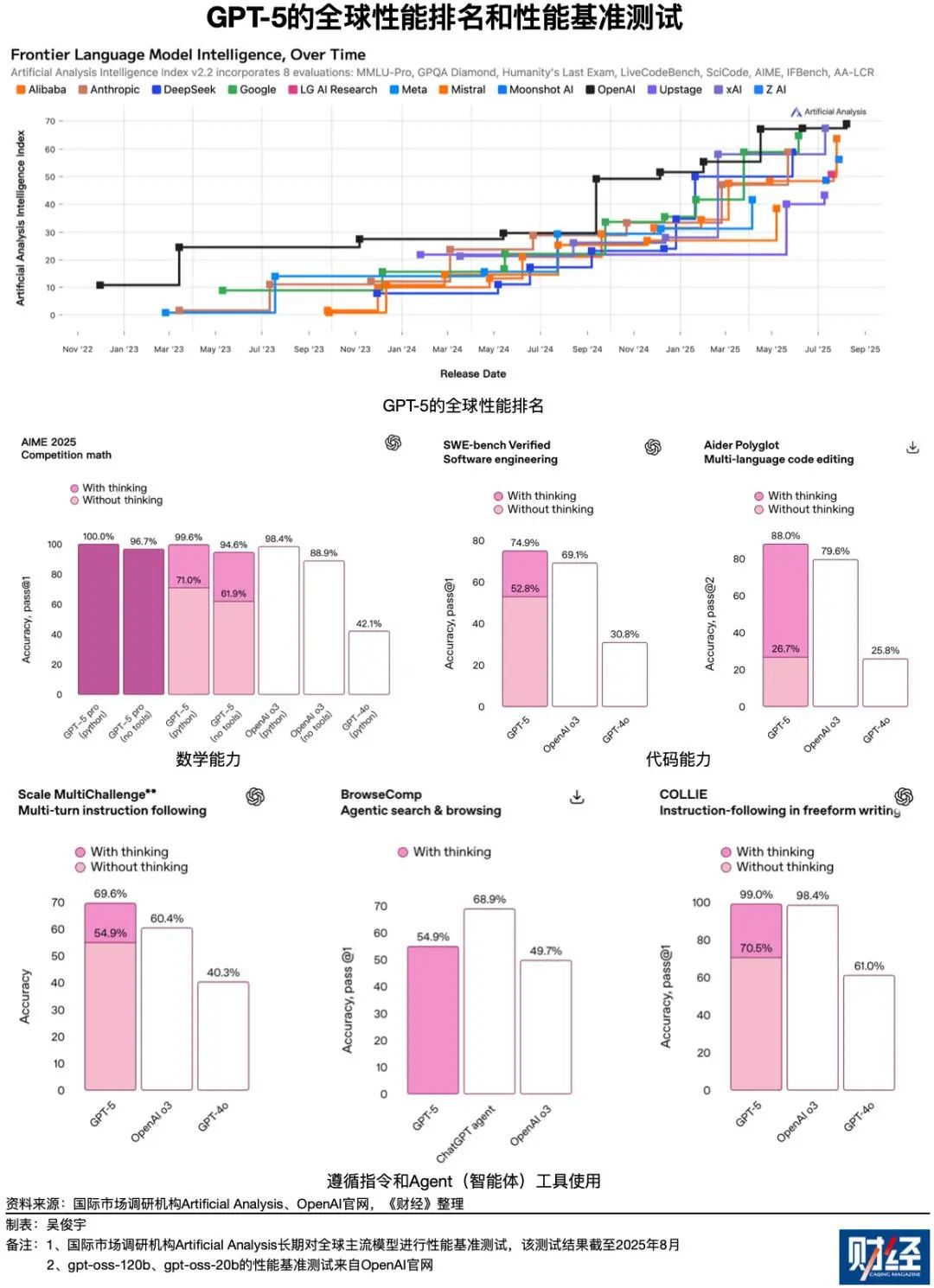

While GPT-5's incremental improvements have yet to meet the high expectations for “general intelligence,” the model demonstrates significant progress in performance stability, reasoning depth, and tool invocation.

Keyword 1: Model Matrix

Instead of offering a single model, OpenAI has launched multiple versions, such as GPT-5 Standard, GPT-5 Mini, and GPT-5 Nano, optimized for different user scenarios.

Architecturally, GPT-5 employs a unified multi-model system: an efficient base model, a deep reasoning module, and a real-time router. The router automatically decides which model to invoke based on the complexity of the user's query. With this dynamic scheduling, users no longer need to switch between models manually, and GPT-5 can answer concisely or reason in depth as the problem requires. An OpenAI executive noted that the goal is to simplify the user experience while ensuring consistency, replacing the previous split between GPT-4 and separate models such as DALL-E and Whisper with a “one-stop” GPT-5.
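To make the routing idea concrete, here is a minimal sketch of a complexity-based router. It is not OpenAI's implementation: the model names, the cue words, and the threshold are all assumptions chosen for illustration, and a production router would presumably rely on learned signals rather than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical model names; the article does not name the router's real targets.
FAST_MODEL = "fast-base-model"
REASONING_MODEL = "deep-reasoning-model"

# Illustrative cue phrases that hint at a multi-step problem (an assumption).
COMPLEXITY_CUES = ("prove", "debug", "step by step", "analyze", "optimize")


@dataclass
class Route:
    model: str
    score: float


def complexity_score(query: str) -> float:
    """Toy heuristic: longer queries and reasoning cues raise the score."""
    text = query.lower()
    length_part = min(len(text.split()) / 100.0, 1.0)
    cue_part = sum(cue in text for cue in COMPLEXITY_CUES) / len(COMPLEXITY_CUES)
    return 0.5 * length_part + 0.5 * cue_part


def route(query: str, threshold: float = 0.1) -> Route:
    """Send the query to the reasoning model only when the score is high enough."""
    score = complexity_score(query)
    model = REASONING_MODEL if score >= threshold else FAST_MODEL
    return Route(model=model, score=score)
```

Calling `route("What is the capital of France?")` selects the fast model, while a request such as "Please debug this function and analyze why it fails step by step" crosses the threshold and is sent to the reasoning model. The caller never chooses a model, which is the point of the design.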

Moreover, its pricing has earned it the nickname “price butcher”: by some counts it reportedly costs only about one-fifteenth as much as Anthropic's latest Claude Opus 4.1 model. This could spark a new round of price competition that makes AI more affordable.

Keyword 2: Significant Increase in Context Window

GPT-5 supports a context length of up to 400,000 tokens, made up of 272,000 input tokens and 128,000 output tokens. This far surpasses GPT-4's default 8K-32K window, although it remains below the 1 million token context reported for Gemini. The long context allows GPT-5 to handle very long documents and complex multi-round conversations, and to reason across files and sessions.
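The arithmetic behind these limits can be sketched as a simple budget check. The figures below are the ones quoted in this article, not verified against official documentation, and the helper names are hypothetical.

```python
# Limits as reported in the article; treat them as quoted figures.
MAX_INPUT_TOKENS = 272_000
MAX_OUTPUT_TOKENS = 128_000
CONTEXT_WINDOW = MAX_INPUT_TOKENS + MAX_OUTPUT_TOKENS  # 400_000 in total


def fits_in_window(input_tokens: int, requested_output_tokens: int) -> bool:
    """Check a request against the separate input and output limits."""
    return (
        0 <= input_tokens <= MAX_INPUT_TOKENS
        and 0 <= requested_output_tokens <= MAX_OUTPUT_TOKENS
    )


def split_into_chunks(total_tokens: int, chunk_limit: int = MAX_INPUT_TOKENS) -> list[int]:
    """Return chunk sizes that cover total_tokens, each within chunk_limit."""
    if total_tokens < 0 or chunk_limit <= 0:
        raise ValueError("total_tokens must be >= 0 and chunk_limit must be > 0")
    full, rest = divmod(total_tokens, chunk_limit)
    return [chunk_limit] * full + ([rest] if rest else [])
```

A 600,000-token document, for example, does not fit in one request under these limits and would need to be split into three chunks.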

Simultaneously, GPT-5 possesses comprehensive multimodal capabilities: text, images, speech, and possibly even video can all be understood and generated under the same interface. Users no longer need to switch between different AI services; one GPT-5 can handle both visual and auditory information.

Keyword 3: More Intelligent Reasoning Strategies

Beyond visible capability improvements, GPT-5 is also smarter in implicit reasoning strategies.

OpenAI explained that GPT-5 can adjust the intensity of its reasoning on demand: it produces results quickly for simple tasks and autonomously enters a “deep thinking” mode when it meets complex problems. In code debugging, for instance, GPT-5 can first attempt a quick fix and, if that fails, gradually deepen its analysis, which avoids wasting compute by running at full capacity every time. This adaptive scheduling is attributed to the built-in routing module and optimized chain-of-thought reasoning, which let the model balance speed and accuracy. Combined with OpenAI's claimed reduction in hallucination rate (a 45% decrease in erroneous generation) and closer adherence to instructions, GPT-5 raises the reliability of AI assistants to a new level.
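The “quick fix first, deeper analysis only if needed” pattern can be sketched as an escalation loop. This is an illustration of the idea, not GPT-5's internal mechanism: the effort labels are assumptions, and `solver` and `checker` are hypothetical callables supplied by the caller (for example, a model call and a test run).

```python
from typing import Callable, Optional

# Effort labels are illustrative, ordered from cheapest to most expensive.
EFFORT_LEVELS = ("minimal", "medium", "high")

Solver = Callable[[str, str], str]   # (task, effort) -> candidate answer
Checker = Callable[[str], bool]      # candidate answer -> does it pass?


def solve_with_escalation(
    task: str, solver: Solver, checker: Checker
) -> tuple[Optional[str], list[str]]:
    """Try the cheapest effort first and escalate only when the check fails."""
    attempts: list[str] = []
    for effort in EFFORT_LEVELS:
        candidate = solver(task, effort)
        attempts.append(effort)
        if checker(candidate):
            return candidate, attempts
    return None, attempts  # every level failed


def fake_solver(task: str, effort: str) -> str:
    """Stand-in for a model call: only non-minimal effort 'fixes' the bug."""
    return "fixed" if effort != "minimal" else "still broken"
```

With the stand-in solver, `solve_with_escalation("bug", fake_solver, lambda c: c == "fixed")` stops at the second level and never pays for the most expensive one.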

02 How Far is it from a True AI Agent?

At the launch event, Altman downplayed the concept of AGI, but there is broad agreement in the industry that GPT-5 already resembles a prototype of an early-stage Agent: it possesses key Agent elements such as chain-of-thought reasoning, multi-step execution, and tool invocation.

However, to determine whether GPT-5 has truly become an intelligent Agent, we must examine its capability boundaries and shortcomings.

In terms of capabilities, GPT-5 has made significant strides in autonomy and continuous reasoning compared with previous generations. OpenAI has specifically tuned the model's autonomous decision-making, collaborative communication, and testing capabilities, making GPT-5 more proactive on complex tasks. As a coding assistant, for example, GPT-5 can work continuously for several minutes, invoking multiple tools to complete a complex programming instruction while proactively reporting its plans, steps, and status. This brings the vision of “one GPT, one person, one company” within reach.

Previous models typically answered one question at a time and waited passively for instructions, whereas GPT-5 suggests the next step between tool invocations without requiring users to confirm every detail. This Agent-like chained reasoning and autonomous execution significantly improve its performance in complex scenarios. Tests show that GPT-5's deep reasoning module (GPT-5-thinking) can solve complex problems with fewer tokens, reducing step overhead by 50%-80% compared with previous models. This indicates that GPT-5 has begun to acquire the ability to plan and optimize the execution of long-chain tasks.

More importantly, there has been a leap in tool usage. OpenAI reports that GPT-5 scored as high as 97% on τ²-bench, a strictly evaluated multi-tool usage benchmark, while all previous models scored below 49%. GPT-5 can reliably chain multiple operations to complete real-world tasks. In a customer service scenario, for example, it can hold a dialogue with the user while querying a database and taking follow-up actions based on real-time status, setting records for adherence to tool instructions and for error handling. These results suggest that GPT-5 has mastered a considerable degree of Agent-style tool scheduling and environment interaction, taking another step towards a general intelligent agent.
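The customer service example can be sketched as a small tool chain: query a status, then pick a follow-up action based on the result. Everything here is hypothetical, including the in-memory order table and the tool names, and the branching is hand-written where an Agent would let the model decide which tool to call next.

```python
from typing import Callable

# Hypothetical in-memory "database" standing in for a real order system.
ORDERS = {"A100": "shipped", "A200": "delayed"}


def query_order_status(order_id: str) -> str:
    return ORDERS.get(order_id, "unknown")


def issue_refund(order_id: str) -> str:
    return f"refund issued for {order_id}"


def send_tracking_link(order_id: str) -> str:
    return f"tracking link sent for {order_id}"


# Registry of callable tools, keyed by the name the agent would request.
TOOLS: dict[str, Callable[[str], str]] = {
    "query_order_status": query_order_status,
    "issue_refund": issue_refund,
    "send_tracking_link": send_tracking_link,
}


def handle_request(order_id: str) -> list[str]:
    """Chain tool calls: look up the status, then choose a follow-up action."""
    log: list[str] = []
    status = TOOLS["query_order_status"](order_id)
    log.append(f"query_order_status -> {status}")
    if status == "delayed":
        next_tool = "issue_refund"
    elif status == "shipped":
        next_tool = "send_tracking_link"
    else:
        # Error handling: unknown orders are handed off instead of guessed at.
        log.append("escalate to human agent")
        return log
    log.append(f"{next_tool} -> {TOOLS[next_tool](order_id)}")
    return log
```

The returned log makes each step auditable, which matters when a benchmark scores both the final outcome and whether the right tools were called in the right order.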

However, GPT-5 still has critical gaps from being a truly autonomous intelligent Agent.

OpenAI itself acknowledges that GPT-5 still has significant limitations in persistent memory, autonomy, and cross-task adaptability. It cannot accumulate long-term memory the way humans do: once a conversation exceeds the 400K context, its “memory” of older interactions is lost unless external databases or memory modules step in.
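One common workaround is to move evicted context into an external store and look it up later. The sketch below illustrates that idea under simplifying assumptions: tokens are approximated by whitespace-separated words, recall is a plain keyword match, and the class name is hypothetical. Real memory modules typically use a proper tokenizer and embedding-based retrieval.

```python
from collections import deque


class RollingContext:
    """Keeps recent messages in a bounded window and archives the rest."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.window: deque[tuple[str, int]] = deque()  # (text, token count)
        self.archive: list[str] = []                   # stand-in for an external store
        self.used = 0

    @staticmethod
    def count_tokens(text: str) -> int:
        # Crude stand-in for a tokenizer: one token per word.
        return len(text.split())

    def add(self, text: str) -> None:
        tokens = self.count_tokens(text)
        self.window.append((text, tokens))
        self.used += tokens
        # Evict the oldest messages until the window fits again,
        # always keeping at least the newest message.
        while self.used > self.max_tokens and len(self.window) > 1:
            old_text, old_tokens = self.window.popleft()
            self.archive.append(old_text)
            self.used -= old_tokens

    def recall(self, keyword: str) -> list[str]:
        """Return archived messages that mention the keyword."""
        return [t for t in self.archive if keyword.lower() in t.lower()]
```

With a five-token window, adding "my name is Ada" and then "I like tea" pushes the first message out of the window, but `recall("ada")` can still retrieve it from the archive.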

In terms of autonomy, although GPT-5 is more proactive, it ultimately still follows human prompts and cannot set new goals or initiate tasks on its own. It executes multi-step processes more smoothly, but it lacks truly creative responses to new scenarios it was not explicitly instructed on, and it still falls short of simulating human intelligence. Some tests bear this out: on the ARC Prize benchmark, often billed as the ultimate challenge for AGI, GPT-5 performed far worse than its rival Grok-4 and was even “easily identified by human experts.” An OpenAI spokesperson also emphasized that GPT-5's new features are primarily improvements to existing ones and do not fully address the autonomy issue.

Even so, in the era of Agents, GPT-5 may be just one step away from the goal.

03 OpenAI's Next Battle

Behind OpenAI's simultaneous release of five models lies its ambition to build a platform architecture resembling an “AI Operating System.”

GPT-5 is no longer a single model but a “model matrix” of multiple specifications that can dynamically invoke either “deep thinking” or efficient lightweight models depending on task complexity. “The big bet of GPT-5 lies in unification,” said Sam Altman. Where GPT-4 required manual switching, GPT-5 routes requests automatically, and this architectural upgrade is what “unification” means in practice. The concurrently launched GPT-5-mini and GPT-5-nano provide 400K context and multimodal capabilities at a lower cost, and OpenAI is attempting to cover the full range of intelligence needs through a combination of high-end and low-end specifications.

If the multi-model matrix is the core of the “AI Operating System,” then the surrounding ecosystem that OpenAI is building is the “framework and interface” of the operating system. Its Assistants API allows developers to build custom GPT assistants, turning the model into an intelligent Agent that can be embedded in any application; ChatGPT plugins act as extension tools, providing GPT with the ability to invoke external services and real-time data, similar to an app store in the AI world; and the custom model interface means that developers can access their own models or custom versions, connecting with the OpenAI platform.

Sam Altman's vision is even more straightforward. He has stated that OpenAI's goal is not to become a winner in a single application but to “become the layer on which everything else is built.” In other words, OpenAI hopes to act as the underlying platform of the AI era, allowing other applications to be built on its “AI Operating System.”

To this end, OpenAI is constantly enriching its platform components, from the latest GPT-5 model matrix to the plugin system, the Assistants API, and open model releases.

On one hand, ChatGPT has evolved from a simple chatbot into an “AI Swiss Army Knife” that integrates search, plugin tools, and more. On the other hand, OpenAI has begun to loosen its closed strategy by releasing the high-performance gpt-oss series, its first large models with open weights since GPT-2, which developers can download, customize, and run offline for free.

This is seen as a crucial step for OpenAI towards building an ecosystem: by handing over its models to “more people” through the Apache 2.0 open-source license, it aims to attract developers to deeply participate and consolidate its platform foundation.

Echoes of History: Closed Rise or Fragmented Openness

The competition among large models reminds one of many “fated confrontations” in the history of technology.

In the smartphone era, Apple leveraged its closed hardware and software integration to provide users with an excellent experience and a highly sticky ecosystem, establishing a solid profit barrier; while Google-led Android was open-sourced to numerous vendors, winning market share but at the cost of fragmentation and mixed ecosystem quality.

A similar plot is unfolding in the AI field. OpenAI's approach resembles that of Apple in its earlier days: it safeguards user experience through the top-performing GPT-5 model and its own platform, trading closedness for quality and commercial returns. The open-source advocacy of Anthropic and Meta, by contrast, resembles the Android camp: it aims to unite the majority and expand rapidly, allowing “AI citizens” to flourish everywhere, but it also faces the challenge of governing numerous versions and standards.

Past experiences have shown that closed ecosystems often rise rapidly in the early stages with superior experiences, while open ecosystems later surpass them due to their scale and low barriers to entry. Will the battle for AI operating systems replay this scenario? Or will a third path emerge? These are questions of great interest.

In the cloud computing field, Amazon AWS started with IaaS, but what truly made it unshakable was a series of PaaS products: once developers adopt AWS-managed services such as databases, message queues, and serverless functions, they are firmly tied to the AWS ecosystem. Similarly, OpenAI is clearly evolving from “providing model computing power” to “providing complete platform services.”

Some once called AWS a “new operating system” because applications are built directly on its APIs without awareness of the underlying servers. Today, isn't OpenAI creating a new operating system for the AI era? Developers call OpenAI's interfaces, and the models, computing power, and even plugin ecosystems behind them are packaged and provided by OpenAI. If AWS dominates the interfaces to cloud infrastructure, then OpenAI is attempting to dominate the interfaces to the AI intelligence layer.

It's worth noting that the growth of the AWS ecosystem relied not on open source but on ease of use and first-mover advantage, which together formed de facto standards. OpenAI's strategy is similar: capture market mindshare early and make GPT APIs and plugins the default choice for developers, so that even if later competitors open-source their code or cut prices, its ecosystem position will be hard to dislodge.

Of course, historical analogies are not prophecies. The mobile ecosystem ultimately saw two giants coexist, and in cloud computing, latecomers like Microsoft Azure and Google Cloud have also carved out their own places.

The current AI platform battle landscape is more complex: giant alliances and competitions intertwine, and the boundaries between openness and closedness are increasingly blurred. Perhaps the future AI world will not simply replicate the outcome of a past battle, but the underlying logic of business and technological evolution is strikingly similar: user experience, developer ecosystem, and standard control—these three elements always determine the direction of platform wars.

Is OpenAI building an “operating system” for artificial intelligence, or does it have even more ambitious plans to define the future of the entire AI technology stack, cloud services, and application paradigms? This “models as platforms, interfaces as boundaries” battle among titans has only just begun, and the answer will be revealed only with time.

The next chapter in the AI world is worth waiting for.