Tesla Pivots AI Strategy: Focus on AI5/AI6 Chips

![]() 08/14 2025

08/14 2025

![]() 684

684

Produced by Zhineng Zhixin

Tesla is embarking on a new chapter in AI chip and hardware development. The pioneering automaker, renowned for its in-house hardware innovations, has disbanded its Dojo supercomputer R&D department, redirecting its core computing resources towards two new generations of proprietary chips: AI5 and AI6.

Shifting away from the 'training-centric' approach of Dojo, Tesla's new strategy revolves around these chips to build an integrated distributed computing platform that supports both inference and training. This platform places the inference cluster at the heart of Full Self-Driving (FSD) research and development.

This transformation reflects a fundamental shift in Tesla's autonomous driving training system, moving from reliance on real road data to leveraging synthetic data generated by world models.

By concurrently running world models in the cloud, Tesla can mass-produce synthetic driving data that covers extreme and long-tail scenarios. This synthetic data, combined with real data, enables rapid training and iteration of deployable FSD models in vehicles, reducing the cost and cycle of data collection and annotation. Additionally, it gives Tesla greater control over safety and system manageability.

Part 1: AI Chip Strategy Adjustment and Hardware Architecture Evolution

Since its inception, Tesla's Dojo project has been a significant milestone in the company's pursuit of autonomous driving and AI computing autonomy. Initially designed to create a proprietary supercomputing platform for large-scale neural network training, Dojo aimed to reduce dependence on external GPU vendors and provide a continuous, rapidly iterative training environment for FSD models.

However, as FSD research and development evolved and the importance of synthetic data generation grew, Tesla recognized that the original Dojo approach had issues with redundancy and resource fragmentation.

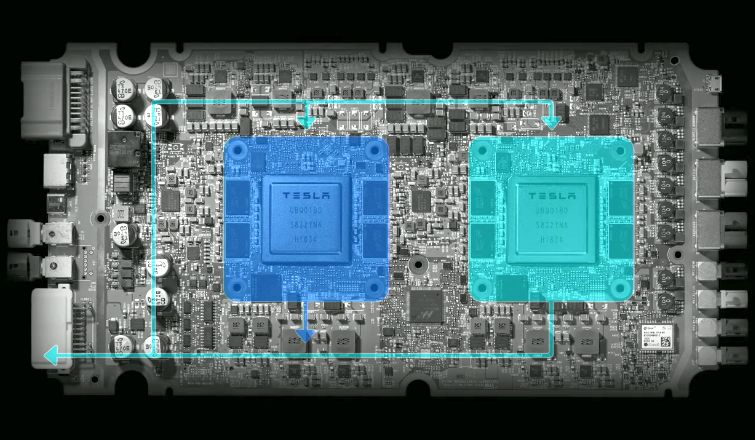

The closure of the Dojo supercomputer R&D department does not signal an end to Tesla's commitment to in-house AI hardware but rather a refocusing of core resources on the AI5 and AI6 chips. Both chips, designed by Tesla and manufactured by TSMC, possess dual capabilities for inference and training.

In a recent statement, Musk emphasized that, rather than developing multiple product lines concurrently, it is more effective to modularize the computing power of AI5 and AI6 through architectural, bandwidth, and packaging optimizations to meet the diverse computing needs of scenarios ranging from vehicles to the cloud.

Tesla's hardware architecture now includes a flexible expansion mode: a single AI5 or AI6 chip can handle inference tasks at the vehicle or robot end, while multiple chips connected in parallel (up to 512) can form a high-bandwidth inference cluster capable of handling world model operations and large-scale synthetic data generation.

This solution, dubbed 'Dojo 3', is no longer a single training supercomputer but a distributed computing platform driven by inference that also supports training.

The AI5 and AI6 chips emphasize a balance between transistor density and data channel bandwidth. Each chip contains tens of billions of transistors, spans 645 square millimeters, and uses modular packaging to simplify thermal management and power distribution.

In terms of production, Samsung may undertake some manufacturing tasks for AI6, while Intel is rumored to participate in chip packaging, creating a collaborative model involving TSMC, Samsung, and Intel. This diversified supply chain mitigates the risk of relying on a single foundry and gives Tesla greater control over production capacity and costs.

Despite this shift, Tesla continues to utilize external computing resources, leveraging NVIDIA GPU clusters for world model training, which complements its internal AI5/AI6 inference clusters.

NVIDIA retains a mature software ecosystem and performance advantages in ultra-large-scale training scenarios, while Tesla's proprietary chips offer higher energy efficiency ratios for specific inference tasks.

This hybrid architecture provides Tesla's computing platform with both compatibility and flexibility, allowing the company to maintain technological autonomy while leveraging the stability of external mature resources.

Part 2: Reconstructing the FSD Training System with Synthetic Data

The core impetus behind Tesla's strategic adjustment extends beyond hardware design optimization and is closely tied to a fundamental change in its FSD model training approach.

Previously, FSD training heavily relied on real road data collected from Tesla's fleet. While this approach ensured data authenticity and diversity, it struggled to cover extreme scenarios, long-tail distributions, and high-risk driving situations. Furthermore, the cost of collecting and annotating real data was high, and iteration cycles were constrained by real-world conditions.

Tesla is now shifting its focus to synthetic data generated by world models.

A world model is an ultra-large-scale AI model trained in the cloud, capable of simulating roads, traffic participants, and various weather and lighting conditions in a virtual environment. By performing inference on this model, Tesla can rapidly generate a vast amount of synthetic driving data with diverse distributions and high controllability. This synthetic data is then combined with real road data to train new FSD models.

This strategy has significantly increased the role of inference in FSD model training:

◎ Traditionally, inference primarily occurred on the vehicle side, where the model was used for actual driving decisions after training.

◎ Now, a significant portion of inference tasks have shifted to the cloud for world model operations, focusing on synthetic data generation.

This transition requires the cloud inference system to possess extremely high bandwidth and low latency capabilities to support the parallel generation of thousands of scenarios. The AI5/AI6 inference cluster (i.e., Dojo 3) perfectly meets these requirements.

Under the new training system, Tesla's AI workflow is broadly divided into three levels:

◎ First, NVIDIA GPU clusters are used to train ultra-large-parameter world models. These models do not directly deploy to vehicles but serve as synthetic data generation engines.

◎ Second, the world model runs through the AI5/AI6 inference cluster to generate synthetic data covering a wide range of scenarios through large-scale parallel computing. These data can flexibly adjust parameters, such as adding rainy and snowy weather, night driving, special traffic events, and other long-tail scenarios.

◎ Finally, the synthetic data is combined with real road data to train a small-parameter FSD model deployable on vehicles, enabling rapid iterations and feedback updates within the fleet.

The use of synthetic data offers advantages in efficiency, controllability, and safety. Many dangerous or hard-to-reproduce real-world scenarios can be infinitely simulated in the virtual world, enhancing the model's response capability without increasing real-world risks.

This approach is gaining traction in the AI field, with models like Grok 4 and GPT-5 adopting similar strategies, incorporating synthetic data as a crucial part of their training sets.

Summary

Tesla's concurrent shift in hardware and data strategies has created a new closed loop for its FSD research and development model: GPU clusters train the world model, the AI5/AI6 inference cluster generates synthetic data in batches, and the final lightweight model is deployed to millions of vehicles for validation and optimization. This architecture not only leverages the mature ecosystem of NVIDIA GPUs but also grants Tesla autonomy over computing power in key areas through its proprietary chips.

In an industry exploring the fusion of autonomous driving and general artificial intelligence, Tesla's approach offers a reference path. It demonstrates that inference and training are no longer distinct, and real and synthetic data are no longer opposed but highly coupled in hardware, algorithms, and production processes. This may well be the mainstream direction of AI competition in the coming era.