Beijing's Emerging AI Unicorn: Revolutionizing the Film Industry with AI Movie Studio

08/19 2025

Interview | Pencil News Song Ge, Xin Xiao

Written by | Pencil News Song Ge

Imagine a scenario where you describe a scene to AI, and it generates a cinematic-quality video for you – no cameras, actors, or film crew required. Just a few conversations with AI.

This could be a groundbreaking opportunity for the fusion of AI and movies.

Recently, a Beijing-based AI+film unicorn has emerged.

Liang Wei, a 16-year veteran of the film industry, has witnessed numerous "impossible missions." Now, he's rebooting his business with AI. "It's like giving every ordinary person a movie studio," Liang Wei describes his startup project.

His upcoming product, Movie Flow, aims to do for the film industry what "Meitu Xiuxiu" does for photo editing: compressing months of work by hundreds of people into "one-click generation."

Liang Wei reveals that Movie Flow's upcoming tool+community platform could become the next "film version of Xiaohongshu." Here, ordinary people's micro-films can gain traction and revenue, while professional directors can discover talent.

Movie Flow integrates the world's top AI video models (including OpenAI, Google, etc.), and the generated footage is nearly indistinguishable from the real thing. However, controversy ensues: will traditional special effects companies disappear? Who owns the copyright of AI-generated content?

"In the next three years, the film industry will undergo more changes than it has in the past 30 years," predicts Liang Wei. Read Pencil News' interview with MovieFlow CEO Liang Wei for more insights.

1. What is the most noteworthy new opportunity in film technology?

Traditional film technology has stagnated, and AI's core value is cost reduction. Only by reducing costs can production be sustainable. Tools help professionals cut costs in shooting, visual effects, and other aspects.

2. What sets Movie Flow apart from other video-generating AIs?

It focuses on narrative video generation while building a creator community. Tools are tied to the community to solve work exposure and cooperation issues.

3. Can AI-generated content be identified?

Current content is already hard to distinguish from the real thing and will become even harder in the future. Movie Flow integrates top global models (OpenAI, Google, etc.) and enables "one-click video production" through agent scheduling.

4. What is the tipping point for AI+film?

It will first explode on the consumer end, possibly next year: video generation capabilities will take another leap forward.

Disclaimer: The interviewee has confirmed the article's information as true and accurate, and Pencil News endorses its content.

- 01 - The Biggest Opportunity for AI+Film

Pencil News: Is your move into AI video software related to your personal experience?

Liang Wei: This is my second startup; the first was in traditional theatrical films.

I've worked in theatrical film for 16 years, from 2009 to 2024. We've done production, investment, marketing, distribution, and opened cinemas.

The opportunity arose last year when traditional film shooting and production became difficult, coupled with industry changes. I've always been interested in technology. Initially, I wanted to research whether AI could reduce animated film costs. We wanted to cut costs and shorten cycles while retaining copyright. Later, I found AI could not only reduce costs but also improve efficiency – potentially halving or even reducing costs to one-third with three to four times the efficiency.

So last year, I focused on AI and imaging, looking for a breakthrough. As an entrepreneur, I wanted a direction that excited me and had a profound impact. I finally settled on "tools + community" in May this year, and the product will launch soon.

Liang Wei, CEO of MovieFlow

Pencil News: In film technology, what's the most exciting new opportunity for you?

Liang Wei: Film technology's core is still film, especially helping the industry reduce costs. Frankly, under current AI and filming technologies, there's been no real innovation in film for a long time. The last was 3D movies like "Avatar," where everyone expected glasses-free 3D. But after 3D's popularization, pseudo-3D and 2D-to-3D conversions became more prevalent, and the viewing experience deteriorated. Glasses darken the screen, so now many IMAX theaters show 2D films, with 3D becoming less common, unless it's a special case like "Avatar".

In production, special effects technology has also reached its limits, and audience aesthetics have become fatigued. Traditional film technology has hit a bottleneck and won't have major breakthroughs in the short term unless there's disruptive technology, like truly usable glasses-free 3D.

Now, more changes are happening in stories and themes, but innovation here isn't easy either. Traditional films have long creation cycles, often taking several years to complete, and a work conceived a few years earlier rarely matches the social mood and audience psychology at the time of release. The result is a more centralized industry: resources are concentrated on big directors and actors, leaving fewer opportunities for newcomers.

In contrast, decentralization is the future trend. Using AI to build images and enabling everyone to unleash their productivity aligns with this broader direction, but traditional film is still highly centralized.

Globally, European films are more decentralized because they emphasize artistic expression and respect individual creativity. Chinese and Hollywood films are polarized: one end is big-budget, big-director, big-actor productions; the other is niche creations, with middle-tier works shrinking. Return on investment has also declined significantly. In the past, investing hundreds of millions often led to billions in box office receipts, but now it's difficult to reach even 500 million yuan, let alone 1 billion.

In summary, traditional film technology has stagnated, and there's been no breakthrough in viewing modes. No matter how many cinema screens there are, if there's no technological change, the status quo will only be maintained. The situation for long dramas is similar. On streaming platforms, the proportion of users who watch from the first episode to the last is extremely low, production costs are high, and returns are low, so the production model for long dramas is also under threat.

I believe AI's only role in film technology is to help reduce costs. Only by reducing costs can production be sustainable. Our future Movie Flow product will also serve professional filmmakers, customizing solutions for shooting, visual effects, and final production needs, helping them reduce costs.

Pencil News: How does your current product, Movie Flow, address this issue?

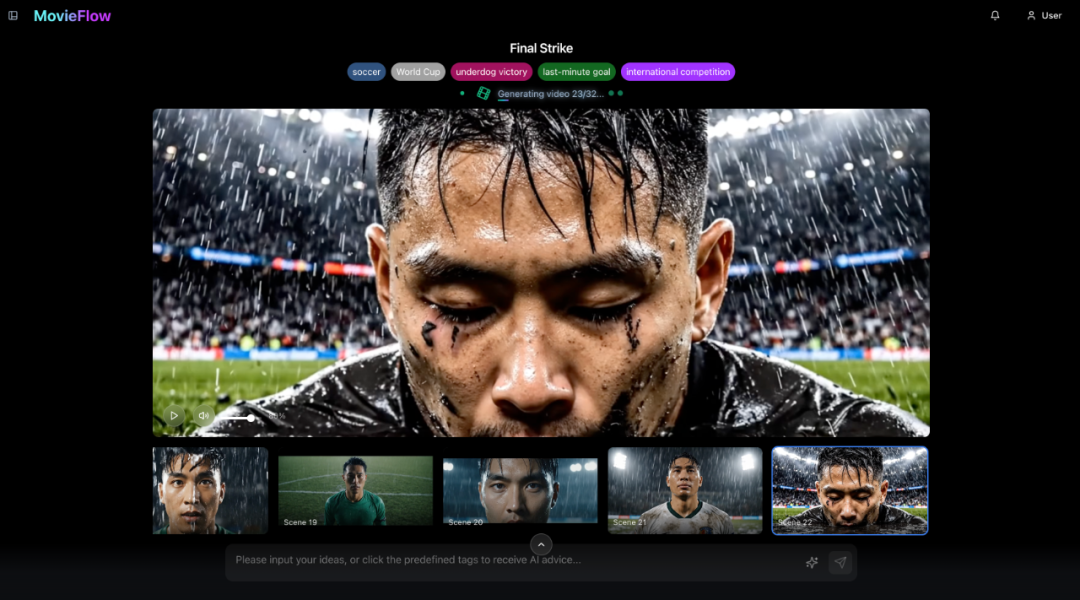

Liang Wei: We hope to create an AI tool that boosts video creation productivity. On one hand, it helps users quickly produce high-quality footage like popular image generation tools; on the other, we hope it generates dynamic videos – not just a few seconds of material, but structured, plot-driven videos.

Internally, we divide this tool into two directions:

First, it's a content production tool for individual creators, enabling them to realize their creative ideas faster and at a lower cost. For example, if someone has a short film script, they may have previously needed a team of photographers, actors, artists, post-production staff, etc., but now, through AI, they can produce it themselves in a short time.

Second, it's an auxiliary tool for professional film production. For example, it helps film teams quickly create storyboards and concept previews before shooting or enhances special effects and editing efficiency in post-production, reducing labor and time costs.

- 02 - Solution: AI Tools Plus Community?

Pencil News: How does it differ from current video-generating AIs on the market?

Liang Wei: Mainly in two ways. First, we're more focused on "narrative," not just generating a few seconds of material, but allowing AI to understand and output a story structure. Second, besides the tool, we'll build a community where creators can showcase, exchange, and be discovered, making it not just a tool but a creative ecosystem.

Pencil News: So the tool and community will be tied together?

Liang Wei: Yes. With just a tool, creators' works can easily get lost. A community helps works find an audience and partners, making the creative process more rewarding.

Pencil News: What's the customer buy-in rate? Can they tell it was made with AI?

Liang Wei: AI will become more realistic and harder to distinguish. What we make with Movie Flow now can't be identified as AI content when posted on any platform, and in the future, it will be even harder. This will, of course, bring ethical and moral issues: the line between real and fake will become increasingly blurred.

This isn't just Movie Flow; it's a trend across the entire large model field. We use the best models worldwide, both overseas and domestic, through a hybrid model invocation method. Training our own models comes next, and there we'll train small models for small problems. For video generation itself, we use the best readily available capabilities: video models from OpenAI, Google, and domestic providers such as Keling, Jimeng, and ByteDance. The footage will become increasingly realistic, which is the overall trend.

It's precisely because of this trend that products like Movie Flow can make "AI-produced imagery" accessible to ordinary people.

We package the entire film production process and "entire crew" into an agent, making it available to ordinary people, so they can "make movies" – movies in quotation marks, meaning cinematic-quality footage. Without AI, ordinary people were too far from traditional film shooting to even think about making movies; the entertainment industry was for spectators.

But imagine it becomes simple one day: on your phone, you tell Movie Flow a few sentences, and a one-minute video is produced in just over ten minutes. You don't need to be a screenwriter, editor, or photographer, nor write prompts or operate complex software. As long as you clearly express your ideas, we can produce something watchable, which you can then fine-tune according to your preferences.

The significance is like the transition from photo studios to smartphones. Before, you had to go to a photo studio to take pictures; now everyone uses an iPhone. That's Movie Flow's significance for films and ordinary people. Our engineering effort "schedules" large model capabilities to create a large Agent, which contains countless small Agents – we currently have 6: Screenwriting Agent, Directing Agent, Cinematography Agent, Editing Agent, Music Agent, and Personalization Agent.

They collectively support Movie Flow's large Agent. Ordinary people don't need to understand lighting, camera positions; they just need to express their emotions and intentions, and we'll recognize and help them shoot, edit, and present. The foundation lies in AI's video model capabilities reaching this point, to the extent of being "indistinguishable from the real thing." Taking another small step forward next year will further blur the line between real and fake.
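The "large Agent made of small Agents" arrangement described above can be pictured with a minimal sketch. Only the six role names come from the interview; the `Draft` structure, the stub functions, and the sequential execution order are assumptions made for illustration, not a description of how Movie Flow is actually built.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Draft:
    """Working state passed between sub-agents (hypothetical structure)."""
    idea: str
    notes: Dict[str, str] = field(default_factory=dict)


# Each sub-agent is a plain function here; a real one would call a model.
SubAgent = Callable[[Draft], str]


def make_stub(role: str) -> SubAgent:
    """Return a placeholder sub-agent that only records what it would do."""
    def run(draft: Draft) -> str:
        return f"{role} output for: {draft.idea}"
    return run


# The six roles named in the interview; the execution order is an assumption.
ROLES: List[str] = [
    "Screenwriting",
    "Directing",
    "Cinematography",
    "Editing",
    "Music",
    "Personalization",
]


def run_large_agent(idea: str) -> Draft:
    """Run every sub-agent in turn and collect its contribution."""
    draft = Draft(idea=idea)
    for role in ROLES:
        draft.notes[role] = make_stub(role)(draft)
    return draft


result = run_large_agent("a quiet farewell at a rainy train station")
for role, note in result.notes.items():
    print(f"{role}: {note}")
```

In this sketch the user supplies only an idea in plain language, and the orchestrating function decides which specialist runs when, which mirrors the claim that users need not understand lighting or camera positions.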

MovieFlow's software interface

Pencil News: Will this eliminate traditional special effects companies?

Liang Wei: Special effects will also be eliminated or reshaped in the future. As film production shrinks, the demand for special effects decreases; with AI generation capabilities growing, why would traditional special effects companies be needed? In the future, special effects companies will act more as a "patch" for gaps in large model capabilities. Future video AI's generation capabilities will be very powerful. We're doing this because no company has yet successfully integrated "film generation and production" with "video large model capabilities" into one large Agent that ordinary people can use to produce a film as easily as pressing a button on a phone.

Of course, just because everyone has a smartphone doesn't mean everyone takes good photos. The simpler the tool, the more it amplifies the gap in creativity and aesthetics. People with creative abilities are more liberated: many potentially good creators were previously too far from imagery, but now one person can make short dramas, or even daily episodes of 30-40 minutes. Our App will launch the month after the tool as a community, a "Netflix version of Xiaohongshu." That is our positioning: tools + community.

Pencil News: Will you develop in this direction?

Liang Wei: Absolutely, it's about tools plus community. We aim to achieve "universal access to image creation," akin to everyone picking up a pen and writing. Everyone can film, yet the quality varies: some users create content for personal amusement and share it on WeChat Moments, while others may have a background in writing novels or directing but lacked access to traditional production opportunities. Now, they can produce dramas on Movie Flow.

For instance, one episode can be two minutes long, and you can complete it in half an hour. We've even compressed the production time to 25 minutes (one-time generation), giving you a few more minutes to fine-tune it. Consistency across models and within the same model (characters, props, scenes) is not perfect (we can't claim 100%), but we've addressed over 90% of these issues.

There are numerous one-click video production and video agent tools on the market for e-commerce, account creation, short story filming, and more. However, we focus on "cinematic-quality narrative storytelling," and we don't currently see any similar products globally.

Our broader objective is to unlock productivity. What we do represents a shift in "production tools" and does not conflict with any major model companies—Keling, Jimeng, Runway, Google, Sora, etc., we leverage them all.

When users employ our product, we utilize agents to determine which model to call upon for each scene, similar to how each large language model has its unique "style." During the creation process, we recognize the user's ideas, text, or voice, and optimize the utilization of different models within the story, making the interaction and packaging seamless.
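The idea of choosing a model per scene can be illustrated with a small routing sketch. Everything in it is hypothetical: the scene tags, the keyword rules, and the `backend_*` labels are placeholders invented for this example and do not correspond to any real model, API, or to Movie Flow's actual selection logic.

```python
from typing import Dict, List, Tuple

# Hypothetical routing table: scene tag -> backend label. The labels are
# placeholders, not real model identifiers or API names.
ROUTES: Dict[str, str] = {
    "dialogue": "backend_a",
    "action": "backend_b",
    "landscape": "backend_c",
}
DEFAULT_BACKEND = "backend_a"


def tag_scene(description: str) -> str:
    """Rough keyword tagging; a real agent would likely use a language model."""
    text = description.lower()
    if any(word in text for word in ("chase", "fight", "explosion")):
        return "action"
    if any(word in text for word in ("mountain", "sea", "skyline")):
        return "landscape"
    return "dialogue"


def route(scenes: List[str]) -> List[Tuple[str, str]]:
    """Pair each scene description with the backend chosen for it."""
    return [
        (scene, ROUTES.get(tag_scene(scene), DEFAULT_BACKEND))
        for scene in scenes
    ]


plan = route([
    "A car chase through the old town",
    "Two friends talk in a cafe",
    "Sunrise over the sea",
])
for scene, backend in plan:
    print(f"{backend}: {scene}")
```

Keeping the routing table separate from the tagging step means a backend can be swapped without touching how scenes are classified, which fits the stated goal of hiding model choice from the user.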

Most current AI products require input in a specific format, but ordinary users may not know how to use them or have much to input. We've lowered the threshold to the point where users can "use it by chatting."

If you have a script, you can simply upload it and start filming. Alternatively, upload two pages first (one page per minute), create a one-minute video to see if it meets your expectations, make adjustments, and then proceed with the next two minutes, and so on. In two or three days, you might have a 90-minute movie.

From this perspective, Movie Flow and its community have the potential to create a new ecosystem: a vast number of MF creators generating content and earning revenue within the community using MFB (Magic Coins), akin to the value of online literature platforms.

In the future, within the MF community, the format of short dramas may evolve. For example, instead of releasing 100 episodes consecutively, you might release them in sets of three. You could produce five or six episodes in the morning, take a break in the afternoon, and review feedback in the evening. If people like it, you can continue; if not, switch to different content.

- 03 - Many Small Opportunities Require Navigating Competition from Large Companies

Pencil News: Do you have a significant domestic user base for your product? Is it more suitable for the domestic or overseas market?

Liang Wei: Currently, our testing primarily focuses on overseas markets. We are a purely overseas product, driven by copyright considerations. We aim to reach a global audience and enable users worldwide to leverage our image generation capabilities.

Pencil News: Do you charge overseas C-end users directly, or is there a free model available?

Liang Wei: It's entirely paid, with no free option. The entry-level version allows for two videos, while the advanced version is tailored for professionals. There's also a user-friendly Pro version in between. We rely solely on revenue from paid usage.

AI video is still an emerging industry due to limited adoption. Most individuals capable of creating AI videos are enthusiasts, professional designers, or advertisers. Currently, large AI video models are in their infancy, with OpenAI's Sora and Runway being just one or two years old, and Google's Veo slightly over a year old. Therefore, it's still in an early and somewhat chaotic stage.

Pencil News: What has been your biggest challenge in recent months?

Liang Wei: We're actively recruiting every day. Our efficiency is already high, but there's always room for improvement. The primary challenge is finding the right people for an AI-native team, where everyone needs to be highly proficient in using AI.

Staying current in the AI era is challenging, especially for tech products where one person can be as productive as a hundred. If capabilities are insufficient, delivery speeds and efficiency will suffer. Our system is extensive, requiring judgment from each agent, and we must also optimize speed.

There are also engineering challenges. Linear collaboration can lead to significant losses, so we employ parallel collaboration. People are our most critical resource. We seek young AI-native talents born after 2000, primarily in Beijing and Hangzhou. Time is always a constraint with numerous tasks, but in a way, pressure drives efficiency.

We now iterate on a version every half-day, and every significant agent adjustment necessitates corresponding changes in other agents. Testing has reached an advanced stage, and open testing will commence soon.

Pencil News: Have you considered integrating Movie Flow into games?

Liang Wei: Frankly, yes, we have considered it. The first step is to launch the tool and operate the community, followed by integration into games. Movie Flow currently has a cinematic feel, with visuals and pacing comparable to movies. In the future, we may add gaming capabilities or integrate with eyewear devices.

We utilize large models to integrate the best global offerings and optimize engineering. Future opportunities for startups in the AI video field will involve an increasing number of niche demands, such as speech videos, e-commerce videos, etc. However, the core remains storytelling, which is our strength.

Pencil News: What new opportunities will arise in this sector?

Liang Wei: There will be numerous small opportunities in the future, but large companies will dominate. Addressing niche demands, such as wedding videos, is feasible.

In the future, we will also open up some of our technology to enable others to easily create explanatory videos using our agents. Digital human companies are also moving in this direction, where they can deliver an entire script in a few minutes, indistinguishable from live footage or digital humans. This is already common in e-commerce.

However, it will also bring challenges, such as differentiating between AI-generated content and the real thing. This is irreversible and can only be managed through discernment and regulation.

Pencil News: Do you believe the explosion will occur in the B-end or C-end market?

Liang Wei: I think it will be the C-end market, possibly next year. Next year, video generation capabilities will take another significant step forward. We've already made progress, but there's still much room for growth. When large models take a leap forward, MF can also make a substantial leap.

Pencil News: What is the key here?

Liang Wei: Multi-modal processing and model iteration. While large model companies focus on research iterations, we prioritize delivery, combining the best aspects of various model capabilities and packaging them into products accessible to ordinary people.

Pencil News: What is the current user feedback on the product?

Liang Wei: During the testing phase, both directors and investors have been surprised. With just one sentence of input, it can generate a story running from slightly over one minute to about two minutes, depending on the pacing.

Pencil News: Why haven't large companies ventured into this space?

Liang Wei: They are reluctant to change and remain entrenched in their traditional ways. When they focus on film and television, they prioritize cost reduction, whereas we prioritize AI and consider what AI can bring to everyday people.

Large companies will eventually enter this field, but in the AI era, the technology itself poses no barriers. The key lies in comprehensive abilities such as efficiency, user perception, operations, teamwork, timing, product understanding, user insights, and marketing. Many people want to enter this space, but there are numerous hurdles to overcome to achieve success. Even large model companies must face these challenges.

What is my advantage?

I have 16 years of experience in the film industry, which allows me to view films holistically, making decisions from concept to distribution and copyright. This perspective also informs product design, where one must see the big picture rather than just one aspect. This is why traditional film companies don't venture into this area; they focus on film production and cannot fully commit to AI image generation.

For traditional film companies, undertaking this task is difficult unless the CEO fully supports it. This is not a decision that can be made by professional managers. In film companies, the CEO must be personally involved, as professional managers are typically constrained by financial considerations.

Truly exceptional agents are developed internally, possibly leveraging capabilities from general-purpose large models such as those from OpenAI and Google, or from cloud-based models.

Pencil News: In practice, have there been any surprises that exceeded your expectations, such as new feedback or discoveries that made you say "wow"?

Liang Wei: There are surprises every day. We iterate daily, and currently the results are only about 40% of what we're aiming for, leaving much room for improvement. Each time we adjust parameters or context, or change the pacing of some agents, the results change. Movies involve numerous factors, so we need to encapsulate all knowledge within agents and instruct the large model on how to utilize it. For example, when filming a sad scene, the lighting, sound effects, and human voices on a rainy day must be precisely matched.

AI is not yet at a cinematic level in areas such as action scenes and overall comprehension, but we will find ways to adjust or train our models to compensate for these deficiencies. Building this system daily is inherently rewarding, and even if latecomers try to catch up, our experience and methods are not easily replicable.