Key Contradictions Facing the U.S. AI+ Industry

08/27 2025

This article is based on public information and intended solely for information exchange, not as investment advice.

After market close yesterday (August 26), China's high-level blueprint for the AI industry, titled "Opinions on Deepening the Implementation of the 'AI+' Action," was officially unveiled, outlining the overall goals and roadmap for the country's AI industry over the next five years.

As a guiding document for the application layer, its release signals that the computational challenges that have hampered the industry in its early stages will soon be overcome, ushering in a new era of innovation across all sectors of AI applications.

In other words, the value of innovations in AI business models will gradually escalate, aligning with advancements in computational infrastructure to form a typical "software and hardware integrated" AI technology innovation and entrepreneurial cycle.

By examining the experiences of others, we can refine our own approach. This year marks the third year since large AI models began transforming the technology industry. The U.S., which leads in both computing power and business models, now faces significant contradictions and numerous pitfalls in its AI application industry, and its experiences and lessons offer valuable insights for avoiding similar problems.

01 Current Main Contradiction in the AI Application Industry: Balancing Costs and Growth

Observing the U.S. version of "AI+" over the past three years, a notable phenomenon emerges: on one hand, there have been numerous financing announcements and remarkable revenue growth stories in AI vertical applications; on the other hand, a truly epoch-defining, global-scale product has yet to emerge.

Over the past three years, the "hot spots" in AI applications have shifted constantly: from early AI chatbots and AI video generation to AI education and general-purpose AI agents, and most recently to AI programming, which has become the star sector.

Market observers are increasingly focused on a core issue. In an industry dominated by technology giants and large model vendors, clouds are gathering: AI innovation companies collectively face a fundamental dilemma, namely how to balance costs against growth.

If we consider growth rates alone, the AI vertical application field is undeniably in a golden era.

Across various sub-sectors, we see enterprises converting code, creativity, and computational power into tangible revenue at astonishing speeds, thanks to AI.

Figure: Representative AI-native companies in different vertical application sectors

• Video Generation: HeyGen announced a $60 million Series A round in June 2024, valuing the company at over $500 million; its annual recurring revenue (ARR) soared from $1 million to over $35 million in just over a year.

• AI Note-Taking and Productivity Tools: Sierra's ARR reached $20 million; Abridge raised $250 million at a valuation of $2.5 billion; Freed, targeted at clinicians, saw its ARR grow from $5 million to $15 million.

• Legal, Translation, Medical, and Other Professional Fields: Harvey AI, DeepL, EliseAI, etc., have also established themselves in their respective fields.

Is there a bubble behind these astonishing valuations? Can their high valuations be sustained, especially when facing cost challenges?

A small clue can reveal much. The prosperity of the AI programming sector is a microcosm of the large model gold rush:

The AI programming tool Replit had annualized revenue of only $2 million in August 2024. That figure passed $10 million by the end of 2024, topped $32 million in February 2025, and reached $144 million in July 2025. After its latest funding round, Replit is valued at $3 billion.

According to TechCrunch, Lovable, an AI programming assistant from Sweden, passed $100 million in ARR in July 2025, just eight months after launch. In June 2025 it had announced ARR of $75 million, which implies roughly $25 million of new ARR added in a single month. After its latest funding round, Lovable is valued at $1.8 billion.

Cursor, a larger AI programming product, has posted even bigger numbers than the two stars above. According to Bloomberg, Anysphere, the startup behind Cursor, surpassed $500 million in annualized revenue in June 2025. That was up from the $200 million reported publicly three months earlier, an increase of $300 million in a single quarter. The latest public reports put Anysphere's valuation at $20 billion.
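The growth figures above can be checked with simple arithmetic. This sketch uses only the ARR numbers cited in this section, in millions of US dollars. It also shows that $75 million of ARR corresponds to a monthly run rate of about $6.25 million. The roughly $25 million figure for Lovable is therefore best read as ARR added in one month, not as monthly revenue.

```python
# ARR figures in millions of USD, as cited in the text above.

def growth_multiple(start: float, end: float) -> float:
    """How many times larger the later figure is than the earlier one."""
    return end / start


def increase(start: float, end: float) -> float:
    """Absolute increase between two ARR figures."""
    return end - start


replit_multiple = growth_multiple(2, 144)   # Aug 2024 -> Jul 2025
lovable_added = increase(75, 100)           # Jun 2025 -> Jul 2025
lovable_monthly_run_rate = 75 / 12          # $75M of ARR expressed per month
cursor_added = increase(200, 500)           # about Mar 2025 -> Jun 2025
cursor_multiple = growth_multiple(200, 500)

print(f"Replit: {replit_multiple:.0f}x ARR growth")
print(f"Lovable: +${lovable_added:.0f}M ARR in one month "
      f"(monthly run rate at $75M ARR: ${lovable_monthly_run_rate:.2f}M)")
print(f"Cursor: +${cursor_added:.0f}M ARR, {cursor_multiple:.1f}x in three months")
```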

AI vertical applications are, in essence, the most loyal "token consumers" of large model vendors. They target well-defined user groups and serve end users directly by combining powerful models with precise product design. This convenient channel captures some users who would otherwise have gone straight to the large model vendors, which helps explain why even Sam Altman has personally pushed into AI programming products.

AI programming has succeeded previous star sectors such as AI chatbots, AI videos, and AI education products, becoming the star in investors' eyes. However, behind this glamour, the cost dilemma that cannot be ignored is also prominent.

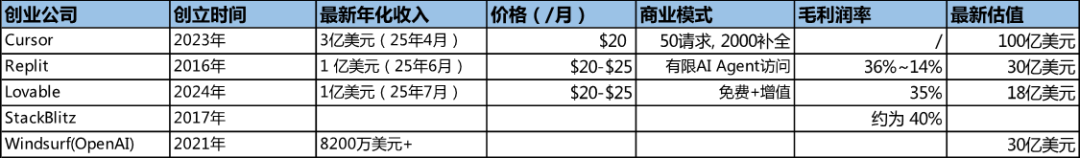

According to The Information, with the surge in user demand, the gross margin of the programming assistant Replit fluctuated drastically between 36% and 14%.

This extreme swing exposes the hidden dangers behind the boom in AI programming. Various public reports, both in China and abroad, indicate that gross margins at AI programming assistant companies are generally low, typically around 30% to 40%.

Figure: Basic gross profit margins of representative companies in the AI programming sector

The core reason for the low gross margin is the cost of large model tokens. Tokens are the "soul" of an AI programming assistant, and their cost cannot be compressed and is, to some extent, beyond the company's control.

Take Windsurf, another AI programming product. Its architecture costs are high, driven above all by usage fees for large language models (LLMs). To keep users loyal amid fierce competition, AI programming products must follow every new release from the large model vendors and upgrade their underlying models to the latest version.

New models usually bring better task completion and effectiveness. Tech-savvy AI programming users are sensitive to, and quite picky about, whether they are getting the latest model, so a product that does not adopt a new model can easily lose users. At this stage, the popular AI programming products have not yet diverged enough for any of them to build a core competitive advantage of its own.

From a pricing perspective, competition among model vendors over release frequency and performance pushes the price per token down. At the same time, better model performance drives actual usage up even faster: if the price of model tokens halves, usage may rise severalfold or even tenfold. The margin of AI vertical application companies, the "bridge" between these two forces, is squeezed as a result. The token cost of large model API calls cannot be effectively diluted as scale expands.
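The following minimal sketch shows how the "bridge" gets squeezed. It assumes a flat $10 monthly subscription and a hypothetical $6 of token cost per user before a price cut. Both numbers are illustrative and not taken from any company. The token price is halved and usage is scaled by the multiples mentioned above.

```python
def gross_margin(revenue: float, cost: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost) / revenue


def margin_after_price_cut(usage_multiplier: float,
                           revenue: float = 10.0,   # assumed flat monthly subscription, $
                           base_cost: float = 6.0,  # assumed monthly token cost per user, $
                           price_factor: float = 0.5) -> float:
    """Margin after token prices are scaled by price_factor and usage by usage_multiplier."""
    new_cost = base_cost * price_factor * usage_multiplier
    return gross_margin(revenue, new_cost)


for multiplier in (1, 2, 4, 10):
    print(f"usage x{multiplier}: margin {margin_after_price_cut(multiplier):.0%}")
# → 70%, 40%, -20%, -200%
```

Under these assumptions, halving the token price only helps if usage less than doubles. At a 2x increase the margin is back where it started (40%), and beyond roughly 3.3x it turns negative.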

This is vastly different from the financial models of traditional SaaS enterprises (such as Salesforce or Zoom) and the typical subscription model of Netflix.

02 Main Cause of the Contradiction: Cost Dilemma Brought About by Tokens and Scaling

Dario Amodei, co-founder and CEO of Anthropic, described the compounding cycle between model training costs and the revenue they generate in a podcast episode:

1. In 2023, you trained a model that cost $100 million.

2. In 2024, the model generated $200 million in revenue. At the same time, due to scaling laws, you had to spend $1 billion to continue training the model in 2024.

3. In 2025, the model brought in $2 billion in revenue. Similarly, the cost of training the model in 2025 may have risen to $10 billion.

From a traditional financial perspective:

In the first year, there was no revenue, and the company suffered a net loss of $100 million.

In the second year, despite $200 million in revenue, the cost of training the model was $1 billion, resulting in an $800 million loss.

In the third year, with $2 billion in revenue, the cost of training the model was $10 billion, leading to an $8 billion loss.
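The year-by-year arithmetic above can be reproduced in a few lines. Like the text, this sketch books each year's training spend as that year's cost, which simplifies Amodei's illustration.

```python
# Amodei's illustration, in millions of USD.
training_cost = {2023: 100, 2024: 1_000, 2025: 10_000}
revenue = {2023: 0, 2024: 200, 2025: 2_000}


def annual_net(year: int) -> int:
    """Revenue minus that year's training spend (the simplified view used above)."""
    return revenue[year] - training_cost[year]


for year in sorted(training_cost):
    print(year, annual_net(year))
# → 2023 -100, 2024 -800, 2025 -8000
```

In this example, revenue and training spend both grow tenfold a year, so the annual loss also grows tenfold.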

This is a terrifying cycle. Although Dario Amodei's focus is on large model vendors, this "cost paradox" also exists in the AI vertical application sector.

This sector is essentially a "token consumer" or distribution channel for large model vendors. For startups in this sector, token costs are essentially variable costs that change with usage rather than fixed costs, with almost no diminishing marginal effect. This means that:

• For every additional user, there is an additional token consumption;

• For every additional call, there is an additional cost expenditure;

• The more complex the user's task, the more tokens are consumed and the higher the cost.

We can construct a simple unit economic model to illustrate this issue. If an AI product is priced at $10 per month, the revenue and cost changes with different user levels are as follows:

• 1 user: Token cost $6, revenue $10, gross profit $4, gross profit margin 40%;

• 10,000 users: Token cost $60,000, revenue $100,000, gross profit $40,000, gross profit margin 40%;

• 1 million users: Token cost $6 million, revenue $10 million, gross profit $4 million, gross profit margin still 40%.
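The unit model above can be written out directly. This minimal sketch uses the $10 price and $6 per-user token cost from the example. The last line adds a hypothetical heavier user who costs $8 in tokens, to show how the margin falls when per-user consumption rises.

```python
from dataclasses import dataclass


@dataclass
class UnitEconomics:
    price_per_user: float = 10.0      # monthly subscription price, $
    token_cost_per_user: float = 6.0  # monthly token cost per user, $ (a variable cost)

    def summary(self, users: int) -> dict:
        revenue = self.price_per_user * users
        token_cost = self.token_cost_per_user * users
        gross_profit = revenue - token_cost
        return {
            "revenue": revenue,
            "token_cost": token_cost,
            "gross_profit": gross_profit,
            "gross_margin": gross_profit / revenue,
        }


base = UnitEconomics()
for users in (1, 10_000, 1_000_000):
    print(f"{users:>9,} users: margin {base.summary(users)['gross_margin']:.0%}")

# Hypothetical heavier usage: same price, but $8 of tokens per user.
heavy = UnitEconomics(token_cost_per_user=8.0)
print(f"heavier usage: margin {heavy.summary(1_000_000)['gross_margin']:.0%}")
```

Because cost is proportional to users, the margin stays at 40% at every scale. It drops to 20% once each user consumes more tokens at the same price.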

This cost structure reveals a brutal reality. Token costs cannot be compressed and are a genuine cost of goods sold (COGS), which caps gross margins. Because costs scale in lockstep with revenue, growth does nothing to lift the margin. If per-user token consumption rises, for example through heavier tasks or newer, pricier models, the gross margin can even fall as revenue grows.

This is the drawback of a single-subscription model and the core difference from Netflix or traditional SaaS. In those businesses, largely fixed costs are spread across a growing subscriber base. Here, costs are not shared among users but rise with every new subscription.
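The contrast with Netflix-style or SaaS economics comes down to fixed versus variable costs. This sketch compares a hypothetical SaaS product with the token-based product from the example above. The SaaS product is assumed to carry $600,000 a month in fixed costs plus $0.50 per user; these SaaS cost figures are illustrative assumptions only.

```python
def saas_margin(users: int, price: float = 10.0,
                fixed_cost: float = 600_000.0,       # assumed monthly fixed cost, $
                cost_per_user: float = 0.5) -> float:  # assumed marginal cost per user, $
    """Gross margin when most costs are fixed and shared across subscribers."""
    revenue = price * users
    return (revenue - fixed_cost - cost_per_user * users) / revenue


def token_app_margin(users: int, price: float = 10.0,
                     token_cost_per_user: float = 6.0) -> float:
    """Gross margin when the main cost is the tokens each user consumes."""
    revenue = price * users
    return (revenue - token_cost_per_user * users) / revenue


for users in (100_000, 1_000_000):
    print(f"{users:>9,} users: SaaS {saas_margin(users):.0%}, "
          f"token-based {token_app_margin(users):.0%}")
```

Under these assumptions, the SaaS margin climbs from 35% to 89% as users are added. The token-based margin stays pinned at 40%.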

Second, the subscription model may not be the ideal payment method for companies expanding into new markets. Many AI vertical products thrive in European and American markets, which combine high-end users with high subscription penetration. Elsewhere, paying rates are low and the business model is unstable. Because of economic differences, users in many countries resist subscriptions and are more accustomed to one-time purchases or free, ad-supported models.

03 Individual Dilemma of AI Applications: Low Moat, High Competition

Currently, many AI programming tools show no fundamental technical differences. Cursor, for example, is built on VS Code, an Electron application, and adds an agent layer, much like Copilot's, that handles token calls and task processing. Other programming tools are built in a similar way.

The AI programming products discussed in this article are already industry leaders. Yet the differences between them show up only in very narrow areas such as UI style and development-environment convenience, and such differences clearly cannot form a moat.

• Lovable: Targeted at non-technical founders, small teams, and beginners, simplifying the application creation process and lowering the entry barrier;

• Replit: Suitable for individuals and small teams, providing "guardrail" functions to help beginners get started quickly;

• Cursor: Targeted at experienced developers, especially VS Code users, requiring more technical interaction;

• Windsurf: Positioned as a one-stop intelligent development environment, suitable for intermediate and junior developers, with a UI similar to modern IDEs.

How large is the developer population they are all competing for? In May this year, SlashData released a simple dataset estimating the number of developers worldwide at 47 million, nearly double the 25 million estimated by many institutions.

A user base of 47 million is sizable, but it is far from enough to support several AI programming products with stable revenue and profits at the same time. Perhaps only after these leading players keep expanding and competing head-on will a single winner remain to serve those 47 million users.
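A rough calculation shows how much of this pool a single leader already needs. The sketch makes a hypothetical assumption: all of Cursor's roughly $500 million ARR came from individual plans at a flat $20 per month. Real revenue mixes in team and enterprise plans at other prices, so treat the result as an order-of-magnitude estimate only.

```python
def subscribers_needed(arr_usd: float, monthly_price_usd: float) -> float:
    """Paying subscribers required to reach a given ARR at one flat monthly price."""
    return arr_usd / (monthly_price_usd * 12)


GLOBAL_DEVELOPERS = 47_000_000  # estimate cited in the text
cursor_arr = 500_000_000        # ARR cited earlier in this article
assumed_price = 20.0            # hypothetical flat monthly price, $

subs = subscribers_needed(cursor_arr, assumed_price)
share = subs / GLOBAL_DEVELOPERS
print(f"{subs:,.0f} subscribers, about {share:.1%} of all developers")
# → 2,083,333 subscribers, about 4.4% of all developers
```

On this rough basis, one product at Cursor's scale already needs about 4% of all developers to pay. Several competitors at a similar scale would together need a substantial share of the entire pool.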

04 The Path to Breaking the Deadlock: Revolutionary Breakthroughs Needed from Cost Structure to Pricing Models

1. Pricing Model Optimization – From Single Subscription to Hybrid Subscription and Pay-per-Compute

From a competitive perspective, competition among AI vertical application startups is far fiercer than among large model companies. Fueled by abundant capital, the logic of company valuation in the AI industry will become harsher and more clear-cut.

The subscription model is one of the more demanding internet business models. Revenue growth depends on raising subscription prices and growing the number of subscribers, which in this respect essentially replicates the Netflix model. Companies must therefore deliver services that justify each price increase while continuing to attract new subscribers.

AI application products are essentially API businesses that cannot build strong moats on their own, so their bargaining power is weak. In other words, theirs is a low-margin grind, and under fierce competition someone will always be willing to take on that grind. If a single subscription price does not work, a more sophisticated pricing model must be adopted.

Firstly, the token pricing model needs adjustment. Charging by task fails to capture the varying complexity of tasks and model consumption, whereas charging by compute more precisely aligns with backend costs, ensuring healthy gross profit margins.

In response to cost pressures, enterprises are already rethinking their pricing strategies:

Take Replit, for instance. Its model initially charged a flat 25 cents per "checkpoint" (akin to an agent completing a programming task). However, after updating its underlying model, task execution costs soared, pushing its gross profit margin into negative territory.

Consequently, Replit had to revise its pricing. In July, it announced a shift from per-task charging to a "compute-based" model, where prices adjust according to the computational power required. This change resulted in some tasks jumping from 25 cents to $2.
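The difference between the two schemes can be sketched as follows. The $0.25 flat price comes from the text. The per-task compute costs and the 50% cost-plus markup are hypothetical, and this is not Replit's actual pricing formula.

```python
FLAT_PRICE = 0.25  # per-checkpoint price cited in the text, $


def margin(price: float, cost: float) -> float:
    """Gross margin on a single task."""
    return (price - cost) / price


def compute_based_price(task_cost: float, markup: float = 0.5) -> float:
    """Hypothetical cost-plus rule: the price tracks the compute a task actually used."""
    return task_cost * (1 + markup)


# Hypothetical compute costs per task, $: a light task, the same task on a
# pricier upgraded model, and a heavy multi-step task.
for cost in (0.10, 0.40, 1.30):
    flat = margin(FLAT_PRICE, cost)
    price = compute_based_price(cost)
    print(f"cost ${cost:.2f}: flat margin {flat:.0%}, "
          f"compute-based price ${price:.2f} (margin {margin(price, cost):.0%})")
```

Under a flat per-task price, the margin collapses as soon as a task costs more than $0.25 to run. A cost-plus price keeps the margin constant (one third with a 50% markup), but the customer's bill rises with every heavy task.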

While this adjustment addresses Replit's profit woes, it may adversely affect subscriptions. Nonetheless, Replit can afford some optimism. Other AI agents, equally burdened by costs, will inevitably follow suit. By leading the charge, Replit may find that when everyone raises prices, customer churn slows, and subscriptions stabilize.

Public reports suggest Cursor is likewise tweaking its pricing model.

2. Business Model Innovation: Value-Added Services

AI programming apps offer micro-enterprises a blue-ocean opportunity, though likely a fleeting one. Many corporate IT departments run in-house AI platforms, but uneven technical expertise and weak data security leave room for AI vertical application products to offer value-added services.

Through private (on-premises) deployment, AI vertical applications can offer data security and privacy protection as value-added modules. This boosts revenue while broadening the scope of their enterprise services and improving security and stability.

Serving users generates vast amounts of behavioral and industry data. After cleaning and analyzing this data, AI vertical applications can provide enterprises with valuable industry reports, trend predictions, or user behavior insights. For example, AI programming tools can analyze millions of developers' programming habits, offering enterprises insights into technology stack preferences, efficiency bottlenecks, and more.

3. Bold Vision: Redefining Industry Pricing Models

OpenAI's generative AI burst onto the scene in 2023. Now, in the third year since then, AI pervades daily life, and "DeepSeek says..." has become a phrase that carries real weight. Soon large models will become a new necessity, like water, electricity, gas, and mobile data, and this transformation will spawn diverse pricing models for AI vertical applications.

Let's think creatively and draw a parallel with water, electricity, and gas billing, which is usage-based and tiered. Users could initially "stockpile" tokens at a lower price and replenish them at a slightly higher rate once they are used up. A more relatable example is mobile data, so ubiquitous that it is easy to overlook.
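Utility-style tiered pricing can be sketched as a simple block schedule, in which each additional band of tokens costs a little more than the last. The tier boundaries and per-million-token prices below are purely hypothetical.

```python
from typing import List, Optional, Tuple

# (upper bound of the tier in millions of tokens, $ per million tokens);
# None means the last tier has no upper bound. All values are hypothetical.
TIERS: List[Tuple[Optional[float], float]] = [(5.0, 2.0), (20.0, 2.5), (None, 3.0)]


def tiered_cost(tokens_m: float, tiers=TIERS) -> float:
    """Bill usage band by band, charging each band at its own rate."""
    cost = 0.0
    lower = 0.0
    for ceiling, price in tiers:
        upper = tokens_m if ceiling is None else min(tokens_m, ceiling)
        if upper > lower:
            cost += (upper - lower) * price
        if ceiling is None or tokens_m <= ceiling:
            break
        lower = ceiling
    return cost


for usage in (3, 12, 30):
    print(f"{usage}M tokens: ${tiered_cost(usage):.2f}")
# → $6.00, $27.50, $77.50
```

Light users pay only the cheapest rate, while heavy users pay progressively more for the tokens that drive most of the cost.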

AI vertical applications could adopt mobile-data-style pricing: a "basic subscription + data/compute package" bundle, with users choosing a package based on their usage. Exceeding the token allowance would trigger overage fees or the purchase of an additional "token package", and unused monthly balances would roll over.
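A "basic subscription + compute package" with overage fees and rollover could be billed roughly as below. The base fee, monthly allowance, and overage rate are hypothetical. So is the assumption that unused tokens roll over in full.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Plan:
    base_fee: float = 20.0           # hypothetical monthly subscription, $
    included_tokens_m: float = 10.0  # hypothetical monthly allowance, millions of tokens
    overage_per_m: float = 3.0       # hypothetical price per extra million tokens, $


def bill_month(plan: Plan, used_m: float, carried_m: float) -> Tuple[float, float]:
    """Return (amount due this month, unused tokens carried into next month)."""
    available = plan.included_tokens_m + carried_m
    if used_m <= available:
        return plan.base_fee, available - used_m
    overage_m = used_m - available
    return plan.base_fee + overage_m * plan.overage_per_m, 0.0


def bill_months(plan: Plan, monthly_usage_m: List[float]) -> List[float]:
    """Bill a sequence of months, rolling any unused allowance forward."""
    carried = 0.0
    bills = []
    for used in monthly_usage_m:
        due, carried = bill_month(plan, used, carried)
        bills.append(due)
    return bills


print(bill_months(Plan(), [6, 8, 17]))  # → [20.0, 20.0, 23.0]
```

In the third month the user draws on 6 million rolled-over tokens, so only 1 million tokens are billed as overage.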

05 Conclusion: The Irreversible Rise of AI

Large AI models represent one of this era's most thrilling technological shifts, with AI vertical applications rapidly integrating AI into our lives.

We've already benefited from large models, offloading tedious tasks to AI, which serves both as assistant and mentor. As models evolve, our reliance on AI will grow, making a life without it unimaginable.

With AI becoming as pervasive as mobile data, it's time to transcend technological constraints and consider pricing from a consumer's perspective. This could be a game-changing innovation.

Today's cost challenges for AI vertical applications will undoubtedly be addressed ingeniously in the future, particularly in China. Just as DeepSeek V3.1 leverages algorithmic innovation to benefit China's chip computing industry, so too will pricing models evolve to suit the times.