Understanding the Evolution of Tesla's FSD Autopilot: From Assisted Driving to End-to-End Intelligence

10/11 2025

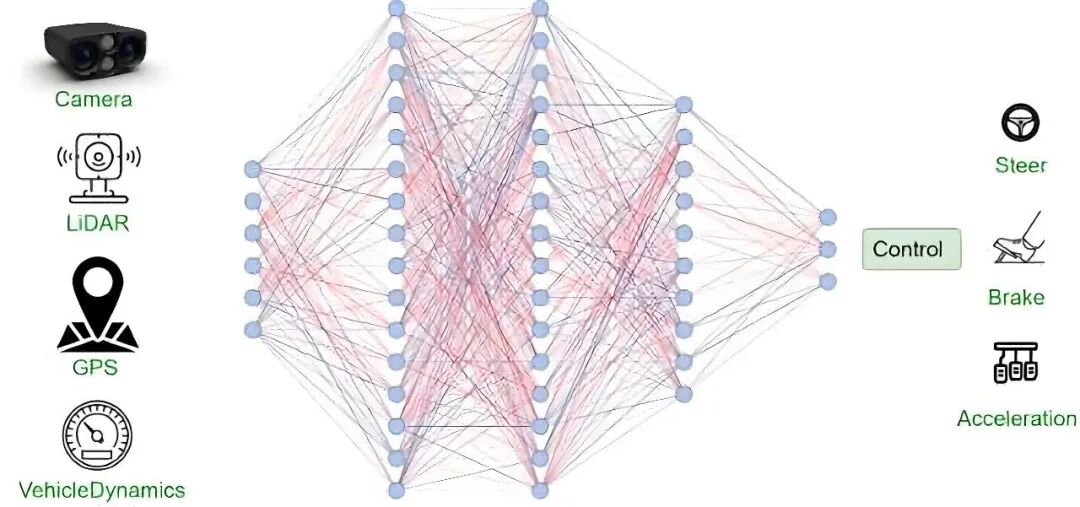

Since the early days of the autonomous driving sector, Tesla has served as a benchmark for many companies, and every update to its FSD (Full Self-Driving) system attracts considerable attention. Early autonomous driving systems were structured hierarchically, with separate modules for perception, localization, prediction, planning, and control, each relying on explicit code and predefined rules. As data volume and computing power grew, Tesla moved more of these functions into machine learning models. With the introduction of V12 (labeled 'Supervised') in 2024, Tesla took a significant step toward an 'end-to-end' approach: a neural network that translates camera images directly into control commands, following a 'photons in, controls out' philosophy. As a result, a substantial portion of the earlier explicit C++ code has been replaced by neural network weights. This article traces the evolutionary path of Tesla's FSD.

From Modular to End-to-End: A Paradigm Shift

A look back at Tesla's hardware and software evolution helps explain why FSD can deliver rapid and substantial functional updates. In October 2014, Tesla unveiled its Autopilot hardware system HW 1.0. It featured a front-facing monocular camera supplied by Mobileye, a 77 GHz millimeter-wave radar from Bosch (maximum detection range of 160 meters), 12 ultrasonic sensors (maximum detection range of 5 meters), and the Mobileye EyeQ3 computing platform, complemented by high-precision electronic brake assist and steering systems.

Tesla introduced HW 2.0 in October 2016. Built on the Drive PX2 platform, co-developed with NVIDIA, this iteration marked a substantial performance leap: the number of cameras rose to eight and processor computing power reached 12 TOPS, a 48-fold increase over HW 1.0 (which implies roughly 0.25 TOPS for the earlier platform). Even during the HW 2.0 phase, however, Tesla had already started down an in-house path by beginning development of its own in-vehicle FSD chip. In April 2019, HW 3.0 entered production with two self-developed FSD chips, and Tesla's intelligent driving technology moved into an era of full-stack in-house development. These hardware upgrades were not made to showcase performance; they were needed to support increasingly large and complex neural network models.

On the software side, traditional autonomous driving systems typically split the work into 'perception, semantic understanding, prediction, planning, control,' with each stage implemented through engineering rules or small models. Tesla's 'end-to-end' effort with V12 is a more integrated approach that hands the entire process, from visual input to lateral and longitudinal control, to a learned neural network. Such a model can learn human-like decision-making strategies from large volumes of real-world driving footage and handle many edge cases that previously required hand-written code. End-to-end systems reduce engineering complexity in some scenarios because there is less rule-based code, but they also bring significant challenges. Their internal decision-making is not easily interpretable, and verifying their coverage requires larger datasets and more rigorous offline and online testing to ensure safety. Tesla's own release notes stated that the V12 network was trained on 'millions of video clips' and replaced hundreds of thousands of lines of C++ code.
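To make the contrast between a modular stack and an end-to-end policy concrete, here is a minimal Python sketch. It is purely illustrative and not Tesla's implementation: the names `Controls`, `modular_stack`, and `make_end_to_end`, the 10-unit distance threshold, and the toy linear "network" are all assumptions introduced for this example, and each "frame" is just a short list of numbers standing in for a camera image.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Frame = Sequence[float]  # hypothetical stand-in for one camera image


@dataclass
class Controls:
    steering: float      # lateral command, arbitrary units
    acceleration: float  # longitudinal command, arbitrary units


def modular_stack(frames: List[Frame]) -> Controls:
    """Hand-written stages with explicit interfaces between them."""
    # Perception: reduce each frame to a single "obstacle distance" value.
    distances = [min(frame) for frame in frames]
    # Prediction: assume only the nearest obstacle matters.
    nearest = min(distances)
    # Planning: a hard-coded rule (assumed threshold) picks the acceleration.
    target_accel = -1.0 if nearest < 10.0 else 0.5
    # Control: pass the plan through and keep the wheel straight.
    return Controls(steering=0.0, acceleration=target_accel)


def make_end_to_end(weights: Sequence[float]) -> Callable[[List[Frame]], Controls]:
    """Return a policy whose behaviour lives in `weights`, not in rules."""
    def policy(frames: List[Frame]) -> Controls:
        # Flatten all pixels and take a weighted sum: a toy linear "network".
        pixels = [p for frame in frames for p in frame]
        score = sum(w * p for w, p in zip(weights, pixels))
        # Clamp the output to the valid command range [-1, 1].
        return Controls(steering=0.0, acceleration=max(-1.0, min(1.0, score)))
    return policy


frames = [[50.0, 8.0], [30.0, 40.0]]
rule_based = modular_stack(frames)
learned = make_end_to_end([0.01, -0.1, 0.0, 0.0])(frames)
```

In the modular version, changing behaviour means editing a rule such as the distance threshold. In the end-to-end version, the same change would come from retraining, which alters `weights` without touching the code. That is also why the learned policy is harder to inspect.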

Adopting an end-to-end system does not mean abandoning the modular concept entirely. Even when a large network dominates the primary control strategy, many teams retain certain modules as safety valves or observation points, such as redundant checks for driver monitoring and collision warning. The advantage of this hybrid approach is that end-to-end learning improves decision quality while auditable, quickly repairable control logic remains in place at safety-critical points.
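The hybrid idea described above can be sketched as a thin, auditable wrapper around a learned policy. This is a hedged illustration rather than any vendor's actual design: `with_safety_valve`, the 5-meter gap threshold, and the independently measured `gap_m` input are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Controls:
    steering: float
    acceleration: float


def with_safety_valve(
    policy: Callable[[Any], Controls],
    min_gap_m: float = 5.0,   # assumed threshold, for illustration only
    max_brake: float = -1.0,  # assumed strongest braking command
) -> Callable[[Any, float], Controls]:
    """Wrap a learned policy with an explicit, rule-based override."""
    def guarded(observation: Any, gap_m: float) -> Controls:
        proposed = policy(observation)
        if gap_m < min_gap_m:
            # Rule-based override: brake hard but keep the proposed steering.
            return Controls(steering=proposed.steering, acceleration=max_brake)
        # Otherwise defer entirely to the learned policy.
        return proposed
    return guarded


def toy_policy(observation: Any) -> Controls:
    # Stand-in for a neural network: always steers slightly and accelerates.
    return Controls(steering=0.1, acceleration=0.8)


guarded_policy = with_safety_valve(toy_policy)
close_call = guarded_policy(None, 3.0)   # gap below threshold: override applies
open_road = guarded_policy(None, 20.0)   # gap above threshold: policy output used
```

The override is a single readable `if` statement, so it can be reviewed, tested, and patched without retraining the network it guards.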

Key Features, Version Evolution, and User Experience

Tesla's FSD has passed several pivotal functional milestones, including highway NOA (Navigate on Autopilot), urban navigation with traffic light recognition, automatic lane changing, and automatic parking. These features have been rolled out progressively through continuous software updates. Since the introduction of FSD Beta in 2021, Tesla has kept iterating on challenging scenarios such as urban driving and unprotected left turns. With the release of FSD V12 (Supervised) in 2024, Tesla publicly stated that it had merged the urban driving stack into a single end-to-end network, and it gradually expanded the testing scope.

From late 2024 into 2025, Tesla released versions such as V13 and V13.2, introducing convenience features like park-to-drive (starting FSD directly from Park). In October 2025, Tesla rolled out FSD v14, with v14.1 as the first release, the largest functional and experiential upgrade of the year. Targeting HW 4.0 in-vehicle computing power, it introduced more Robotaxi-style features, such as 'arrival options' (letting users specify where the system should park the vehicle: garage, curbside, parking lot, and so on), finer-grained speed modes (including more conservative settings like 'Sloth'), and better handling of yielding to emergency vehicles. The initial update was limited to models equipped with HW 4.0 because the v14 model's parameter count increased significantly and requires more computing power to run in real time.

In the Chinese market, Tesla has also rolled out software in phases. In early 2025, Tesla delivered a significant Autopilot update in China that added navigation assistance on urban roads, traffic signal detection, and navigation-based automatic lane changing. The update was widely reported in the media and owner communities, where attention focused on the maturity of specific features and on regulatory approval.

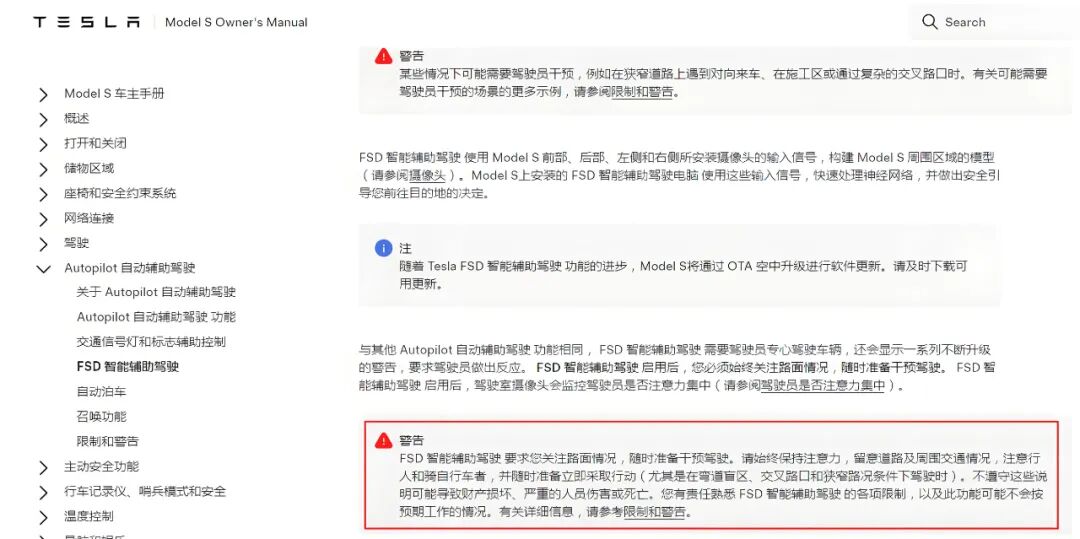

Users who have tried different versions of Tesla's FSD report that each major update brings noticeable new features or improvements, such as smarter turning, parking, and route selection. However, the system still makes incorrect judgments or behaves suboptimally in rare or edge-case scenarios. Drivers must therefore remain vigilant and ready to intervene when using FSD, a point Tesla also emphasizes on its official website.

Limitations on Tesla's FSD Development

Delegating all driving decisions to neural networks improves the system's ability to handle complex perception scenarios, but it also brings verification challenges, interpretability issues, and questions about liability boundaries to the forefront. Over the past few years, Tesla has faced safety investigations and lawsuits in multiple countries concerning Autopilot and FSD. The controversies center on whether Tesla's marketing overstates the system's capabilities, whether driver attention monitoring is adequate, and how legality and liability should be assigned for software behavior in actual accidents. In fall 2025, U.S. regulators again opened a preliminary evaluation covering a large number of FSD-equipped vehicles, focusing on issues such as traffic light recognition, running red lights, and entering opposing lanes. According to a Reuters report on October 9, the U.S. National Highway Traffic Safety Administration opened an investigation into 2.88 million Tesla vehicles equipped with the Full Self-Driving system, following more than 50 reports of traffic safety violations and a series of crashes. This underscores that regulatory scrutiny remains intense and can influence the pace of feature rollouts.

The name FSD, short for 'Full Self-Driving,' has led some consumers to assume the system can operate without supervision. Meanwhile, Tesla labels the product 'Supervised,' a qualifier that effectively places a 'shackle' on FSD's full potential. Although Tesla has released videos of vehicles driving themselves from the factory to delivery, and even of short-distance delivery runs, these demonstrations are often conducted under controlled conditions or in limited scenarios and cannot simply be equated with unsupervised operation on any public road.

Final Thoughts

Tesla's FSD represents a technological path driven by vast amounts of real-world driving data, computing power, and end-to-end learning. In the short term it can significantly improve the user experience, with smarter following, lane changing, and parking behavior, and it can demonstrate 'near-autonomous driving' capabilities in certain controlled scenarios. Truly comprehensive autonomous driving, however, will still take time. It requires not only better model training but also safety, supervision, and liability mechanisms that society broadly accepts. For vehicle owners, the most prudent approach today is to treat FSD as a powerful assistance tool and strictly follow its monitoring requirements. For policymakers, the task is to encourage innovation while ensuring public safety and information transparency. Over the next few years, every iteration of FSD will be worth watching, as it reflects not just technological progress but also the delicate balance between industry, regulation, and society.
