Who is the Optimal Solution for Autonomous Driving: VLA or World Model?

![]() 11/05 2025

11/05 2025

![]() 790

790

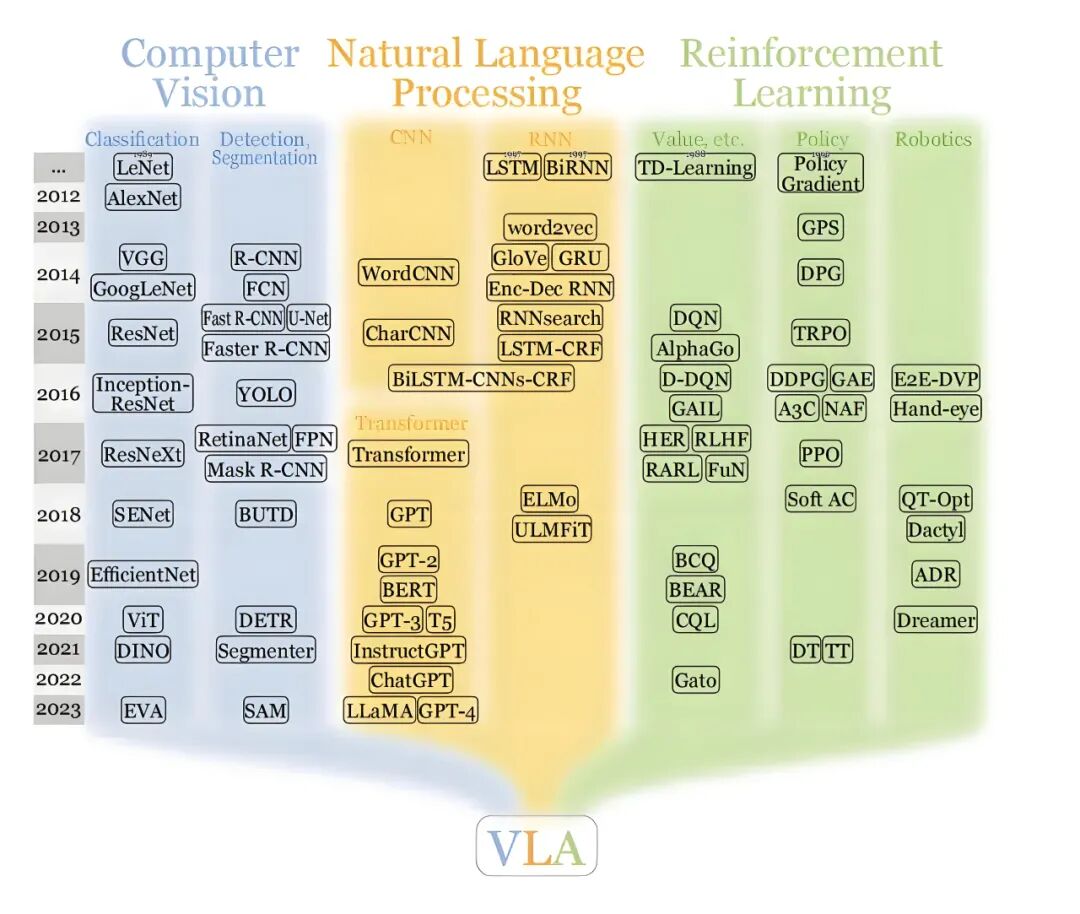

As autonomous driving technology makes strides, two distinct implementation paths have emerged. One is the VLA (Vision-Language-Action) model route, championed by Li Auto, XPENG, and Xiaomi. The other is the World Model route, led by Huawei and NIO. Both paths pave the way for the swift deployment of autonomous driving, but which one stands out as the optimal solution?

What is the VLA Model?

The VLA model, also known as the Vision-Language-Action model, is a method that seamlessly integrates visual perception, language comprehension, and action generation. Initially, it employs a visual encoder to transform the images captured by the camera into semantically rich feature vectors, utilizing models like SigLIP and Dino V2/V3 for this purpose. Subsequently, these visual features are "translated" into language-like representation units (tokens) and fed into a Large Language Model (LLM). Following multimodal transformation, the LLM's role extends beyond merely generating text; it performs higher-level semantic reasoning based on visual information. This includes analyzing lane conditions, predicting pedestrian intentions ahead, or evaluating the rationality of various driving strategies. The LLM's reasoning outcomes are then converted into specific control instructions, such as trajectory and speed, to drive vehicle execution.

Image Source: Internet

Theoretically, the VLA model can be challenging to grasp. In simpler terms, VLA enables the vehicle to first articulate what its "eyes" see in language, then engage in language-based thinking, and finally translate these thoughts into actions. The advantage of this approach lies in the language level's natural suitability for abstract and long-term reasoning, facilitating the integration of contextual information and rule-based knowledge. This allows for the construction of a bridge from perception to decision-making based on clearer and more transferable semantic representations.

Given language models' proficiency in synthesizing scattered information into high-level conclusions, VLA can theoretically make "conceptual" judgments more effortlessly in diverse complex scenarios. It also simplifies the incorporation of human rules, regulations, or scenario descriptions in text form into the training and tuning process.

However, reliably converting visual features into tokens that LLMs can effectively utilize is no easy feat, and numerous issues must be addressed. Problems such as information loss and alignment between vision and language must be resolved. The conclusions generated by language reasoning must also be strictly confined within physically feasible action ranges; otherwise, situations may arise where the "idea is sound" but the "execution is unsafe." Additionally, challenges like the reasoning overhead of LLMs, system real-time performance, and decision explainability need to be tackled. While language boasts strong abstract capabilities, the physical world imposes extremely high demands on control precision and constraints. Establishing a trustworthy mapping between semantic abstraction and precise control is even more crucial for VLA to overcome.

The strength of VLA lies in its robust semantic understanding capabilities, which confer a natural advantage in complex social interactions and rule comprehension. It excels at capturing behavioral intentions in scenarios with relatively few explicit rules. For manufacturers aiming to leverage "data and models" to transfer driving experience across different vehicle models and cities, VLA's generality and abstract capabilities are highly appealing. Its weaknesses, however, include the need for additional engineering measures to ensure physical precision and safety constraints, as well as relatively higher challenges in reasoning latency, model explainability, and system verification.

What is the World Model Route?

The core tenet of the World Model is to model the environment, objects, and behaviors as a computable and deducible "physical world." Decision-making does not hinge on natural language as an intermediary but can be conducted directly within the state space. The World Model emphasizes "spatial cognition and physical deduction." Starting from multi-sensor data, it constructs a continuous and predictable representation of the world state and generates and verifies behaviors based on physical rules.

Taking Huawei WEWA's "cloud-local collaboration" mode as an example, the team can construct a high-fidelity physical simulation environment in the cloud. This allows the model to continuously "drive" in the virtual world and generate a vast amount of simulated trajectories. The simulation environment provides extremely high data density, enabling the model to learn the causal relationships of the physical world in numerous controlled or even extreme scenarios. Through a reward-punishment mechanism that scores the model's generated behaviors, the model can gradually learn how to avoid risks and make compliant and stable decisions in various situations.

Huawei WEWA Technical Architecture, Image Source: Internet

Once training is complete, complex cloud models can be transformed into lightweight versions capable of running in real-time on the vehicle through model distillation or compression techniques. This enables the vehicle to directly generate trajectories and control commands based on real-time sensor data.

The strength of the World Model lies in its exceptional controllability and physical consistency. Since decision-making is grounded in explicit and verifiable state and dynamic models, it simplifies formal verification, safety boundary checks, and enforcement of physical constraints. This also enhances the explainability and falsifiability of safety-critical scenarios. Given the utilization of simulation training, extreme scenarios that are rare in reality but crucial for safety can be artificially created, effectively compensating for the lack of real road-collected data and thus boosting system robustness in dangerous situations.

Like the VLA model, the World Model technical route also faces numerous challenges. High-fidelity simulation, complex dynamic modeling, and accurate reconstruction of the vehicle and environment all necessitate massive computational support and cost investment, representing a significant expense. Constructing a sufficiently diverse simulation environment to encompass the complexity of the real world and effectively bridging the "simulation-reality gap" are also pressing issues. Additionally, this route heavily relies on the type and precision of perception sensors. If a LiDAR-centric solution is adopted, it will directly increase system costs and deployment thresholds, thereby impeding the process of large-scale deployment.

The strength of the World Model lies in its decision-making results being more closely aligned with the real physical world, simplifying the injection of constraints and formal verification. Simulation training can efficiently cover various risk scenarios, making it suitable for productization paths with extremely high safety requirements. Its weaknesses, however, include the difficulty of completely eliminating the gap between simulation and reality, the complexity of system modeling, and the potential for high-precision sensor dependence to drive up overall costs. Furthermore, in certain scenarios requiring "common sense" or long-term social reasoning, purely physically rule-driven models may lack the flexibility and intuition of those incorporating language intermediaries.

Core Differences Between the Two Routes

Comparing the two routes, it becomes evident that they diverge significantly in several dimensions: "how the world is represented," "how decisions are formed," "the sources of training data," and "deployment strategies."

Regarding how the world is represented, VLA tends to express the world using semantic tokens, emphasizing abstract concepts and high-level intentions. This representation method facilitates the injection of human knowledge and rules into the system in language form. In contrast, the World Model represents the world as continuous state variables and spatial relationships between entities, highlighting geometric attributes, dynamics, and predictability.

In terms of reasoning mechanisms, VLA relies on the semantic reasoning capabilities of large language models and excels at handling long-term dependencies and comprehensive judgments in complex contexts. However, it must map language conclusions to specific actions and ensure they adhere to physical constraints. The World Model, on the other hand, directly conducts physical deduction and strategy generation in the state space. Its reasoning process is more closely aligned with physical laws, and the results are typically easier to verify. Nevertheless, it may lack the flexibility of the former in handling semantic ambiguity, rule interpretation, or long-term social behavior inference.

The sources of training data for the two also differ markedly. VLA relies more heavily on large volumes of annotated multimodal data, real road scenario data, and language data for alignment. The World Model, conversely, heavily depends on high-quality simulation data and multi-sensor fused real driving logs, with simulation data offering a clear advantage in terms of data volume and scenario controllability.

The two also exhibit different focuses in deployment strategies. VLA necessitates a more complex model stack to complete the full mapping from vision to language and then to control. The reasoning overhead and real-time requirements introduced by LLMs affect their direct application on the vehicle end. Consequently, many technical solutions adopt lightweight, model distillation, or hierarchical decision-making methods, placing high-level planning in the cloud or development stage and deploying strictly constrained execution modules on the vehicle end. The World Model's "cloud simulation training, vehicle-end model distillation" process is more straightforward, compressing the strategies learned in simulation to run on the vehicle end, where the vehicle-end system can make physical-level decisions directly based on real-time perception.

Final Thoughts

When comparing VLA and the World Model, it becomes apparent that each possesses its own strengths and limitations. It may be challenging to definitively conclude which one is more advantageous. In the future, VLA and the World Model may move toward deep integration, with VLA serving as the "brain" for perception and decision-making, responsible for understanding complex scenarios and high-level planning, and the World Model becoming the "cerebellum" for control and execution, ensuring all actions comply with physical laws and safety boundaries.

-- END --