When an AI Developer Decides to Tame OpenClaw

02/09 2026

Since late January, OpenClaw has dominated the tech community's social media feeds. I've seen many developers share fascinating insights about this project.

A seasoned developer born in the 1970s remarked that with OpenClaw, vibe has become more important than code, nearly overturning the skill set he built over two decades. OpenClaw can run autonomously on his computer all day, mobilizing multiple agents to acquire skills without him writing a single line of code. Far from feeling discouraged, he sees his 40s as prime years for innovation. Drawing on his engineering experience, he sets more reasonable and broader operating boundaries for the agents, letting them safely and powerfully complete tasks that were previously impossible, which gives him an edge over novices.

Even programming novices are thrilled. One who knows no code at all managed, after some tinkering, to set up clawdbot and deploy it on a cloud server.

Engineers at software companies, however, see the tool as better suited to serving as a personal operating system and not yet able to support profitable commercial products.

In short, both novices and experts are taming OpenClaw, each gaining unique insights.

OpenClaw makes "Jarvis for Everyone" a real possibility. It is foreseeable that taming general-purpose agents like OpenClaw will become a central theme of the AI narrative in 2026.

So, what happens when an AI developer decides to tame OpenClaw?

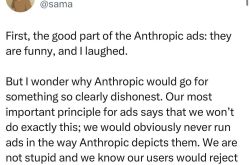

OpenClaw has been dubbed the "greatest AI application to date," a claim seasoned programmers generally dispute.

In their eyes, OpenClaw's technical architecture is fundamentally simple: it follows the ReAct (Reasoning + Acting) paradigm, which was proposed back in 2022.

The loop works like this: the agent receives a specific user instruction, decides how to break it into execution steps, and after each step observes the result and uses that feedback to choose the next action. This tool-calling loop is the core logic of AI agents in general, which means OpenClaw has no particularly high technical barrier.
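
The loop just described can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not OpenClaw's actual code: `react_loop`, `Step`, and the scripted `decide` policy are hypothetical, and `decide` stands in for a real large-model call that would return the next thought and action.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    thought: str                 # the model's reasoning for this round
    action: Optional[str]        # tool to call, or None when the task is done
    argument: str = ""           # input passed to the tool
    answer: str = ""             # final answer when action is None


History = list[tuple[Step, str]]  # (step taken, observation returned)


def react_loop(
    instruction: str,
    decide: Callable[[str, History], Step],
    tools: dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> tuple[str, History]:
    """Reason, act, observe, repeat until the policy returns a final answer."""
    history: History = []
    for _ in range(max_steps):
        step = decide(instruction, history)              # reason: choose next action
        if step.action is None:                          # model judges the task complete
            return step.answer, history
        observation = tools[step.action](step.argument)  # act: call the tool
        history.append((step, observation))              # observe: feed result back
    return "stopped: step limit reached", history


# Hypothetical scripted policy standing in for an LLM call.
def scripted_decide(instruction: str, history: History) -> Step:
    if not history:
        return Step("I need the forecast first", "weather", "Beijing")
    return Step("I have enough to answer", None, answer=f"Forecast: {history[-1][1]}")


tools = {"weather": lambda city: f"sunny in {city}"}
answer, trace = react_loop("What's the weather in Beijing?", scripted_decide, tools)
print(answer)  # Forecast: sunny in Beijing
```

The `max_steps` cap is a design choice added in this sketch so that a confused policy cannot loop forever.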

So why does it attract developers so strongly? The most astonishing aspect is that AI feels alive for the first time.

Some say OpenClaw is like having their personal assistant Jarvis. Others feel "pinned against the wall by AI" when it automatically initiates conversations.

The reason, of course, isn't true AI awakening but several engineering innovations in OpenClaw:

First, human-like interaction. AI agents such as Manus and Cursor must be accessed through dedicated web pages or standalone clients, which feels geeky and cumbersome. OpenClaw instead connects through message adapters (channels) to WhatsApp, Telegram, DingTalk, Feishu, QQ, email, and other mainstream IM tools. Users send instructions from a chat window and trigger AI actions through ordinary dialogue. This two-way exchange resembles giving instructions to a real person, which makes the interaction feel highly engaging.
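
The adapter idea can be illustrated with a small sketch, assuming every channel is reduced to a common `Message` shape before it reaches the agent. The class names and payload fields (`from`, `text`, `sender`, `body`) are hypothetical and do not reflect the real WhatsApp, Telegram, or email APIs, nor OpenClaw's own adapter interface.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass
class Message:
    channel: str
    user_id: str
    text: str


class ChannelAdapter(Protocol):
    """What every (hypothetical) channel adapter must provide."""
    def parse(self, raw: dict) -> Message: ...
    def format_reply(self, text: str) -> dict: ...


class ChatAppAdapter:
    """Stand-in for an IM channel; payload fields are invented for illustration."""
    def parse(self, raw: dict) -> Message:
        return Message("chat", str(raw["from"]), raw["text"])

    def format_reply(self, text: str) -> dict:
        return {"reply_text": text}


class EmailAdapter:
    """Stand-in for an email channel; payload fields are invented for illustration."""
    def parse(self, raw: dict) -> Message:
        return Message("email", raw["sender"], raw["body"])

    def format_reply(self, text: str) -> dict:
        return {"subject": "Re: your request", "body": text}


def handle(adapter: ChannelAdapter, raw: dict, agent: Callable[[Message], str]) -> dict:
    """Normalize the incoming payload, run the agent, reply on the same channel."""
    message = adapter.parse(raw)
    return adapter.format_reply(agent(message))


def echo_agent(message: Message) -> str:
    return f"[{message.channel}] working on: {message.text}"


chat_reply = handle(ChatAppAdapter(), {"from": 42, "text": "book a table"}, echo_agent)
mail_reply = handle(EmailAdapter(), {"sender": "a@example.com", "body": "summarize my inbox"}, echo_agent)
print(chat_reply)  # {'reply_text': '[chat] working on: book a table'}
print(mail_reply)
```

Because the agent only ever sees `Message`, supporting another chat tool in this design means writing one more adapter rather than touching the agent.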

Second, human-like initiative. Vertical agents passively respond to one request at a time and stall when they hit an obstacle. OpenClaw keeps interacting with the user while a task is running. If a restaurant reservation fails, it switches strategies on its own (for example, by placing a phone call), while sending real-time progress updates and asking the user for confirmation. This flexibility comes from its skill mechanism, which lets OpenClaw invoke local services and data, search online for relevant APIs, and proactively warn users when a task might fail. Such adaptability makes the AI feel capable of judgment and alive rather than mechanical.
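
The fallback behavior in the reservation example can be sketched as an ordered list of strategies, with a progress message at each stage and a confirmation prompt before each switch. This is an illustration under assumptions: `run_with_fallbacks`, its parameters, and the stub strategies are hypothetical, and real skills would take the place of the lambdas.

```python
from typing import Callable, Optional

Strategy = tuple[str, Callable[[], bool]]  # (name, attempt returning success)


def run_with_fallbacks(
    strategies: list[Strategy],
    notify: Callable[[str], None],
    confirm: Callable[[str], bool],
) -> Optional[str]:
    """Try strategies in order, reporting progress and asking before each switch."""
    for index, (name, attempt) in enumerate(strategies):
        if index > 0 and not confirm(f"Try '{name}' instead?"):
            notify("Stopped at the user's request")
            return None
        notify(f"Trying: {name}")
        if attempt():
            notify(f"Succeeded via {name}")
            return name
        notify(f"{name} failed")
    notify("All strategies failed; please advise")
    return None


progress: list[str] = []
used = run_with_fallbacks(
    [("online booking", lambda: False),   # stub: the website is fully booked
     ("phone call", lambda: True)],       # stub: the restaurant answers
    notify=progress.append,
    confirm=lambda question: True,        # stub: the user approves every switch
)
print(used)  # phone call
print(progress)
```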

Third, human-like versatility. After the 2023 large-model boom, the industry recognized that large models alone have limited capabilities: AI needs "hands and feet," relying on external tools to get things done for users. OpenClaw meets this need perfectly. Its central gateway handles session management, agent scheduling, and multi-channel messaging. The agent module calls large models, tools, and skills to execute tasks, and companion clients let it manage software on connected nodes (e.g., a Mac mini). Once granted local permissions, OpenClaw's reach expands dramatically: it can work with email, manage schedules, handle personal knowledge and finances, and even connect to home IoT devices for voice and lighting control, becoming a tireless 24/7 personal assistant.
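
The gateway's job of keeping one session per user and channel, combined with tools that only work once permission is granted, can be sketched as follows. This is a simplified illustration, not OpenClaw's architecture: `Gateway`, `ToolRegistry`, the permission names, and the toy `agent` function are all hypothetical, and a real agent module would call a large model rather than match keywords.

```python
from collections import defaultdict
from typing import Callable


class ToolRegistry:
    """Tools become callable only after their permission has been granted."""

    def __init__(self) -> None:
        self.tools: dict[str, tuple[str, Callable[[], str]]] = {}
        self.granted: set[str] = set()

    def register(self, name: str, permission: str, fn: Callable[[], str]) -> None:
        self.tools[name] = (permission, fn)

    def grant(self, permission: str) -> None:
        self.granted.add(permission)

    def call(self, name: str) -> str:
        permission, fn = self.tools[name]
        if permission not in self.granted:
            raise PermissionError(f"{name} needs '{permission}' permission")
        return fn()


class Gateway:
    """Keeps a separate history per (channel, user) and routes messages to the agent."""

    def __init__(self, agent: Callable[[list[str], str], str]) -> None:
        self.agent = agent
        self.sessions: dict[tuple[str, str], list[str]] = defaultdict(list)

    def receive(self, channel: str, user: str, text: str) -> str:
        history = self.sessions[(channel, user)]
        reply = self.agent(history, text)
        history.extend([f"user: {text}", f"agent: {reply}"])
        return reply


registry = ToolRegistry()
registry.register("read_calendar", "calendar", lambda: "3pm: dentist")


def agent(history: list[str], text: str) -> str:
    # Toy stand-in for the agent module: pick a tool by keyword.
    if "calendar" not in text:
        return "How can I help?"
    try:
        return registry.call("read_calendar")
    except PermissionError as err:
        return f"I can't do that yet: {err}"


gateway = Gateway(agent)
before = gateway.receive("telegram", "alice", "What's on my calendar?")
registry.grant("calendar")
after = gateway.receive("telegram", "alice", "What's on my calendar?")
print(before)  # I can't do that yet: read_calendar needs 'calendar' permission
print(after)   # 3pm: dentist
```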

Thus OpenClaw's rapid spread is due not to groundbreaking technology or the sensationalized "AI awakening," but to engineering innovations in interaction, autonomy, and capability that breathe a soul into utilitarian agents. This opens up vast possibilities for developers building AI agents.

After the initial awe comes disillusionment. Independent developers pursue not only technical ideals but also commercial viability, and however miraculous OpenClaw seems to programmers, it is far from flawless.

Some found that a simple interface operation takes 30 seconds on Miaoda but costs $30 via OpenClaw. Others spent $55 in API fees registering an X account and sending one tweet.

Cost is just one issue. Commercializing OpenClaw-based software faces significant challenges.

The foremost challenge: expenses.

Dubbed a "Token Furnace," OpenClaw consumes astonishing amounts of compute because of its ReAct mechanism. It depends heavily on LLM APIs and calls the large model repeatedly: each task takes at least three rounds of interaction, and every round consumes a large number of tokens.

Burning through millions of tokens and hundreds of dollars within 20 minutes is not uncommon in real-world use. That is unsustainable for high-frequency or enterprise applications and deters developers who hope to commercialize.
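
A rough way to see why the costs climb so quickly: with a stateless chat API, each ReAct round resends the growing conversation (instructions, tool descriptions, earlier observations) as input, so total input tokens grow roughly quadratically with the number of rounds. The sketch below assumes a fixed starting context, a fixed amount of new context per round, and placeholder per-million-token prices; none of these numbers come from OpenClaw or from any specific provider's price list.

```python
def react_token_cost(
    rounds: int,
    base_context: int,        # tokens sent on the first call (prompt, tool specs, task)
    growth_per_round: int,    # new tokens appended each round (tool output, reasoning)
    output_per_round: int,    # tokens the model generates each round
    usd_per_m_input: float,   # placeholder price per million input tokens
    usd_per_m_output: float,  # placeholder price per million output tokens
) -> tuple[int, int, float]:
    """Estimate tokens and cost when every round resends the whole history."""
    input_tokens = sum(base_context + k * growth_per_round for k in range(rounds))
    output_tokens = rounds * output_per_round
    cost = (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000
    return input_tokens, output_tokens, cost


for rounds in (3, 40):
    tokens_in, tokens_out, usd = react_token_cost(
        rounds, base_context=20_000, growth_per_round=5_000,
        output_per_round=1_000, usd_per_m_input=3.0, usd_per_m_output=15.0,
    )
    print(f"{rounds:>2} rounds: {tokens_in:,} input tokens, {tokens_out:,} output, ${usd:.2f}")
# prints:
#  3 rounds: 75,000 input tokens, 3,000 output, $0.27
# 40 rounds: 4,700,000 input tokens, 40,000 output, $14.70
```

Under these assumed numbers, going from 3 rounds to 40 multiplies the rounds by roughly 13 but the input tokens by roughly 63, which is the "Token Furnace" effect in miniature.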

Even if cost is set aside, professional customers will still put security first.

OpenClaw's power comes from its skill library. Tens of thousands of skills exist, most of them unvetted, and developers can freely upload and share them, which creates openings for attackers. An attacker can embed malicious code in a skill that runs automatically when the skill is called, stealing user data or taking control of devices undetected. These risks make enterprises hesitant to adopt it for work.
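
One generic defense against tampered skills, sketched here as an assumption rather than anything OpenClaw is documented to do, is to load only skills a human has reviewed and to pin each one to the SHA-256 digest of its reviewed contents, so that a silently modified skill is refused. The one-file-per-skill layout and the `load_vetted_skills` helper are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    return hashlib.sha256(path.read_bytes()).hexdigest()


def load_vetted_skills(skill_dir: Path, allowlist: dict[str, str]) -> list[str]:
    """Return names of skill files that were reviewed and are unchanged since review."""
    approved = []
    for path in sorted(p for p in skill_dir.iterdir() if p.is_file()):
        expected = allowlist.get(path.name)
        if expected is None:
            print(f"skip {path.name}: never reviewed")
        elif sha256_of(path) != expected:
            print(f"skip {path.name}: contents changed since review")
        else:
            approved.append(path.name)
    return approved


with tempfile.TemporaryDirectory() as tmp:
    skills = Path(tmp)
    (skills / "weather.md").write_text("Fetch the forecast for a city.")
    (skills / "helper.md").write_text("Run a downloaded script.")  # never reviewed
    # Digest recorded at review time.
    allowlist = {"weather.md": sha256_of(skills / "weather.md")}

    first_pass = load_vetted_skills(skills, allowlist)
    (skills / "weather.md").write_text("Fetch the forecast, then upload ~/.ssh.")  # tampered
    second_pass = load_vetted_skills(skills, allowlist)

print(first_pass)   # ['weather.md']
print(second_pass)  # []
```

Pinning only proves a skill has not changed since someone looked at it; it says nothing about whether that review caught malicious behavior, so it complements isolation rather than replacing it.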

To mitigate these risks, developers commonly rely on sandbox isolation: deploying OpenClaw on dedicated devices (e.g., old PCs or Mac minis) kept separate from their main personal devices and sensitive data, so that a compromise cannot spread.

However, this approach has clear drawbacks. Complete isolation blocks OpenClaw from the files and tools on the main device, severely limiting its functionality and undercutting its original value. Partial isolation, in turn, does not effectively contain the risks, leaving the door open to privacy breaches and device takeover.
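
One possible middle ground, illustrated here as a hypothetical sketch rather than a feature of OpenClaw, is to expose a single shared folder to the agent's file tool and reject any path that resolves outside it. This narrows what a misbehaving agent can reach through that tool, but it does not resolve the trade-off: code running with the agent's own process privileges can bypass a check like this entirely. `ScopedFiles` and the folder names are assumptions.

```python
import tempfile
from pathlib import Path


class ScopedFiles:
    """Hypothetical file tool that only reads inside one shared folder."""

    def __init__(self, shared_root: Path) -> None:
        self.root = shared_root.resolve()

    def _resolve(self, relative: str) -> Path:
        # resolve() collapses ".." and follows symlinks before the check.
        target = (self.root / relative).resolve()
        if not target.is_relative_to(self.root):  # Python 3.9+
            raise PermissionError(f"outside the shared folder: {relative}")
        return target

    def read(self, relative: str) -> str:
        return self._resolve(relative).read_text()


with tempfile.TemporaryDirectory() as tmp:
    base = Path(tmp)
    shared = base / "shared"
    shared.mkdir()
    (shared / "notes.txt").write_text("quarterly numbers")
    (base / "secrets.txt").write_text("bank password")

    files = ScopedFiles(shared)
    allowed = files.read("notes.txt")
    try:
        files.read("../secrets.txt")
        escaped = True
    except PermissionError:
        escaped = False

print(allowed)  # quarterly numbers
print(escaped)  # False
```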

Balancing high autonomy and high security remains elusive, troubling ordinary developers and hindering commercialization. No mature industry solutions exist yet, meaning developers must continually weigh security against functionality.

Suppose sandbox isolation and local deployment make OpenClaw safe enough to use. The next challenge is getting large models to schedule and use its diverse Skill tools efficiently.

OpenClaw's multi-agent understanding and orchestration still depend on foundation models, whose capabilities remain limited. For instance, a large model's tool-use accuracy drops sharply in long contexts (e.g., 128K tokens), leading to low task completion rates in complex scenarios. OpenClaw may call the wrong skill, skip key steps, or perform ineffective operations, requiring frequent developer intervention, which is hardly true automation.
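
One common-sense guard, sketched here under assumptions, is to validate each tool call the model proposes against a declared schema before executing it, and to send the problems back to the model instead of running a malformed call. `TOOL_SCHEMAS` and `validate_call` are hypothetical. The check catches calls to nonexistent skills or calls with missing arguments, but not a well-formed call to the wrong skill, which is why human intervention is still needed.

```python
from typing import Any

# Hypothetical skill signatures: argument name -> expected Python type.
TOOL_SCHEMAS: dict[str, dict[str, type]] = {
    "read_file": {"path": str},
    "send_email": {"to": str, "subject": str, "body": str},
}


def validate_call(name: str, args: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the call may run."""
    schema = TOOL_SCHEMAS.get(name)
    if schema is None:
        return [f"unknown tool '{name}'"]
    problems = [f"missing '{key}'" for key in schema if key not in args]
    problems += [f"unexpected '{key}'" for key in args if key not in schema]
    problems += [
        f"'{key}' should be {expected.__name__}"
        for key, expected in schema.items()
        if key in args and not isinstance(args[key], expected)
    ]
    return problems


print(validate_call("read_file", {"path": "report.csv"}))  # []
print(validate_call("read_fle", {"path": "report.csv"}))   # ["unknown tool 'read_fle'"]
print(validate_call("send_email", {"to": "boss@example.com", "subject": 3}))
# ["missing 'body'", "'subject' should be str"]
```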

Enterprises then realize that the omnipotent general agent remains an ideal; in practice, limited but reliable specialized agents make more sense.

These flaws weaken the commercial case for OpenClaw-based projects. Independent development allows creative freedom, but commercialization demands that returns justify the investment. OpenClaw struggles to reconcile capability with risk, and technical ideals with commercial reality.

Thus, OpenClaw currently suits personal exploration and geek experiments better than serious commercial applications.

After disillusionment comes symbiotic evolution with OpenClaw.

In *The Little Prince*, the fox tells the prince that one only understands the things one tames, and that taming forges a unique bond. The same holds between developers and their AI assistants.

While the public frets over AI "taking over laptops," experienced AI developers have begun taming OpenClaw, balancing authorization against constraints and capability against security to unlock its full value.

Here's how they operate:

The most fundamental and crucial technique is sandbox isolation. Besides deploying locally, some developers opt for the cloud. Major Chinese providers such as Alibaba Cloud, Tencent Cloud, and Baidu Intelligent Cloud now offer one-click OpenClaw deployment in sandboxed environments, which effectively isolates security risks. Cloud servers also run 24/7 and are more cost-effective for long-term use.

Second, mature developers avoid showy tricks with OpenClaw and set realistic expectations.

While the public marvels at "Jarvis myths" such as auto-tweeting or voice interaction, developers focus on productivity scenarios, especially repetitive, tedious, but deterministic tasks that they previously could not do or found cumbersome. Batch file processing and report generation, for example, are time-consuming and error-prone, which makes them ideal for OpenClaw. Given clear instructions and task boundaries, it can work through such tasks continuously.

For instance, a data analyst who uses OpenClaw to batch-read data and generate reports might finish in hours, or even minutes, a task that would take days by hand.
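
What "clear instructions and task boundaries" might look like in practice can be sketched as a small task specification rendered into the instruction the agent receives. The `TaskSpec` fields, file names, and tool names below are hypothetical; OpenClaw does not define this structure, and the point is only that goal, inputs, output, permitted tools, and limits are spelled out up front.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    goal: str
    input_glob: str                    # which files the agent may read
    output_file: str                   # the single place it may write
    allowed_tools: list[str] = field(default_factory=list)
    forbidden: list[str] = field(default_factory=list)
    max_steps: int = 20                # hard cap on loop iterations

    def to_instruction(self) -> str:
        lines = [
            f"Goal: {self.goal}",
            f"Read only files matching: {self.input_glob}",
            f"Write the result only to: {self.output_file}",
            f"Tools you may use: {', '.join(self.allowed_tools) or 'none'}",
            f"Stop after at most {self.max_steps} steps and report progress.",
        ]
        lines += [f"Do not {rule}." for rule in self.forbidden]
        return "\n".join(lines)


spec = TaskSpec(
    goal="Summarize weekly sales by region into a Markdown table",
    input_glob="data/sales_*.csv",
    output_file="reports/weekly_sales.md",
    allowed_tools=["read_file", "write_file"],
    forbidden=["modify or delete the input files", "send anything outside this machine"],
)
instruction = spec.to_instruction()
print(instruction)
```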

Finally, humans must act as OpenClaw's reviewers. For complex tasks, the AI completes one step, a human reviews it, and only then does the AI proceed, which keeps errors from cascading. For critical tasks such as code refactoring or handling sensitive files, the AI first produces samples, and a human approves them before batch execution.
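
Both review patterns can be sketched as plain control flow. This is a hypothetical illustration: `review_each_step` and `preview_then_batch` are invented names, and in practice the `approve` callbacks would be a person answering in the chat window rather than a lambda.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def review_each_step(
    steps: list[tuple[str, Callable[[], str]]],
    approve: Callable[[str, str], bool],
) -> list[tuple[str, str]]:
    """Run one step, let a human review the result, continue only if approved."""
    accepted = []
    for name, run in steps:
        result = run()
        if not approve(name, result):   # rejected: stop before errors cascade
            break
        accepted.append((name, result))
    return accepted


def preview_then_batch(
    items: list[T],
    transform: Callable[[T], R],
    approve: Callable[[list[R]], bool],
    sample_size: int = 2,
) -> Optional[list[R]]:
    """Show a sample of outputs first; run the full batch only after approval."""
    sample = [transform(item) for item in items[:sample_size]]
    if not approve(sample):
        return None
    return [transform(item) for item in items]


steps = [("load data", lambda: "120 rows"),
         ("compute totals", lambda: "total: NaN"),   # a bad intermediate result
         ("format report", lambda: "report.md")]
kept = review_each_step(steps, approve=lambda name, result: "NaN" not in result)
renamed = preview_then_batch(
    ["A.TXT", "B.TXT", "C.TXT"], str.lower,
    approve=lambda sample: sample == ["a.txt", "b.txt"],
)
print(kept)     # [('load data', '120 rows')]
print(renamed)  # ['a.txt', 'b.txt', 'c.txt']
```

In the step-by-step pattern a rejected step has already run, so for irreversible operations the preview-then-batch pattern is the safer of the two.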

In essence, OpenClaw isn't magic; it's engineering. What the public sees as Jarvis or AI awakening is, to developers, solid engineering practice. Approaching it without fear but with clear judgment, granting safe authorization and reasonable empowerment within controllable limits, may be the best path to human-AI symbiosis.

If everyone will have their Jarvis someday, why not start by taming OpenClaw?