Creating Games from a Single Image? Google Genie Unveiled: A Playground of Possibilities, But Not a 'Game Company Killer' Yet

02/09 2026

Creating Games from a Single Image—Is This for Real?

Before we dive in, let me pose a simple question:

Are you still eagerly awaiting the release of GTA 6?

I'm not sure about your sentiments, but Xiaolei is genuinely thrilled about its arrival. After all, it's been thirteen years since GTA 5 graced our screens, and it remains incredibly popular to this day. One can't help but wonder what kind of masterpiece Rockstar Games will unveil after such a long hiatus.

It may dampen the mood to say this now, but while Rockstar stays tight-lipped about the release date, Google's DeepMind team on the other side of the globe has quietly achieved a major breakthrough. If all goes as planned, it could completely change our understanding of what a game is.

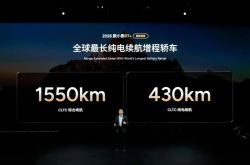

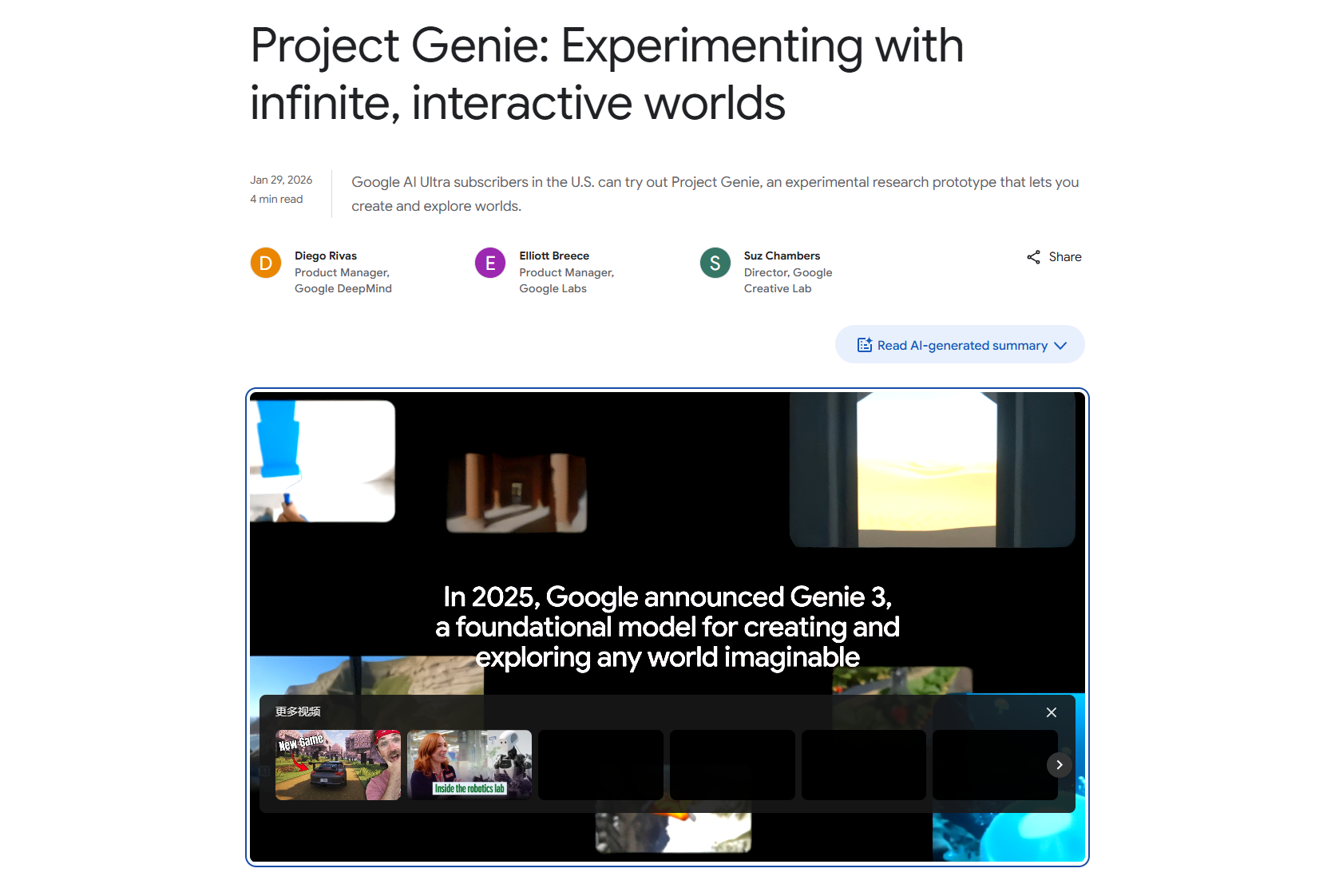

Recently, Google officially announced on its blog that it has opened up the prototype version of Project Genie for some users to experience, enabling them to generate their own playable game worlds.

(Image source: Leitech)

As soon as the news broke, the stock price of Take-Two Interactive, the parent company of GTA developer Rockstar Games, plummeted by 10%. Online gaming platform Roblox saw a drop of more than 12%, and game engine manufacturer Unity took the hardest hit with a 21% decline. In contrast, Chinese companies like NetEase and Tencent remained largely unscathed.

Taking this opportunity, Xiaolei wants to delve into the details: What exactly is this AI that's stealing the spotlight from GTA 6? What's the current experience like? And what might our games and virtual worlds look like in the near future?

With Just One Image, Everything Becomes Playable

Before we explore the technology, let's grasp just how revolutionary it is.

Traditionally, what was the process for creating a game? You needed a writer to craft the story, artists to create the visuals, programmers to write the code, and finally, rendering through an engine.

This process was both time-consuming and costly. Even gaming giants like Ubisoft and EA have invested decades of resources, yet no one can guarantee the final outcome.

But the advent of Project Genie has completely overturned this logic.

(Image source: Google)

Its core capability can be summed up in one phrase: generation is interaction.

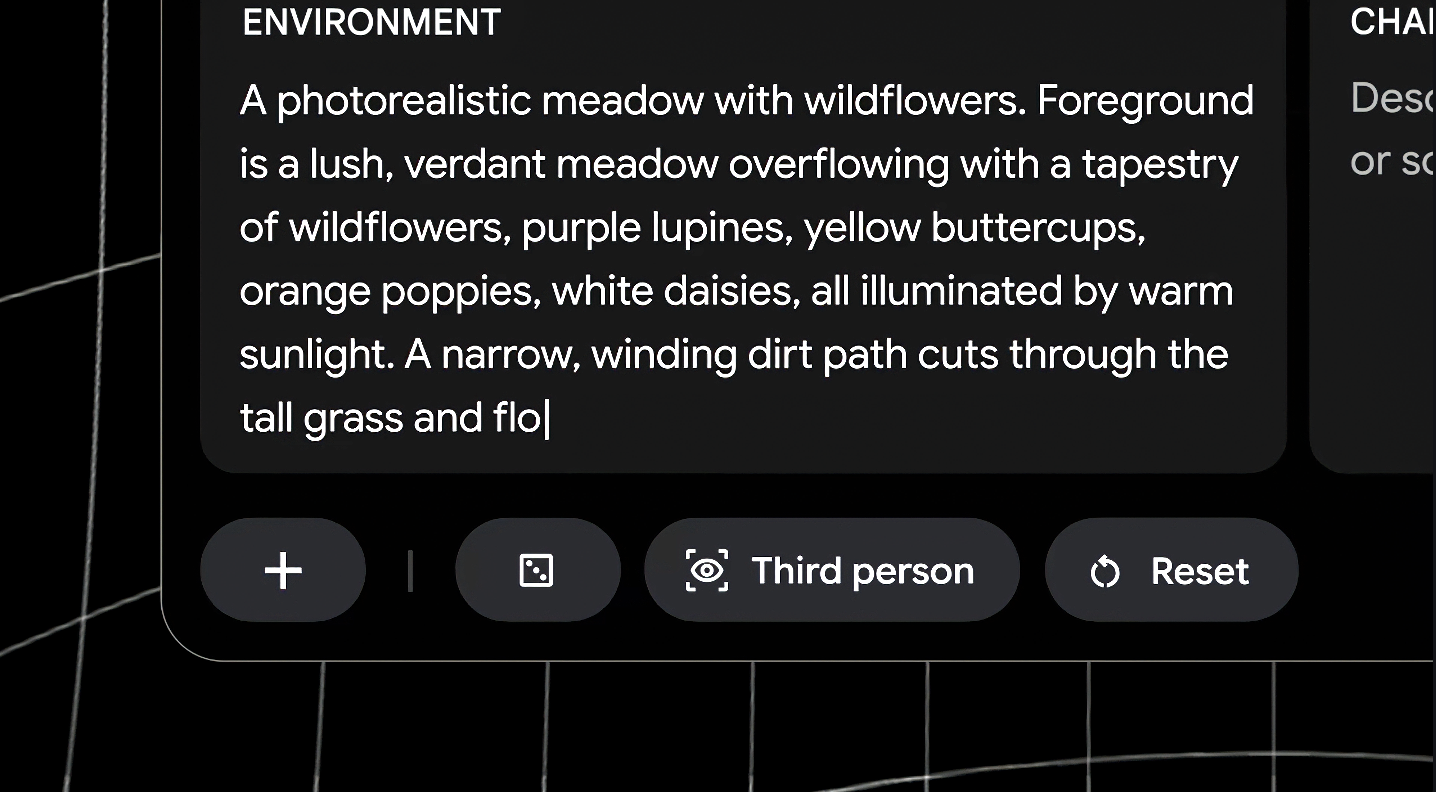

Give it a photo, a hand-drawn sketch, or even a simple text description, and it can construct the world and characters.

(Image source: Google)

Then, you can specify the game’s controls—whether walking, riding, flying, or driving—and Project Genie will attempt to understand physical laws and generate a manipulable world directly:

(Image source: Google)

Yes, just like this.

Once the world is generated, we can move around inside it directly. In Project Genie, as you walk forward, the path ahead generates in real time. As you turn the view, the camera follows in sync. The entire process feels like exploring a continuously unfolding space.

Not satisfied? Then modify the world.

Similar to other AIGC content, the world generated by Project Genie isn’t a one-time product. We can continue modifying it based on existing prompts—for example, replacing a dog with a pink balloon rabbit.

(Image source: Google)

You can even input a real-world image and let Project Genie turn it into an animated derivative work. Once it is done, the result can be exported directly as a video for easy saving or sharing.

Because of its incredibly powerful capabilities, creative netizens quickly found innovative ways to use it.

On Bilibili, an uploader shared a classic photo of streamer Xu Haolong. After Project Genie processed it, the “Showdog” in the image became a controllable character: pressing the arrow keys made him run and jump around the garage in the background, and he even interacted realistically with objects in the world.

(Image source: Bilibili)

Classic elderly meme? Make it move!

(Image source: Bilibili)

Give it an image from Genshin Impact, and it can automatically generate a wind glider, letting the character soar freely through the air and even simulating the glide.

(Image source: Bilibili)

On Twitter, someone casually drew a few stick figures on paper, added some wavy lines beside them to represent water, took a photo, and uploaded it to Project Genie. The system turned this doodle into a level where the stick figures could actually jump over the wavy lines. If they fell, it even simulated a falling effect.

And this is the most frightening thing about Project Genie:

It doesn’t require code or 3D modeling. By simply looking at an image, it works out what is ground, what is an obstacle, and how characters should move.

In comparison, domestic gaming giants haven’t been idle, but their approaches seem somewhat narrow-minded.

Look at NetEase’s Justice, which constantly brags about how its AI NPCs can chat, or Tencent’s efforts to make AI dominate noobs in Honor of Kings. However, these so-called breakthroughs are essentially just using AI to empower games—still just robots, far from disrupting the game creation process.

One can only say that domestic companies still have a long way to go.

Looks Great, But Not Mature

Hey, some readers might ask: if this thing is so amazing, are game companies going out of business tomorrow?

Well... not quite.

Although it might seem similar, Project Genie is fundamentally different from games like Dark Souls or Honor of Kings.

Traditional games are built on game engines. When you press the jump button, the program calculates how high you jump based on gravity parameters. When you throw an iron ball, the program uses classical physics formulas to calculate its falling speed. When you turn on a flashlight, the program simulates lighting and object materials to render the effect in real time.
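To make the contrast concrete, here is a minimal Python sketch of the engine-style approach: a jump computed from an explicit gravity parameter with a fixed time step. The names (`Body`, `step`, `simulate_jump`) and the constants are illustrative assumptions, not taken from any particular engine.

```python
from dataclasses import dataclass

GRAVITY = 9.81   # m/s^2, an assumed tuning parameter
DT = 1.0 / 60.0  # fixed time step, one tick per frame at 60 fps


@dataclass
class Body:
    y: float = 0.0   # height above the ground in metres
    vy: float = 0.0  # vertical velocity in m/s


def step(body: Body, jump_pressed: bool, jump_speed: float = 5.0) -> None:
    """Advance the body by one tick using explicit rules."""
    on_ground = body.y <= 0.0
    if jump_pressed and on_ground:
        body.vy = jump_speed
    body.vy -= GRAVITY * DT   # gravity changes the velocity
    body.y += body.vy * DT    # velocity changes the position
    if body.y < 0.0:          # the ground is solid: clamp and stop
        body.y = 0.0
        body.vy = 0.0


def simulate_jump(jump_speed: float = 5.0) -> float:
    """Press jump once and return the peak height reached."""
    body = Body()
    step(body, True, jump_speed)
    peak = body.y
    while body.y > 0.0:
        step(body, False, jump_speed)
        peak = max(peak, body.y)
    return peak
```

Because the result is computed from the parameters, the same input produces the same jump every time, and the peak lands within a few centimetres of the textbook value v²/(2g), about 1.27 m for these numbers.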

Project Genie, however, is based on Genie 3, Nano Banana Pro, and Gemini. Its core, Genie 3, is essentially a frame generation model using an autoregressive generation mechanism. It generates environmental states frame by frame based on world descriptions and user actions, rather than playing pre-generated content.

(Image source: Google)

I know, frame generation is hardly groundbreaking in an era when Nvidia promotes it so aggressively.

Its working principle is to look at the previous few frames and guess the next one.

By studying over 200,000 hours of game footage in Google’s massive database, Genie 3 has learned “what usually happens in the next frame when there’s a little figure on screen and the player presses the right button,” and generates corresponding visuals based on player actions.
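The "look at what came before and guess the next frame" idea can be sketched with a deliberately tiny stand-in. The Python below is a toy lookup table, not Genie 3: frames are short strings, the context is only the single previous frame, and every name (`render`, `train`, `predict`, `rollout`) is hypothetical.

```python
from collections import Counter, defaultdict

WIDTH = 5  # the toy world is a row of five cells


def render(pos: int) -> str:
    """Draw one frame; '@' marks the character."""
    return "".join("@" if i == pos else "." for i in range(WIDTH))


def true_step(pos: int, action: str) -> int:
    """Ground-truth rule, used only to record the training footage."""
    delta = {"L": -1, "R": 1}[action]
    return min(WIDTH - 1, max(0, pos + delta))


def record_footage(start_positions, actions="LR"):
    """Return (frame, action, next_frame) triples, like logged gameplay."""
    return [
        (render(p), a, render(true_step(p, a)))
        for p in start_positions
        for a in actions
    ]


def train(triples):
    """Count which next frame followed each (frame, action) pair."""
    table = defaultdict(Counter)
    for frame, action, next_frame in triples:
        table[(frame, action)][next_frame] += 1
    return table


def predict(table, frame: str, action: str) -> str:
    """Guess the next frame: the most frequently seen continuation."""
    seen = table.get((frame, action))
    if not seen:
        return frame  # unseen situation: guess that nothing changes
    return seen.most_common(1)[0][0]


def rollout(table, first_frame: str, actions: str):
    """Autoregressive loop: each guess becomes the input for the next."""
    frames = [first_frame]
    for action in actions:
        frames.append(predict(table, frames[-1], action))
    return frames
```

Trained on footage covering every position, `rollout(table, render(0), "RRL")` walks the character right twice and left once. Trained on footage that never shows position 3, the same table simply guesses when it gets there, which is a small-scale version of the glitches described in this article.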

The problem lies here: Genie 3 doesn’t understand physics. It lacks reliable logical calculations and instead expands the world through constant guessing. This leads to two very obvious flaws in the current experience.

First is the lack of consistency.

According to Google, players can only generate one-minute clips, a limit meant to keep the AI from overloading or breaking down logically.

Yet even within that one minute, we still see severe memory loss. Take the Xu Haolong example above: the player imported a front-facing photo, but after controlling the character for 10 seconds and turning back to the front view, the character’s face had changed completely, into that of a Caucasian man.

(Image source: Bilibili)

I imagine that, outside of roguelike games, not many people would accept a world where the same place changes every time they visit.
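One way to picture the memory loss described above is a generator that only remembers a bounded window of recent frames. The Python below is a toy illustration under that assumption; it does not describe how Genie 3 is actually implemented, and `ToyGenerator` and its fields are hypothetical names.

```python
from collections import deque
from itertools import count


class ToyGenerator:
    """Remembers only the last `memory_frames` views it has drawn."""

    def __init__(self, memory_frames: int):
        # Oldest entries fall out automatically once the window is full.
        self.memory = deque(maxlen=memory_frames)
        self._ids = count(1)

    def look(self, view: str) -> str:
        """Return the appearance shown for `view`."""
        for seen_view, appearance in self.memory:
            if seen_view == view:
                break  # still in memory: reuse what was drawn before
        else:
            # Forgotten or never seen: invent a fresh appearance.
            appearance = f"{view}-v{next(self._ids)}"
        self.memory.append((view, appearance))
        return appearance
```

With a window of three frames, looking at `"front"`, then `"left"`, `"back"` and `"right"`, then `"front"` again returns a different appearance the second time, because the first one has already left the window. With a window of ten, the same sequence returns the original appearance.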

Second is the lack of logic.

In traditional games, if you run into a wall, you bounce back, right?

But in Project Genie’s world, the AI sometimes guesses wrong. This can cause your character to suddenly phase through walls, melt into the floor mid-jump, or even have a tree suddenly spawn behind them while walking.

(Image source: Bilibili)

This experience is incredibly eerie—like a lucid dream where you know you’re controlling the character, but the world keeps deforming illogically.

To be clear, Genie 3 is far more consistent and stable than its predecessors and other vision-language and world models, but disorienting glitches still occur with significant probability, which is completely unacceptable in games that prioritize playability.

As such, its current value lies more in providing game designers a quick way to validate ideas.

For us ordinary players, it might serve as a novel toy for a few minutes of entertainment. True immersive entertainment is still a long way off.

World Models: AI’s Next Battleground

At this point, some might think: if the graphics are so rough and there are so many bugs, isn’t Google wasting money on this Project Genie? Has it taken a wrong turn in technological development?

In my opinion, quite the opposite.

The emergence of Project Genie represents far more than just game creation—it marks a crucial step for AI from “understanding static worlds” to “simulating real worlds.”

Today’s familiar video generation models like Sora and Runway can produce Hollywood-quality visuals, but they’re passive displays. Viewers can only watch, not interact.

(Image source: OpenAI)

Genie 3’s world model, however, aims to make AI understand: because I took an action, the world changed. This shift from passive display to active interaction, from static storytelling to dynamic simulation, is exactly the path toward Artificial General Intelligence (AGI).

Imagine if future versions of Genie could achieve 4K resolution, 60 frames per second, and perfectly accurate physics. What would that mean?

For starters, we wouldn’t need to train robots in the real world anymore. We could let AI robots fail a thousand times in Project Genie’s virtual world—learning to walk, to grasp a cup—before uploading the algorithm to physical robots.

Of course, Google isn’t the only player in this arena.

OpenAI has explicitly stated that Sora is essentially a world model. Nvidia’s newly released Cosmos model claims to focus on making AI understand physical laws. Numerous domestic companies are also quietly making moves. Everyone is betting on who can create that mature world prototype first.

For this new generation of technology, the show has just begun.


Source: Leitech

Images in this article come from: 123RF Royalty-Free Image Library