How Does SLAM Provide Spatial Awareness for Autonomous Driving?

02/09 2026

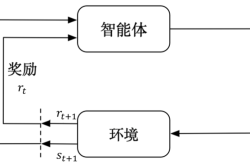

In artificial intelligence and robotics, enabling machines to understand space the way living organisms do is a core challenge. When humans enter an unfamiliar environment, they not only spot obstacles with their eyes but also quickly sketch the surroundings in their minds and judge how far away each obstacle is. In engineering, this seemingly instinctive spatial awareness takes the form of Simultaneous Localization and Mapping, commonly known as SLAM. In autonomous driving, SLAM is not only how vehicles "survive" in unknown environments but also the underlying support for centimeter-level positioning, path planning, and semantic understanding of the environment.

The Engineering Logic of Spatial Awareness

To understand SLAM, one must first grasp the logic of robot localization. To know its location, a robot needs a map of the environment; to build an accurate map, it must know its precise position at every moment. The core value of SLAM lies in breaking this "chicken-and-egg" dilemma. By processing sensor data in real time, it lets a mobile platform estimate its own pose while simultaneously mapping the geometric structure of a completely unfamiliar environment. This capability is crucial for keeping autonomous vehicles operating normally where Global Navigation Satellite System (GNSS) signals are weak or absent, such as deep tunnels, streets densely packed with skyscrapers, or complex underground parking lots.

In the sensor suite for autonomous driving, LiDAR and cameras are the two core hardware components for building a SLAM system. LiDAR-based SLAM obtains high-precision 3D point clouds of the environment by emitting laser beams and receiving the reflected signals. This data is geometrically faithful: the angle and distance returned by each beam give the vehicle a direct metric measurement of the surrounding physical world. In contrast, visual SLAM more closely resembles human perception, using monocular, stereo, or depth cameras to capture continuous image sequences. By analyzing how feature points shift between adjacent frames, visual SLAM can infer the camera's motion trajectory. Although visual solutions are prone to failure in very poor lighting or in scenes that lack texture, their rich color and texture information gives the vehicle semantic perception beyond pure geometric structure.
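To make the "angle and distance" description concrete, here is a minimal sketch of how a single planar LiDAR sweep could be turned into points in the sensor frame. The function name `scan_to_points` and its parameters are assumptions chosen for illustration, and a real 3D LiDAR would add an elevation angle per beam.

```python
import math

def scan_to_points(ranges, angle_min, angle_increment, max_range=100.0):
    """Convert a planar range scan to (x, y) points in the sensor frame.

    Hypothetical helper: beam k is assumed to point at
    angle_min + k * angle_increment (radians).
    """
    points = []
    for k, r in enumerate(ranges):
        # Skip beams with no valid return (zero, infinite, or out of range).
        if not (0.0 < r < max_range):
            continue
        angle = angle_min + k * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points

# Three beams at 0, 45 and 90 degrees; the middle beam got no return.
points = scan_to_points([1.0, float("inf"), 2.0], 0.0, math.pi / 4)
```

Only the two valid beams survive, giving one point straight ahead and one to the side of the sensor.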

The limitations of single sensors have driven autonomous driving systems toward multi-sensor fusion. This fusion is not a simple stacking of data but a deep collaboration. LiDAR can provide accurate initial depth values for the visual system, resolving the scale ambiguity of monocular vision; meanwhile, Inertial Measurement Units (IMUs) output acceleration and angular velocity at very high rates, "pre-filling" the vehicle's pose between the slower LiDAR and camera samples. In a tightly coupled fusion framework, data of different frequencies and characteristics are fed into the same optimization back end, which estimates the vehicle's state jointly, typically through filtering or nonlinear least-squares optimization. This mechanism ensures that even when one sensor temporarily fails, the autonomous driving system can still maintain continuous and stable positioning.
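The "pre-filling" role of the IMU can be sketched as simple dead reckoning between two slower sensor frames. This is a deliberately simplified planar model under stated assumptions: the IMU reports forward acceleration and yaw rate only, and bias, gravity, and noise are ignored. The name `propagate_pose` is hypothetical, and production systems typically use more careful IMU preintegration.

```python
import math

def propagate_pose(x, y, yaw, speed, imu_samples, dt):
    """Integrate planar IMU samples (forward accel, yaw rate) over time.

    Simplified illustration: no bias, gravity or noise handling.
    """
    for accel, yaw_rate in imu_samples:
        # Advance position using the current heading and speed.
        x += speed * math.cos(yaw) * dt
        y += speed * math.sin(yaw) * dt
        # Then update speed and heading from this IMU reading.
        speed += accel * dt
        yaw += yaw_rate * dt
    return x, y, yaw, speed

# A 100 ms gap between two LiDAR scans, filled by a 200 Hz IMU (20 samples).
# The vehicle drives straight at a constant 10 m/s.
samples = [(0.0, 0.0)] * 20
x, y, yaw, speed = propagate_pose(0.0, 0.0, 0.0, 10.0, samples, dt=0.005)
```

In this toy case the vehicle advances about one meter between scans, which is the motion a LiDAR-only pipeline would otherwise have to guess.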

The Precise Operation of the System Framework and Error Correction Mechanism

A complete SLAM system consists of four key modules: front-end odometry, back-end optimization, loop closure detection, and map construction. Front-end processing serves as the system's "perceptual outpost," tasked with extracting information representing environmental features from raw sensor signals. For visual solutions, this involves feature point extraction and matching or directly modeling the differences in pixel gray values; for LiDAR solutions, it entails point cloud downsampling, registration, and alignment. The pose changes calculated by the front end constitute a local motion trajectory. However, due to minor errors introduced by sensor noise and algorithmic approximations, this trajectory inevitably drifts as the driving distance increases. Without an effective correction mechanism, these initial errors, even if minuscule, will lead to large-scale distortions and ghosting in the map.
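A small numeric sketch can show why front-end drift matters. It chains relative motions into a global trajectory, once with perfect increments and once with a tiny constant heading error per step. The bias value and the helper names `compose`, `drive`, and `drift_after` are assumptions for illustration; real drift is noisy rather than a constant bias.

```python
import math

def compose(pose, delta):
    """Apply a relative motion (dx, dy, dyaw), given in the vehicle frame,
    to a global pose (x, y, yaw)."""
    x, y, yaw = pose
    dx, dy, dyaw = delta
    return (x + dx * math.cos(yaw) - dy * math.sin(yaw),
            y + dx * math.sin(yaw) + dy * math.cos(yaw),
            yaw + dyaw)

def drive(steps, step_len, yaw_bias):
    """Chain `steps` straight-ahead increments, each with a heading error."""
    pose = (0.0, 0.0, 0.0)
    for _ in range(steps):
        pose = compose(pose, (step_len, 0.0, yaw_bias))
    return pose

def drift_after(steps, yaw_bias=1e-4):
    """Distance between the biased estimate and the true straight path."""
    truth = drive(steps, 1.0, 0.0)
    estimate = drive(steps, 1.0, yaw_bias)
    return math.hypot(estimate[0] - truth[0], estimate[1] - truth[1])

drift_100m = drift_after(100)    # after 100 one-meter steps
drift_1km = drift_after(1000)    # after 1000 one-meter steps
```

With a heading error of only 0.0001 rad per meter, the position error is roughly half a meter after 100 m but on the order of 50 m after 1 km, so the error grows much faster than the distance driven.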

Back-end optimization acts as the system's "logical hub," responsible for globally reconciling the pose information passed from the front end. Early systems often adopted methods such as the Extended Kalman Filter, but their effectiveness was frequently limited over long, strongly nonlinear trajectories. Modern mainstream solutions have shifted toward graph-based optimization, treating each moment's pose as a node in a graph and each observed constraint as an edge connecting nodes. The goal of back-end optimization is to minimize the "total energy" of the graph, that is, the summed error between what each constraint measured and what the current node positions imply, by adjusting those positions. This method is more robust on large-scale maps and can effectively suppress the growth of cumulative errors.
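The node-and-edge idea can be illustrated with a toy one-dimensional pose graph. This is a sketch under stated assumptions rather than a production back end: poses are scalars, the first pose is held fixed, and plain gradient descent stands in for the Gauss-Newton or Levenberg-Marquardt solvers normally used. The function name `optimize_pose_graph` and all numbers are hypothetical.

```python
def optimize_pose_graph(initial, edges, iterations=2000, step=0.1):
    """Minimize sum of w * (x[j] - x[i] - z)^2 by gradient descent.

    Each edge is (i, j, z, w): a measured offset z from node i to node j
    with weight w. Node 0 is kept fixed to anchor the map.
    """
    x = list(initial)
    for _ in range(iterations):
        grad = [0.0] * len(x)
        for i, j, z, w in edges:
            residual = x[j] - x[i] - z
            grad[j] += 2.0 * w * residual
            grad[i] -= 2.0 * w * residual
        for k in range(1, len(x)):
            x[k] -= step * grad[k]
    return x

# Odometry over-estimates every 1.0 m step as 1.1 m.
odometry = [(k, k + 1, 1.1, 1.0) for k in range(4)]
# One extra constraint says node 4 is really 4.0 m from node 0.
loop_closure = [(0, 4, 4.0, 1.0)]
dead_reckoned = [0.0, 1.1, 2.2, 3.3, 4.4]
optimized = optimize_pose_graph(dead_reckoned, odometry + loop_closure)
```

With equal weights, the optimizer spreads the disagreement over all edges: each step shrinks from 1.1 to about 1.02, and the last node moves from 4.4 to about 4.08 instead of the error piling up at the end of the trajectory.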

Loop closure detection is a highly intelligent design within the SLAM system, endowing the platform with the ability to "recognize familiar routes." When an autonomous vehicle returns to a previously visited area after a long journey and the loop closure detection module recognizes the scene, the system can establish a strong spatiotemporal constraint. This recognition relies on bag-of-words models or deep learning features. The bag-of-words model transforms image features into a discrete form similar to words in text, judging image similarity by statistically analyzing the frequency and weight of word occurrences. Once a loop is detected, the system behaves as if it were pulling a loose string taut, correcting the accumulated positional drift in the back-end optimization to ensure the global consistency of the entire map.
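The frequency-and-weight comparison behind a bag-of-words model can be sketched with TF-IDF vectors and cosine similarity. The integer "visual word" IDs, the keyframe names, and the smoothed IDF formula below are assumptions for illustration; real systems quantize image descriptors against a trained vocabulary.

```python
import math
from collections import Counter

def build_idf(database):
    """Smoothed inverse document frequency for every visual word."""
    n = len(database)
    df = Counter(w for words in database.values() for w in set(words))
    return {w: math.log((1 + n) / (1 + d)) + 1.0 for w, d in df.items()}

def tfidf_vector(words, idf):
    """Term frequency times IDF; words unknown to the database get weight 0."""
    counts = Counter(words)
    return {w: (c / len(words)) * idf.get(w, 0.0) for w, c in counts.items()}

def cosine_similarity(a, b):
    dot = sum(v * b.get(w, 0.0) for w, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hypothetical keyframes, each described by the visual words it contains.
keyframes = {
    "garage_entry": [3, 3, 7, 12, 12, 12, 40],
    "ramp": [5, 8, 8, 21, 33, 33, 40],
    "street": [1, 2, 9, 9, 14, 27, 40],
}
idf = build_idf(keyframes)
query = tfidf_vector([3, 7, 7, 12, 12, 40, 41], idf)
scores = {name: cosine_similarity(query, tfidf_vector(words, idf))
          for name, words in keyframes.items()}
best_match = max(scores, key=scores.get)
```

Word 40 appears in every keyframe, so it carries little weight, while the rarer words 3, 7, and 12 make `garage_entry` the clear loop candidate for this query.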

It must be emphasized that loop closure detection is a "double-edged sword." Accurate loop matches can significantly enhance system precision, but false positives can destructively disrupt the map structure. Therefore, multiple verifications are incorporated into engineering practice. Temporal consistency checks ensure that detected loops are continuous and reasonable on the timeline; geometric structure checks use algorithms like RANSAC to verify whether two sets of observations truly align in physical space. For safety-critical applications like autonomous driving, it is better to miss some ambiguous loops than to make a single erroneous judgment.
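A geometric check of this kind can be sketched as RANSAC over 2D landmark correspondences: sample two matches, fit a rigid transform, and count how many other matches agree. The thresholds, the landmark coordinates, and the names `rigid_from_pair` and `verify_loop` are assumptions for illustration; real pipelines usually work with 3D points or image features.

```python
import math
import random

def rigid_from_pair(p1, p2, q1, q2):
    """2D rotation + translation that maps p1 to q1 and aligns p1->p2 with q1->q2."""
    theta = (math.atan2(q2[1] - q1[1], q2[0] - q1[0])
             - math.atan2(p2[1] - p1[1], p2[0] - p1[0]))
    c, s = math.cos(theta), math.sin(theta)
    return theta, q1[0] - (c * p1[0] - s * p1[1]), q1[1] - (s * p1[0] + c * p1[1])

def apply_transform(transform, p):
    theta, tx, ty = transform
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def verify_loop(src, dst, threshold=0.2, min_inlier_ratio=0.6, trials=100, seed=0):
    """Accept the loop only if one rigid transform explains enough matches."""
    rng = random.Random(seed)
    best = 0
    for _ in range(trials):
        i, j = rng.sample(range(len(src)), 2)
        t = rigid_from_pair(src[i], src[j], dst[i], dst[j])
        inliers = sum(1 for p, q in zip(src, dst)
                      if math.dist(apply_transform(t, p), q) < threshold)
        best = max(best, inliers)
    return best / len(src) >= min_inlier_ratio, best

src = [(0, 0), (4, 0), (4, 3), (0, 3), (2, 5), (6, 1), (1, 7), (5, 6), (7, 4), (3, 2)]
true_motion = (math.radians(30), 2.0, -1.0)
# Genuine revisit: 8 consistent matches plus 2 gross mismatches.
dst_true = [apply_transform(true_motion, p) for p in src[:8]] + [(50.0, 50.0), (-40.0, 10.0)]
# False candidate: a layout that no rigid motion can align (3x larger).
dst_false = [(3.0 * x, 3.0 * y) for x, y in src]

accepted_true, inliers_true = verify_loop(src, dst_true)
accepted_false, inliers_false = verify_loop(src, dst_false)
```

The genuine revisit is accepted because eight of the ten matches agree on a single transform, while the false candidate is rejected because no sampled transform explains more than one match. Raising `min_inlier_ratio` makes the check more conservative, in line with preferring a missed loop over a wrong one.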

The In-Depth Application and Value of SLAM in Autonomous Driving Scenarios

Within the autonomous driving architecture, SLAM is not merely a component of the perception module but a hub connecting perception, planning, and execution. SLAM provides real-time localization that a static, pre-built map cannot offer on its own. Although High-Definition Maps (HD Maps) supply rich static information for autonomous driving, the real world keeps changing. Road construction, tree trimming, and even seasonal vegetation changes can render pre-loaded maps inaccurate. By constructing local maps in real time and matching them against the environment, SLAM lets vehicles perceive these subtle changes and promptly update their own localization.

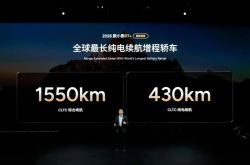

Furthermore, SLAM technology significantly enhances a vehicle's autonomous navigation capabilities in constrained environments. In multi-level parking garages or streets surrounded by high-rise buildings, satellite navigation errors can reach tens of meters, rendering it unusable for autonomous vehicles requiring precise docking or lane keeping. In such cases, SLAM can utilize onboard LiDAR and cameras to construct a relative coordinate system independent of external signals by identifying pillars, wall features in parking lots, or unique textures on streets. Combined with data from wheel speed sensors and IMUs, vehicles can achieve centimeter-level autonomous obstacle avoidance, path searching, and precise parking in these environments.

Another major value of a SLAM system lies in its ability to fuse heterogeneous data and tolerate faults. An autonomous vehicle equipped with a complete SLAM framework can continue to operate even if one sensor fails due to extreme weather or a hardware malfunction. For instance, in dense fog, camera visibility drops sharply, and the system can automatically increase the weight of LiDAR-based SLAM and the IMU to maintain positioning; when the vehicle encounters large areas of smooth glass curtain wall that LiDAR may misjudge, visual information can compensate for the missing geometric features. Through this cross-modal complementarity, SLAM significantly enhances the robustness and safety of autonomous driving systems in complex and ever-changing real-world conditions.
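One simple way to picture this re-weighting is inverse-variance fusion of two estimates of the same quantity: the less a sensor is trusted, the larger its variance and the smaller its influence. The scalar setting, the numbers, and the function name `fuse` are assumptions for illustration; a real system would apply the same idea through covariances inside its filter or optimizer.

```python
def fuse(estimates):
    """Inverse-variance weighted mean of (value, variance) pairs.

    Returns the fused value and its variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total

# Longitudinal position in meters as (value, variance).
lidar = (10.00, 0.01)
camera_clear = (10.20, 0.04)   # camera is trusted in clear weather
camera_fog = (10.20, 1.00)     # same reading, but much less trusted in fog

fused_clear, var_clear = fuse([lidar, camera_clear])
fused_fog, var_fog = fuse([lidar, camera_fog])
```

In clear weather the camera pulls the fused value to about 10.04 m, while in fog its weight falls to roughly 1% and the result stays within a few millimeters of the LiDAR reading.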

The Future Evolution Led by Semantic Understanding and Artificial Intelligence

With the development of deep learning technologies, SLAM is undergoing a transformation from "geometric mapping" to "semantic mapping." Traditional SLAM systems, although precise in depicting the position of every point in space, treat pedestrians, road signs, buildings, and moving vehicles as indistinguishable collections of point clouds or pixels. The emergence of semantic SLAM breaks this deadlock. By integrating algorithms like Convolutional Neural Networks (CNNs), the system can classify and segment objects in the scene while constructing geometric maps. This means the vehicle can understand that it is not just seeing an "obstacle" but a "pedestrian preparing to cross the road."

The introduction of semantic information has a profound impact on the positioning stability of autonomous driving. In crowded urban traffic, numerous dynamic feature points (such as surrounding moving vehicles) can interfere with the motion estimation of front-end odometry. Semantic SLAM can identify and exclude these feature points belonging to dynamic objects, using only static backgrounds like streetlights and building facades for positioning, thereby significantly reducing the probability of system failures. Semantic maps also support more advanced human-machine interaction and path decision-making. When the system identifies an upcoming "school zone" or "crosswalk," the planning layer can proactively make deceleration decisions based on semantic labels rather than passively avoiding obstacles based solely on geometric distance.
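The exclusion of dynamic feature points can be sketched as a label filter applied before motion estimation. The label set, the feature records, and the use of mean image flow as a stand-in for ego-motion are all assumptions for illustration; an actual system would take labels from a segmentation network and feed the surviving features into its odometry solver.

```python
# Assumed set of classes treated as potentially moving.
DYNAMIC_CLASSES = {"car", "pedestrian", "cyclist"}

def static_features(features):
    """Keep only features whose semantic label is not a dynamic class."""
    return [f for f in features if f["label"] not in DYNAMIC_CLASSES]

def mean_flow(features):
    """Average image-plane displacement, a crude proxy for ego-motion."""
    n = len(features)
    return (sum(f["flow"][0] for f in features) / n,
            sum(f["flow"][1] for f in features) / n)

# Hypothetical tracked features: static structure appears to move left by
# 2 px as the vehicle advances, while an overtaking car moves right by 3 px.
features = [
    {"label": "building", "flow": (-2.0, 0.0)},
    {"label": "streetlight", "flow": (-2.0, 0.0)},
    {"label": "car", "flow": (3.0, 0.0)},
    {"label": "car", "flow": (3.0, 0.0)},
]

naive_flow = mean_flow(features)
filtered_flow = mean_flow(static_features(features))
```

Without filtering, the overtaking car pushes the averaged flow to the wrong sign; with the dynamic features removed, the estimate matches the motion implied by the static background.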

Artificial intelligence has not only transformed the form of maps but also reshaped the underlying algorithms of SLAM. End-to-end learning-based visual odometry has begun to demonstrate potential surpassing traditional geometric methods by training on large-scale driving datasets to directly learn the mapping relationship between image sequences and motion vectors. In map rendering, the application of new technologies like Neural Radiance Fields (NeRF) enables SLAM to generate not just cold, fragmented point clouds but realistic 3D scene models with lifelike lighting and textures. These models not only provide more precise references for autonomous driving perception and decision-making but also greatly advance the construction of digital twins and high-fidelity simulation environments.

Final Remarks

SLAM is central to how autonomous vehicles perceive their environment and navigate on their own. By fusing multi-sensor data, it constructs high-precision maps of the surrounding environment in real time and simultaneously determines the vehicle's precise position within that map. This process not only provides the foundation for path planning and decision-making but also supports the reliable operation of vehicles in unknown or dynamic environments. As algorithmic efficiency and hardware capabilities continue to advance, SLAM is pushing autonomous driving toward greater safety and intelligence.
