Huawei Cloud Container Management: Balancing Technology and Ecosystem Leadership

08/18 2025

Huawei Cloud has emerged as a global leader in container management, proving that the best way to shape the future is to create it oneself.

- Introduction

Solomon Hykes, a bearded programmer who resembles a quintessential Silicon Valley coder, is in fact a sophisticated Frenchman at heart. In an informal Silicon Valley poll, he was hailed as one of the 12 coolest programmers in history, with his LinkedIn page brimming with praise from industry insiders. His crowning achievement is the creation of Docker, a groundbreaking container product.

Docker ignited a container revolution that persists to this day. A notable milestone was the development of Kubernetes by three Google engineers between 2013 and 2014. It combined the evolving Docker container technology with the engineering lessons of Borg, a large-scale cluster management system that had run inside Google for years. To this day, Kubernetes remains the most influential real-world demonstration of container technology in the industry.

Huawei Cloud, too, can be considered a steadfast supporter and practitioner of container technology worldwide.

In 2016, as the large-scale application of Kubernetes was just beginning, Huawei Cloud officially launched its first container service product, Cloud Container Engine (CCE). This marked Huawei Cloud's comprehensive deployment of container technology in the public cloud domain, integrating Huawei's expertise in hardware, networking, and operating systems to deeply fuse Kubernetes with proprietary technology.

Huawei Cloud's adoption of container technology kept pace with global mainstream trends while gradually developing its unique rhythm.

Both the evolution of container technology and the growing sophistication of its practical applications follow a clear progression.

From a purely technical standpoint, container technology has achieved a three-step leap in a decade.

Early container technology primarily replaced virtual machines in certain scenarios. In the latter's application, each application occupied a virtual server exclusively, akin to a truck carrying only one piece of equipment. While isolation was complete, the cost was high. In contrast, a container resembles a standard shipping container with a built-in, standardized protective layer (isolated environment). Regardless of the mode of transportation, the application within it can run seamlessly and directly.

The next advancement in container technology came with the emergence of technologies represented by Kubernetes and CCE. These act more like scheduling centers mobilizing millions of containers, offering functions such as automatic scaling, service discovery, and failure recovery.
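To make the automatic scaling mentioned above concrete, here is a minimal Python sketch of the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count scaled by the ratio of the observed metric to its target, rounded up. The function name, the replica bounds, and the sample numbers are illustrative assumptions. The real autoscaler also applies a tolerance band and stabilization windows, which are omitted here, and nothing in this sketch is specific to CCE.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling rule: ceil(current * observed / target), then clamp.

    min_replicas and max_replicas are hypothetical bounds chosen for this sketch.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured replica range.
    return max(min_replicas, min(max_replicas, raw))


# Example: 4 replicas at 90% average CPU with a 60% target scale out to 6.
print(desired_replicas(4, 90.0, 60.0))
```

The clamp matters in practice: without an upper bound, a traffic spike could request more replicas than the cluster can schedule.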

The advent of such technologies elevated containers from mere development tools to enterprise-level infrastructure. Especially after 2020, these mobilization centers began supporting cross-cloud deployment, addressing management challenges in enterprise-level hybrid cloud scenarios and making container technology one of the most crucial technology stacks for cloud-native environments.

Subsequently, containers continued to play a pivotal role in microservices, Serverless, center-edge deployment in the context of the Internet of Things, and the latest AI wave, thereby ushering in the third stage of their lifecycle – intelligence.

The growing sophistication of cloud container applications in practice tracks this technical trajectory.

Taking Huawei Cloud as an example, amid the wave of cloud-native technology reshaping enterprise IT architectures, it has established a container product matrix covering all scenarios. Cloud Container Engine (CCE) is compatible with Kubernetes and serves as the cornerstone of all container applications. Cloud Container Instance (CCI) provides agile Serverless capabilities, letting developers pay per request without managing servers, which significantly improves both developer focus and IT resource utilization. Unified Cloud-native Services (UCS) provides unified governance across clouds and regions. Together, these three form a closed loop of "build-run-extend" that is not only compatible with the Kubernetes ecosystem but also achieves breakthroughs of its own in performance, security, and global management.

Consider an example. Enterprises today commonly face high-concurrency scenarios such as AI training, real-time video processing, and flash sales on e-commerce platforms, where traditional container clusters often hit performance bottlenecks. CCE Turbo was developed specifically to free up CPU resources by offloading 100% of container network and storage forwarding tasks to smart network cards. Using the cloud-native AI scheduling engine Volcano, it automatically optimizes task scheduling strategies by sensing the distinct characteristics of AI, big data, and web services, achieving large-scale concurrent scheduling of 10,000 containers per second.

CCE Autopilot is a powerful tool for providing Serverless Kubernetes services. It goes beyond intelligent scheduling, allowing users to focus solely on the container application itself without managing nodes, clusters, or scaling policies.

Containers are inherently a kernel-level technology with fine-grained resource control, and CCE Autopilot bills at a matching granularity, based on the CPU seconds and memory GB a container actually consumes, so idle costs approach zero. With virtual machine technology, by contrast, resource utilization rarely exceeds 50%. Although virtual machines still offer certain advantages in physical isolation, their cost-effectiveness and technological sophistication lag far behind CCE Autopilot, a generational gap similar to the one between traditional fuel vehicles and intelligent new energy vehicles.
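The billing arithmetic behind this claim can be sketched in a few lines of Python. This is only an illustration: the `Rates` type, the function names, and every price and duration below are hypothetical, not Huawei Cloud's actual pricing or API. It compares paying for a day of always-on capacity with paying only for the seconds a workload actually runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Hypothetical unit prices; real prices are not given in this article."""
    cpu_per_core_second: float
    memory_per_gb_second: float


def usage_cost(cpu_cores: float, memory_gb: float, seconds: float, rates: Rates) -> float:
    """Cost of running the given CPU and memory footprint for `seconds`."""
    per_second = (cpu_cores * rates.cpu_per_core_second
                  + memory_gb * rates.memory_per_gb_second)
    return seconds * per_second


def idle_share(busy_seconds: float, billed_seconds: float) -> float:
    """Fraction of the billed time during which the workload did no work."""
    if billed_seconds <= 0:
        raise ValueError("billed_seconds must be positive")
    return 1.0 - busy_seconds / billed_seconds


# Assumed scenario: 2 cores and 4 GB, busy 6 hours out of a 24-hour day.
rates = Rates(cpu_per_core_second=0.00001, memory_per_gb_second=0.000001)
always_on = usage_cost(2, 4, 24 * 3600, rates)   # billed for the whole day
per_use = usage_cost(2, 4, 6 * 3600, rates)      # billed only while running
print(f"always-on: {always_on:.4f}, per-use: {per_use:.4f}, "
      f"idle share when always-on: {idle_share(6 * 3600, 24 * 3600):.0%}")
```

Under these assumed numbers the per-use bill is a quarter of the always-on bill, simply because the workload is busy for a quarter of the day. The saving scales directly with how idle the workload is.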

Furthermore, Huawei Cloud CCI is the world's first Kubernetes-based Serverless container service. By completely decoupling applications from infrastructure, it achieves "zero cluster management": users do not need to provision nodes in advance or maintain a cluster's Master and Worker nodes, and can directly submit container images to run workloads.

Due to the high technological intensity of container technology, it is challenging for the author to provide a more detailed explanation in a short article. What we should observe more keenly is the significant impact of container technology on the underlying technological trends of the cloud-native era, which can be broadly divided into three stages:

The first stage, which I term the "packing and moving" stage, involves the permanent migration of a vast number of applications from traditional virtual machine architectures to containers. This achieves software and hardware decoupling that was previously unattainable, paving the way for the large-scale application of containers on new and old IT resources in the future.

The second stage, which I call the "from individual to group" stage, involves the transformation of containers from a development tool into an infrastructure with the aid of automated orchestration and scheduling technology. This allows more enterprises and developers to redirect their focus from operation and maintenance technology itself to application development. This "refocusing" is a universal rule for high-dimensional technologies entering maturity, driving the emergence and popularization of typical cloud-native + container technologies such as microservices scaling, Serverless, edge container runtime, and zero cluster management.

The third stage, which is currently unfolding, is the era of containerized AI. Its prominent feature is that it not only enables container technology to serve AI (such as large model training and inference) but also leverages technologies like machine learning to make container technology itself highly intelligent, such as automatically optimizing container deployment in hybrid cloud environments. This stage can be said to have just begun, but its promising future is already evident.

In embracing AI, Huawei Cloud has already undertaken some cutting-edge practices.

I once wrote in an article: "AI applications will accelerate their integration into our lives. And the demand for infrastructure from AI will be unimaginable. Against this backdrop, the most efficient way to build China's computing power infrastructure is to use products like CloudMatrix384 to unify standards for super intelligent computing servers."

In fact, in terms of Cloud for AI, the cornerstone of Huawei Cloud's CCE intelligent computing cluster is the CloudMatrix384 super node, a super intelligent computing server. Each node is already extremely powerful on its own, and the cloud-native infrastructure built from such nodes is even more formidable. It provides large-scale super node topology-aware scheduling, PD (prefill-decode) separation scaling, AI load-aware elastic scaling, and ultra-fast container startup, significantly accelerating AI training and inference and improving the efficiency of AI task execution.

But more importantly, the CCE intelligent computing cluster not only benefits from CloudMatrix384 but also underscores the significance of highly integrated "super nodes" from another perspective. It breaks through the performance and scalability bottlenecks of traditional server architectures/clusters, providing full-stack optimized container technology support for AI model training and inference at the trillion-parameter level or even higher, and offering it in a product form with a very high degree of completion and concentration.

Simultaneously, AI technology is reshaping the cloud service experience. Huawei Cloud's newly released CCE Doer embeds AI Agents throughout the entire container usage process, covering business processes such as intelligent question and answer, intelligent recommendation, and intelligent diagnosis. It supports the diagnosis of over 200 key abnormal scenarios with a root cause location accuracy rate exceeding 80%, realizing automation and intelligence in container cluster management.

In fact, the CCE + CloudMatrix intelligent computing cluster solution has been successfully applied in large-scale training and inference practice scenarios.

A domestic Internet platform, facing increasing pressure from real-time review work with millions of daily content publications, built a trillion-parameter large model training and inference integration platform based on Huawei Cloud's CloudMatrix. Through CCE Turbo, intelligent scheduling of CloudMatrix384 super node computing power is achieved, enabling immediate review of massive content upon publication.

The visual creation platform Meitu also schedules diversified AI computing power efficiently on CCE and Ascend Cloud Services, supporting the deployment and inference of diverse models and algorithms. This underpins Meitu's large-scale training and rapid iteration, enabling its 200 million monthly active users to instantly share beautiful moments in life.

In the Serverless domain, Huawei Cloud's Cloud Container Instance (CCI) stands out with its extreme elasticity and cost-effectiveness, as demonstrated by STARZPLAY, the No. 2 OTT platform in the Middle East and North Africa.

STARZPLAY's old platform was a classic traditional silo architecture with no elasticity capabilities. It required substantial manpower for preparation in advance when handling super events like the Cricket World Cup. However, with the continuous growth of high-concurrency access, the "add water to flour, add flour to water" model became unsustainable.

At this juncture, Huawei Cloud presented STARZPLAY with a full-stack Serverless solution based on CCI. Its extreme elasticity and efficient operation and maintenance features garnered trust from customers in distant countries, thereby helping STARZPLAY "effortlessly" handle elastic access demands ranging from millions to billions for the first time during the 2024 Cricket World Cup. This resulted in a 20% reduction in resource costs, cementing STARZPLAY's reputation in this emerging market.

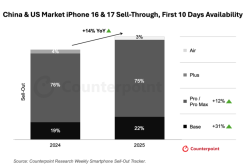

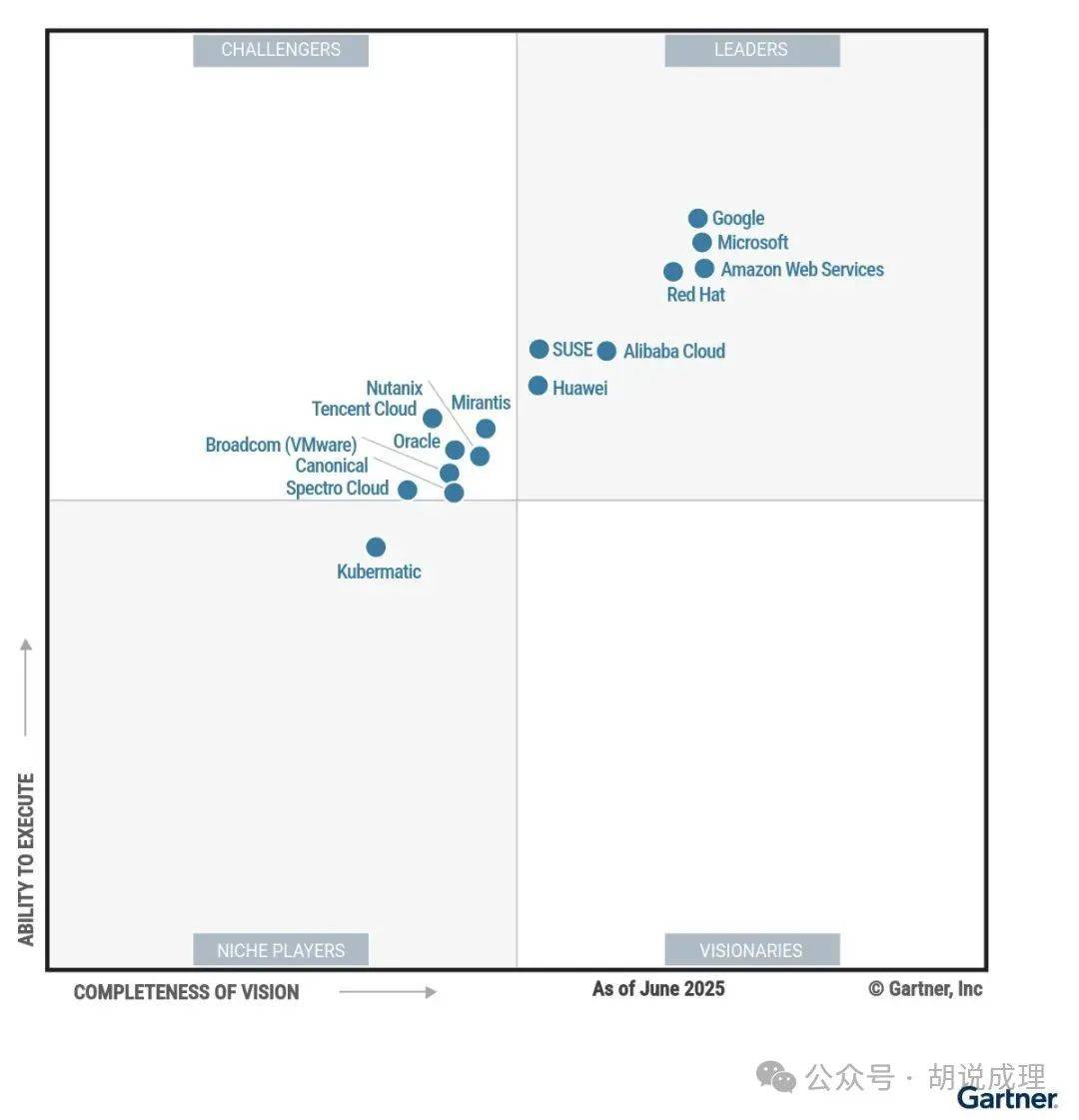

It has been nearly a decade since Huawei Cloud released its first container product. As a memorable birthday gift, on August 6, Gartner officially released the 2025 "Magic Quadrant for Container Management" report, with Huawei entering the global leaders quadrant.

With this, Huawei Cloud has secured a top-tier position in container management alongside leading global cloud vendors such as Google, Microsoft, and Amazon.

From a certain perspective, this signifies that Chinese cloud vendors have secured a crucial point in the long competition with the United States for the source of global cloud computing innovation.

The value of the Gartner leaders quadrant needs no elaboration, as even world-class vendors like Oracle have not entered, demonstrating the rigor of its selection criteria. However, what warrants deeper analysis is why Huawei Cloud can occupy this quadrant.

In the author's opinion, it can be summarized into three factors: technological advancement, extensive practice, and open-source contribution.

In terms of technological advancement, Huawei Cloud's "Cloud Native 2.0" strategy, which deeply integrates AI, edge computing, and Serverless, aligns with Gartner's evaluation direction for "Intelligent Container Management." This is a macro-strategic factor behind its entry into the leaders quadrant.

Huawei Cloud has made significant strides in its commitment to Cloud Native 2.0, marked by the introduction of container products such as CCE Turbo, CCE Autopilot, Cloud Container Instance (CCI), and Unified Cloud-native Services (UCS). As introduced earlier, these products offer users cloud-native infrastructure for scalable container workloads across public, distributed, and hybrid clouds as well as the edge.

Huawei Cloud's technological prowess can be summed up as "advanced and comprehensive."

In its container solutions, Huawei Cloud stands out by competing with top-tier cloud providers, having developed the industry's most comprehensive container system. This system encompasses public clouds, hybrid clouds, edge computing, and AI scenarios.

Technologically, Huawei Cloud offers a high-performance container engine capable of supporting clusters with tens of thousands of nodes. Built on the distributed QingTian architecture, it achieves millisecond-level scheduling response and improves resource utilization by over 30%. For instance, the Agricultural Bank of China has used Huawei Cloud containers to build a cloud-native infrastructure that supports the transition of its core business systems to a distributed architecture, handling over 1.9 billion transactions daily.

In terms of essential scheduling capabilities, UCS, a multi-cloud container orchestration engine, provides unified management across public clouds, private clouds, and the edge. Through Karmada's multi-cluster federation scheduling, it resolves the problem of resource silos in enterprise multi-cloud environments, a pivotal challenge for Chinese users transitioning from traditional IT architectures to cloud computing.
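One way to picture multi-cluster scheduling is as the problem of dividing a workload's replicas among member clusters in proportion to assigned weights. The Python sketch below uses a largest-remainder split to do that. It is a simplified illustration under stated assumptions, not Karmada's or UCS's actual implementation, and the function name, cluster names, and weights are all hypothetical.

```python
def split_replicas(total: int, weights: dict[str, int]) -> dict[str, int]:
    """Divide `total` replicas across clusters proportionally to integer weights.

    Uses the largest-remainder method so the shares always sum to `total`.
    """
    weight_sum = sum(weights.values())
    if total < 0 or weight_sum <= 0:
        raise ValueError("total must be non-negative and weights must sum to a positive value")

    # Whole-number share for each cluster (rounded down).
    shares = {name: total * w // weight_sum for name, w in weights.items()}
    leftover = total - sum(shares.values())

    # Hand the remaining replicas to the clusters with the largest fractional
    # remainders; ties are broken by cluster name for determinism.
    by_remainder = sorted(weights, key=lambda n: (-(total * weights[n] % weight_sum), n))
    for name in by_remainder[:leftover]:
        shares[name] += 1
    return shares


# Hypothetical clusters: a public-cloud region weighted twice as heavily
# as a private cloud and an edge site.
print(split_replicas(10, {"public-a": 2, "private-b": 1, "edge-c": 1}))
```

A real federation scheduler would also consider available capacity, placement constraints, and failover, none of which this sketch models.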

Integrating AI, Huawei Cloud introduces AI capabilities into the entire lifecycle of container management via CCE Doer. This supports intelligent diagnosis of over 200 abnormal scenarios with a root cause location accuracy exceeding 80%. Specifically in AI training, the combination of CCE intelligent computing clusters and Ascend computing power enables multi-container shared scheduling of GPU resources, cutting overall costs by 50%.

Huawei Cloud also emphasizes differentiated competitive advantages. It has merged its deep roots in communication technology with container technology, creating a strategic depth that most cloud providers struggle to match, especially in edge computing and 5G collaboration. Specifically, Huawei Cloud deeply integrates 5G MEC (Multi-access Edge Computing) with KubeEdge, achieving millisecond-level latency in smart grid and industrial internet scenarios. For instance, in an intelligent inspection project for an energy enterprise, the 5G + edge container solution reduced fault identification time from minutes to seconds.

Huawei Cloud's container technology also excels in practical applications.

Domestically, Huawei Cloud has led China's container software market share for five consecutive years. It is trusted by 80% of the top 100 internet companies, 75% of state-owned large banks and national joint-stock banks, 75% of the top 20 Chinese energy enterprises, and 90% of the top 30 automotive enterprises. These entities choose Huawei Cloud Container Service to drive digital transformation through cloud-native technology.

Reflecting this success, in Gartner's customer evaluation system, Gartner Peer Insights, Huawei Cloud Container Service ranks first globally with a score of 4.7 out of 5. It excels in delivery efficiency (4.8 points) and technical support (4.7 points).

Another crucial aspect of Huawei Cloud's development lies in its contributions to the open-source community.

The Cloud Native Computing Foundation (CNCF) is a globally influential open-source technology organization aiming to establish cloud-native technology as the global standard for application development and deployment. Among its four key directions, containerization ranks first. As a neutral organization independent of cloud vendors, CNCF's influence in cloud-native technology is unparalleled, having hosted over 170 open-source projects (as of 2025), forming a comprehensive cloud-native technology stack.

Huawei Cloud actively participates in open-source initiatives. As a long-standing contributor, it has participated in 82 CNCF projects, holds over 20 project management maintainer seats, and holds the only global CNCF TOC vice-chair position.

Regarding Huawei Cloud's active involvement in open source, Bryan, the cloud-native product manager at Huawei Cloud, shared that cloud-native, particularly the container market within it, is an ecosystem-driven market. For example, although Boeing faces competition from Airbus in the passenger aircraft market, it dominates the global cargo aircraft market with a share exceeding 80%, compared to Airbus's 15%. This disparity partly stems from Boeing's significant contribution to standardizing ULD (Unit Load Device) specifications, leading to compatibility across airlines, airports, and aircraft models, which Boeing pioneered with the 747's wide-body cargo hold dimensions. Consequently, 90% of global air cargo containers adhere to these specifications. Similarly, in software, Boeing open-sourced its cargo aircraft loading optimization algorithms (like LoadMaster), achieving a 78% penetration rate among global cargo airlines for load planning.

If air cargo containers are viewed as a form of container technology, it becomes evident that early participation in ecosystem building and active open-sourcing of technologies, including software and algorithms, benefits both adopters and contributors. For contributors, it strengthens their position as standard-setters and yields richer market returns.

While the basic concepts of container technology remain consistent, standards and application methods vary greatly. In the standards-driven cloud computing market, greater ecosystem contributions lead to higher market penetration, which in turn influences customers' subsequent choices. For Huawei to solidify its position among the top leaders, ecosystem investment and contribution are imperative.

As Huawei expands into the global market, increasing penetration through open-source contributions is essential.

However, open source requires both a willingness to contribute and a willingness on the part of others to adopt. Behind Huawei's notable achievements lie not just the donation of KubeEdge, Karmada, Volcano, Kuasar, and benchmark projects like Kmesh, openGemini, and Sermant in 2024, but also the growing user base and importance of its open-source technologies. This is crucial for Huawei's ascent to the global leaders quadrant.

Investment yields returns. In the dynamic container market, still dominated by American vendors, it is encouraging to see Chinese cloud computing providers, led by Huawei Cloud, progressing and shaping the future through relentless efforts.