Behind the Evolution of Deep Research Agent V2: The Dawn of the Super Agent Era

08/19 2025

From multimodal retrieval to deep multimodal browser agents and stronger underlying retrieval capabilities, and on to parallel architectures and MCP-based multi-agent collaboration, Kunlun Wanwei's series of updates to the Tiangong Super Agent reveals a steady path of AI evolution: agents are moving beyond office productivity tools to become genuine super AI assistants capable of independent retrieval, comprehension, and expression.

In 2025, super agents are fast approaching their own iPhone moment.

Author | Pi Ye

Produced by | Industry Entrepreneur

"The Story of Tencent" records that Zhang Xiaolong was once known within Tencent for two notable achievements: winning the tennis championship at a company sports event and being one of Guangzhou's biggest consumers of KENT cigarettes. With the release of WeChat 1.0 in 2011, that description became obsolete, as WeChat became synonymous with Zhang Xiaolong. According to Tencent's latest second-quarter financial report, WeChat has more than 1.4 billion monthly active users, roughly equal to China's entire population.

What made WeChat so successful? Over the years, this question has been a focal point for product managers. From PC internet to mobile internet and now to AI, WeChat's design and development path have served as the benchmark for numerous products.

A widely held view is that WeChat embodies the capabilities that defined China's mobile internet era: from speech technology and the underlying technology of social communication to UI design, product-level fulfillment of user needs, and internal information-flow processes. Each of these well-developed individual components contributed to the super app's universal adoption and explosive growth, and together their accumulation produced a qualitative leap.

History often repeats itself. Today, similar signals of quantitative change are emerging quietly amidst the agent application boom.

Kunlun Wanwei recently unveiled Skywork Deep Research Agent v2, which for the first time integrates multimodal retrieval, understanding, and cross-modal generation into deep research work, using an "Agent Empowering Agent" approach to further strengthen the Tiangong Super Agent.

This is not an isolated development. From August 11th to August 15th, Kunlun Wanwei captivated the domestic AI market by releasing a new model every day, ranging from video generation models and world models to unified multimodal models, agent models, and AI music creation models. These models not only extend AI into more domain scenarios but also help the individual components of agent infrastructure mature.

In 2025, what will the ultimate form of agent products be? No one can give a definitive answer, but it is evident that behind each product launch and model release, the accumulation of foundational capabilities is accelerating.

If earlier agent platforms were judged mainly on a single capability, basic AI search, today the underlying technical system that can break down and satisfy new demands, including multimodal retrieval and generation and deep information retrieval, is becoming a new yardstick for products.

Identifying new demands and solving real problems signals that agents are officially entering the second half of the competition.

I. 'True' Multimodal and 'Robust' Deep Search: Pushing the Boundaries

Before turning to the new standards for agent products, let's look at Kunlun Wanwei's latest release, Skywork Deep Research Agent v2. Seen more broadly, this "Agent for Agents" gives the Tiangong Super Agent a robust technical foundation.

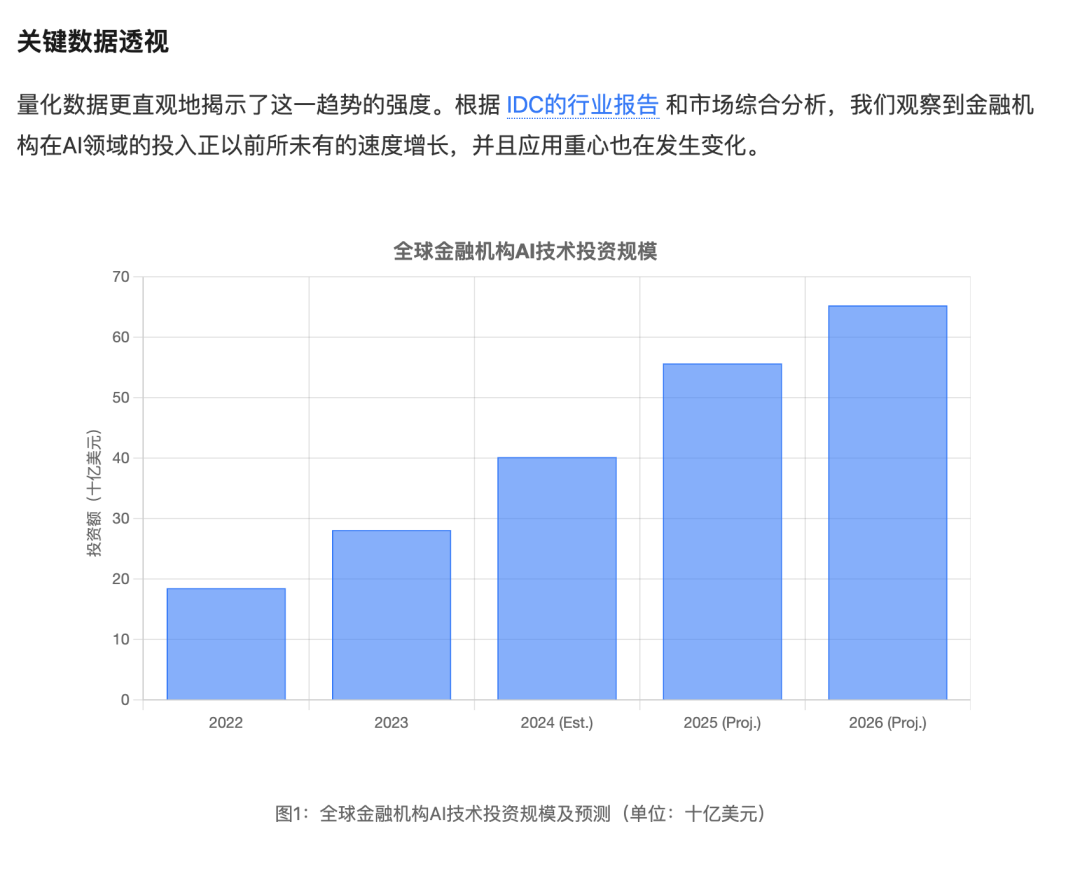

First, consider the most fundamental capability: retrieval. Beyond text-based retrieval, a newer demand is multimodal retrieval. Users now expect agent assistants to provide complex answers that integrate images and text.

When it comes to multimodal capabilities, most current agent products are stronger on the generation side. On the retrieval side, they mostly retrieve text and only convert it into charts for presentation at the end. Few agent products perform deep multimodal retrieval at the front of the pipeline, and this is precisely the headline feature of Skywork Deep Research Agent v2.

For a query about "the impact of AI large models on the education industry," Skywork Deep Research Agent v2 automatically retrieves relevant images, interprets them, and outputs a comprehensive answer that combines image and text content.

In other words, with the support of Skywork Deep Research Agent v2, the Tiangong Super Agent's retrieval is no longer limited to text: it treats image information as a core retrieval element and produces its answer from a combined understanding of images and text.

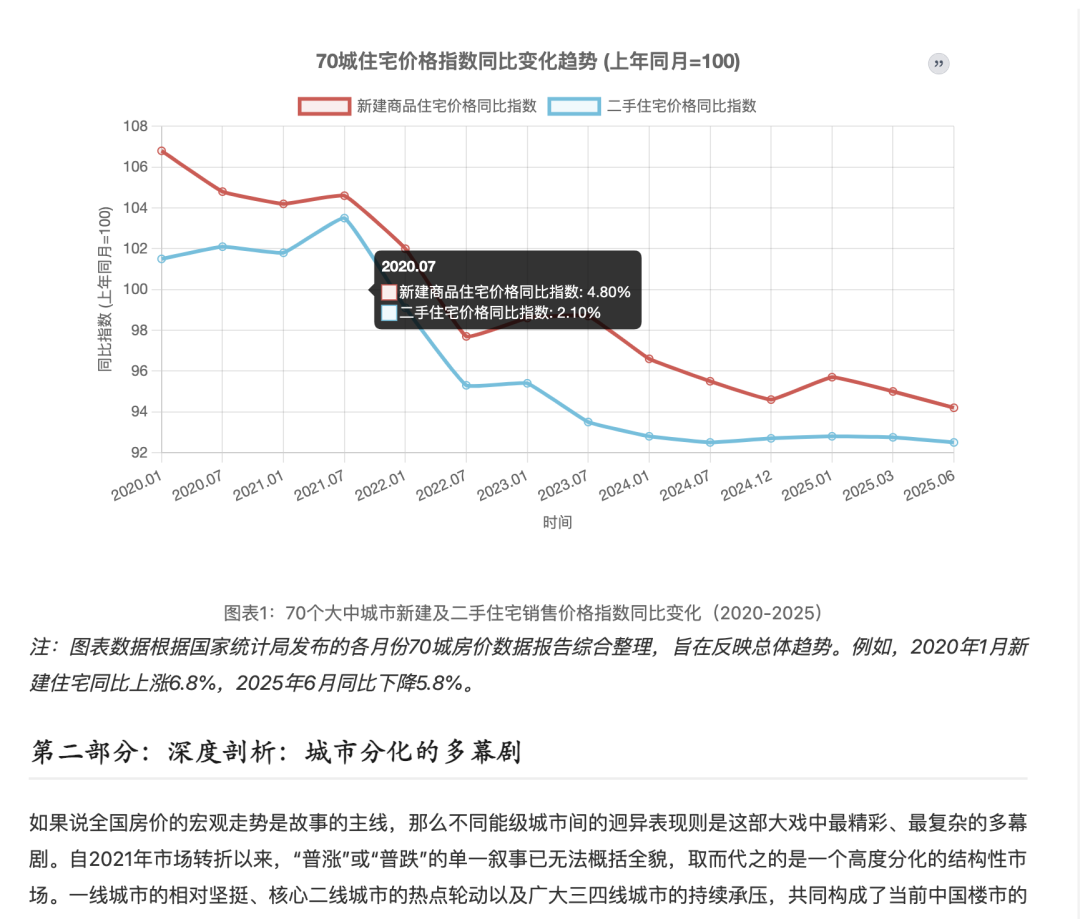

For housing-price questions, it automatically uses the "city housing price curve" from the relevant website as a retrieval source and combines it with text in its output;

For questions about "K12 online education products," it automatically retrieves user-persona images for K12 products on the market, interprets their content, and combines them with text in a comprehensive answer.

If multimodal retrieval upgrades the Tiangong Super Agent's retrieval at the level of individual content elements, deep information retrieval strengthens its retrieval system as a whole. There are two major highlights: first, the introduction of a deep multimodal browser agent; second, stronger underlying deep-retrieval capabilities achieved by establishing explicit standards.

Let's start with the deep multimodal browser agent. AI browsers are currently a hot field for applying AI. They build on the search-entry habits users formed in the mobile internet era and use the browser as the entry point for validating and deploying AI technologies. Companies at home and abroad, including Perplexity and OpenAI, have entered this field. However, AI browsers still face numerous problems.

For instance, they consume significant battery power and respond slowly, and many users find them sluggish. A closer look at their retrieval and reasoning traces shows that many browser agents get stuck repeatedly re-verifying results and running into dead ends, prompting jokes like "three tasks take a day to compute."

The problems don't end there. AI browsers struggle to get past document and web page permission restrictions, so their reasoning simply stops when they hit one. Power consumption is high, as shown by recent heated discussions about certain AI browsers causing significant hardware wear on users' devices. Most critically, most AI browsers have not escaped the inherent limitations of the browser itself: they merely search around existing browser pages and add very little on top.

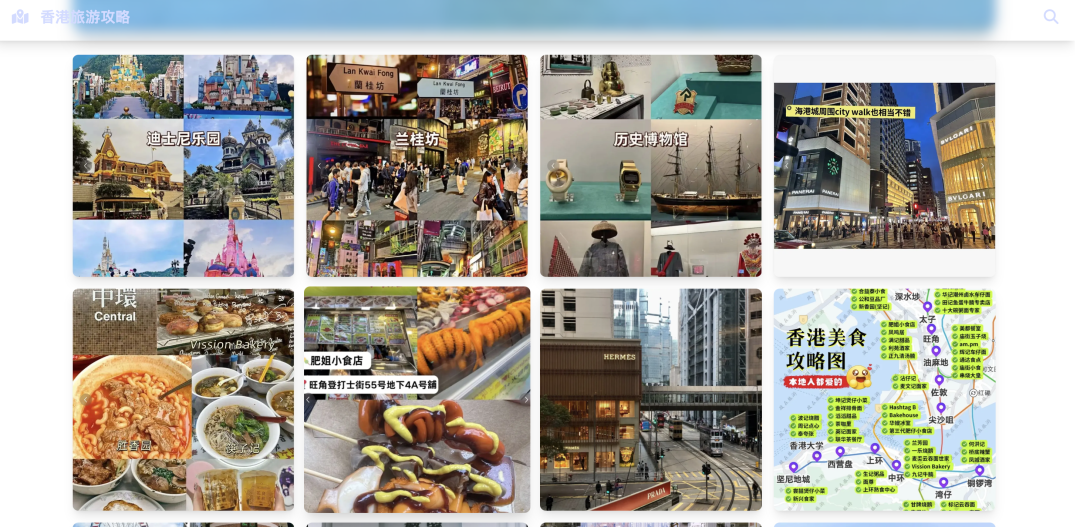

This is where the design of Skywork Deep Research Agent v2 stands out. With its deep multimodal browser agent, users get not only relevant web information but also content gathered across platforms such as Twitter, Instagram, Zhihu, and Xiaohongshu, including post text, images, bullet comments, and the comment sections beneath them. Both objective and subjective elements are identified and analyzed, and together they form the basis on which the Tiangong Super Agent retrieves, reasons, and answers.

For "Hong Kong travel guides," it automatically retrieves information from Xiaohongshu and produces a complete plan by combining Xiaohongshu posts with feedback from their comment sections;

When comparing Grok 4 with GPT-5, it automatically visits platforms such as Twitter and Xiaohongshu to retrieve relevant information and compile users' genuine evaluations and feedback;

When asked about particular basketball stars, the agent automatically collects, across platforms, the stars' recent tweet engagement metrics and representative comments, quickly presenting a full picture of public opinion.

Furthermore, the deep multimodal browser agent's parallel search and multi-action planning mechanisms significantly improve retrieval and generation efficiency, so tasks execute and return results quickly. When a step requires user input (such as a CAPTCHA), the agent automatically prompts the user to take over.
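The article does not describe how the parallel search and hand-off work internally. As a rough illustration only, the Python sketch below assumes each browser action can run as an independent coroutine; `fetch_source`, `PageResult`, and the `captcha-site` placeholder are hypothetical stand-ins, not Skywork's code.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class PageResult:
    source: str
    text: str
    needs_user: bool = False  # True when the page is blocked, e.g. by a CAPTCHA


async def fetch_source(source: str, query: str) -> PageResult:
    """Hypothetical stand-in for one browser action; a real agent would drive a browser here."""
    await asyncio.sleep(0.01)  # simulate network latency
    if source == "captcha-site":
        return PageResult(source, "", needs_user=True)
    return PageResult(source, f"{source} results for {query!r}")


async def parallel_search(query: str, sources: list[str]) -> tuple[list[PageResult], list[str]]:
    # Launch all browser actions concurrently instead of one after another.
    results = await asyncio.gather(*(fetch_source(s, query) for s in sources))
    completed = [r for r in results if not r.needs_user]
    # Steps that need a human (such as solving a CAPTCHA) are handed back to the user.
    handoffs = [r.source for r in results if r.needs_user]
    return completed, handoffs


if __name__ == "__main__":
    done, ask_user = asyncio.run(
        parallel_search("Hong Kong travel guide", ["xiaohongshu", "zhihu", "captcha-site"])
    )
    for r in done:
        print(r.text)
    for s in ask_user:
        print(f"Please take over on {s} (user input required)")
```

Because `asyncio.gather` waits on all actions at once, total latency is roughly that of the slowest source rather than the sum of all of them.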

Beyond the deep multimodal browser agent, Skywork Deep Research Agent v2 further consolidates the Tiangong Super Agent's basic retrieval capabilities. By defining a series of "standard" paths, it requires the agent to follow the corresponding chain-of-thought logic when interpreting questions, retrieving, and generating responses, producing higher-quality answers.

For example, strict standards for constructing search questions have been established, clearly defining five core attributes that high-quality search questions and their answers should have: diversity (covering a wide range of topics and difficulty levels), correctness (accurate answers), uniqueness (a single, definite answer), verifiability (answers can be checked against reliable sources), and challenge (deep reasoning is required). The same standards are also used to validate agent-generated answers.
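To make the five attributes concrete, here is a minimal sketch of how a question-answer pair might be screened against them. The `SearchQA` fields, the `MIN_HOPS` threshold, and the use of reasoning hops as a proxy for "challenge" are all assumptions for illustration; the article does not say how Skywork scores these attributes. Diversity is checked over the whole set, since a single question cannot be diverse on its own.

```python
from dataclasses import dataclass

MIN_HOPS = 3  # hypothetical threshold for "requires deep reasoning"


def _norm(s: str) -> str:
    return s.strip().lower()


@dataclass
class SearchQA:
    question: str
    proposed_answer: str          # answer attached to the question
    reference_answer: str         # answer confirmed independently
    candidate_answers: list[str]  # every answer found that fits the question
    sources: list[str]            # reliable sources confirming the answer
    reasoning_hops: int           # assumed proxy for how much reasoning is needed
    topic: str
    difficulty: str


def check_item(qa: SearchQA) -> dict[str, bool]:
    """Per-item checks for correctness, uniqueness, verifiability and challenge."""
    return {
        "correctness": _norm(qa.proposed_answer) == _norm(qa.reference_answer),
        "uniqueness": len({_norm(a) for a in qa.candidate_answers}) == 1,
        "verifiability": len(qa.sources) > 0,
        "challenge": qa.reasoning_hops >= MIN_HOPS,
    }


def check_diversity(items: list[SearchQA], min_topics: int = 2, min_levels: int = 2) -> bool:
    """Diversity applies to the whole question set: enough distinct topics and difficulty levels."""
    topics = {qa.topic for qa in items}
    levels = {qa.difficulty for qa in items}
    return len(topics) >= min_topics and len(levels) >= min_levels


if __name__ == "__main__":
    qa = SearchQA("Which city ...?", "Paris", "paris", ["Paris"],
                  ["https://example.org"], 3, "geography", "hard")
    print(check_item(qa))
```

An item would pass only if every value in `check_item` is `True`, and the set as a whole must also pass `check_diversity`.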

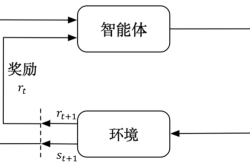

Moreover, in areas such as end-to-end reinforcement learning and parallel reasoning, Skywork Deep Research Agent v2 uses a number of dedicated designs so that agents verify and refine their reasoning over multiple rounds before producing final results, yielding answers that are fast, efficient, and accurate.

Objectively speaking, the three breakthroughs that Skywork Deep Research Agent v2 brings to the Tiangong Super Agent match users' evolving expectations of agent products: more multidimensional understanding of questions and answers, faster problem solving, more comprehensive cross-platform content, and reasoning that follows human chains of thought.

These expectations are precisely what Skywork Deep Research Agent v2's multimodal retrieval, deep multimodal browser agent, and deep information search were designed to address: improving and amplifying each node of the agent infrastructure, from retrieval and reasoning to thinking and answering, so that users get AI productivity gains better suited to their needs.

II. 'Agent Empowering Agent': The Era of Agent Armies Has Arrived

Beyond the breakthroughs of Skywork Deep Research Agent v2, a broader question is: where exactly do agents stand today?

Over the past two years, agents have become the consensus path for putting large AI models into practice: AI technology can deliver more of its value in agent form, whether in consumer (ToC) scenarios or in specific links of business (ToB) industries.

ToC and ToB agent products such as Manus, Betteryeah, and Dify have emerged from this consensus. Yet consensus aside, reality falls short.

Beyond the AI browser issues mentioned earlier, even agent products such as Manus still suffer from hallucinations, data security concerns, slow responses, and "low-value" answers, all of which continue to cast doubt on the value of agents. How should agent products evolve to become genuine productivity tools?

To some extent, Skywork Deep Research Agent v2 offers an answer. Its multimodal retrieval, deep multimodal browser agent, and strengthened underlying retrieval are "AI components" that each excel at a specific node. They are now embedded in the Tiangong Super Agent app, iterating on the underlying logic of its infrastructure and allowing it to evolve step by step.

Specifically, these improvements come from optimizing the technology at each node.

For instance, the upgraded multimodal retrieval relies on multimodal crawling and long-horizon multimodal information collection. The former features "Visual Noise Pruning," which, in plain terms, identifies the valuable parts among all captured elements and discards the rest, passing only valuable information to subsequent processing. This speeds up task progress and saves computational resources.
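The article gives no details of how Visual Noise Pruning scores elements. The sketch below only illustrates the general idea of filtering captured page elements before expensive multimodal processing; the noise categories, the size cutoff, and the caption-overlap relevance score are hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class PageElement:
    kind: str        # "image", "chart", "text", "ad", ...
    width: int
    height: int
    caption: str = ""


NOISE_KINDS = {"ad", "logo", "icon", "avatar"}  # hypothetical noise categories
MIN_AREA = 100 * 100                            # hypothetical size cutoff in pixels


def relevance(el: PageElement, query_terms: set[str]) -> float:
    """Toy relevance score: caption overlap with the query, plus a bonus for charts."""
    words = set(el.caption.lower().split())
    overlap = len(words & query_terms) / max(len(query_terms), 1)
    return overlap + (0.5 if el.kind == "chart" else 0.0)


def prune_visual_noise(elements: list[PageElement], query: str,
                       threshold: float = 0.3) -> list[PageElement]:
    terms = set(query.lower().split())
    kept = []
    for el in elements:
        if el.kind in NOISE_KINDS:
            continue  # decorative or promotional content
        if el.kind in {"image", "chart"} and el.width * el.height < MIN_AREA:
            continue  # too small to carry useful visual evidence
        if relevance(el, terms) >= threshold:
            kept.append(el)  # only these go on to costly multimodal understanding
    return kept
```

The point of pruning early is that the cheap checks run on every element, while the expensive vision model only sees what survives them.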

For the latter, Skywork Deep Research Agent v2 mimics how senior researchers read: screen first, then read closely. At each reasoning step, the model examines not only the result of the current action but also the context accumulated over the previous dozens of steps, amounting to tens of thousands of words. This approach significantly reduces computational overhead while ensuring that key visual evidence is fully used.
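The "screening first, then intensive reading" pattern can be sketched as a cheap skim over titles and snippets, followed by a full read of only the shortlisted pages, with findings kept in a bounded memory of recent steps. Everything here (`skim_score`, `deep_read`, the keyword-overlap scoring, and the 20,000-word budget) is an assumed, text-only simplification, not Skywork's implementation.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Doc:
    title: str
    snippet: str
    body: str


def skim_score(doc: Doc, terms: set[str]) -> int:
    """Cheap first pass: look only at the title and snippet."""
    return len(set((doc.title + " " + doc.snippet).lower().split()) & terms)


def deep_read(doc: Doc, terms: set[str]) -> list[str]:
    """Expensive second pass: scan the full body for sentences that mention the query."""
    return [s.strip() for s in doc.body.split(".") if terms & set(s.lower().split())]


class ResearchMemory:
    """Keeps findings from recent steps within a word budget (hypothetical limit)."""

    def __init__(self, max_words: int = 20_000):
        self.max_words = max_words
        self.notes = deque()
        self.words = 0

    def add(self, note: str) -> None:
        self.notes.append(note)
        self.words += len(note.split())
        while self.words > self.max_words and len(self.notes) > 1:
            self.words -= len(self.notes.popleft().split())  # drop the oldest notes first

    def context(self) -> str:
        return "\n".join(self.notes)


def research_step(query: str, docs: list[Doc], memory: ResearchMemory, k: int = 2) -> list[str]:
    terms = set(query.lower().split())
    # Screen everything cheaply, then read only the top-k documents in full.
    shortlisted = sorted(docs, key=lambda d: skim_score(d, terms), reverse=True)[:k]
    findings = [f for d in shortlisted for f in deep_read(d, terms)]
    for f in findings:
        memory.add(f)
    return findings
```

Each step can then reason over `memory.context()`, which carries earlier findings forward without re-reading the pages they came from.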

Similarly, the deep multimodal browser agent's cross-platform, full-element recognition rests on the Kunlun Wanwei AI team's in-depth optimization of how it works with the browser's Document Object Model (DOM). By leveraging the browser's native features, it extracts core information from web pages more accurately and efficiently.
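As a generic illustration of DOM-based extraction (not the Kunlun Wanwei team's method), Python's standard `html.parser` can walk a page's tag structure, skip containers that usually hold boilerplate, and keep the main text plus image alt text. The `SKIP_TAGS` list is an assumption; real pages often need site-specific rules.

```python
from html.parser import HTMLParser

SKIP_TAGS = {"script", "style", "nav", "footer", "header", "aside"}  # assumed boilerplate containers


class CoreContentExtractor(HTMLParser):
    """Keeps text and image alt text that sit outside boilerplate containers."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0          # > 0 while inside a skipped container
        self.chunks: list[str] = []
        self.images: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1
        elif tag == "img" and self.skip_depth == 0:
            alt = dict(attrs).get("alt")
            if alt:
                self.images.append(alt)  # alt text as a lightweight description of the image

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def extract_core(html: str) -> tuple[str, list[str]]:
    parser = CoreContentExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks), parser.images
```

Working from the tag structure rather than rendered pixels is what makes this kind of extraction cheap; the trade-off is that layouts built mostly from generic `div` elements need extra heuristics.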

Moreover, the team has conducted extensive action optimization for mainstream social platforms both domestically and internationally, ensuring compatibility and stability across different social networking platforms. This enhancement improves the success rate and efficiency of automated browsing, circumventing the limitations faced by traditional AI browsers.

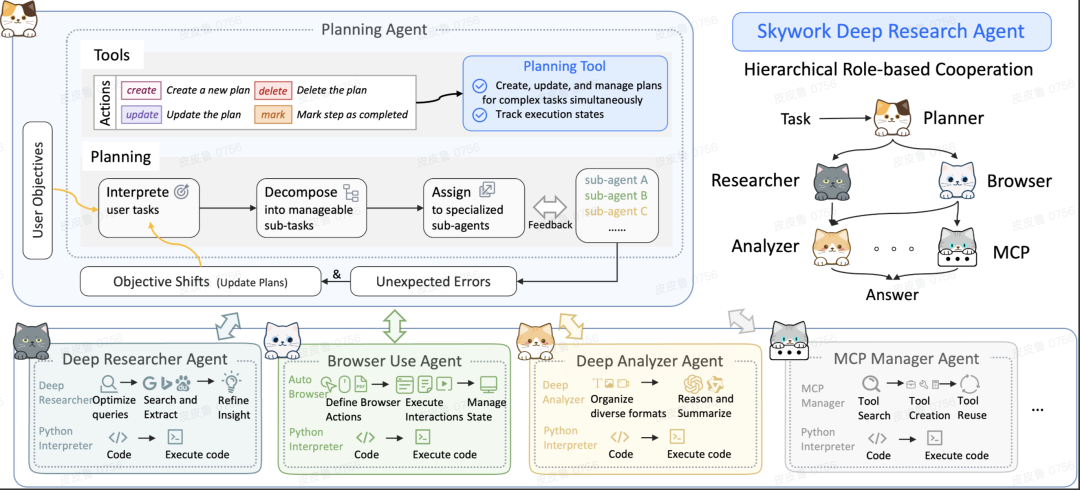

At the same time, the breakthrough in basic retrieval builds on the existing AI retrieval-and-response process, with each node strengthened in a more systematic and rational way. In addition, the multi-agent collaboration mechanism, currently the most popular approach on the market, has been integrated into the Tiangong Super Agent through Skywork Deep Research Agent v2, further strengthening its agent infrastructure and raising its intelligence ceiling.

These genuine advances in models and product technology make up the node-level agent capabilities that Skywork Deep Research Agent v2 demonstrates. They will be integrated into the Tiangong Super Agent's existing process chain, giving users smarter answers while the added machinery stays invisible at the front end.

This achievement also highlights a new reality: an agent is no longer a product that relies on a single technology or node; it is evolving into a super app that functions as a cohesive whole.

In other words, when a user runs a search or issues a command, the Tiangong Super Agent hands each step, from posing and analyzing the question to multimodal retrieval, answer generation, and verification, to a specialized agent. Because each link is specifically enhanced, every node becomes efficient, intelligent, and controllable, producing an answer that best meets the user's evolving needs.
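This division of labor can be pictured as a pipeline in which each stage is handled by a specialized agent passing a shared task object along. In this minimal sketch, every stage is a hypothetical stub (splitting on " and ", canned evidence, a string-containment check), chosen only to show the flow through analysis, retrieval, generation, and verification; it is not how Tiangong implements those agents.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Task:
    query: str
    sub_questions: list[str] = field(default_factory=list)
    evidence: list[str] = field(default_factory=list)
    answer: str = ""
    verified: bool = False


def analyze(t: Task) -> Task:
    # Hypothetical question-analysis agent: split a compound query into sub-questions.
    t.sub_questions = [q.strip() for q in t.query.split(" and ") if q.strip()]
    return t


def retrieve(t: Task) -> Task:
    # Hypothetical retrieval agent: one stubbed evidence item per sub-question.
    t.evidence = [f"evidence for: {q}" for q in t.sub_questions]
    return t


def generate(t: Task) -> Task:
    # Hypothetical generation agent: stitch the evidence into an answer.
    t.answer = "; ".join(e.removeprefix("evidence for: ") for e in t.evidence)
    return t


def verify(t: Task) -> Task:
    # Hypothetical verification agent: every sub-question must be covered by the answer.
    t.verified = all(q in t.answer for q in t.sub_questions)
    return t


PIPELINE: list[Callable[[Task], Task]] = [analyze, retrieve, generate, verify]


def run(query: str) -> Task:
    task = Task(query)
    for stage in PIPELINE:
        task = stage(task)
    return task
```

Because each stage shares one `Task -> Task` signature, any single stage can be swapped for a stronger specialized agent without touching the others.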

Kunlun Wanwei's approach also makes it clear that, to deliver truly intelligent and valuable answers, agent products are gradually being broken down into atomic, molecular, or modular components. Whether these atomized components work together as a cohesive whole is pivotal in determining whether agent assistants can become genuinely productive tools.

III. 2025: Awaiting the iPhone Moment for Super Agents

On Zhihu, someone asked: what iconic event truly marks the beginning of the mobile internet? One of the most upvoted answers was the release of the iPhone. Although later developments, such as the maturing App Store and versions 4.0 and even 5.0, were what truly made the iPhone popular worldwide, the moment Steve Jobs unveiled a phone without a physical keyboard marked the dawn of the mobile internet era.

Similarly, with Skywork Deep Research Agent v2, or rather, the Tiangong Super Agent empowered by Skywork Deep Research Agent v2, one can sense the changing atmosphere signifying the start of a new era.

If in 2024 people imagined agents only as something like WeChat or Alipay, a super app that could accomplish almost any task on instruction, today that picture is becoming more varied, more visible, and more concrete.

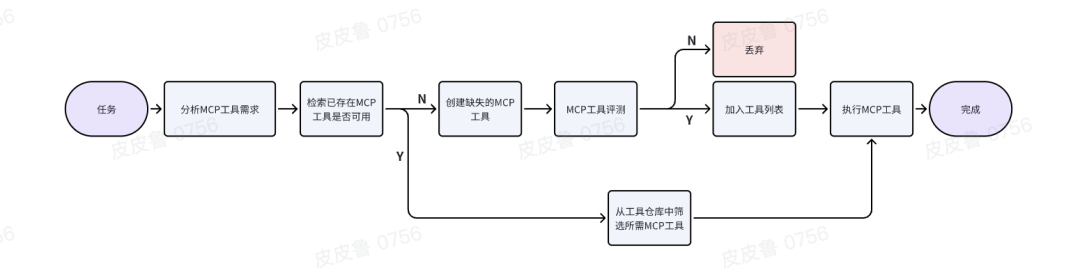

In the current Tiangong Super Agent app, a wide range of tasks spanning work, daily life, and general information-seeking can be accelerated. Skywork combines its model capabilities with tool capabilities to build a collaborative multi-agent framework. This system not only organizes multiple agents into an efficient team but also uses the agents' coding capabilities to create and manage MCP tools online in real time, significantly improving task-processing capability and adaptability to different environments.

Overall task-processing flow in Tiangong Super Agent: the MCP Manager Agent
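The article does not document the MCP Manager Agent's interface. The sketch below is a hypothetical `ToolManager`, not the Model Context Protocol SDK, showing the general idea of registering a tool at runtime from code an agent has written. Restricting `__builtins__` as done here is not a real sandbox; generated code should run in an isolated environment.

```python
from typing import Any, Callable


class ToolManager:
    """Hypothetical manager agent that registers tools at runtime (not the MCP SDK API)."""

    def __init__(self) -> None:
        self.tools: dict[str, Callable[..., Any]] = {}

    def create_tool(self, name: str, source: str) -> None:
        """Compile agent-written Python source that defines a function called `name`.

        WARNING: exec() runs arbitrary code; a real system must sandbox generated code.
        """
        namespace: dict[str, Any] = {}
        # Expose only a tiny set of builtins to the generated code (illustrative, not secure).
        exec(source, {"__builtins__": {"len": len, "sum": sum}}, namespace)
        if name not in namespace or not callable(namespace[name]):
            raise ValueError(f"source does not define a callable named {name!r}")
        self.tools[name] = namespace[name]

    def call(self, name: str, *args: Any) -> Any:
        if name not in self.tools:
            raise KeyError(f"unknown tool {name!r}")
        return self.tools[name](*args)

    def remove_tool(self, name: str) -> None:
        self.tools.pop(name, None)


if __name__ == "__main__":
    mgr = ToolManager()
    mgr.create_tool("average", "def average(xs):\n    return sum(xs) / len(xs)\n")
    print(mgr.call("average", [1, 2, 3]))  # 2.0
```

The manager keeps tool creation, invocation, and removal in one place, which is the role the caption above attributes to the MCP Manager Agent.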

This is the prototype of a super app in the AI era, with operating logic distinct from any previous product. Objectively, while products represented by the Tiangong Super Agent APP cannot yet accomplish all tasks and may not represent the ultimate form, a clear signal emerges: behind its growing intelligence, the foundation of this super app is becoming increasingly solid, and its operating mechanism increasingly clear and rational.

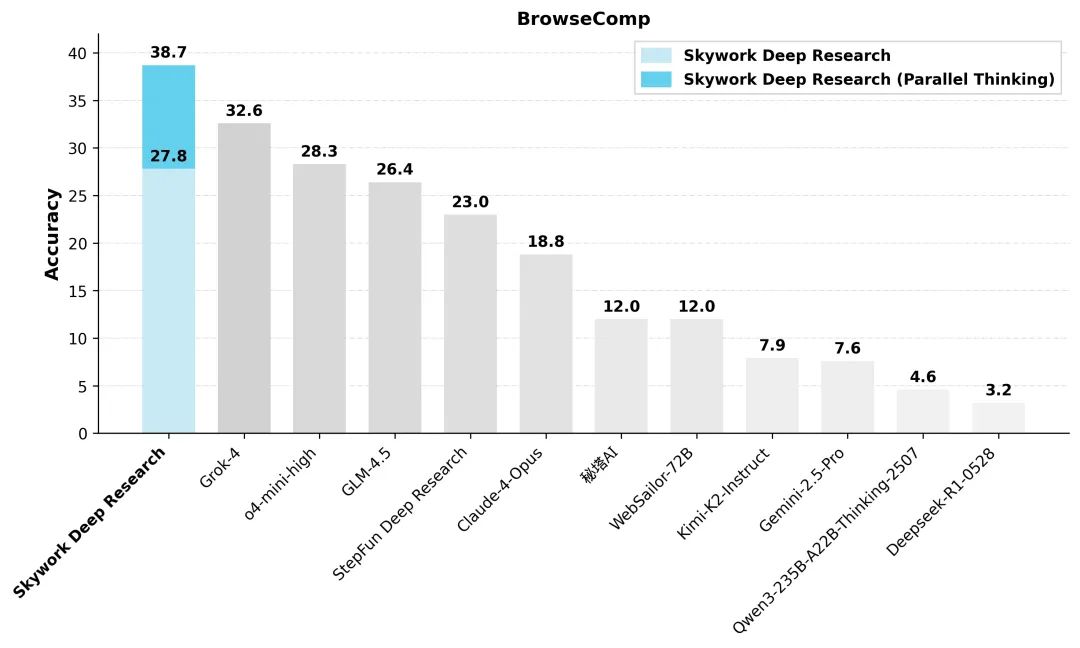

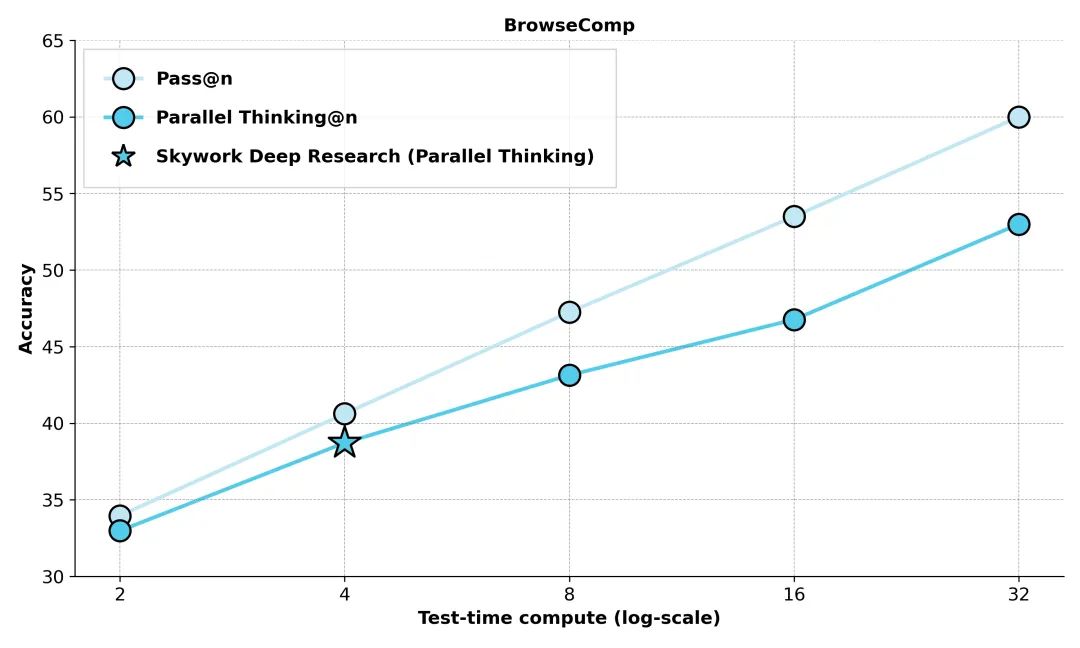

Among recent results, Skywork Deep Research scored 27.8% accuracy on BrowseComp, an authoritative search evaluation benchmark, ahead of most comparable products. With its in-house "Parallel Thinking" mode enabled, accuracy rises to 38.7%, a new industry state of the art (SOTA).

Notably, in the Parallel Thinking mode, Skywork Deep Research's accuracy rate continues to climb as the thinking time increases.
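Skywork has not disclosed how Parallel Thinking works. One well-known technique with a similar flavor is self-consistency: sample several independent reasoning paths and take a majority vote. The toy simulation below assumes a solver that is right 40% of the time and scatters its wrong answers; under those assumptions, majority-vote accuracy tends to rise as more paths (a stand-in for more thinking time) are sampled.

```python
import random
from collections import Counter
from typing import Callable


def parallel_think(solve: Callable[[random.Random], str], n_paths: int,
                   seed: int = 0) -> tuple[str, float]:
    """Sample n_paths independent reasoning paths; return the majority answer and its vote share."""
    rng = random.Random(seed)
    # Each path gets its own RNG so the paths are independent of one another.
    answers = [solve(random.Random(rng.getrandbits(32))) for _ in range(n_paths)]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / n_paths


def noisy_solver(rng: random.Random, p_correct: float = 0.4) -> str:
    """Toy reasoning path: right with probability p_correct, else one of four wrong answers."""
    if rng.random() < p_correct:
        return "correct"
    return rng.choice(["wrong-1", "wrong-2", "wrong-3", "wrong-4"])


def accuracy(n_paths: int, trials: int = 1000) -> float:
    """Fraction of trials in which the majority vote lands on the correct answer."""
    hits = sum(parallel_think(noisy_solver, n_paths, seed=t)[0] == "correct"
               for t in range(trials))
    return hits / trials


if __name__ == "__main__":
    for n in (1, 4, 16):
        print(n, accuracy(n))
```

The gain depends on wrong answers being scattered: if a solver's mistakes all agree with each other, voting over more paths stops helping.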

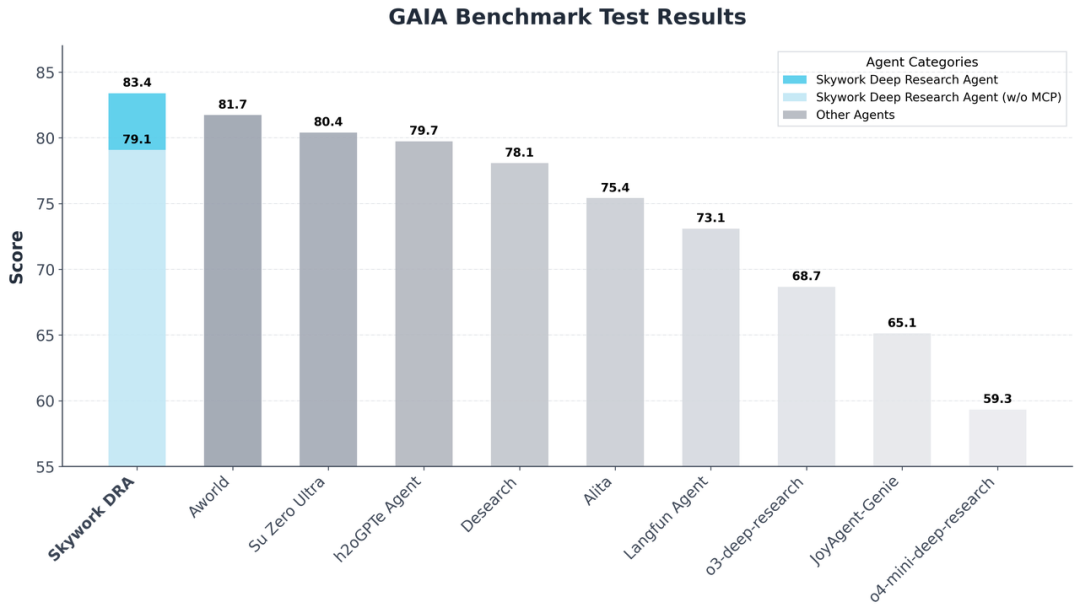

Skywork Deep Research Agent V2 has also achieved SOTA results on the GAIA test set. GAIA is a comprehensive benchmark for general-purpose agents that measures key capabilities of AI agents in real environments, such as multimodal reasoning, web browsing, tool use, long-horizon planning, environmental interaction, and task execution. Strong performance on GAIA is also considered a significant milestone in assessing progress toward artificial general intelligence (AGI).

From multimodal retrieval to deep multimodal browser agents and stronger underlying retrieval, and on to parallel architectures and MCP-based multi-agent collaboration, Kunlun Wanwei's series of model releases and the updated Tiangong Super Agent reveal a quietly advancing path of AI evolution: agents are evolving from AI office productivity tools into true super AI assistants capable of independent retrieval, understanding, and expression.

In 2025, the iPhone moment for super agents is drawing closer. We look forward to the AGI surprises Kunlun Wanwei has in store this week.