How Can China's Computing Power Flow Effortlessly, Like Water and Electricity?

08/28 2025

The intensive training of large AI models has transformed computing power into a scarce resource in the AI era, akin to oil.

Between 2023 and 2024, smart computing centers emerged as the flagship theme of "new infrastructure." Data indicates that as of September 2024, China's computing power scale reached 246 EFLOPS, with smart computing power increasing by over 65% year-on-year. Across various industries, there were over 13,000 computing power application projects. However, in stark contrast to the booming construction of smart computing centers, their operational status this year is concerning, with an average cabinet utilization rate of only 20% to 30%, and some enterprise-level centers even dipping as low as 10%.

This quantitative surge has not been accompanied by a corresponding qualitative leap. To understand why so much of China's computing power sits idle, Semiconductor Industry Insights interviewed Zhang Shuai, Ecosystem Director of WQXQ, about the company's ideas and practical pathways for resolving this dilemma.

01

Abundant Computing Power, Yet Underutilized

To gauge the utilization of computing power in smart computing centers, rack rates and utilization rates serve as key indicators. Rack rates focus on whether equipment is installed, racked, and powered on, while utilization rates emphasize whether the equipment is actively performing computational tasks for business purposes. According to a report by the China Academy of Information and Communications Technology, the overall utilization rate of computing power in launched smart computing centers nationwide stands at only 32%.

On April 16, reports emerged that local development and reform commissions were surveying computing power resources. Multiple regions are expected to issue survey notices in succession, covering completed, under-construction, and planned computing center projects. The survey data will serve as a crucial foundation for national planning of computing power resources. By planning and coordinating at a higher level, the relevant departments aim to avoid blind and repetitive construction across regions. Industry insiders see these regulatory actions as a reflection of structural problems in the industry, such as supply-demand imbalances and resource mismatches. Going forward, the construction of computing power infrastructure is expected to enter a new phase focused on quality and efficiency.

A closer inspection of the domestic computing power market reveals three prominent issues:

First, there is an inadequate supply of high-quality computing power, making it challenging for many enterprises to find computing power resources that align with their business needs. Most smart computing centers have a capacity of around 1000P (where 1P of computing power corresponds to 1 quadrillion, or 10^15, calculations per second). They are funded by highly dispersed private capital that lacks industry understanding, which makes it difficult for them to find suitable customers.
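To put the capacity figures in this article on one scale, here is a minimal unit-conversion sketch. It assumes "P" is shorthand for PFLOPS and uses the standard SI prefixes (peta = 10^15, exa = 10^18); the function names are illustrative, and the article does not say which numeric precision the figures refer to.

```python
# Unit arithmetic for the compute figures quoted in this article.
# Assumption: "P" means PFLOPS; precision (FP16, FP32, ...) is not specified.
PFLOPS = 10**15  # peta: one quadrillion operations per second
EFLOPS = 10**18  # exa: 1,000 PFLOPS


def p_to_eflops(p: int) -> float:
    """Convert a capacity given in P (PFLOPS) to EFLOPS."""
    return p * PFLOPS / EFLOPS


def eflops_to_p(e: int) -> int:
    """Convert a capacity given in EFLOPS to P (PFLOPS)."""
    return e * EFLOPS // PFLOPS


typical_center_p = 1_000      # "around 1000P" per smart computing center
national_scale_eflops = 246   # national scale as of September 2024

print(p_to_eflops(typical_center_p))       # 1.0
print(eflops_to_p(national_scale_eflops))  # 246000
```

On these definitions, a typical 1000P center is about 1 EFLOPS, a small fraction of the 246 EFLOPS national total.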

Second, the usage threshold is too high. Startups often struggle to find and afford computing power. Some companies manage to secure computing power but lack the know-how to utilize these bare-metal devices.

Third, there is fragmentation within the domestic chip ecosystem. The unique landscape of domestic AI infrastructure involves multiple models and chips, resulting in substantial heterogeneous computing power. The chip architectures and instruction sets of different vendors are incompatible, impeding the efficient flow of computing power resources. Because the ecosystem is incomplete, some domestic accelerator-card vendors struggle to get their products put to effective use.

Consequently, a new phenomenon has emerged in smart computing centers this year: artificial intelligence enterprises are experiencing a "computing power shortage," while smart computing centers are "selling cards" to survive. In a scenario where "multiple chips" cannot equate to "large computing power," WQXQ has entered the computing power race.

02

The 'Idealism' of the Tsinghua System

In May 2023, Professor Wang Yu from the Department of Electronic Engineering at Tsinghua University co-founded WQXQ with his doctoral students Xia Lixue and Dai Guohao. The company bears distinct Tsinghua DNA: Wang Yu is a Fellow of the Institute of Electrical and Electronics Engineers (IEEE), Director of the Department of Electronic Engineering at Tsinghua University, and co-founder of the AI chip company DeePhi Tech, which Xilinx acquired in 2018. Xia Lixue holds both bachelor's and doctoral degrees from the Department of Electronic Engineering at Tsinghua University, with research focusing on the co-optimization of AI chips and algorithms. Co-founder Yan Shengen previously served as Executive Research Director of the Data and Computing Platform Department at SenseTime, where he led a team that built a cluster of over 10,000 cards; he is currently an associate researcher at Tsinghua University. This Tsinghua pedigree has endeared WQXQ to the capital market since its inception. Less than two years after its establishment, the company had secured nearly RMB 1 billion in funding from renowned investors such as Sequoia China, Baidu, Zhipu AI, Qiming Venture Partners, and Legend Capital. In the hard-tech AI computing power sector, funding of this speed and scale is rare.

What investors recognize is not merely WQXQ's background but also its ambitions.

WQXQ defines itself as a "computing power operator" in the era of large models. Its core objective directly addresses the pain points of China's computing power market: with NVIDIA's CUDA ecosystem holding a dominant position, domestic chip vendors operate independently, and developers need to re-adapt their code for each hardware change. This fragmented ecosystem severely restricts the practical application value of domestic computing power.

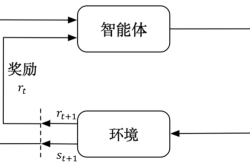

Whether the cards are domestic or from NVIDIA, what counts for users is effective computing power: the computing power that actually completes tasks. In response, WQXQ has built a cloud-based computing network characterized by "M×N," converging global computing power that is heterogeneous, geographically dispersed, and diversely owned into a network whose resources are "intelligently sensed, discovered in real time, and obtained on demand." The network not only connects resources but also allocates, migrates, and fine-tunes them. In terms of technical implementation, WQXQ's strategy is two-pronged: first, to shield hardware differences through a unified middleware layer, allowing developers to focus on their code rather than the underlying chips; second, to optimize the performance of domestic chips for scenarios such as large model training and inference, narrowing the gap in user experience until it is barely perceptible.
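The idea of a middleware layer that shields hardware differences can be illustrated with a small adapter-pattern sketch. This is not WQXQ's implementation: every class and function name below is hypothetical, the backends only format strings instead of calling a vendor runtime, and reading "M×N" as M models running on N chip families is an assumption made for the example.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Optional


class ChipBackend(ABC):
    """Hypothetical adapter: one subclass per chip family (not a WQXQ API)."""

    name: str = "abstract"

    @abstractmethod
    def run(self, model: str, prompt: str) -> str:
        """Execute `model` on this chip family and return its output."""


class VendorABackend(ChipBackend):
    name = "vendor_a"

    def run(self, model: str, prompt: str) -> str:
        # A real adapter would call vendor A's runtime here.
        return f"[{self.name}] {model}: {prompt}"


class VendorBBackend(ChipBackend):
    name = "vendor_b"

    def run(self, model: str, prompt: str) -> str:
        # A real adapter would call vendor B's runtime here.
        return f"[{self.name}] {model}: {prompt}"


class Middleware:
    """Single entry point: callers never touch chip-specific code."""

    def __init__(self, backends: List[ChipBackend]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends: Dict[str, ChipBackend] = {b.name: b for b in backends}

    def chips(self) -> List[str]:
        return list(self._backends)

    def submit(self, model: str, prompt: str, prefer: Optional[str] = None) -> str:
        # Use the preferred chip if it is registered, else the first one.
        backend = self._backends.get(prefer) if prefer else None
        if backend is None:
            backend = next(iter(self._backends.values()))
        return backend.run(model, prompt)


mw = Middleware([VendorABackend(), VendorBBackend()])
models = ["model_x", "model_y", "model_z"]  # M = 3 placeholder model names
results = [mw.submit(m, "hello", prefer=c) for m in models for c in mw.chips()]
print(len(results))  # 6 model/chip combinations served by 2 adapters
```

The design point is that developer code calls `submit` the same way for every chip, so supporting a new chip family means writing one adapter rather than re-porting every model.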

This technical concept was validated at the 20th Research Electronic Design Contest (REDC). As one of the companies setting contest topics, WQXQ sponsored the "Edge/Cloud Collaborative Application Electronic Design Challenge," which attracted 25 university teams. The final entries spanned fields such as edge AI accelerators, robot control frameworks, and intelligent inspection, demonstrating young developers' advances in both technical depth and application breadth. "We hope to shorten the cycle of industrializing scientific research results through a collaborative mechanism of industry, academia, research, and application," said Zhang Shuai, Ecosystem Director of WQXQ. At the REDC, the company not only provided real industrial problems but also communicated its technological needs directly to university teams. "By jointly cultivating compound talents who understand both algorithms and hardware, we can collectively build a comprehensive AI infrastructure ecosystem, extending from underlying chips to upper-level applications, and accelerating the large-scale implementation of AI technology across various industries."

03

The Ambition of the Three 'Boxes'

As a startup founded just over two years ago, WQXQ is still refining its products and is in early commercialization, which it is advancing through a combination of cloud and device-side offerings. When asked how WQXQ stays competitive through its technology and ecosystem strategy, Ecosystem Director Zhang Shuai highlighted three key indicators: "ease of use, stability, and cost-effectiveness."

At the 2025 World Artificial Intelligence Conference, WQXQ launched the three core products of its comprehensive AI efficiency enhancement solution, nicknamed the "Three Boxes": "Wuqiong AI Cloud," which serves global computing power networks of 10,000 to 100,000 cards; the "Wujie Smart Computing Platform," which serves large-scale smart computing clusters of 100 to 1,000 cards; and "Wuyin Terminal Intelligence," which serves terminals with limited computing power of one to ten cards. Together they aim to unlock the maximum potential of every unit of computing power across software and hardware scenarios spanning one to 100,000 cards.
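The three tiers can be written down as a simple lookup by card count. This is only an illustrative sketch: the ranges are the ones quoted above, the function name is made up, and because the article does not say how sizes between the quoted ranges (for example, 50 or 5,000 cards) are handled, the sketch returns `None` for them rather than guessing.

```python
from typing import Optional

# (product name, minimum cards, maximum cards), as quoted in the article.
TIERS = [
    ("Wuyin Terminal Intelligence", 1, 10),
    ("Wujie Smart Computing Platform", 100, 1_000),
    ("Wuqiong AI Cloud", 10_000, 100_000),
]


def tier_for(cards: int) -> Optional[str]:
    """Return the product whose quoted range contains `cards`, else None."""
    for name, low, high in TIERS:
        if low <= cards <= high:
            return name
    return None  # falls in a gap the article does not describe


print(tier_for(8))       # Wuyin Terminal Intelligence
print(tier_for(500))     # Wujie Smart Computing Platform
print(tier_for(50_000))  # Wuqiong AI Cloud
print(tier_for(50))      # None
```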

Big Box: Wuqiong AI Cloud

The foundation of Wuqiong AI Cloud is an extensive nationwide computing power network. Built on WQXQ's "One Network, Three Differences" scheduling architecture, it covers key nodes of the national "East Data, West Computation" strategic layout, pooling computing power resources from 26 provinces and cities and 53 core data centers. It integrates heterogeneous computing power pools spanning more than 15 mainstream chip architectures, with a total computing power scale exceeding 25,000P. Thanks to high-performance wide-area interconnection over dedicated lines, users can switch and migrate on demand between computing resources of different types and in different regions, which reflects the design philosophy of "ease of use" and "stability."

Medium Box: Wujie Smart Computing Platform

The "Medium Box" targets large-scale smart computing clusters ranging from 100 to 1,000 cards, enhancing computing power cost-effectiveness through a "full-link solution." In collaboration with the Shanghai Institute of Algorithm Innovation, it stably supported the uninterrupted training of a large model with 10 billion parameters for 600 hours, based on a 3,000-card Muxi domestic GPU cluster, setting a record for domestic computing power training. When serving China Mobile Yunnan, it efficiently utilized 2,000 Huawei Ascend 910B accelerator cards to achieve distributed deployment and large-scale inference of models with 100 billion parameters, injecting core competitiveness into commercial services. These cases underscore its stability and cost-effectiveness advantages in complex scenarios.

Small Box: Wuyin Terminal Intelligence

The "Small Box" focuses on limited computing power terminals ranging from one to ten cards, making terminal computing power both user-friendly and economical. In collaboration with the Shanghai Institute of Innovation and Entrepreneurship, WQXQ co-created what it describes as the world's first edge-side intrinsic model, Wuqiong Tianquan Infini-Megrez2.0. While delivering cloud-level intelligence with 21B parameters, the model keeps memory usage at the level of a 7B model and actual computation at the level of a 3B model, breaking through the resource limitations of terminal devices. It adapts to a wide range of current terminal devices and breaks the "impossible triangle" of energy efficiency, space, and intelligence on terminals, enabling terminal devices to complete complex tasks without relying on the cloud and further expanding the boundaries of computing power services.

04

Conclusion

When the computing power scheduling dashboard of Shanghai ModelSpeed Space, the world's largest AI incubation scenario served by WQXQ, displayed an average daily token invocation volume exceeding 10 billion, this figure not only reflected the growth of an enterprise but also highlighted the arduous transformation of China's computing power ecosystem. WQXQ's "Three Boxes" are attempting to answer an industry-level proposition: under the current condition of temporarily lagging chip performance, how can system-level innovation unleash the latent value of domestic computing power?

Faced with the challenges of "fragmented computing power" and "high costs," WQXQ significantly improves the actual utilization rate of computing power resources and markedly enhances the cost-effectiveness and service quality of unit computing power through technological innovation and practice in underlying operators, communication, scheduling, fault tolerance, and other aspects. It is understood that in heterogeneous situations, there have been instances where the same number of domestic chips combined with international mainstream chips performed worse in training than international mainstream chips alone. However, with the gradual maturity of technology and the concerted efforts of upstream and downstream ecosystems, the utilization rate of computing power from the mix of different chips can now reach up to 97.6%, with users barely noticing the difference in experience due to heterogeneous computing power.

In this era where computing power defines AI competitiveness, China needs not only more smart computing centers but also an ecosystem that allows computing power to flow freely. When developers can invoke domestic computing power as effortlessly as water and electricity, and when chip vendors can iterate products based on real-world scenario demands, China's AI industry can hope to emerge from the dilemma of "having computing power but finding it difficult to use."

WQXQ's practice provides a valuable example for this ecological breakthrough.