Token: The Paramount Resource of the Future

09/05 2025

Throughout the long and storied history of human civilization, every significant leap in productivity has been closely tied to a transformation in the core elements that drive it.

From the coal that powered the steam era, to the electricity that lights our lives today, to the chips and data that are the lifeblood of the information age, the pattern is unmistakable.

These elemental transformations have, often imperceptibly, reshaped the very fabric of social and living structures across the globe.

Today, we stand at the dawn of the artificial intelligence era.

At the same time, a seemingly small yet profound concept has gradually begun to take center stage. It is emerging as the core driving force behind how the world operates and is poised to become the most crucial resource for human society.

That concept is the Token.

01 Token Is Everything

Four years ago, we were the first in the market to put forward the idea that 'Computational Power Is National Strength.' Now it is time for that logic to evolve and expand.

In the era of large AI models, computational power, electricity, data, and humanity's most brilliant intellectual contributions (algorithms) have all converged into a single, all-encompassing concept: the Token.

Today, the meaning of Token has moved beyond its connotations in the blockchain era. No longer a niche belief held by tech enthusiasts, it is set to become the most powerful driving force the global industrial economy has ever seen.

In the most universal terms: As the medium through which artificial intelligence generates everything, Token is energy, it is information, it is service, it is currency, it is productivity... Token is everything.

According to statistics from the National Data Bureau, at the beginning of 2024 China's average daily Token consumption was a mere 100 billion. By the end of June 2025, this figure had skyrocketed to 30 trillion, a 300-fold increase.
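To put these figures in perspective, the short Python sketch below works out the implied average monthly growth rate. It assumes roughly 18 months between the two data points and steady compound growth, which real adoption curves do not strictly follow, so treat the result as a back-of-the-envelope figure.

```python
# Daily Token consumption figures quoted above (National Data Bureau).
start_daily_tokens = 100e9  # beginning of 2024: 100 billion Tokens per day
end_daily_tokens = 30e12    # end of June 2025: 30 trillion Tokens per day

# Assumption: about 18 months separate the two data points.
months = 18

fold_increase = end_daily_tokens / start_daily_tokens
# Constant monthly growth factor g such that g ** months == fold_increase.
monthly_factor = fold_increase ** (1 / months)

print(f"Fold increase: {fold_increase:.0f}x")
print(f"Implied average monthly growth: {(monthly_factor - 1) * 100:.1f}%")
```

Run as-is, it reports a 300-fold increase, which corresponds to roughly 37% compound growth every month.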

Behind these staggering figures lies massive investment in AI chips, data centers, scientific talent, and R&D, which in essence reflects a country's comprehensive national strength.

And this figure is just the starting point; it will continue to climb exponentially.

02 The Cornerstone of AI Society

Simply stating how important Token is may not make it easy for everyone to understand.

So let us first clarify what a Token is from a general technical perspective.

For readers without a technical background, a Token can be thought of as the smallest observable 'atom' of information that an intelligent machine processes.

Put another way, it is the 'smallest unit of language' we use when interacting with AI.

① The Essence of Token: The Smallest Carrier of Information

In the past, the medium for dialogue between humans and machines was code, with programmers acting as intermediaries.

Nowadays, the most commonly used AIs are Large Language Models (LLMs).

When interacting with them, we can simply use everyday human language.

However, whether we type a question or the AI produces an answer, the machine does not process that information internally as the 'characters' or 'words' we are used to.

Within AI programs, there exists a tool called the 'Tokenizer'.

Its task is to break down information into smaller, more standardized units according to specific rules.

And these units are what we refer to as Tokens.

This concept might be a bit difficult to grasp, so let's illustrate with an example.

In English, a Token could be a complete word like 'Apple' or a part of a word like 'ing'.

In Chinese, a Token could be a single character or a phrase.

Punctuation marks and spaces can be Tokens too, and in multimodal models even small patches of an image or short segments of audio can be abstracted into Tokens.
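To make the idea concrete, here is a toy tokenizer in Python. The vocabulary is hypothetical and tiny, and the greedy longest-match rule is a simplification: production tokenizers learn vocabularies of tens of thousands of entries from data (for example with byte-pair encoding) and apply their own splitting rules. It nonetheless shows how an English word can be split into sub-word Tokens while a Chinese phrase stays whole.

```python
# Hypothetical toy vocabulary, for illustration only.
VOCAB = {"apple", "app", "le", "eat", "ing", "s", " ", ",", "!", "吃", "苹果"}


def tokenize(text: str) -> list[str]:
    """Split text into the longest vocabulary pieces, scanning left to right."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest remaining piece first, then shrink it one character at a time.
        for end in range(len(text), i, -1):
            piece = text[i:end]
            if piece in VOCAB:
                tokens.append(piece)
                i = end
                break
        else:
            # No vocabulary entry matches: fall back to a single-character Token.
            tokens.append(text[i])
            i += 1
    return tokens
```

For example, `tokenize("apples eating")` returns `['apple', 's', ' ', 'eat', 'ing']`, and `tokenize("吃苹果")` returns `['吃', '苹果']`.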

If our daily language is like a Lego structure, then Tokens are the small building blocks that make it up.

The essence of AI processing and understanding information lies in the combination, arrangement, and reconstruction of these building blocks.

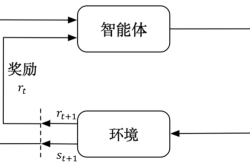

② How Token Works: From Human Language to Machine Understanding

When we enter a command, the tokenizer quickly converts it into a sequence of Tokens (internally, a sequence of numeric IDs) that the model can process.

The neural network inside the model receives this sequence and, drawing on patterns learned from vast amounts of training data and on its carefully designed architecture, works out the meaning of each Token and the relationships between them, and from there infers the intent of the whole sequence.

Subsequently, the AI generates a new sequence of Tokens, which the tokenizer then converts back into language or images that humans can understand.
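The encode, generate, decode cycle described above can be sketched in a few lines of Python. The lookup table that picks the next Token is a hypothetical stand-in for the neural network: a real LLM scores every entry in its vocabulary while conditioning on the whole sequence so far, not just the last Token. Whitespace splitting likewise stands in for a real tokenizer.

```python
# Hypothetical toy vocabulary; each Token's position in the list is its ID.
VOCAB = ["<end>", "the", "cat", "sat", "down", "quietly"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

# Hypothetical stand-in for the model: last Token ID -> next Token ID.
NEXT_TOKEN = {
    TOKEN_TO_ID["the"]: TOKEN_TO_ID["cat"],
    TOKEN_TO_ID["cat"]: TOKEN_TO_ID["sat"],
    TOKEN_TO_ID["sat"]: TOKEN_TO_ID["down"],
    TOKEN_TO_ID["down"]: TOKEN_TO_ID["quietly"],
    TOKEN_TO_ID["quietly"]: TOKEN_TO_ID["<end>"],
}


def encode(text):
    """Tokenizer step: text -> Token IDs (whitespace split as a stand-in)."""
    return [TOKEN_TO_ID[word] for word in text.split()]


def decode(ids):
    """Reverse tokenizer step: Token IDs -> text."""
    return " ".join(VOCAB[i] for i in ids)


def generate(prompt, max_new_tokens=10):
    """Produce new Tokens one at a time until <end> or the length limit."""
    ids = encode(prompt)
    new_ids = []
    for _ in range(max_new_tokens):
        next_id = NEXT_TOKEN.get(ids[-1], TOKEN_TO_ID["<end>"])
        if next_id == TOKEN_TO_ID["<end>"]:
            break
        ids.append(next_id)      # the new Token becomes part of the context
        new_ids.append(next_id)
    return decode(new_ids)
```

Here `generate("the cat")` returns `"sat down quietly"`: the prompt is encoded into IDs, three new IDs are produced one at a time, and generation stops when the table yields the end-of-sequence Token.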

Although this mechanism may seem abstract, it is currently the most widely used and effective approach available.

AI can rely on this mechanism to efficiently process vast amounts of information and generate logical and creative responses.

How well information is split into Tokens, and how efficiently those Tokens are processed, directly affect the depth of the AI's understanding and the accuracy of its responses.

③ The Effectiveness and Energy Consumption of Token

Since the beginning of 2025, China's artificial intelligence sector and large models have been experiencing rapid development.

Before that, most people were still using GPT-3.5 as their go-to AI, and domestic Chinese models lagged noticeably in capability.

However, with the release of DeepSeek's open-source model at the start of the year, the 'slow train' of Chinese AI suddenly became a high-speed rail line.

Various models have sprung up like mushrooms after rain, continuously improving in intelligence.

As of early September 2025, Chinese enterprises are already consuming more than 10 trillion Tokens per day on average through large-model calls.

The productivity boost from such enormous consumption is self-evident, but energy consumption has been rising as well.

Do you still recall the CO2 metric we mentioned in our previous article on the AI arena?

In the past, we only focused on the performance of AI.

But now, energy consumption and utilization efficiency have become issues that cannot be overlooked.

For a given energy budget, processing as many useful Tokens as possible has become one of the primary goals of major AI companies.

This metric covers not only raw computing power but also how efficiently that power is converted into actual information-processing capability.
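One simple way to express this goal is useful Tokens per joule of energy, where energy is average power multiplied by running time. The Python sketch below compares two hypothetical deployments; the figures are invented for illustration, and deciding which Tokens count as 'useful' is itself an assumption each company would have to define.

```python
def tokens_per_joule(useful_tokens, avg_power_watts, seconds):
    """Useful Tokens produced per joule, with energy = average power x time."""
    energy_joules = avg_power_watts * seconds
    return useful_tokens / energy_joules


# Hypothetical figures: two deployments drawing 1 kW for one hour.
baseline = tokens_per_joule(useful_tokens=3_600_000, avg_power_watts=1_000, seconds=3_600)
optimized = tokens_per_joule(useful_tokens=5_400_000, avg_power_watts=1_000, seconds=3_600)
```

With these numbers the baseline delivers 1.0 useful Token per joule and the optimized deployment 1.5, a 50% gain from the same energy.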

The goal of the AI industry has remained constant: to enable AI to carry more value and complete tasks more precisely.

Therefore, optimizing Token efficiency will become a core proposition for the future development of AI technology and industrial competition.

03 The Most Important Resource

With the advent of the AI era, Token is no longer just a technical concept within the AI field.

It is rapidly and deeply integrating with society, the economy, productivity, and other aspects at an unprecedented pace, giving rise to new business models and reshaping traditional industrial landscapes.

Frequent AI users may have noticed that there are two main ways to use a model:

One is to use it directly on the official website, interacting with the AI through the provider's own servers;

The other is to call the model's API and interact with it from one's own applications or servers.

The former has almost no barrier to entry, and most mainstream models can be used for free, although some newer models come with usage limits;

The latter is mostly billed on a metered basis, and the unit of measurement for these AI services is precisely the Token.

As the basic unit of information processing for LLMs, Token directly affects the application efficiency and economic benefits of LLMs in various industries.

From a cost-benefit perspective:

Since most commercial LLM APIs charge based on the number of Tokens, the length of both the prompts (input to the model) and the answers generated by the model directly affects the usage cost.

Consequently, developers and enterprises should keep prompts as concise as possible, tightening their wording to reduce costs.

In large-scale applications, especially those that process vast amounts of text, optimizing Token costs bears directly on the commercial viability of the solution.
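The billing logic described above reduces to simple arithmetic. The Python sketch below assumes separate per-million-Token prices for input and output, a common pricing structure; the prices and Token counts are hypothetical.

```python
def request_cost(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Cost of one API call when input and output Tokens are billed per million."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


# Hypothetical prices in currency units per million Tokens; real prices vary by provider and model.
INPUT_PRICE = 2.0
OUTPUT_PRICE = 8.0

# Same 500-Token answer, with a verbose prompt versus a tightened one.
verbose = request_cost(1_500, 500, INPUT_PRICE, OUTPUT_PRICE)
concise = request_cost(600, 500, INPUT_PRICE, OUTPUT_PRICE)
```

Trimming the prompt from 1,500 to 600 Tokens cuts the cost of each call from 0.007 to 0.0052 units; across a million calls, that is a saving of 1,800 units.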

From an efficiency and speed perspective:

The speed at which AI models process text is directly related to the number of Tokens, and the same applies to multimodal models.

Shorter Token sequences mean faster inference, which is crucial for the real-time translation models offered by various vendors.
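A rough way to see why sequence length drives speed is to split a request into two phases: reading the prompt (often called prefill) and generating output Tokens one at a time (decoding). The Python sketch below uses hypothetical throughput figures; real numbers depend heavily on the model, hardware, and serving software.

```python
def estimated_latency_s(input_tokens, output_tokens, prefill_tps, decode_tps):
    """Rough latency: process the prompt, then emit output Tokens one by one."""
    return input_tokens / prefill_tps + output_tokens / decode_tps


# Hypothetical throughput for a single request on one GPU.
PREFILL_TPS = 5_000  # input Tokens processed per second
DECODE_TPS = 50      # output Tokens generated per second

short_req = estimated_latency_s(200, 50, PREFILL_TPS, DECODE_TPS)
long_req = estimated_latency_s(2_000, 500, PREFILL_TPS, DECODE_TPS)
```

Under these assumptions, a 200-Token prompt with a 50-Token answer takes about 1.04 seconds, while ten times as many Tokens takes about 10.4 seconds, with most of the time spent generating output.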

Conversely, processing more Tokens requires more computational resources (GPU memory or computing power).

Since most enterprises work with limited hardware, the number of Tokens becomes one of the most critical factors limiting a model's processing speed and the number of requests it can serve concurrently.
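For Transformer-based LLMs, much of that memory goes to the key-value (KV) cache, which stores one key vector and one value vector per Token in every layer. The Python sketch below estimates how many requests fit into a fixed memory budget. The model shape (32 layers, 8 key-value heads, head size 128, 16-bit values) and the 20 GiB of free GPU memory are hypothetical, and the estimate ignores model weights and other overheads.

```python
GIB = 1024 ** 3


def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """KV cache memory per Token: one key and one value vector per head, per layer."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


def max_concurrent_requests(free_memory_bytes, tokens_per_request, per_token_bytes):
    """Requests that fit if each keeps tokens_per_request Tokens in the KV cache."""
    return free_memory_bytes // (tokens_per_request * per_token_bytes)


# Hypothetical model shape and memory budget, for illustration only.
per_token = kv_cache_bytes_per_token(num_layers=32, num_kv_heads=8, head_dim=128)
short_ctx = max_concurrent_requests(20 * GIB, 4_096, per_token)
long_ctx = max_concurrent_requests(20 * GIB, 32_768, per_token)
```

Under these assumptions each Token occupies 128 KiB, so 40 requests of 4,096 Tokens fit at once, but only 5 requests of 32,768 Tokens.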

The emergence of multimodal models has made it possible to convert non-text information such as images and audio into Tokens for the model to process, significantly expanding the scope of AI applications.
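Many vision models, such as those built on the Vision Transformer design, turn an image into Tokens by cutting it into fixed-size square patches. The Python sketch below assumes 16-pixel patches, a common choice but not a universal one; actual Token counts vary by model and preprocessing.

```python
def image_patch_tokens(height_px, width_px, patch_px=16):
    """Number of Tokens when an image is cut into non-overlapping square patches."""
    # Assumes the image was already resized so both sides are multiples of patch_px.
    return (height_px // patch_px) * (width_px // patch_px)
```

A 224 x 224 image yields `image_patch_tokens(224, 224) == 196` Tokens; doubling each side to 448 pixels quadruples that to 784, which is why high-resolution images cost noticeably more to process.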

From an information density and quality perspective:

The context window, i.e., the Token limit, determines how much information the model can 'remember'.

When dealing with complex tasks, long conversations, or even information processing across multiple files, how to effectively utilize the limited context window remains an issue that requires continuous exploration of new solutions.
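One simple way to live within a limited context window is to drop the oldest parts of a conversation first. The Python sketch below keeps the most recent messages that fit a Token budget. The whitespace-based counter is a hypothetical stand-in for a real tokenizer, and production systems often combine truncation with summarization or retrieval instead.

```python
def count_tokens(text):
    """Hypothetical stand-in Token counter: one Token per whitespace-separated word."""
    return len(text.split())


def fit_to_context(messages, max_tokens):
    """Keep the most recent messages whose combined Token count fits the window."""
    kept = []
    used = 0
    for message in reversed(messages):  # newest first
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # older messages no longer fit
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["hello there", "tell me about tokens", "tokens are small units", "how are they billed"]
window = fit_to_context(history, max_tokens=8)
```

With an 8-Token budget, only the last two messages (4 Tokens each) survive; the earlier turns are dropped.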

Furthermore, as we have emphasized several times in previous articles, prompt engineering is simply the study of how to organize information efficiently and clearly so that the model produces high-quality output within a limited Token budget.

This directly relates to productivity improvements in various application areas of LLMs, such as code generation, data analysis, and email writing.

04 The Future Is Here

In the AI era, Token plays an increasingly central role, and humanity's understanding of it is constantly deepening.

Some may question whether it is appropriate to define Token as a 'resource'.

After all, its essence is merely the smallest unit of information.

And the truly scarce resources seem to still lie in factors such as computational power and data.

However, as the 'building blocks' through which AI understands and generates content, Token directly determines the utilization efficiency of computational power, the cost of information transmission, and the performance boundaries of models.

It serves as a bridge connecting computational power with value and as a special 'virtual resource' in the information economy era.

Optimizing and efficiently utilizing Token can maximize the output of limited computational power, lower the barriers to information processing, and ultimately impact the entire AI industry.

In the future, the importance of Token will only grow.

It is not just an object of technical optimization but also an issue that must be addressed jointly at the social, economic, moral, and legal levels.

Speaking of the future: in fact, it is already upon us.