Trend | From Marginal Toy to Production Workhorse: Small Models Herald the 'Actuarial Era'

09/05 2025

Preface: Large language models currently take center stage thanks to their strong general capabilities. However, their high costs, heavy energy consumption, and dependence on massive computing power considerably restrict their practical deployment in many specific settings. NVIDIA's recent release of small language models is helping move the AI industry from 'large models reign supreme' toward 'precise empowerment through small models.'

Author | Fang Wensan

Image Source | Network

Technological Breakthroughs in NVIDIA's Small Language Models

NVIDIA's newly launched Nemotron-Nano-9B-v2 has a distinctive hybrid architecture. Built on the Nemotron-H series, it combines Transformer attention layers with Mamba's selective state space model (SSM) layers.

The selective SSM processes long sequences with a cost that grows only linearly with sequence length, whereas the cost of standard self-attention grows quadratically. This substantially reduces memory and compute overhead, letting the model handle large volumes of information with fewer resources than traditional architectures.
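To make the linear-complexity point concrete, here is a minimal single-channel sketch of a selective state space recurrence in plain Python. It is a toy under stated assumptions: the state is a scalar and the discretization is simplified. In Mamba, the step size Δ and the B and C projections are all computed from the input, and the state is a vector per channel; here only Δ depends on the input, and the parameter names (`a`, `b`, `c`, `w_delta`, `bias`) are illustrative. The key property is that each token costs one constant-size state update, so n tokens take n steps, compared with the roughly n² query-key pairs a full attention layer compares.

```python
import math
from typing import List


def softplus(z: float) -> float:
    # Smooth, positive function used to produce the step size; adequate for small inputs.
    return math.log1p(math.exp(z))


def selective_scan(xs: List[float], a: float = -1.0, b: float = 1.0, c: float = 1.0,
                   w_delta: float = 1.0, bias: float = 0.0) -> List[float]:
    """Toy selective SSM: one pass over the sequence, O(n) time, O(1) state."""
    h = 0.0  # hidden state carried from token to token; its size never grows
    ys: List[float] = []
    for x in xs:
        delta = softplus(w_delta * x + bias)  # input-dependent step size: the "selective" part
        a_bar = math.exp(delta * a)           # a < 0, so 0 < a_bar < 1: how much past state to keep
        b_bar = delta * b                     # simplified discretization of the input weight
        h = a_bar * h + b_bar * x             # constant-cost state update per token
        ys.append(c * h)                      # read out the current state
    return ys


print(selective_scan([0.5, -1.0, 2.0, 0.0]))
```

Because `h` has a fixed size, memory does not grow with sequence length, unlike the key-value cache of an attention layer.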

In terms of performance, NVIDIA reports that the model delivers up to six times the throughput of Transformer models of a similar scale. Across numerous benchmarks, its accuracy matches or even exceeds that of well-known open-source models such as Qwen3-8B and Gemma 3 12B.

Furthermore, Nemotron-Nano-9B-v2 has a built-in 'reasoning' mode, a highly practical feature: before producing its final answer, the model can work through an internal reasoning trace, in effect checking itself. Developers can switch this mode on or off with simple control tokens (/think or /no_think), giving them precise control over the generated content.
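As a minimal sketch of how the switch might be set from application code, assume the control token is placed in the system turn of a role/content message list. This reflects our reading of the model card; check it against the chat template of the release you use. The helper name `build_messages` is hypothetical.

```python
from typing import Dict, List


def build_messages(user_prompt: str, reasoning: bool) -> List[Dict[str, str]]:
    """Build chat messages with the reasoning switch in the system turn (assumed convention)."""
    control = "/think" if reasoning else "/no_think"  # control tokens named in the text
    return [
        {"role": "system", "content": control},
        {"role": "user", "content": user_prompt},
    ]


print(build_messages("Summarize this maintenance log.", reasoning=False))
```

The resulting list uses the role/content format that, for example, the `apply_chat_template` method of Hugging Face Transformers tokenizers accepts.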

Additionally, the model supports a runtime 'thought budget.' By capping the number of tokens spent on internal reasoning, developers can trade accuracy against latency to suit each application.
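One way to picture the thought budget is as a two-phase decoding loop. The model reasons until it closes its reasoning block or reaches the token cap. If it reaches the cap, the loop closes the block for it, and the model then generates the visible answer. The sketch below assumes a hypothetical `next_token` callable that stands in for the model, plus assumed `</think>` and `<eos>` markers. It shows only the control logic and is not NVIDIA's implementation.

```python
from typing import Callable, Dict, List

END_THINK = "</think>"  # assumed end-of-reasoning marker; check the model's chat template
EOS = "<eos>"           # assumed end-of-sequence marker


def generate_with_budget(next_token: Callable[[List[str]], str], prompt: List[str],
                         thought_budget: int, max_answer_tokens: int) -> Dict[str, List[str]]:
    context = list(prompt)
    reasoning: List[str] = []
    # Phase 1: reasoning, capped at thought_budget tokens.
    while len(reasoning) < thought_budget:
        tok = next_token(context)
        context.append(tok)
        if tok == END_THINK:
            break  # the model finished reasoning on its own
        reasoning.append(tok)
    else:
        context.append(END_THINK)  # budget exhausted: close the reasoning block for the model
    # Phase 2: the visible answer.
    answer: List[str] = []
    while len(answer) < max_answer_tokens:
        tok = next_token(context)
        context.append(tok)
        if tok == EOS:
            break
        answer.append(tok)
    return {"reasoning": reasoning, "answer": answer}


def toy_model(context: List[str]) -> str:
    """Hypothetical stand-in: reasons for 5 steps, then answers '42'. Assumes a 1-token prompt."""
    if END_THINK in context:
        answered = len(context) - context.index(END_THINK) - 1
        return "42" if answered == 0 else EOS
    steps = len(context) - 1
    return f"step{steps}" if steps < 5 else END_THINK


print(generate_with_budget(toy_model, ["Q"], thought_budget=3, max_answer_tokens=4))
# {'reasoning': ['step0', 'step1', 'step2'], 'answer': ['42']}
```

A smaller `thought_budget` cuts latency at the cost of shorter reasoning. A larger one lets the model finish its reasoning naturally.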

These 'fast and accurate,' 'customizable and controllable' features allow Nemotron-Nano-9B-v2 to run on a single mid-range data-center GPU such as the NVIDIA A10G, and also in low-power environments such as smart terminals and industrial equipment. The model challenges the notion that small models 'can only handle simple tasks' and lays a foundation for their use in complex production scenarios.

Reasons for the Rising Significance of Small Models

Early small models, constrained by architectural designs such as traditional RNNs or lightweight Transformers, frequently underperformed when dealing with complex tasks.

However, with ongoing technological advances, a number of innovative architectures have emerged in recent years. State space models (SSMs), as used in Nemotron-Nano-9B-v2, and Linear Input-Varying (LIV) systems significantly improve small models' ability to process long texts and multimodal data. They do this by streamlining information-processing pathways, keeping computation linear in sequence length, and generating weights dynamically from the input.

For instance, the LFM2-VL vision-language model, released by MIT spin-off Liquid AI, uses LIV operators to generate weights on the fly from each input, which cuts redundant computation. Liquid AI reports GPU inference more than twice as fast as comparable models while maintaining high accuracy.
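The idea of generating weights from each input can be illustrated with a toy 'input-varying' linear layer. Its effective weight matrix is recomputed for every input by scaling a base matrix with gates derived from that same input. This is a conceptual sketch under our own assumptions: the `base` and `gate` matrices and the sigmoid gating are illustrative, and it is not Liquid AI's LIV operator.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    # Plain matrix-vector product.
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def input_varying_linear(x: Vector, base: Matrix, gate: Matrix) -> Vector:
    """y = W(x) @ x, where W(x) is `base` with each row scaled by sigmoid(gate @ x)."""
    g = [1.0 / (1.0 + math.exp(-z)) for z in matvec(gate, x)]  # one gate per output row, from x
    w_x = [[gi * w for w in row] for gi, row in zip(g, base)]   # weights generated for this input
    return matvec(w_x, x)


identity = [[1.0, 0.0], [0.0, 1.0]]
print(input_varying_linear([1.0, -1.0], identity, [[5.0, 0.0], [0.0, 5.0]]))
```

A conventional linear layer would apply the same `base` matrix to every input. Here each input gets its own effective weights, which is the property the paragraph describes.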

Although large models offer broad generality, in practical production, enterprises encounter more diverse and personalized needs. They require models with 'precise capabilities' tailored to specific scenarios, such as inventory analysis, customer service, and market forecasting.

Through targeted training, small models can deliver higher task relevance with lower resource consumption, such as fine-tuning diagnostic models on medical data or optimizing risk predictions in financial texts.

Alibaba's open-source Qwen3 series is a case in point: its smaller versions perform strongly across model sizes while keeping resource usage low, showing that small models can achieve impressive results on modest hardware. This 'small yet specialized' character makes small models an attractive choice for enterprises seeking to cut costs, improve efficiency, and meet precise needs across different scenarios.

Moreover, training and running large models requires expensive computing resources, a heavy burden for small and medium-sized enterprises. By contrast, NVIDIA's small model can run on a single A10G GPU, a modest hardware requirement.

In addition, the models are released under the NVIDIA Open Model License, which makes them freely available and permits commercial use without royalty payments.

From 'Supplementary Role' to 'Mainstay'

Currently, enterprises' demand for AI has shifted significantly from the initial 'exploratory' phase to the 'large-scale deployment' phase.

Although large models offer general capabilities, their 'overweight' characteristics, such as high response latency and excessive costs, often make direct application challenging in practical business.

In contrast, small models' low latency, low energy consumption, and narrow task focus make them the preferred solution for routine enterprise tasks such as customer service dialogues, document analysis, and equipment monitoring.

In industrial settings, small models deployed on local devices can analyze sensor data in real time, issue timely fault warnings, and help keep production running normally.

In the consumer electronics sector, small models embedded in smartwatches can enable voice assistants, health monitoring, and other functions without relying on cloud connections, providing users with convenient services.

Future enterprise AI architectures are likely to adopt a hierarchical model of 'large models as the foundation and small models as the vanguard.' The share of small models directly involved in production processes will keep rising, making them an indispensable mainstay of enterprise AI deployment.
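One common way to realize a 'large models as the foundation, small models as the vanguard' setup is a cascade. A small model handles each request first, and only low-confidence cases are escalated to a large model. The sketch below is minimal and makes two assumptions: each model is a hypothetical callable that returns an answer plus a confidence score in [0, 1], and the default threshold of 0.8 is arbitrary.

```python
from typing import Callable, Tuple

# Hypothetical model interface: query -> (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


def route(query: str, small: Model, large: Model, threshold: float = 0.8) -> Tuple[str, str]:
    """Try the small model first; escalate to the large model only when it is unsure."""
    answer, confidence = small(query)
    if confidence >= threshold:
        return answer, "small"
    answer, _ = large(query)  # fall back to the large model for hard cases
    return answer, "large"


def small_stub(query: str) -> Tuple[str, float]:
    return "restart the pump", 0.92  # hypothetical confident answer


def large_stub(query: str) -> Tuple[str, float]:
    return "escalated answer", 0.99  # hypothetical fallback answer


print(route("Why did line 3 stop?", small_stub, large_stub))  # ('restart the pump', 'small')
```

The threshold governs the cost/quality trade-off: raising it sends more traffic to the large model.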

Small Models Herald the 'Actuarial Era'

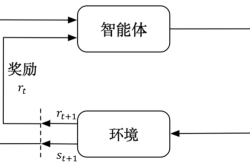

The essence of the 'Actuarial Era' lies in allocating AI capability precisely through refined design of model architectures, reasoning strategies, and resource usage.

Nemotron-Nano-9B-v2's 'reasoning function switch' and 'thought budget management' are typical manifestations of this concept.

Developers no longer blindly pursue 'bigger is better' but dynamically adjust the model's computational depth and output precision based on specific task requirements, minimizing resource consumption while ensuring result reliability.

This 'on-demand actuarial' model is not only applicable to enterprise-level applications, such as balancing prediction accuracy and computational costs in supply chain optimization, but will also drive the popularization of AI in resource-constrained scenarios, like IoT devices and terminals in remote areas.

AI systems will no longer be evaluated mainly by parameter count. Instead, evaluation will shift toward finer-grained measures such as 'task completion efficiency per unit of computing power' and 'output value per dollar invested,' enabling more precise assessment and optimization of AI capabilities.
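Once their inputs are defined, both metrics reduce to simple ratios. The sketch below assumes one possible set of definitions: successful tasks per GPU-hour, and attributed business value per dollar of total cost. The field names and example figures are hypothetical and serve only to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    name: str
    tasks_completed: int  # tasks finished successfully in the measurement window
    gpu_hours: float      # compute consumed in that window
    cost_usd: float       # total spend (hardware, energy, operations) in that window
    value_usd: float      # business value attributed to the completed tasks

    def tasks_per_gpu_hour(self) -> float:
        # "Task completion efficiency per unit of computing power"
        return self.tasks_completed / self.gpu_hours

    def value_per_dollar(self) -> float:
        # "Output value per dollar invested"
        return self.value_usd / self.cost_usd


edge = Deployment("small model", tasks_completed=1200, gpu_hours=10.0, cost_usd=30.0, value_usd=600.0)
print(edge.tasks_per_gpu_hour(), edge.value_per_dollar())  # 120.0 20.0
```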

Conclusion:

Meanwhile, the intensive release of small models covering various fields like vision, language, and multimodal interaction has further enriched their application scenarios. This will create a positive cycle of 'technological breakthroughs - scenario deployment - feedback optimization,' driving the continuous prosperity of the small model ecosystem.

In the future, the small model ecosystem will be as diverse as mobile app stores. Developers can swiftly build personalized AI solutions by 'picking and choosing as needed,' accelerating the innovation and application of AI technologies.

Content sourced from:

Shan Zi: AI's 'Actuarial Era' Officially Begins, NVIDIA Fires the First Shot;

Directly Facing AI: NVIDIA's Latest Research: Small Models Are the Future of Intelligent Agents;

Electronic Enthusiast: NVIDIA Fires the First Shot in the 'Small Model' Race;

Suan Ni Community: All-Chinese Team, 47 Times Faster Than Qwen3! NVIDIA's Jet-Nemotron Small Model Makes a Splash