When ADAS Falters: Redefining Intelligent Urban Transportation for Automakers

08/21 2025

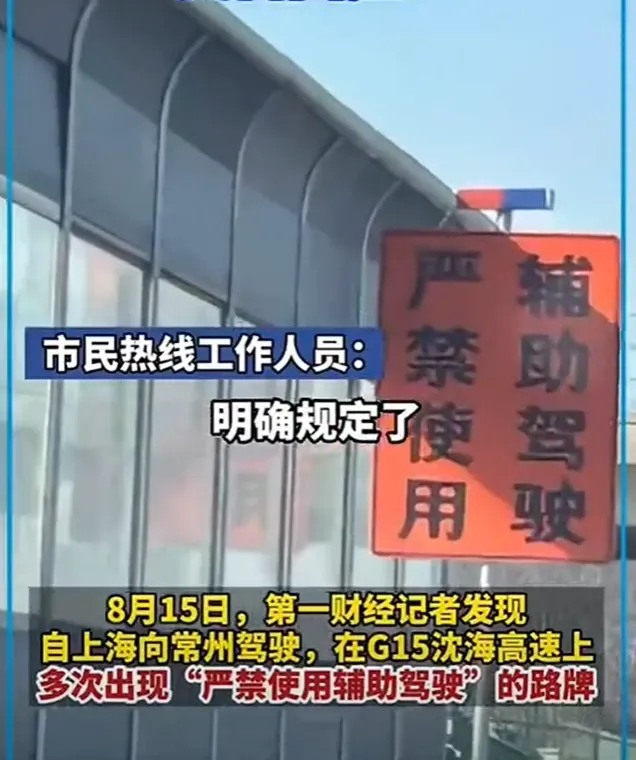

On the LED screens along the Shanghai-Changzhou section of the G15 Shenyang-Haikou Expressway, the words "Strictly Prohibit the Use of ADAS" flash in red. Although the notice carries no penalties, it deals a heavy blow to the smart car industry. Some highway sections now explicitly ban L2/L2+ systems, and new regulations from the State Administration for Market Regulation (SAMR) and the Ministry of Industry and Information Technology (MIIT) prohibit vague promotional terms such as "L2.5" and "hands-off driving." Under this dual pressure of policy restrictions and technological limitations, the ADAS features that automakers once heralded as core selling points are losing their appeal. When the charm of ADAS fades, where do automakers find a new path?

ADAS "Decline": The Bursting of Technological Hype

The "failure" of ADAS is no accident; it is the inevitable outcome of a clash between technological constraints and commercial overstatement. A fatal rear-end collision last year serves as a stark reminder: when the lead vehicle suffered a tire blowout and failed to evacuate promptly, the following vehicle's ADAS did not detect the stationary vehicle, and two people died. Automakers have long stated in their "ADAS Reading Guides" that the system cannot recognize stationary vehicles, slow-moving engineering vehicles, irregular trailers, and similar objects. These limitations mark precisely where the technology's current boundaries lie.

The three primary reasons behind the highway bans point even more directly to the core contradiction. First, on road segments under construction or renovation, irregularly placed cones and temporary speed limit signs often exceed what the fused perception of millimeter-wave radars and cameras can handle. Second, during holiday traffic surges, misjudgments and emergency braking by ACC/AEB systems may cause chain rear-end collisions. Third, although current regulations require drivers to remain responsible throughout the journey, the line between "assistance" and "autonomy" is blurred. In one collision with an engineering vehicle, the system failed to recognize the vehicle in the overtaking lane, and by the time the driver noticed, it was too late to avoid the crash. The automaker was ultimately exempted from liability on the grounds of "system recognition limitations." Incidents like this erode consumer trust.

The new regulations have stripped away the veneer of marketing rhetoric. SAMR explicitly prohibits promoting ADAS as "driverless" and requires automakers to prominently label functional limitations in apps and user manuals. Promotional videos that once drew attention with "high-speed racing" and "hands-off stunts" are now treated as typical violations. Data shows that the penetration rate of domestic L2 ADAS has surpassed 50%, but packaging 60-point technology as a 90-point safety assurance overdraws trust and is unsustainable.

Multimodal Interaction: Redefining the Vehicle-Physical World Dialogue

With ADAS promotional gimmicks no longer working, the real technological competition shifts to how deeply a vehicle understands the physical world. Breakthroughs in multimodal models are turning cars from "single-sensor executors" into systems that understand their environment, which is the key to overcoming current technological limitations.

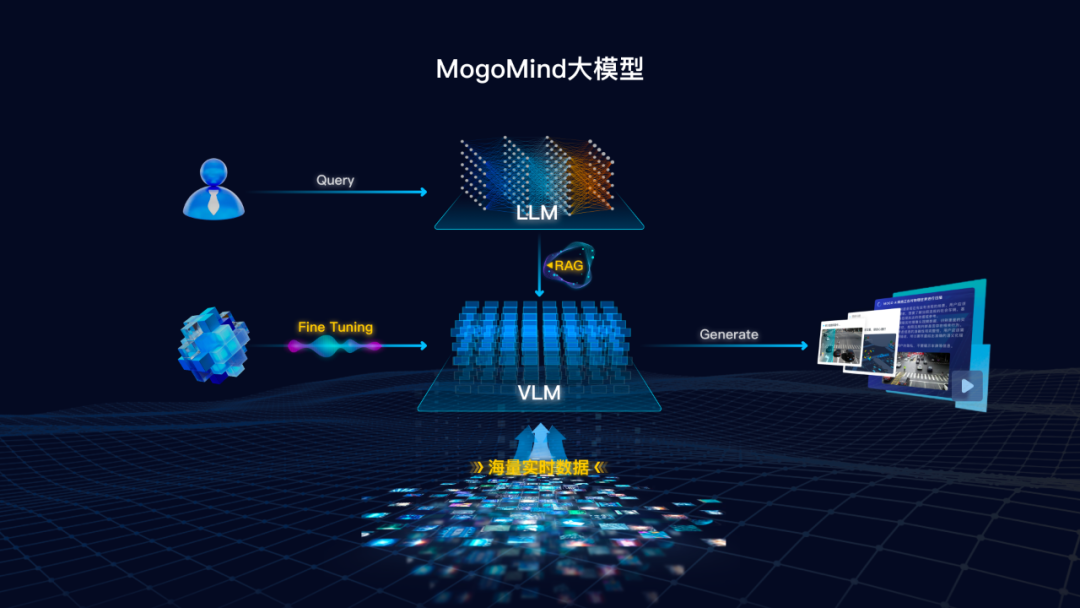

Large AI models for the physical world bridge this gap through a three-layer architecture: "multimodal cognition - scenario reasoning - decision-making evolution." An 8-megapixel camera captures subtle changes in road conditions, a 4D millimeter-wave radar penetrates rain and fog to recognize cone formations, and a lidar accurately maps the irregular contours of engineering vehicles. The data are no longer treated as isolated signals; they are fused into decision bases such as "reduce speed by 30% on construction sections."
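To make the flow from perception to decision concrete, here is a minimal Python sketch of the three layers. It is an illustration only, not any automaker's implementation: the `Perception` fields, the `min_cones` threshold, and the "two agreeing cues" rule are all assumptions made for the example. Only the 30% speed reduction on construction sections comes from the text, and the "evolution" (learning) part of the third layer is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Perception:
    """Layer 1 (multimodal cognition): one fused snapshot. All fields are hypothetical."""
    camera_temp_speed_sign: bool   # camera reads a temporary speed limit sign
    radar_cone_count: int          # cones reported by the 4D millimeter-wave radar
    lidar_irregular_vehicle: bool  # lidar contour looks like an engineering vehicle


def infer_scenario(p: Perception, min_cones: int = 3) -> str:
    """Layer 2 (scenario reasoning): label a construction section only when
    at least two independent cues agree (an assumed rule for this sketch)."""
    cues = [
        p.camera_temp_speed_sign,
        p.radar_cone_count >= min_cones,
        p.lidar_irregular_vehicle,
    ]
    return "construction" if sum(cues) >= 2 else "normal"


def target_speed_kmh(current_kmh: float, scenario: str) -> float:
    """Layer 3 (decision): reduce speed by 30% on construction sections."""
    return current_kmh * 0.7 if scenario == "construction" else current_kmh


snapshot = Perception(camera_temp_speed_sign=True, radar_cone_count=5,
                      lidar_irregular_vehicle=False)
scenario = infer_scenario(snapshot)
print(scenario, target_speed_kmh(100.0, scenario))
```

Requiring agreement between sensors is one simple way to express that the signals are no longer isolated: a single cue on its own does not change the vehicle's behavior.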

More importantly, the system begins to comprehend the "state of the human." The driver monitoring system mandated by the new regulations is no longer a simple "hands-off warning"; it builds a real-time driver state model from multidimensional data such as steering wheel torque sensing, eye tracking, and heart rate monitoring. When it detects that the driver's eyes have been closed for more than 1.5 seconds, the system first warns through seat vibration and, if there is no response, turns on the hazard lights and decelerates slowly to a stop. This "progressive intervention" not only complies with regulatory requirements but also mitigates the safety risks of abrupt braking.
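The "progressive intervention" described above can be sketched as a small state machine. This is a simplified illustration under stated assumptions: the 1.5-second eye-closure limit comes from the text, while the `RESPONSE_WINDOW_S` value, the class and enum names, and the use of eye state as the only input are hypothetical. A real driver monitoring system would combine several signals.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    NONE = "none"
    SEAT_VIBRATION = "seat_vibration"
    HAZARDS_AND_SLOW_STOP = "hazards_and_slow_stop"


EYES_CLOSED_LIMIT_S = 1.5  # from the article
RESPONSE_WINDOW_S = 2.0    # assumed: time the driver has to react to the vibration


@dataclass
class DriverMonitor:
    eyes_closed_s: float = 0.0        # continuous eye-closure time
    warned_s: Optional[float] = None  # time since the warning began, None if no warning

    def step(self, eyes_closed: bool, dt: float) -> Action:
        """Advance the monitor by dt seconds and return the action to take."""
        if not eyes_closed:
            # Eyes open counts as a response: reset and stand down.
            self.eyes_closed_s = 0.0
            self.warned_s = None
            return Action.NONE

        self.eyes_closed_s += dt
        if self.warned_s is not None:
            # Warning already active: escalate once the response window expires.
            self.warned_s += dt
            if self.warned_s >= RESPONSE_WINDOW_S:
                return Action.HAZARDS_AND_SLOW_STOP
            return Action.SEAT_VIBRATION

        if self.eyes_closed_s > EYES_CLOSED_LIMIT_S:
            self.warned_s = 0.0
            return Action.SEAT_VIBRATION
        return Action.NONE


monitor = DriverMonitor()
for _ in range(8):  # eight 0.5 s samples with eyes closed
    print(monitor.step(eyes_closed=True, dt=0.5).value)
```

The point of the two-stage design is that the vehicle never jumps straight to braking: the mild warning always comes first, and the stop is a slow deceleration rather than an emergency maneuver.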

The "scenario prediction" capability of large AI models compensates for the algorithm's lack of experience. By converting high-risk cases, such as G15 highway construction sections and vehicle accident scenarios, into training data, these models can simulate how thousands of dangerous situations evolve. In a virtual test of a "sudden tire blowout of the preceding vehicle," the system can complete the combined operation of "warning the following vehicle + turning on hazard lights + smoothly decelerating" within milliseconds, more than twice as fast as the average human reaction. This "data-fed" prediction capability addresses the core issue of "insufficient response to special scenarios."

Data Quality and Large AI Models: Rebalancing Safety and Efficiency

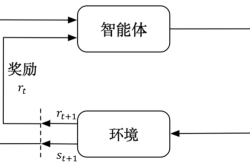

The new regulations emphasize "feeding back warning road segment scenario data to algorithm training," directly addressing an industry pain point: the pace at which intelligent driving evolves is determined not by the number of sensors but by data quality and model capabilities. The value of large AI models for the physical world lies in making every drive a "learning sample" for the system.

High-quality scenario data overcomes "recognition dead zones." Take the ADAS problem of "misjudging stationary vehicles." When an automaker builds a dedicated database of more than 100,000 cases across 23 environmental conditions, such as sunny days, heavy rain, and backlighting, the model can compare features from accident cases, such as the "posture characteristics of blown-out tires" and the "reflective strip distribution of engineering vehicles," and gradually learn the rules for recognizing "unconventional stationary objects."

Intelligent decision-making in multi-vehicle scenarios shows how the models are evolving. On highway sections with heavy traffic, traditional ACC systems often cause chain reactions by "following too closely" and "emergency braking to avoid danger." By learning from millions of real traffic flow interactions, large AI models for the physical world can predict the trajectories of surrounding vehicles and calculate an optimal strategy of "maintaining vehicle spacing measured in seconds of headway + reserving sufficient acceleration space."
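Spacing measured in seconds is commonly expressed as a constant time-gap policy, in which the desired distance to the lead vehicle grows linearly with speed. The sketch below illustrates that general idea only; it is not the learned strategy the article describes, and the default `time_gap_s` and `standstill_m` values are assumptions chosen for the example.

```python
def desired_gap_m(speed_mps: float, time_gap_s: float = 2.0,
                  standstill_m: float = 5.0) -> float:
    """Constant time-gap policy: a fixed standstill distance plus the
    distance covered in time_gap_s seconds at the current speed."""
    return standstill_m + time_gap_s * speed_mps


def gap_error_m(actual_gap_m: float, speed_mps: float,
                time_gap_s: float = 2.0, standstill_m: float = 5.0) -> float:
    """Positive: more room than required. Negative: following too closely."""
    return actual_gap_m - desired_gap_m(speed_mps, time_gap_s, standstill_m)


print(desired_gap_m(30.0))      # 65.0 m desired at 30 m/s
print(gap_error_m(40.0, 30.0))  # -25.0, i.e. 25 m too close
```

A controller that reduces speed gently as the gap error turns negative, instead of waiting until emergency braking is needed, is one way to avoid the chain reactions described above.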

This efficiency gain in turn feeds back into urban transportation. When vehicles equipped with intelligent models form a "collaborative perception network," traffic management departments can identify road network bottlenecks in real time.

From "Selling Features" to "Selling Ecosystems": Automakers' Value Reconstruction

The "failure" of ADAS is essentially a process of "disenchantment" for the industry. As technology returns to rationality, the dimension on which automakers compete shifts from "who has cooler features" to "who has a safer ecosystem." The virtuous cycle of "technological practicality - demand growth - market expansion" promoted by the new regulations points in precisely this direction.

Transparent safety promises replace exaggerated promotions. Under the new regulations, automakers set up an "Intelligent Driving Safety Center" in their apps that displays, in real time, the "system's capability score on the current road segment" and the "functional optimization items in the last 30 days." This "honest communication" enhances user trust and increases the average daily usage time of ADAS. When consumers understand "what the system can do" and "what it cannot do," rational usage habits form naturally.

Standardized OTA upgrades keep technological evolution safe. The new regulations require software updates to be filed for review, forcing automakers to establish end-to-end control over "testing - verification - pushing."

Ultimately, real competitiveness lies in building a harmonious relationship between "human - vehicle - environment." When a vehicle can understand the complex road conditions of construction sections, perceive changes in the driver's state, and contribute to the overall efficiency of urban transportation, it ceases to be merely a means of transportation and becomes a core node in the intelligent travel ecosystem. This may well be the positive significance of the "failure" of ADAS.

As large AI models for the physical world turn every road, vehicle, and pedestrian into a computable node, the logic of selling cars evolves into selling "urban efficiency infrastructure." By then, cars will not just be cars but terminals of urban AI, and automakers will not just be automakers but operators of transportation efficiency. The future of smart cars lies not in the gimmick of "hands-off driving" but in the promise of every safe arrival.