From LiDAR Controversy to Embodied Intelligence Breakthrough: Navigating Technological Shifts and Paradigm Revolutions in AI

07/03 2025

At an industry summit, a professor from Shanghai Jiao Tong University sparked debate by claiming that the "actual usage rate of LiDAR is less than 15%." The argument over autonomous driving sensor routes exposes a deeper contradiction within the AI industry: the gap between technology selection and real-world deployment. From the energy-paradigm tug-of-war between LiDAR and pure vision solutions, to the data depletion crisis holding AI back in high-end fields, to the pioneering embodied intelligence research led by Lu Zongqing's team at Peking University, the technology landscape stands at a pivotal juncture between data-driven development and a cognitive revolution.

LiDAR Controversy: Navigating the Safety-Economy Trade-off in Energy Paradigms

The choice of autonomous driving sensors has become a debate over "active detection" versus "passive detection," splitting the industry into two camps. The professor's viewpoint highlights a core issue: the fundamental difference between LiDAR and pure vision solutions goes beyond a comparison of accuracy; it is a divergence in how each uses energy. As an active detection tool, LiDAR builds an environmental model by emitting laser beams and receiving their reflections, which in theory yields precise 3D data but makes energy consumption and cost harder to control. Cameras, by contrast, passively capture ambient light.

Data indicates that Huawei's solid-state LiDAR now costs less than 2,000 yuan, a minimal share of overall vehicle cost. Yet the controversy persists. The claim that "excessive detection range lacks practical significance" points to a mismatch between what autonomous driving needs and what the technology supplies. In urban settings, detection beyond 200 meters rarely translates into safety benefits and often burdens computing systems with excess data. This "overperformance" shows where pure vision solutions hold an advantage in specific scenarios: through algorithm optimization that leverages Hong Kong University's open-source neural network architecture, they balance cost control with basic coverage.
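
The 200-meter point can be sanity-checked with the standard stopping-distance formula: reaction distance (speed × reaction time) plus braking distance (v²/2a). The sketch below assumes a 1.5 s reaction time and 7 m/s² of deceleration on dry pavement; both values are illustrative assumptions, not figures from the summit discussion.

```python
# ASSUMPTIONS (illustrative, not from the article): 1.5 s reaction time,
# 7.0 m/s^2 constant deceleration on dry pavement.

def stopping_distance_m(speed_kmh: float, reaction_s: float = 1.5,
                        decel_mps2: float = 7.0) -> float:
    """Distance in metres from hazard detection to standstill."""
    v = speed_kmh / 3.6                    # km/h -> m/s
    reaction = v * reaction_s              # travelled before braking begins
    braking = v * v / (2.0 * decel_mps2)   # v^2 / (2a) at constant deceleration
    return reaction + braking


if __name__ == "__main__":
    for kmh in (40, 60, 80, 120):
        print(f"{kmh:>4} km/h -> {stopping_distance_m(kmh):6.1f} m")
```

Under these assumptions, a car at a typical urban speed of 60 km/h needs roughly 45 m to stop, and even at 120 km/h the figure stays well under 200 m, which is consistent with the argument that range far beyond that adds little in city driving.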

The safety-cost dilemma is not a binary choice. The principle that "data accuracy matters more than computing power" captures the logic of autonomous driving safety systems. In open-loop control, small data drifts can trigger chain reactions, especially in emergencies such as a pedestrian suddenly crossing or oncoming traffic. Automotive test data shows that at complex intersections, LiDAR's false alarm rate is 42% lower than that of pure vision solutions, a gap that can be decisive in low-probability, high-risk scenarios. As the industry cautions, "Spending an extra 2,000 yuan on safety is more cost-effective than remedying an accident afterward." In automotive safety, even a 5% risk probability can be fatal.
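
Why small drifts matter in open-loop operation can be shown with a toy one-dimensional example. The sketch assumes a constant 2% bias in a speed reading; with no feedback the position error grows linearly with time, while feeding back an absolute position fix (standing in, hypothetically, for LiDAR-based localization) keeps it bounded. The function name, bias, and gain are illustrative assumptions.

```python
# Toy illustration: a small constant sensor bias accumulates without bound in
# an open-loop estimate but stays bounded once an absolute fix is fed back.
# ASSUMPTIONS: 1-D motion, constant true speed, noise-free absolute fix.

def simulate(steps: int = 100, dt: float = 0.1, true_speed: float = 10.0,
             bias: float = 0.02, gain: float = 0.0) -> float:
    """Return the final absolute position error in metres.

    gain == 0.0 -> pure open-loop dead reckoning (no feedback).
    gain  > 0.0 -> after each step, pull the estimate toward an absolute
                   position fix (assumed noise-free here).
    """
    true_pos = 0.0
    est_pos = 0.0
    measured_speed = true_speed * (1.0 + bias)   # biased speed reading
    for _ in range(steps):
        true_pos += true_speed * dt              # ground-truth motion
        est_pos += measured_speed * dt           # integrate the biased reading
        est_pos += gain * (true_pos - est_pos)   # feedback correction
    return abs(est_pos - true_pos)


if __name__ == "__main__":
    print(f"open loop, 10 s:  {simulate():.3f} m")
    print(f"open loop, 100 s: {simulate(steps=1000):.3f} m")
    print(f"closed loop:      {simulate(gain=0.5):.3f} m")
```

With the defaults, the open-loop error reaches about 2 m after 10 s of travel and keeps growing, whereas a feedback gain of 0.5 holds it near 0.02 m.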

AI Training: Bridging Data Depletion and Specialized Field Breakthroughs

Amid the autonomous driving sensor debate, AI faces a more fundamental data crisis. Today's large models rely heavily on internet data and are hitting a "data depletion" ceiling. Internet data, though vast, mainly covers everyday life, leaving gaps in high-end fields such as healthcare and industry, and this imbalance holds back AI breakthroughs in high-tech sectors.

The medical field illustrates the dilemma. Statistics from tertiary hospitals show that only 8% of core department data is publicly available; the other 92% is restricted by privacy rules, isolated hospital systems, and similar barriers. As a result, AI applications in disease diagnosis and surgical planning remain confined to the lab. High-end autonomous driving scenarios, such as driving in extreme weather and planning routes through complex transportation hubs, suffer from the same problem: they lack effective mechanisms for data collection and training.

A tiered approach is needed. AGI for the masses (e.g., DeepSeek, OpenAI) solves basic problems with general data, but breakthroughs in high-end fields demand specialized models, and building them depends on a sustainable way to acquire professional data and train on it. In medicine, federated learning breaks down data silos by enabling joint modeling without sharing raw data. This approach offers a lesson for autonomous driving data collection: a distributed system that covers extreme scenarios through vehicle-road coordination networks could break the data bottleneck.
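
Federated learning is commonly implemented with Federated Averaging (FedAvg): each site trains the shared model on its own private data, and a server averages the returned parameters weighted by sample count. The minimal sketch below assumes a one-feature linear model, plain gradient descent, and synthetic, already-normalized data for three hypothetical hospitals; production systems add secure aggregation, privacy protections, and far larger models.

```python
# Minimal FedAvg sketch: raw (x, y) records stay inside each client's list;
# only the model parameters (w, b) are exchanged with the server.
# ASSUMPTIONS: 1-D linear model y = w*x + b, synthetic normalized data.

from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one site


def local_update(w: float, b: float, data: Dataset,
                 lr: float = 0.1, epochs: int = 20) -> Tuple[float, float]:
    """Gradient descent on mean squared error using only this site's data."""
    n = len(data)
    for _ in range(epochs):
        gw = sum(2 * (w * x + b - y) * x for x, y in data) / n
        gb = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * gw
        b -= lr * gb
    return w, b


def fed_avg(clients: List[Dataset], rounds: int = 20) -> Tuple[float, float]:
    """Server loop: broadcast the global model, collect local models,
    and average them weighted by each client's sample count."""
    w, b = 0.0, 0.0
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        updates = [local_update(w, b, d) for d in clients]
        w = sum(len(d) * uw for d, (uw, _) in zip(clients, updates)) / total
        b = sum(len(d) * ub for d, (_, ub) in zip(clients, updates)) / total
    return w, b


if __name__ == "__main__":
    # Three hypothetical sites whose private data all follow y = 2x + 1.
    site_a = [(x, 2 * x + 1) for x in (-1.0, 0.0, 1.0)]
    site_b = [(x, 2 * x + 1) for x in (-0.5, 0.5)]
    site_c = [(x, 2 * x + 1) for x in (-1.0, -0.5, 0.5, 1.0)]
    w, b = fed_avg([site_a, site_b, site_c])
    print(f"learned w={w:.3f}, b={b:.3f}")
```

In each round only `w` and `b` cross site boundaries, yet the averaged model recovers the relationship shared by all three sites.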

Embodied Intelligence Breakthrough: From Virtual Interaction to Physical Cognition

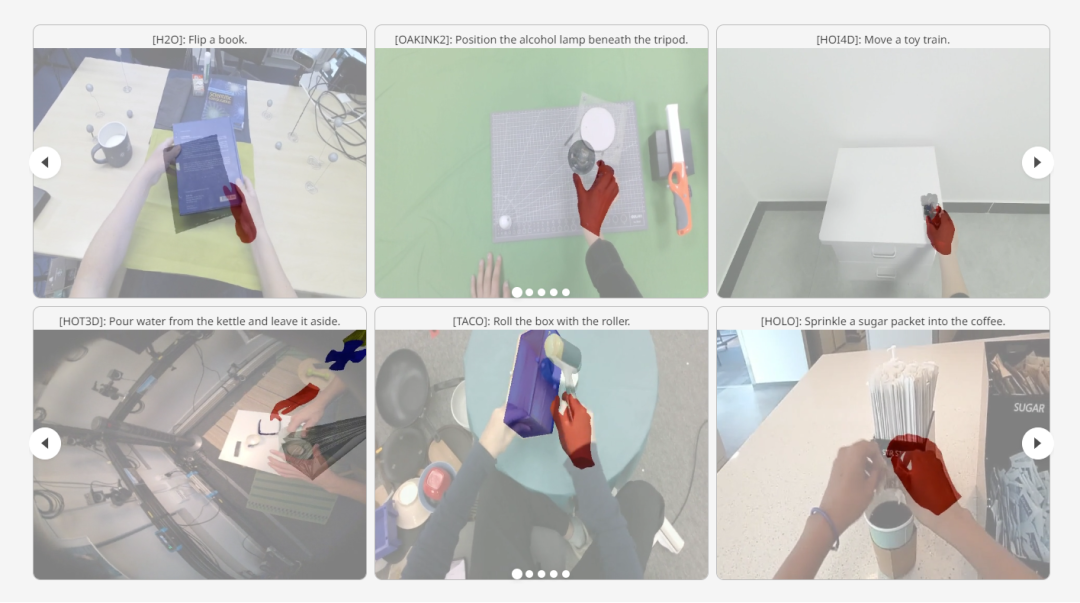

Against the backdrop of AI's data dilemma and the autonomous driving sensor debate, embodied intelligence offers a distinctive way out of the deadlock. Lu Zongqing's team at Peking University proposes learning human movements from internet videos to address AI's lack of physical interaction capabilities. Unlike conventional VLA (Vision-Language-Action) models, this method does not simply add an action module; it shifts the learning paradigm itself, letting models come to understand the physical world through pre-training on massive amounts of human motion data.

The team's practice is instructive. Annotating human joint movements across 15 million internet videos essentially builds a "physical interaction language model." This contrasts sharply with the sensor debate: while autonomous driving wrestles with the energy trade-off between LiDAR and vision, embodied intelligence explores teaching AI physical laws by observing humans. Experiments in the video game "Red Dead Redemption 2" showed that models trained on internet data struggle with physical interaction decisions, confirming the value of physical interaction data.
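
As a purely hypothetical illustration of what a "language model" over motion could mean, the sketch below quantizes one joint angle per video frame into discrete pose tokens and counts which token tends to follow which, a bigram model standing in for large-scale pre-training. The single-joint input, 30-degree bins, and function names are assumptions for illustration; this is not the method published by Lu Zongqing's team.

```python
# Hypothetical sketch: quantized poses act as "words"; a bigram counter learns
# which pose tends to follow which. NOT the Peking University team's method.

from collections import Counter, defaultdict
from typing import Dict, List, Optional

BIN_DEG = 30.0  # hypothetical quantization step for a joint angle


def tokenize(angles_deg: List[float]) -> List[int]:
    """Map each frame's joint angle to a discrete pose token."""
    return [int(a // BIN_DEG) for a in angles_deg]


def train_bigram(clips: List[List[float]]) -> Dict[int, Counter]:
    """Count token -> next-token transitions across all annotated clips."""
    table: Dict[int, Counter] = defaultdict(Counter)
    for clip in clips:
        tokens = tokenize(clip)
        for cur, nxt in zip(tokens, tokens[1:]):
            table[cur][nxt] += 1
    return table


def predict_next(table: Dict[int, Counter], token: int) -> Optional[int]:
    """Most frequent next pose token, or None if the token was never seen."""
    if token not in table:   # membership test does not create a defaultdict entry
        return None
    return table[token].most_common(1)[0][0]


if __name__ == "__main__":
    # Two made-up clips of an elbow angle (degrees) during a reaching motion.
    clips = [[10.0, 40.0, 75.0, 95.0], [5.0, 45.0, 80.0, 110.0]]
    model = train_bigram(clips)
    print(predict_next(model, tokenize([40.0])[0]))
```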

The significance of this work extends beyond technical progress to a shift in cognitive paradigm. Lu Zongqing's critique that current "world models" amount to little more than mapping and navigation exposes the barriers AI faces in understanding the physical world. A true world model must grasp "action-result" causality, for example that pushing a cup can make it fall, and learning this requires physical interaction data, not just semantic reasoning. This explains why embodied intelligence research treats internet videos as the scalable path: only such data offers rich physical interaction samples at scale.
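
The "action-result" idea can be made concrete as a transition function that maps a state and an action to the next state. In the sketch below the function is hand-written for a toy cup-on-a-table world; a learned world model would approximate such a function from data instead. The table length, push distance, and names are hypothetical.

```python
# Toy "world model": predicts the next physical state from the current state
# AND an action. Hand-written here; a learned model would approximate it.
# ASSUMPTIONS: 1-D table, fixed push distance, cup falls past the edge.

from dataclasses import dataclass, replace
from typing import List

TABLE_LENGTH_M = 1.0   # hypothetical position of the table edge
PUSH_STEP_M = 0.2      # hypothetical displacement per push


@dataclass(frozen=True)
class State:
    cup_x: float           # cup centre position along the table (m)
    on_table: bool = True


def step(state: State, action: str) -> State:
    """Predict the next state given an action ('push' or 'wait')."""
    if not state.on_table or action == "wait":
        return state                       # no push, no change
    if action != "push":
        raise ValueError(f"unknown action: {action}")
    new_x = state.cup_x + PUSH_STEP_M
    # Result of the action: the cup falls once its centre passes the edge.
    return replace(state, cup_x=new_x, on_table=new_x <= TABLE_LENGTH_M)


def rollout(state: State, actions: List[str]) -> State:
    """Apply a sequence of actions and return the predicted final state."""
    for a in actions:
        state = step(state, a)
    return state


if __name__ == "__main__":
    print(rollout(State(0.5), ["wait"] * 5))
    print(rollout(State(0.5), ["push"] * 3))
```

The same starting state ends differently depending only on the actions taken, which is the causal structure a pure mapping-and-navigation model does not capture.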

Real-time AI Network: Bridging Virtuality and Reality

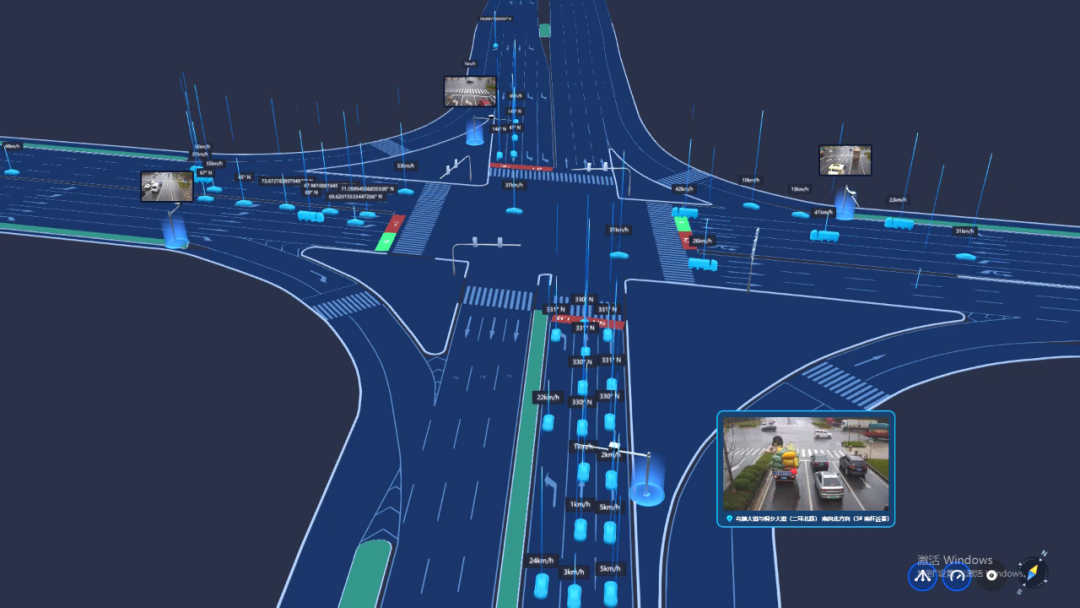

For autonomous driving and for AI more broadly, a real-time AI network spanning the physical world is crucial, because it ties virtual algorithmic models tightly to reality. In autonomous driving, such a network lets vehicles instantly perceive road changes, traffic signals, and the behavior of pedestrians and other vehicles.

For instance, after a sudden accident, the network can quickly alert surrounding vehicles so they can reroute. At complex intersections, it can recommend optimal passing times based on signal timing and traffic flow. Decisions grounded in this kind of real-time interaction are more reliable and safer than predictions drawn from historical data alone.
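
The rerouting scenario can be sketched as an incident broadcast followed by shortest-path replanning. The example assumes a directed road graph with travel times in seconds, runs Dijkstra's algorithm with the blocked segment excluded, and replans only vehicles whose current route uses that segment. The graph, the `Vehicle` type, and the `broadcast_incident` handler are hypothetical; real vehicle-road coordination systems exchange standardized messages that are not modeled here.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple

Graph = Dict[str, Dict[str, float]]  # node -> {neighbour: travel time in seconds}
Edge = Tuple[str, str]


def shortest_route(graph: Graph, start: str, goal: str,
                   blocked: Optional[Set[Edge]] = None) -> Optional[List[str]]:
    """Dijkstra's algorithm over directed road segments, skipping blocked ones."""
    blocked = blocked or set()
    dist: Dict[str, float] = {start: 0.0}
    prev: Dict[str, str] = {}
    heap: List[Tuple[float, str]] = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == goal:                       # first pop of the goal is optimal
            path = [goal]
            while path[-1] != start:
                path.append(prev[path[-1]])
            return path[::-1]
        if d > dist.get(node, float("inf")):
            continue                           # stale heap entry
        for nbr, cost in graph.get(node, {}).items():
            if (node, nbr) in blocked:
                continue                       # segment reported as closed
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    return None                                # goal unreachable


@dataclass
class Vehicle:
    vid: str
    position: str
    destination: str
    route: Optional[List[str]]


def broadcast_incident(vehicles: List[Vehicle], graph: Graph,
                       blocked_edge: Edge) -> List[str]:
    """Hypothetical network-side handler: notify every vehicle whose planned
    route uses the blocked segment, replan it, and return the affected IDs."""
    affected = []
    for v in vehicles:
        route = v.route or []
        if blocked_edge in set(zip(route, route[1:])):
            v.route = shortest_route(graph, v.position, v.destination,
                                     {blocked_edge})
            affected.append(v.vid)
    return affected


if __name__ == "__main__":
    roads = {"A": {"B": 60.0, "C": 90.0}, "B": {"D": 60.0},
             "C": {"D": 90.0}, "D": {}}
    fleet = [Vehicle("car1", "A", "D", ["A", "B", "D"]),
             Vehicle("car2", "A", "C", ["A", "C"])]
    print(broadcast_incident(fleet, roads, ("B", "D")))   # ['car1']
    print(fleet[0].route)                                  # ['A', 'C', 'D']
```

Only `car1` is affected, because its planned route crosses the closed segment; `car2` keeps its original plan, so the network avoids disturbing traffic that the incident does not touch.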

At this crossroads of industrial transformation, technological debates will give way to joint ecosystem building. Whether the task is choosing autonomous driving sensors, overcoming AI data bottlenecks, or exploring embodied intelligence, the essence lies in finding the best fit between technology and scenario. An open, collaborative ecosystem mindset will serve the industry better than clinging to a single technical route.

As the AI industry shifts from "data-driven" to "cognition-driven," exploring underlying paradigms may prove more valuable than short-term commercialization. As LiDAR beams and embodied intelligence converge, we are witnessing not just a competition between technical routes but a cognitive revolution that will shape AI's future.