The Era of General-Purpose Computing Has Ended! Jensen Huang's In-Depth Interview Reveals for the First Time Why He Invested in OpenAI

![]() 10/04 2025

10/04 2025

![]() 535

535

Editor: Zhongdianjun

On September 26, Jensen Huang of NVIDIA discussed topics such as AI industry trends, the future of computing, and NVIDIA's competitive moat in a recent interview, revealing for the first time the reasons behind his $100 billion investment in OpenAI.

He believes that the failure of Moore's Law has led to no significant improvements in transistor costs and energy consumption, making traditional computing unable to continue providing necessary performance enhancements. Against this backdrop, AI demand is experiencing dual exponential compound growth. Firstly, AI user adoption is exploding exponentially. Secondly, the way AI reasons (Inference) has undergone a qualitative change, evolving from simple one-time answers to complex 'thinking' processes. This upgrade in reasoning paradigms has caused the computational load required per AI use to grow exponentially, potentially leading to a 1 billion-fold increase in reasoning demand.

AI infrastructure is triggering an industrial revolution. General-purpose computing has ended, and trillions of dollars of existing global computing infrastructure (including search, recommendation engines, and data processing) is migrating from CPUs to AI-accelerated computing. NVIDIA's AI infrastructure will generate tokens to enhance human intelligence, ultimately bringing enormous economic benefits. The current market size of $400 billion annually is expected to grow at least tenfold in the future.

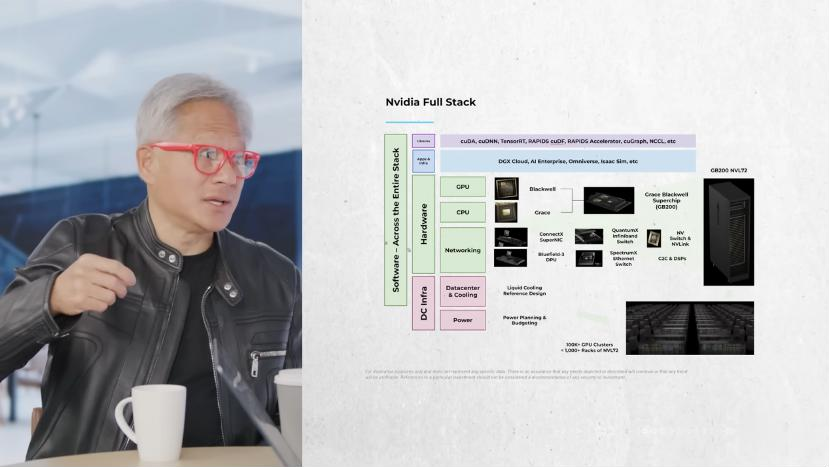

NVIDIA's strategic focus is not merely being a chip company but an AI infrastructure partner, building strong competitive barriers through 'Extreme Co-Design.' Given the limited performance improvements of individual chips, NVIDIA must innovate and optimize simultaneously across the entire stack, including algorithms, models, systems, software, networking, and chips. This full-stack design enables NVIDIA to achieve performance leaps, such as from Hopper to Blackwell, in a short time.

Huang emphasized that the core value of the strategy lies in providing the highest performance per unit of energy consumption. For hyperscale customers with power constraints, even if competitors' chips were free, the enormous opportunity cost of abandoning NVIDIA's systems would be unacceptable.

In terms of business collaboration, NVIDIA views OpenAI as the next trillion-dollar hyperscale company and is collaborating with it to build AI infrastructure, including assisting OpenAI in constructing its first self-built AI factory.

Key Takeaways from Jensen Huang's Interview:

1. Exponential Growth in AI Computing Demand, with Reasoning (Inference) as the Key Driver

Currently, AI training operates under three laws: pre-training, post-training, and inference. Traditional reasoning was 'one-shot,' but the new generation has evolved into 'thinking,' involving research, fact-checking, and learning before answering. This 'thinking' process has caused the computational load required per AI use to grow exponentially, leading to an expected 1 billion-fold increase in reasoning demand. This astonishing growth stems from two exponential compound effects: the exponential growth in the number of AI clients and usage rates, and the exponential growth in computational load per use.

2. OpenAI is Poised to Become the Next Trillion-Dollar Hyperscale Company

Huang believes OpenAI is likely to become the next trillion-dollar hyperscale company, offering both consumer and enterprise services. NVIDIA's investment in OpenAI is considered 'one of the wisest investments imaginable.' NVIDIA is helping OpenAI build its AI infrastructure for the first time, covering chips, software, systems, and AI factories at all levels.

3. AI Infrastructure Represents a New Industrial Revolution with Enormous Market Potential

AI infrastructure construction is seen as an industrial revolution. The vast majority of structured and unstructured data processing currently runs on CPUs. Huang anticipates that all this data processing will migrate to AI in the future, representing a massive market. Trillions of dollars of existing global computing infrastructure, including traditional hyperscale computing infrastructure for search, recommendation engines, and shopping, will need to shift from CPUs to GPUs for AI-accelerated computing. AI infrastructure will generate tokens to enhance human intelligence, ultimately yielding enormous economic benefits, such as using $10,000 worth of AI to increase the productivity of a $100,000 salary employee by two to three times. The current annual market size for AI infrastructure is around $400 billion, but the total addressable market is expected to grow at least tenfold in the future.

4. Wall Street Underestimates NVIDIA's Growth Rate

Wall Street analysts predict that NVIDIA's growth will stagnate by 2027. Huang refuted this view, arguing that computing resources in the market are still in short supply. He emphasized that the likelihood of oversupply is extremely low until all general-purpose computing shifts to accelerated computing, all recommendation engines are AI-based, and all content generation is AI-driven. NVIDIA is responding to market demand, and future market demand for AI infrastructure will be enormous.

5. NVIDIA Builds Core Competitive Advantages Through 'Extreme Co-Design'

Due to the failure of Moore's Law, transistor costs and power consumption remain largely unchanged, making it impossible to enhance performance through traditional means. To address this challenge, NVIDIA has adopted 'Extreme Co-Design,' optimizing and innovating simultaneously at the system, software, networking, and chip levels.

Huang believes that NVIDIA's powerful system platform advantages constitute a competitive moat, even surpassing the potential cost advantages of competitors' ASIC chips. Since customers' operations are constrained by power, they seek the highest returns per watt. NVIDIA's depth and extreme co-design achieve the best performance per watt. If customers abandon NVIDIA's systems for lower-performing chips, even if the chips were free, the high opportunity cost (potential loss of 30 times revenue) would still lead them to choose NVIDIA. Therefore, NVIDIA believes it is building complex AI factory systems, not just chips.

Below is the Original Interview Text:

1. The Paradigm Revolution and Exponential Growth in AI Computing

Host: Jensen, glad to have you back, and of course, my partner Clark Tang.

Huang: Welcome to NVIDIA.

Host: Your glasses are beautiful. They really look good on you. Now everyone will want you to keep wearing them. They'll say, 'Where are the red glasses?' So it's been over a year since our last podcast. Over 40% of your revenue now comes from inference. But with the emergence of chain-of-reasoning, inference is about to explode.

Huang: Yes, it's about to grow 1 billion times. This is something most people haven't fully internalized yet. This is the industrial revolution.

Host: Honestly, I've been running this podcast every day since then. On the AI timescale, it's been about 100 years. I was rewatching that podcast episode. Recently, we've discussed many things, but for me, the most profound moment was when you slammed the table. Do you remember that? Back then, in pre-training, there was a bit of a lull, right? People were saying, 'Oh my god, pre-training has hit its limit.' This was about a year and a half ago. You also said that inference wouldn't be 100 times, 1000 times, but would grow 1 billion times. That brings us to today.

Huang: We now have three scaling laws, right? We have the Pre-training Scaling Law, the Post-Training Scaling Law. Post-training is like AI practicing, repeatedly practicing a skill until it gets it right. It tries many different methods. And to do that, you have to reason. So now in reinforcement learning, training and reasoning are integrated. This is called post-training. The third is inference. Previously, reasoning was done in a one-shot manner. But we're grateful for this new form of reasoning—thinking, which occurs before the model answers. So now you have three scaling laws. The longer you think, the better the quality of your answer. While you're thinking, you do research, check some facts, learn something, think more, learn more, and then generate an answer. Don't generate it right away. So thinking, post-training, pre-training—we now have three scaling laws instead of one.

Host: You said this last year, but how confident are you this year about inference growing 1 billion times and where it will take intelligence levels? Are you more confident than a year ago?

Huang: I'm more confident this year because of the current agentic systems. And AI is no longer just a language model; it's a system composed of language models, all running concurrently. Some are using tools, some are doing research, and there's a lot more. And it's all multimodal—look at the videos AI has generated. It's truly incredible stuff.

2. OpenAI is Poised to Become the Next Trillion-Dollar Hyperscale Company

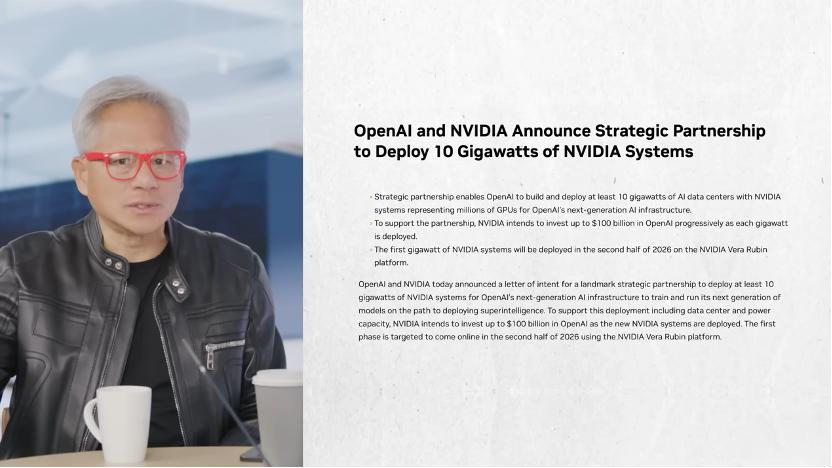

Host: This indeed brings us to the groundbreaking moment everyone's been talking about this week. A few days ago, you announced a massive deal with OpenAI's Stargate project. You'll be the priority partner and invest $100 billion in the company. Over time, they'll build AI data centers with at least 10 gigawatts of capacity, and if they use NVIDIA for data center construction, it could mean up to $400 billion in revenue for NVIDIA. So please help us understand and tell us about that partnership. What does it mean to you, and why is this investment so meaningful for NVIDIA?

Huang: Let me answer the last question first. I believe OpenAI is very likely to become the next trillion-dollar hyperscale company. Hyperscale companies are like Meta—Meta is a hyperscale company, Google is a hyperscale company. They'll have services for both consumers and enterprises. OpenAI is highly likely to become the next company worth trillions of dollars. If so, this is an opportunity to invest before that prediction comes true. These are probably some of the wisest investments we can imagine. You have to invest in what you understand. As it turns out, we happen to be familiar with this field. So there's an opportunity to invest, and the return on that money will be substantial. So we're more than happy to have the opportunity to invest. We don't necessarily have to invest; we have no obligation to invest, but they're offering us the opportunity.

Huang: Now let me start from the beginning. So we're collaborating with OpenAI on multiple projects. First, the first project is accelerating the build-out of Microsoft Azure, and we'll continue to do that. And that collaboration is going very well; we have several more years of construction to do, with hundreds of billions of dollars of work just there. Second is the build-out of OCI (Oracle Cloud Infrastructure). I think there are about five, six, seven more gigawatts to be built, and we'll be working with OCI and OpenAI, as well as with SoftBank. Those projects are under contract, and we're processing them. There's a lot of work to do. Then the third, the third is CoreWeave. So the question is, what is this new partnership? This new partnership is about helping OpenAI, collaborating with OpenAI, to build their own self-built AI infrastructure. So this is us directly helping them at the chip level, at the software level, at the system level, at the AI factory level, to become a fully operational hyperscale company. This will continue for a while, and you know they're going through two exponential growths. The first exponential growth is the number of customers growing exponentially. The reason is that AI is constantly getting stronger, and these use cases are getting better. Almost every application is now connected to OpenAI, so they're experiencing exponential growth in usage. The second exponential is the computational exponential per use. It's now not just doing one-time reasoning but thinking before answering. So these two exponential growths are compounding on their computational needs, so we, we can build out these different projects. So this last one is an overlay on top of everything they've announced, all the work we've been collaborating on with them, this is on top of that.

Host: One thing that interests me is that in your mind, there's a high probability it will become a company worth trillions of dollars, and you think it's a good investment. At the same time, you know, they've been outsourcing to Microsoft to build data centers. Now they want to build a full-stack factory themselves.

Huang: They basically want to establish a relationship with us like the one Elon has with X.

Host: Yes. When you think about the advantages of having the Colossus data center, they're building a full stack—that's a hyperscale cloud service provider because if they don't use the capacity themselves, they can sell it to others. Just like the Stargate project, they're building huge capacity, and they think they'll need to use most of it, but it also allows them to sell it to others. It sounds a lot like AWS or GCP or Azure. Is that what you mean?

Jensen Huang: Yeah. I think they're very likely to use it themselves, but they want to maintain a direct relationship with us—a direct working relationship and a direct procurement relationship. Just like what Zuck and Meta have done with us, it's completely direct. Our relationship with Sundar and Google is direct, and our collaboration with Satya and Azure is also direct. Right? So they've reached a sufficient scale where they believe it's time to start building these direct relationships. I'm delighted to support that for them, and everyone is very much in favor of it.

3. AI Industrial Revolution and Market Potential

Host: So there's one thing I find quite curious. You just mentioned Oracle, with $300 billion, Colossus, what they're building. We know what Sovereigns are building, and we know what hyperscale cloud providers are building. Sam is talking about multi-trillion-dollar scales, but among the 25 sell-side analysts on Wall Street covering your stock, if I look at the consensus expectations, they basically show your growth stalling from 2027 onwards. An 8% growth from 2027 to 2030. These are the 25 people whose sole job is to get paid to predict NVIDIA's growth rate.

Jensen Huang: We're very comfortable with this situation. We're used to it, and we're fully capable of surpassing these numbers.

Host: I understand that, but there's an interesting disconnect. I hear this phrase every day on CNBC and Bloomberg, and I think it refers to the situation where shortages eventually turn into oversupply. They say, 'Well, 2026 might still see shortages, but by 2027, you might not need those anymore.' But to me, this is fascinating. I think it's important to point out that these consensus forecasts aren't happening, right? We also aggregate forecasts and make projections for the company, taking all these numbers into account. And what this shows me is that despite being two and a half years into the AI era, there's still a massive divergence in belief. What we hear from Sam Altman, you, Sundar, Satya, still diverges from Wall Street.

Jensen Huang: I don't think it's contradictory. First, for builders, our goal should be to build for the opportunity. Let me give you three key points to consider, and these three points will make you feel even more confident about NVIDIA in the future. The first point, and this is the most important one from a physical law perspective, is that general-purpose computing is over. The future belongs to accelerated computing and AI computing. That's the first point. The way to understand this is to consider the trillions of dollars worth of computing infrastructure in the world that needs to be updated. So first, you have to realize that this is undisputed. Everyone will say, 'Yes, we completely agree. General-purpose computing is over. Moore's Law is dead.' So what does that mean? General-purpose computing will shift to accelerated computing. Our collaboration with Intel recognizes that general-purpose computing needs to merge with accelerated computing to create opportunities for them.

Jensen Huang: The second key point is that the first use cases for AI are already ubiquitous. It's used in search, recommendation engines, and shopping. The underlying hyperscale computing infrastructure for recommendations, which was primarily done by CPUs in the past, is now shifting to GPUs. So for classic computing, it's shifting from general-purpose computing to accelerated computing and AI; for hyperscale computing, it's shifting from CPUs to accelerated computing and AI. That's the second point. Companies like Meta, Google, ByteDance, Amazon, and others are shifting their classic, traditional hyperscale expansion approaches to AI, and that's hundreds of billions of dollars. We don't even need to consider the new opportunities AI creates; this is about AI transforming how you used to do things into a new way of doing things. Now, let's talk about the future. So far, I've only roughly discussed existing things—just ordinary matters where past practices are no longer valid, like shifting from using oil lamps to electricity, and from using propeller planes to jet planes. That's it. That's all I've been talking about.

Jensen Huang: Now, what's incredibly unbelievable is what happens when you shift to AI, when you shift to accelerated computing? What new applications emerge? That's what we've been discussing with all the AI-related content. What is that opportunity? What does it look like? Simply put, just as electric motors replaced labor and physical activities, now we have AI, AI supercomputers, and AI factories generating tokens to enhance human intelligence. And what percentage of the world's GDP does human intelligence account for? About 55%, 65% of the world's GDP? Let's call it $50 trillion. And that $50 trillion will be augmented by something. Let's come back to the individual. Suppose I hire an employee with a salary of $100,000, and then I add a $10,000 AI for that $100,000 employee, and that $10,000 AI ends up doubling the efficiency of the $100,000 employee, resulting in a tripling of productivity. I'm doing this for everyone in our company right now. Every software engineer, every chip designer in our company, already has an AI working alongside them—100% coverage. As a result, we're manufacturing better chips, and that number is growing. So our company is growing faster. We're hiring more people. Our productivity is higher. Our revenue is higher. Our profitability is stronger. What's not to like? Now, apply NVIDIA's story to the world's GDP. What's likely to happen is that $50 trillion will be augmented by, let's just pick a number, $10 trillion. That $10 trillion needs to run on a machine. Now, what's different about AI compared to past IT is that, to some extent, software was written a priori and then ran on a CPU, with a person operating it. In the future, of course, AI will generate these tokens, which must be generated by machines, and they'll be thinking. So that software is constantly running, whereas in the past, software only needed to be written once. Now, software is actually being continuously written—it's thinking. To enable AI to think, it needs a factory. So let's say $10 trillion worth of tokens are generated, with a 50% gross margin, and $5 trillion of that requires a factory—yes, an AI infrastructure. So if you tell me that the world's capital expenditure is about $5 trillion a year, yes, I would say the math seems to add up. That's the future: shifting from general-purpose computing to accelerated computing, replacing all hyperscale computing with AI, and then augmenting human intelligence to drive global GDP growth.

Host: Now, we estimate this market to be around $400 billion annually, with the total addressable market growing four to five times larger than current estimates.

Jensen Huang: Last night, Alibaba's Eddie Wu said that from now to ten years later, they will increase their data center computing capacity by tenfold. How much did you just say? Four times? It's ten times. They're going to increase their computing capacity by ten times. We correlate that with computing capacity, and NVIDIA's revenue is almost tied to computing capacity. He also mentioned that token production doubles every few months. This means that performance per watt must continue to grow exponentially. That's why NVIDIA is focused on dramatically improving performance per watt, which essentially represents future revenue.

Host: Based on this assumption, its historical context is quite interesting: for two thousand years, GDP saw essentially no growth until the Industrial Revolution brought acceleration, followed by the Digital Revolution further driving growth. As you mentioned, Scott Besson (U.S. Secretary of the Treasury) also noted that he believes GDP growth will reach 4% next year. World GDP growth will accelerate because we now have billions of 'AI colleagues' working for us. If GDP is the output of fixed labor and capital, then it must accelerate. We have to consider the impact of AI technological development.

Jensen Huang: It's creating a new AI agent industry—there's no doubt about it. OpenAI is the company with the highest revenue growth in history, and they're growing exponentially. So AI itself is a rapidly growing industry. And AI requires a factory behind it, an infrastructure to support it. This industry is growing. My industry is growing. Because my industry is growing, the downstream industries that support us are also growing, and energy is growing. This is a revitalization of the energy industry—nuclear energy, gas turbines. Look at the companies in our infrastructure ecosystem below; they're performing exceptionally well, with every company growing.

Host: These numbers have everyone talking about a bubble. Zuckerberg said on a podcast last week, 'Listen, I think at some point we're very likely to have an air pocket, where Meta might actually overspend by $10 billion or more.' But he said it doesn't matter. It's so crucial to the future of his business that it's a risk they have to take. But when you think about it like that, it sounds a bit like a prisoner's dilemma, right? Our current estimate is that by 2026, we'll have $100 billion in AI revenue. It includes Meta and those running recommendation engines on GPUs or search, etc.

Jensen Huang: What industry is that exactly? Which industry is already at hyperscale? Hyperscale is in the trillions. By the way, before anyone starts from zero, the industry is already moving toward AI; you have to start there.

Host: But I think skeptics would say we need to grow from $100 billion in AI revenue in 2026 to at least $1 trillion in AI revenue by 2030. You were just talking about roughly $5 trillion a minute ago—if you did a bottom-up estimate, can you envision growing AI-driven revenue from $100 billion to $1 trillion in the next five years? Are we growing that fast?

Jensen Huang: Yes, and I'd go further by saying we're already there. Because those hyperscale cloud providers, they've already shifted from CPUs to AI. Their entire revenue base is now AI-driven. You can't do TikTok without AI, you can't do YouTube Shorts without AI, you can't do those amazing things without AI. What Meta is doing with customized, personalized content—you can't do that without AI. Those things used to be done by people; previously, people prepared content a priori, created four options that the recommendation engine would then select from, whereas now, it's an infinite number of options generated by AI.

Host: But those things already exist. For example, our shift from CPUs to GPUs was largely for those recommendation engines.

Jensen Huang: No, that's actually quite new. How many years have Meta's GPUs been around? It's very new. And GPUs for search? Also very new.

Host: So your argument would be that the probability of us having $1 trillion in AI revenue by 2030 is almost certain because we've practically already reached that point.

Jensen Huang: Let's discuss incremental improvements from where we are now. Now we can talk about increments.

Host: When you analyze from the bottom up or top down, I just heard you say top down about the percentage of global GDP. What do you think the probability is of oversupply occurring? What's the probability that we'll encounter oversupply in the next three, four, or five years?

Jensen Huang: Until we fully shift all general-purpose computing to accelerated computing and AI. Until we do that, I believe the chance is extremely low. Until all recommendation engines are AI-based, until all content generation is AI-based—because consumer-facing content generation is largely recommendation systems and so on. Until all those previously typical hyperscale efforts now shift to AI, from shopping to e-commerce to all those things, until everything is done—until then.

Host: But all of this is new construction, right? When we talk about trillions, we're investing for a future much farther ahead than now. Is that your responsibility? Even if you see a slowdown or some kind of oversupply coming, do you still have an obligation to invest this money? Or is it the kind of thing where you just wave the flag to the ecosystem and say, 'Go out and build, and if at some point we see this slowdown, we can always scale back investments'?

Jensen Huang: Actually, the situation is quite the opposite. Because we're at the end of the supply chain, we respond to demand. And right now, all the VCs will tell you that the world's computing resources are in short supply. It's not that the world lacks GPUs; if they gave me an order, I would manufacture them. Over the past few years, we've completely overhauled the supply chain, from wafers to co-packaging HBM memory—all the supply chain behind me, all those technologies—we've really prepared thoroughly. If it needs to double, we'll double it, so the supply chain is ready. Now we're just waiting for the demand signal.

Jensen Huang: When CSPs, large cloud providers, and our customers give us their annual plans, we build according to that plan. Now, the situation is that every forecast Wall Street has given us has proven to be wrong because they've underestimated. So in recent years, we've always been in emergency response mode—whatever the forecast given, it's always significantly higher than last year, but still not enough. Last year, Satya seemed to have a bit of a setback, it seemed like that—some called him the adult in the room. A few weeks ago, he said, 'Hey, I built 2 gigawatts this year too, and we're going to accelerate in the future.' Do you see some traditional large cloud providers (hyperscalers) seeming a bit slow, or perhaps slower than what we're saying with Elon's X and the Stargate project? To me, they're all more committed now and accelerating.

Jensen Huang: Because of the second exponential growth. We've already experienced one exponential growth—that's the adoption rate and engagement of AI. The second exponential growth that's been triggered is the improvement in reasoning capabilities. This was our conversation a year ago. Back then, we said, 'Hey, listen. When you have AI remember and generalize an answer, that's basically pre-training.' A year ago, reasoning emerged, research emerged, tool use emerged, and now you have a thinking AI. This will consume even more computing resources.

Host: As you said, certain hyperscale customers do have internal workloads that must migrate from general-purpose computing to accelerated computing, so they've been building throughout the cycle. I think perhaps some hyperscale cloud providers have different workloads, so they're less certain about how quickly they can digest the demand. But now everyone has concluded that they've vastly underestimated the scale needed.

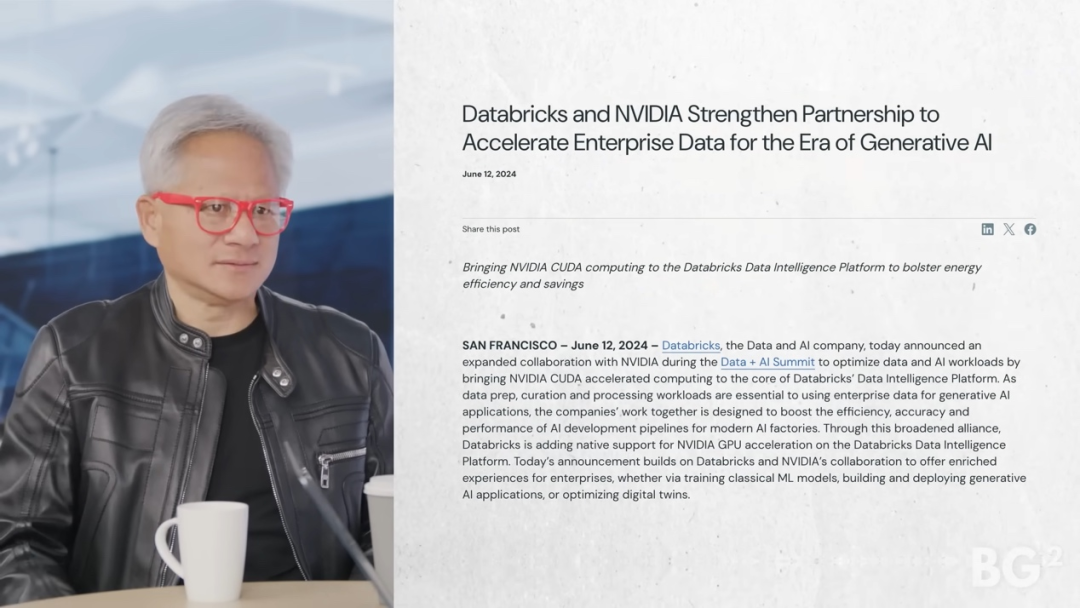

Huang Renxun: One of my favorite examples is traditional data processing—structured data and unstructured data, just old-fashioned data processing. And very soon, we will announce a large-scale accelerated data processing initiative. Data processing represents the vast majority of CPU usage in the world today, and it still runs entirely on CPUs. If you go to Databricks, most of it is CPUs; you go to Snowflake, mostly CPUs. Oracle's SQL processing, mostly CPUs. Everyone is using CPUs for this, and in the future, all of this will migrate to AI data. That's a massive market we're going to transition into. Everything NVIDIA does requires an acceleration layer, requires domain-specific data processing recipes, and we have to build it, but it's coming.

Host: So one of the objections people raise is, I turned on CNBC yesterday, and they said, 'Oh, oversupply, bubble.' When I turned on Bloomberg, they were talking about round-tripping transactions and circular revenue. These arrangements are when companies engage in misleading transactions that artificially inflate revenue without any real economic foundation. In other words, it's growth propped up by financial engineering, not driven by customer demand. Of course, the classic examples everyone cites are Cisco and Nortel during the last bubble 25 years ago. So when you or Microsoft or Amazon invest in companies that are also your big customers—in this case, you invested in OpenAI. At the same time, OpenAI is buying tens of billions of dollars' worth of chips. When Bloomberg analysts and others fuss about circular revenue or round-tripping, what are they getting wrong?

Huang Renxun: 10 gigawatts is roughly equivalent to $400 billion, right? And that $400 billion will have to be funded primarily by their offtake agreements. Their revenue is growing exponentially and must be funded by their capital—the equity they raise and whatever debt they can secure. Those are the three financing tools. The equity and debt they can raise are tied to the credibility of the revenue they can sustain. So, savvy investors and savvy lenders take all of that into account. Fundamentally, that's what they do. That's their company, not my business. Of course, we have to maintain very close ties with them to ensure that the support we build facilitates their continued growth. This is about revenue, not about investment. The investment side isn't tied to anything. This is an opportunity to invest in them. As we mentioned earlier, this is likely to become the next multi-trillion-dollar hyperscale company. Who wouldn't want to be an investor there? My only regret is that we weren't invited to invest earlier. I remember those conversations. We were too poor and underinvested, you know? And I should have given them all our money.

Host: The reality is, if you don't do your job and keep up, if Vera Rubin doesn't turn out to be a good chip, they can go buy other chips and put them in those data centers. They're not obligated to use your chips. As you said, you view this as an opportunistic equity investment.

Huang Renxun: We've done a lot of brilliant investing in this area. We invested in xAI, CoreWeave—we were so smart.

4. NVIDIA's Competitive Edge and Strategy

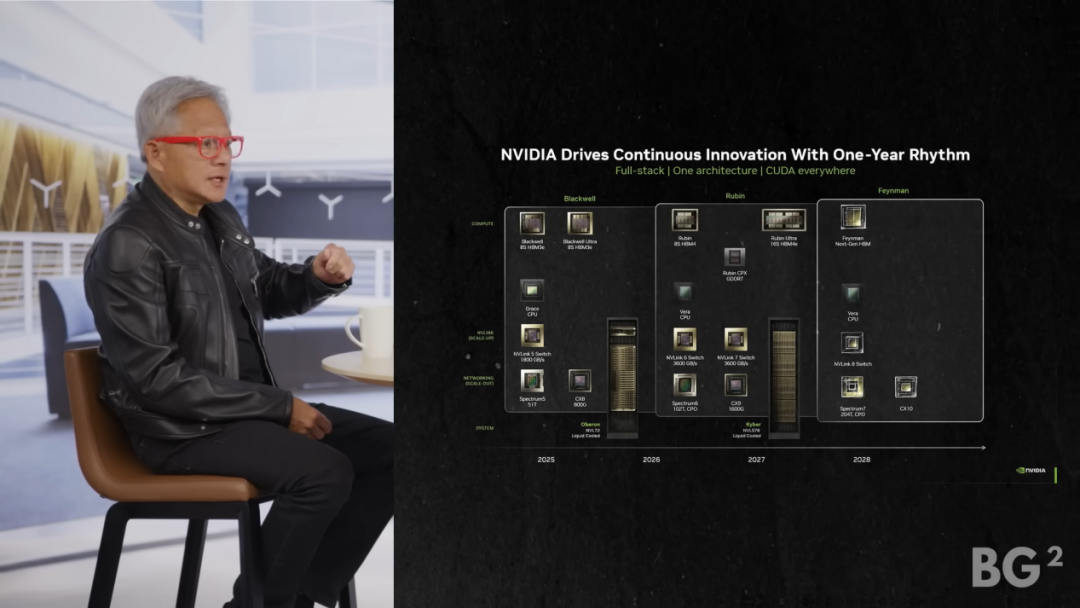

Host: Let's dive deeper into system design, then. In 2024, you switched back to an annual release cycle, and then you did a massive upgrade that required a major overhaul of data centers alongside Grace Blackwell. In the second half of 2026, we'll get Vera Rubin. In 2027, Ultra will be released, and then in 2028, Feynman. How's the annual release cycle going? Is it smooth? What's the primary goal of adopting this annual release cycle? And does NVIDIA's internal AI enable you to execute this annual release cycle?

Huang Renxun: Yes. Without AI, NVIDIA's speed, our cadence, our scale would be limited. So without the AI we have now, it would be impossible to build what we've built. Why are we doing this? Satya has said it, Sam has said it—the token generation rate is rising exponentially, and customer usage is growing exponentially. I think they have around 800 million weekly active users or something like that. I mean, we're not even two years from the rise of ChatGPT, right? So the first thing is, because the token generation rate is rising at such an incredible pace—two exponentials stacked on top of each other—unless we improve performance at an incredible pace, the cost of token generation will continue to rise because Moore's Law is dead because transistors are basically the same price every year now, roughly the same power. Between those two fundamental laws, unless we come up with new techniques to reduce costs, even if there's some variation in growth, you give someone a few percentage points discount—how does that make up for the gap between two exponentials? So we have to improve our performance every year at a rate that keeps up with that kind of exponential growth. So in this context, I think from Kepler all the way to Hopper, the performance improvement was roughly 100,000 times. That was the starting point of NVIDIA's AI journey—100,000 times improvement over 10 years. Between Hopper and Blackwell, we achieved that growth—30 times in one year—because of NVLink 72. And then we'll get another X-factor with Rubin, and then another X-factor with Feynman. And the reason we do this is because transistors aren't helping us that much, right? Moore's Law, to a large extent, is like that. Density is going up, but performance isn't going up with it. So if that's the case, one of the challenges we have to face is that we have to take the entire problem apart at the system level and change every single chip simultaneously, as well as all the software stacks and all the systems, all at the same time. Extreme limit codesign. No one has ever designed at this level before. We change the CPU, revolutionize the CPU, the GPU, the network chip, NVLink scaling, Spectrum X scaling. I hear people say, 'Oh, yeah, this is just Ethernet.' Spectrum X is not just Ethernet. People are starting to realize, 'Oh my god, the X-factor is pretty amazing.' NVIDIA's Ethernet business is the fastest-growing Ethernet business in the world. So to scale up, of course, now we have to build larger systems, so we scale out—multiple AI factories connected together. And we do this at an annual cadence. So now we have an exponential of an exponential, self-accelerating at a technical level. This allows our customers to drive down token costs and continuously make those tokens more and more intelligent through pre-training, post-training, and thinking. And the result is that as AI gets smarter, it will be used more. And as it's used more, it will grow exponentially.

Host: For those who might not be as familiar, what does extreme co-design mean?

Huang Renxun: Extreme co-design means you have to optimize the model, the algorithms, the systems, and the chips all at the same time. You have to innovate outside the box. Because Moore's Law says you just keep making the CPU faster and faster, and everything gets faster—you innovate inside one box, making that chip faster. What do you do if that chip can't speed up anymore? You innovate outside the box. So NVIDIA has really changed a lot because we did two things. We invented CUDA, we invented the GPU, and we introduced the idea of large-scale co-design at a massive scale. That's why we've ventured into all these industries. We're creating all these libraries and doing co-design, first at the full-stack extreme—it even goes beyond software and GPUs, now at the data center level, switches and networks, and all that software inside the switches, in the networks, in the NICs, scaling up, scaling out, optimizing in all those areas. So from Hopper to Blackwell, it's equivalent to a 30x improvement. No Moore's Law could achieve that. So that's the result of extreme co-design. Is that because NVIDIA did it? That's why. That's why we've ventured into networking, switching, scaling up, scaling out, and cross-domain scaling, as well as making CPUs, making GPUs, and making NICs. You know, that's why NVIDIA is so rich in software and talent. We submit more open-source software contributions in the world than almost anyone. Except for one other company, I think it's AI2 or something like that. So we have this incredible wealth of software resources, and that's just in AI. Don't forget computer graphics, digital biology, and autonomous vehicles—the amount of software we produce as a company is astonishing. This allows us to do deep and extreme co-design.

Host: I heard from one of your competitors that this is done because it helps drive down the cost of token generation, but at the same time, your annual release cycle makes it almost impossible for competitors to keep up. The supply chain is locked in even more because you've given them three years of visibility. So now the supply chain—

Huang Renxun: Before you ask the question, consider this. For us to make hundreds of billions of dollars in transactions every year regarding AI infrastructure construction—think about how much capacity we had to start with a year ago. We're talking about hundreds of billions of dollars in wafer starts and DRAM purchases, etc. The scale now is such that almost no company can keep up.

Host: So would you say your competitive advantage is stronger now than it was three years ago?

Huang Renxun: First of all, there's more competition than ever before, but now it's harder than ever. The reason I say that is because wafer costs are rising. That means unless you're co-designing at an extreme scale, you can't achieve that kind of 'X-factor' level of growth. Unless you're handling six, seven, eight chips a year—this isn't about making ASICs, this is about building an AI factory. And there are many chips inside this system, all co-designed and working together, and they deliver that 10x effect we get almost regularly. First, co-design is extremely extreme. Second, scale is extremely extreme. When your customer deploys 1 gigawatt-hour, that's 400,000, 500,000 GPUs. Getting 500,000 GPUs to work together is a miracle. Your customer is taking a huge risk on you, and you have to ask yourself, which customer would place a $50 billion purchase order on a new architecture? You just designed a brand-new chip, you're excited and thrilled about it, and you just showed the first silicon—who gives you a $50 billion purchase order? Why would you start $50 billion in wafer production for a chip that just taped out? But for NVIDIA, we can do that. Because our architecture is fully validated. Our customer base is unbelievably large, and now our supply chain scale is unbelievable. Who would start doing all of that for a company, pre-building all of that, unless they knew NVIDIA could deliver? Right? They believe we can serve all our customers globally. They're willing to invest hundreds of billions of dollars at once.

Host: You know, one of the biggest key debates and controversies in the world is the GPU versus ASIC question. Google's TPUs, Amazon's Trainium—it seems like everyone from ARM to OpenAI to Anthropic is getting involved. Last year, you said, you know, we're building systems, not chips, and you're driving performance improvements through every part of the stack. You also said that many of these projects might never reach production scale, but given that Google's TPU seems to have found success, how do you view this evolving landscape now?

Huang Renxun: Google had the advantage of foresight. Remember, they started TPU1 before everything began. This is no different from a startup. You should go create a startup, and you should build a startup before the market grows. When the market reaches trillions of dollars, you shouldn't still be a startup. This fallacy, which all venture capitalists know, that a few percentage points of market share in a massive market will lead to success—that you can become a large company—is fundamentally wrong. You should have taken the entire small company—that's what NVIDIA did, right? That's what the TPU did when it was just the two of us.

Host: Well, you'd better hope that industry really becomes enormous. You're creating an industry.

Huang Renxun: Exactly. That's the challenge for those building ASICs. It seems like an attractive market, but remember, this attractive market evolved from a chip called the GPU. So I just described an AI factory, and you just saw, I just announced a chip called CPX for context processing and diffusion video generation—a very specialized workload, but an important one inside the data center. I mentioned earlier a possible processor for AI data processing because, guess what? You need long-term memory, you need short-term memory, KV cache processing is incredibly intensive—AI memory is a big deal, and you want your AI to have a good memory. And processing everything related to KV caches in the system is really complicated—maybe it needs a dedicated processor. Maybe there are other things, too, right? So you see NVIDIA—our perspective is no longer just the GPU; our view is the entire AI infrastructure. What diverse infrastructure do these amazing companies need to accomplish all their workloads? Look at transformers. The transformer architecture is undergoing massive changes. How could they try so many experiments to decide which transformer version? Which attention algorithm? How do you dissect it? CUDA enables you to do all of that because it's highly programmable. So the way to think about our approach is, our business now is—you look at the present, and when all these ASIC companies or ASIC projects started 3, 4, 5 years ago, the industry was very cute and simple—it involved one GPU. But now, it's become massive and complex. In two more years, this will be enormous. The scale will be immense. So I think the struggle for a new player to enter a very large market is indeed tough.

Host: Is there an optimal balance within their computer clusters? Investors, being binary creatures, only want a black-and-white 'yes' or 'no' answer. But even if you get ASICs working, isn't there an optimal balance? You think I'm buying an Nvidia platform, and CPX will be pre-populated for video generation, maybe decoding—a platform for video. So there will be many different chips or components added to the Nvidia ecosystem, the accelerated computing cluster. As new workloads emerge, they're being born. And people trying to roll out new chips today don't really anticipate what will happen a year from now; they just want to get the chips working. In other words, Google is a big customer of GPUs.

Jensen Huang: Google is a very special case. We must show respect to those truly deserving of it. TPU is on TPU7. Yes, that's also a challenge for them, so the work they're doing is incredibly arduous. Remember, there are three categories of chips. There's the architectural class of chips—X86 CPUs, ARM CPUs, NVIDIA GPUs—which fall into the architectural category. And on top of that, there's an ecosystem with rich IP and a complex ecosystem, very sophisticated technology. It's built by the owner, just like us. Then there are ASICs. I used to work at LSI Logic, the company that originally invented the concept of ASICs, and as you know, LSI Logic no longer exists. The reason is that ASICs are excellent, but when the market size isn't very large, it's easy for a contractor to handle all the packaging and manufacturing on your behalf, and they'll charge you a 50 to 60-point profit margin. But when the ASIC market grows, a new way of doing things emerges, called CoT. Apple's ace, that phone chip, is so massive that they would never pay someone else a 50%, 60% gross margin to be an ASIC. They build their own tools for their customers. When it becomes a big business, where does TPU go? The customer's own tools. There's no doubt about it. But ASICs still have their place. The scale of video transcoders will never be too large. The scale of SmartNICs will never be too large. So when there are ten, twelve, fifteen ASIC projects happening simultaneously at an ASIC company, I'm not surprised by that situation. Because there might be five SmartNICs and four transcoders there. Are they all AI chips? Of course not. And if someone wants to build an embedded vector processor for a specific recommendation system, well, that's certainly an ASIC, and you can do that, but would you use it as the foundational computing engine for ever-changing AI? You have low-latency workloads, high-throughput workloads, token generation for chatting, thinking workloads, AI video generation workloads. That's what NVIDIA is all about.

Host: To put it more simply, it's like chess and checkers. Right? In fact, those designing ASICs today, whether Trainium or some of these other vendors, are designing a chip that's a component of a larger machine. You've built a very sophisticated system, platform, factory, whatever you want to call it, and now you're opening it up a bit, right? So you mentioned CPX GPUs, meaning that in my view, you're somehow splitting workloads onto the hardware segments best suited for that particular need.

Jensen Huang: Look at what we've done. We released something called Dynamo, Disaggregated Orch AI Workload Orchestration. We open-sourced it because the AI factories of the future are disaggregated.

Host: And then you released NV Fusion. You even told your competitors, including Intel, whom you just invested in. You know, you're engaging in this factory we're building in that way. Because no one else is foolish enough to try to build the entire factory, but if your product is good enough, you can plug into that system, attractive enough for end-users to say, 'Hey, we want to use this instead of ARM GPUs,' or 'We want to use this instead of your inference accelerator,' and so on. Is that correct?

Jensen Huang: Yes. We're delighted to serve you. NV Link Fusion, what a great idea. We're very excited to work with Intel. It requires the participation of the Intel ecosystem. Most enterprises in the world still rely on Intel to run, so it requires the Intel ecosystem, combined with NVIDIA AI, ecosystem, accelerated computing units, and then we fuse them together. That's how we did it with ARM. There are several other projects we'll do it with as well. This opens up opportunities for both of us. It's a win-win for both sides. I'll become their big customer, and they'll give us access to a much larger market opportunity.

Host: What's staggering is when you say our competitors are building ASICs. All chips are cheaper today, but they could literally price them at zero, and our goal is they could price them at zero, and you would still buy an NVIDIA system because the total cost of ownership, power, data center, land, etc., intelligence output is still more valuable than just buying the chip. Even if that chip is given to you for free, it's still just a chip.

Host: Because the land, power, and enclosure have already cost $15 billion.

Host: So we've tried to do the math on this, but walk us through your math because I think for people who don't spend their time here, they just can't wrap their heads around it. How is it possible that you price a competitor's chip at zero, and given the cost of your chips, it's still a better bet?

Jensen Huang: There are two ways to think about this. One way is to just think about it from a revenue perspective. So everyone is power-constrained, and let's say, you managed to secure another two gigawatts of power. Well, you want the two gigawatts of power you have to translate into revenue. Your performance or tokens per watt is twice as good as somebody else's because of deep and extreme co-design, and the performance is much higher. Then my customers can get twice the revenue out of their data center. Who doesn't want twice the revenue? And if somebody gives them a 15% discount, well, you know, our gross margins are around 75 basis points, and somebody else's gross margins, around 50 to 65 basis points, not enough to make up for the 30x difference between Blackwall and Hopper. Let's assume Hopper is an amazing chip, and let's assume somebody else's ASIC is Hopper. So you're giving up 30x revenue in that one gigawatt, giving up way too much. So even if they give it to you for free, you only have two gigawatts to play with, and your opportunity cost is astronomical, you'll always choose the best performance per watt.

Host: I heard this phrase from the CFO of a hyperscaler. He said given the performance uplift from your chips is coming through, again right to that point, per token per gigajoule, and power is the constraining factor, they have to upgrade to a new cycle. So as you look out to Rubin, Rubin Ultra, and Feynman, does that trajectory continue?

Jensen Huang: We're making roughly six or seven chips a year now, right? And each one is part of that system. And that system, the software, is ubiquitous, and it needs to be integrated and optimized across those six dimensions to achieve the 30x performance of Blackwell. Now, imagine I'm doing this every year. So if you build an ASIC in that mix of ASICs, in that stew of chips, where we're optimizing in between, you know, it's a hard problem to solve.

Host: This really brings me back to the competitive moat question we started with. We've been watching this with investors for a while. We're investors across the ecosystem, from Google to Broadcom. But when I really examine this from first principles and ask, are you widening or narrowing your competitive moat? You're going to an annual cadence, you're co-developing with a supply chain that's far larger than anybody expected. It requires scale, whether on the balance sheet or in development, right? The moves you're making, whether through acquisition or internal organic growth like NV Fusion, all of it together convinces me that your competitive mode is strengthening, at least in terms of building the factory or the system. It's at least surprising. But what I find interesting is that your multiples are far lower than most everyone else's. I think it has something to do with the law of large numbers. A $4.5 trillion market cap company can't get much bigger. But I asked you this question a year and a half ago. When you sit here today, if the market is saying AI workloads will be 10x or 5x, we know what cap ex is doing, etc., is there any imaginable scenario in your mind where the top of your revenue in five years isn't two or three times larger than 2025? Or how real is the probability that it actually doesn't grow?

Jensen Huang: Here's how I would answer that. As I described, this is our opportunity, and the opportunity is far greater than the consensus.

Host: I think NVIDIA could very well become the first $10 trillion market cap company. Just a decade ago, people said a trillion-dollar company was impossible. Now we have ten, right? And the world is a much bigger place today. This goes back to the point about exponential GDP growth.

Jensen Huang: The world is a bigger place. And people misunderstand what we do. They remember we're a chip company, and we are—we make chips, and we design some of the most amazing chips in the world. But NVIDIA is really an AI infrastructure company. We're your AI infrastructure partner, and our partnership with OpenAI is a perfect example of that. We're their AI infrastructure partner. We partner with people in many different ways. We don't require anybody to buy everything from us; we don't require them to buy full racks. They can buy one chip. They can buy one component. They might buy our networking. We have customers who only buy our CPUs, straight-up buy our GPUs, somebody else's CPUs, and somebody else's networking. We can basically accept you buying in any way you like. You know, my only request is that you buy something from us, you know?

Host: You've said it's not just about better models. We also have to build. We have to have world-class builders. You've said perhaps our most world-class builder domestically is Elon Musk. We also talked about Colossus 1 and what he's doing there, where at the time it was H100s, H200s in a coherent cluster, and now he's building Colossus 2, which you know could be 500,000 GB, equivalent to millions of H100s in terms of compute, all working together in a cluster.

Jensen Huang: I wouldn't be surprised if he reaches gigawatt scale before anybody else.

Host: Yeah, so say a little bit more about that. The advantage of being a builder, it's not just building the software and models but understanding everything needed to make it happen, building those clusters.

Jensen Huang: These AI supercomputers are incredibly complex things. The technology is complex. The procurement is complex because of financing issues. Securing the land, power, and enclosure, and powering it is complex. I mean, this is without question the most complex systems problem humanity has ever taken on. And Elon has a great advantage in that all these systems are collaborating in his head, and those interdependencies, all in one person's head, including the financing.

Host: So he's a large GPT. He's a large supercomputer himself.

Jensen Huang: He's the ultimate GPU. So he has a great advantage there. And he has a great sense of urgency. He truly has a burning desire to build it, and when that desire is met with skill, incredible things happen. Yeah, it's quite unique.