Huawei Opens CANN Source Code: A Bid to Bridge NVIDIA's CUDA Moat?

08/24 2025

Are NVIDIA's formidable barriers slowly crumbling?

"Clear the path when mountains stand in the way, build bridges over rivers." Chinese tech giants have honed this resilience in recent years.

On August 5, Huawei's Rotating Chairman Xu Zhijun announced at the Ascend Summit that Huawei would fully open source CANN, its Ascend hardware enablement layer, along with the Mind series application enablement suite and toolchain. The move is meant to support users' own in-depth exploration and custom development, speed up developer innovation, and make Ascend easier and more convenient to use.

Xu Zhijun emphasized that the core of Huawei's AI strategy revolves around computing power, and it remains committed to monetizing Ascend hardware.

Among the announcements, the most noteworthy was the open sourcing of CANN. CANN stands for "Compute Architecture for Neural Networks." It sits between upper-level AI frameworks (such as PyTorch, TensorFlow, and MindSpore) and the underlying Ascend chips, so developers can harness the hardware's computing power without delving into chip details.
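The layering described above can be pictured with a small sketch. Everything in it is hypothetical: the registry, the backend names "cpu" and "npu", and the function names are illustrative assumptions and are not taken from CANN or any real framework. It only shows the general idea of a middle layer that routes a framework-level call to a device-specific kernel.

```python
from typing import Callable, Dict, List, Tuple

Vector = List[float]

# Hypothetical registry: (backend name, operator name) -> kernel function.
# None of these names come from CANN; they only illustrate the layering.
KERNELS: Dict[Tuple[str, str], Callable[[Vector, Vector], Vector]] = {}


def register(backend: str, op: str):
    """Decorator that files a kernel under a (backend, op) key."""
    def wrap(fn):
        KERNELS[(backend, op)] = fn
        return fn
    return wrap


@register("cpu", "add")
def cpu_add(a: Vector, b: Vector) -> Vector:
    # Reference implementation: plain element-wise loop.
    return [x + y for x, y in zip(a, b)]


@register("npu", "add")
def npu_add(a: Vector, b: Vector) -> Vector:
    # Stand-in for a vendor-tuned kernel; here it simply computes the same sum.
    return [x + y for x, y in zip(a, b)]


def framework_add(a: Vector, b: Vector, device: str = "cpu") -> Vector:
    """Framework-level call: the caller names a device and the middle
    layer looks up the matching kernel."""
    return KERNELS[(device, "add")](a, b)


print(framework_add([1.0, 2.0], [3.0, 4.0], device="npu"))  # [4.0, 6.0]
```

The caller's code stays the same whichever backend is selected, which is the property a framework-to-chip bridge is meant to provide.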

Similar platforms include NVIDIA's CUDA, AMD's ROCm, Moore Threads' MUSA, and Cambricon's Neuware. However, CUDA remains the most renowned and influential, forming NVIDIA's core competitive advantage alongside its hardware products.

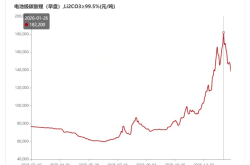

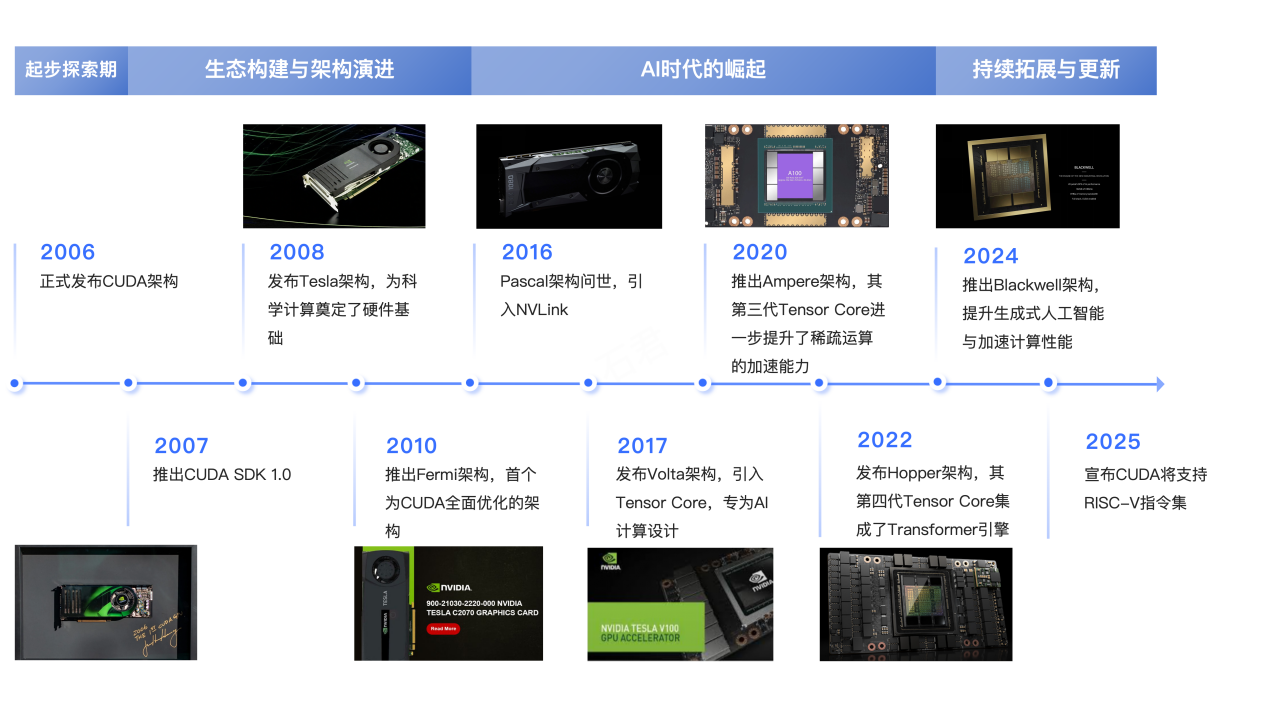

By open sourcing CANN, Huawei aims to breach the 18-year-old CUDA moat built by NVIDIA. So, how did NVIDIA's CUDA evolve, and what opportunities and challenges will Huawei face as a challenger?

1

The Transformative Impact of CUDA

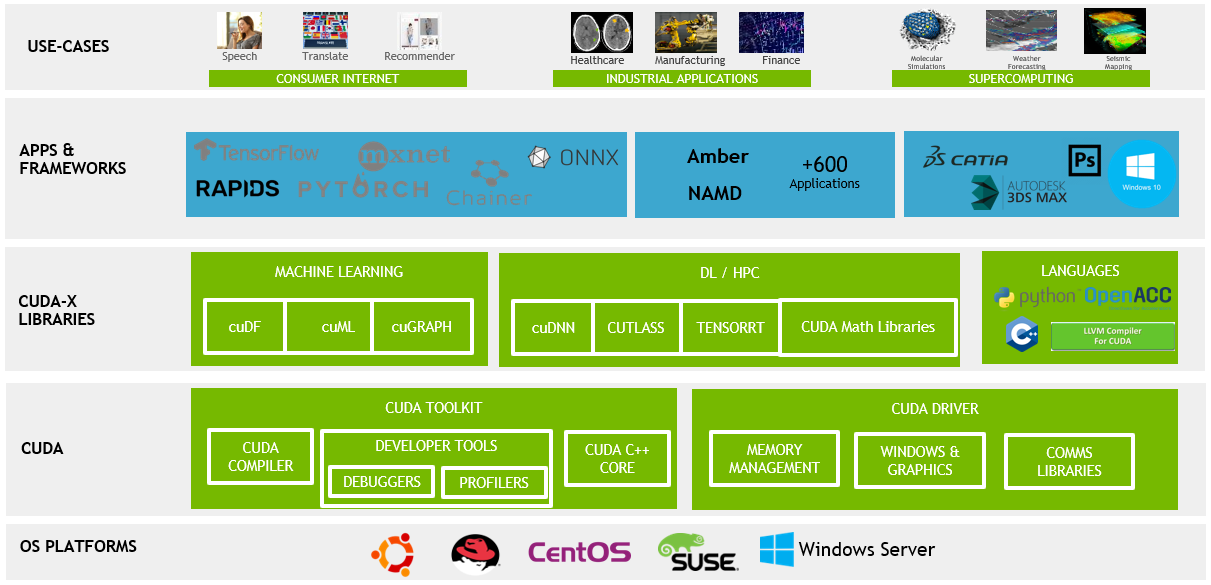

NVIDIA's CUDA (Compute Unified Device Architecture) allows developers to use the parallel computing capabilities of NVIDIA GPUs for a broad range of tasks beyond graphics rendering, an approach known as GPGPU (general-purpose computing on graphics processing units).

Before CUDA, nearly all computing tasks ran on CPUs alone, even though scientists and engineers had long recognized GPUs' vast computational potential. CPUs excel at handling complex tasks, while GPUs shine in simple, repetitive ones.

In simple terms, a CPU is like a master chef who is skilled in various cooking techniques but can only focus on one dish at a time (serial processing with fewer but more powerful cores). A GPU, on the other hand, is akin to a team of well-trained kitchen assistants, each handling a simple task but collectively completing numerous tasks simultaneously (parallel processing with thousands of cores).

Now, imagine needing this team of assistants to cook a new dish, braised prawns. Direct instructions won't work because they only understand basic kitchen jargon (graphics instructions). Here, a "translator" is needed to break down the recipe into simpler steps the assistants can understand and execute. This "translator" is CUDA!

(Image source: NVIDIA official blog)

Before CUDA, using GPUs for general-purpose computing meant going through graphics APIs (like OpenGL) and disguising computing problems as graphics rendering tasks, which was cumbersome and inefficient. CUDA changed this by letting developers write GPU-native code (kernels) in a C-like language (with later support for C++, Fortran, Python, and others). NVIDIA also designed its GPU hardware architectures to support this computing model.
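To make the kernel idea concrete, here is a minimal sketch in plain Python rather than CUDA itself. It assumes a simplified model in which a kernel is a function run once per logical thread, and the `launch` helper (a hypothetical name) walks the thread grid sequentially where a GPU would run the threads in parallel.

```python
def saxpy_kernel(thread_id, a, x, y, out):
    """Body executed once per logical thread: out[i] = a * x[i] + y[i]."""
    if thread_id < len(x):  # bounds guard: the grid may be larger than the data
        out[thread_id] = a * x[thread_id] + y[thread_id]


def launch(kernel, num_blocks, threads_per_block, *args):
    """Sequential stand-in for a grid launch. On a GPU these iterations
    would be spread across many cores and run concurrently."""
    for block in range(num_blocks):
        for thread in range(threads_per_block):
            global_id = block * threads_per_block + thread
            kernel(global_id, *args)


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(x)

# 2 blocks of 4 threads give 8 logical threads for 5 elements;
# the extra 3 threads fall through the bounds guard and do nothing.
launch(saxpy_kernel, 2, 4, 2.0, x, y, out)
print(out)  # [12.0, 24.0, 36.0, 48.0, 60.0]
```

Each thread touches exactly one element and no thread depends on another, which is why this kind of work maps well onto thousands of simple cores.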

In summary, CUDA's core value lies in transforming GPUs into powerful processors for large-scale parallel data computing through hardware and software co-design, transcending their role as mere graphics acceleration cards.

2

NVIDIA's CUDA Moat

NVIDIA officially unveiled the CUDA architecture in 2006 and released CUDA SDK 1.0 in 2007. The GeForce 8800 GTX (G80 chip) was the first GPU to support CUDA, which, according to NVIDIA's then Chief Scientist David Kirk, marked the dawn of a new era in heterogeneous computing.

Over the years, NVIDIA launched the Tesla product line (computing cards) and continuously updated its architectures, including Fermi, Maxwell, and Volta, enhancing double-precision performance, introducing unified memory, and more. Simultaneously, it vigorously promoted CUDA in universities and research institutions.

In scientific research, particularly deep learning, GPUs' parallel processing capabilities are well suited to matrix operations. In 2017, NVIDIA introduced Tensor Cores in the Volta architecture, accelerating mixed-precision matrix multiply-accumulate operations in AI training and inference. Subsequent architectures such as Ampere, Hopper, and Blackwell further strengthened Tensor Cores and AI performance. The CUDA ecosystem grew substantially, encompassing numerous acceleration libraries and tools such as cuDNN, TensorRT, and CUDA-X, and forming a deep moat of integrated software and hardware.
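As a rough illustration of what "mixed-precision matrix multiply-accumulate" means, the sketch below emulates the arithmetic in plain Python: inputs are rounded to half precision (FP16) and the running sum is kept in single precision (FP32). It is a simplified model under that assumption, not a description of how Tensor Cores are built; the helper names are made up for this example.

```python
import struct


def to_fp16(v):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", v))[0]


def to_fp32(v):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", v))[0]


def mixed_precision_mma(a, b, c):
    """Compute D = A x B + C with FP16 inputs and an FP32 accumulator."""
    rows, inner, cols = len(a), len(b), len(b[0])
    d = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = to_fp32(c[i][j])
            for p in range(inner):
                # Low-precision operands, higher-precision running sum.
                acc = to_fp32(acc + to_fp16(a[i][p]) * to_fp16(b[p][j]))
            d[i][j] = acc
    return d


a = [[1.0, 2.0], [3.0, 4.0]]
b = [[0.5, 0.0], [0.0, 0.5]]
c = [[0.0, 0.0], [0.0, 0.0]]
print(mixed_precision_mma(a, b, c))  # [[0.5, 1.0], [1.5, 2.0]]
print(to_fp16(0.1) == 0.1)           # False: 0.1 is not exactly representable in FP16
```

Storing operands in FP16 halves their memory footprint compared with FP32, while the wider accumulator limits the rounding error that builds up over a long sum.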

(Source: compiled from publicly available information)

CUDA's success stems not just from the technology but also from its vast ecosystem. Millions of developers, extensive academic research, and commercial applications rely on CUDA. This network effect and migration cost make it challenging for competitors to unseat NVIDIA in the short term.

Industry insiders note, "CUDA serves as the bridge between PyTorch and NVIDIA GPUs, so leaving its ecosystem carries significant migration costs. Developers switching away from CUDA often need to rewrite substantial code and use less mature alternative libraries, and they lose the support of the extensive CUDA troubleshooting community."

A recent Nomura Securities report shows that NVIDIA GPUs currently account for over 80% of the AI server market. However, amid Sino-US tensions, the U.S. has imposed strict AI export restrictions on China, causing NVIDIA's market share in China to decline.

"U.S. AI export controls against China have failed." According to Reuters, AFP, and other media on May 21, NVIDIA CEO Jensen Huang stated at Computex Taipei that NVIDIA's market share in China had dropped from 95% under former U.S. President Biden to 50% currently. Regarding U.S. AI restrictions, Huang reiterated that the strategy was "completely wrong" and "if the goal is to keep the U.S. ahead, current regulations will lead us to lose our leading position."

Amid U.S. restrictions, Chinese chip makers found room to grow, with Huawei Ascend seizing this opportunity.

3

Huawei's Opportunities and Challenges

Ascend chips are NPUs (neural processing units) independently developed by Huawei (HiSilicon) for high-performance AI computing. The Ascend series primarily includes Ascend 310 and Ascend 910.

At the Huawei Connect 2018 conference, Rotating Chairman Xu Zhijun laid out the company's AI strategy and officially announced the Ascend 910 and Ascend 310 chips. The chip physically displayed at the event was Ascend 310. A year later, in August 2019, Huawei launched Ascend 910.

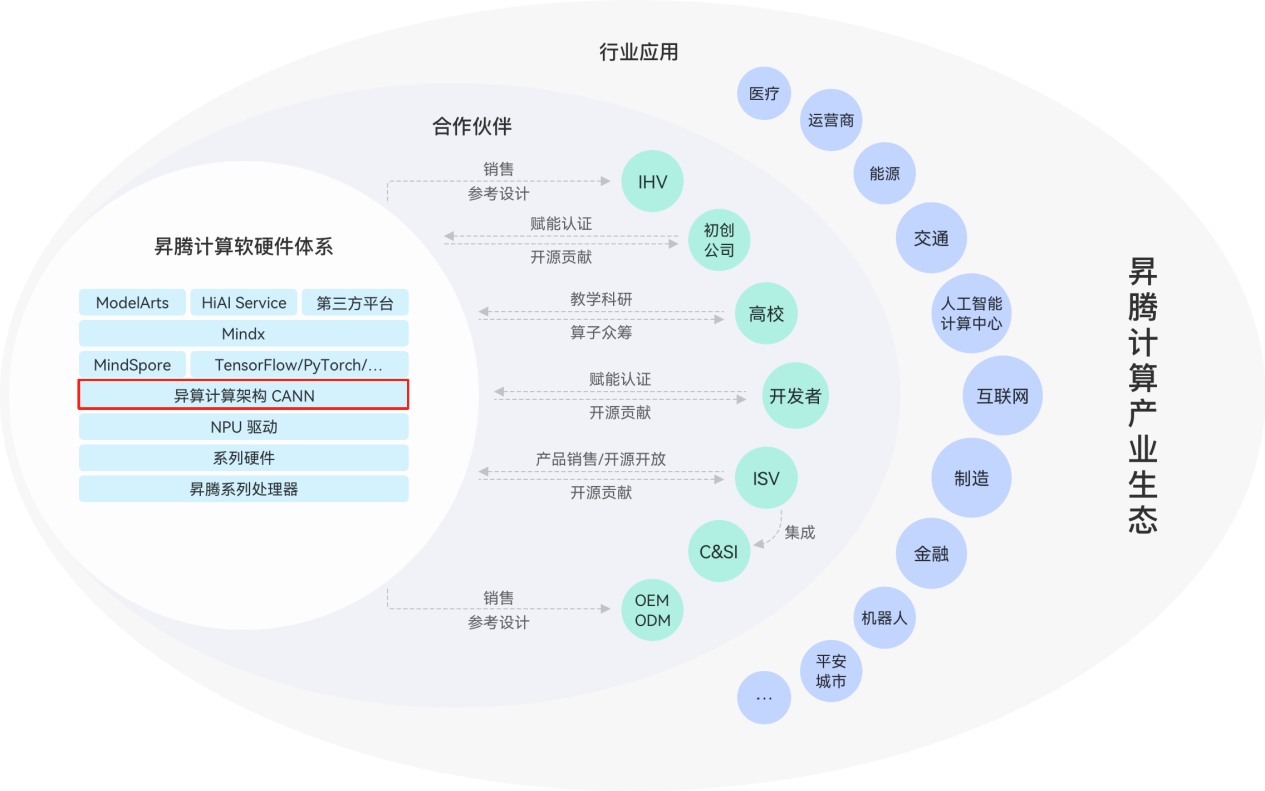

Beyond hardware, Huawei aims to build the Ascend computing industry ecosystem. This covers academic, technical, public welfare, and commercial activities centered on Ascend technology and products, along with the knowledge, products, and partnerships they generate. Partners include OEMs, ODMs, IHVs, C&SIs, ISVs, cloud service providers (XaaS), and others.

CANN is one of the cores of the Ascend computing software and hardware system.

(Image source: Ascend Computing Industry Development White Paper)

However, compared to CUDA, CANN lags in developer scale, architecture maturity, and ecosystem completeness. This hurts Ascend chips' ease of use and keeps users inclined toward CUDA.

To expand its ecosystem, CANN has gradually become compatible with more AI frameworks, now supporting PyTorch, MindSpore, TensorFlow, PaddlePaddle, ONNX, Jittor, OpenCV, and OpenMMLab.

To narrow the gap and ease customer transition to the new ecosystem, Huawei followed NVIDIA's initial CUDA promotion strategy by dispatching engineering teams to Baidu, iFLYTEK, and Tencent to help them reproduce and optimize existing CUDA-based training code in the CANN environment, as reported by foreign media in September last year.

Simultaneously, Huawei actively collaborates with universities. For instance, Professor Xu Tao and his team at Northwestern Polytechnical University integrated Ascend CANN courses into the teaching system, enabling university developers to directly address Ascend processor optimization needs and systematically master skills from algorithm design to hardware adaptation.

To further accelerate ecosystem development, Huawei stated that leading AI enterprises, partners, universities, and research institutions jointly discussed building an open-source Ascend ecosystem, launching the "CANN Open Source and Open Ecosystem Co-construction Initiative" to gather industry forces, explore AI boundaries, and co-build the Ascend ecosystem.

In a media interview, Huawei experts said, "We aim for layered and deep openness for CANN, from the operator development layer to the model development and inference layers, with further compatibility with third-party open-source frameworks. This allows models and applications running on third-party frameworks and inference engines to migrate to Ascend without modification. For the application development layer, we will provide more SDKs for convenient application deployment and efficient model training and inference."

In contrast, CUDA is closed-source. Its compiler NVCC, math libraries, and deep learning acceleration libraries are only available in binary form. Developers cannot modify the underlying logic and must rely on NVIDIA's optimizations. Additionally, CUDA only supports NVIDIA GPUs, coordinating hardware and software through the hardware instruction set and driver layers. This deep hardware integration, coupled with NVIDIA's EULA prohibiting other vendors from directly accessing the CUDA interface, forces competitors to develop independent ecosystems, which leads to gaps in performance and usability.

Open sourcing CANN is Huawei's latest move to expand its ecosystem swiftly. However, challenging NVIDIA's monopoly built on technological first-mover advantage, closed-loop ecosystems, and business control will require significant efforts from Huawei.

4

Final Thoughts

Amid U.S. technology blockades, Ascend chips provide crucial support for China's AI computing infrastructure. However, last May, Chinese Academy of Engineering academician Sun Ninghui stated that Huawei's Chinese-style closed monopoly approach can hardly compete with the Western-style closed monopoly, sparking considerable online controversy.

According to Academician Sun Ninghui, the U.S. dominates global high-end CPU, GPU, and operating system design. For China to break through, it needs an open-source model that breaks Western ecosystem monopolies, lowers the barrier for enterprises to acquire technology, enables low-cost chip development, and produces a vast ocean of smart chips to meet ubiquitous demand for intelligence. By opening up to form a unified technical system, Chinese enterprises and global forces can jointly build a unified smart computing software stack based on international standards, fostering pre-competitive sharing mechanisms among enterprises, shared high-quality databases, and open-source general-purpose large models. In short, this requires collective development across the entire industry chain rather than a closed system run by one or two enterprises.

Huawei's announcement to "fully open source Ascend hardware-enabling CANN and the Mind series application enabling suite and tool chain" signifies a significant strategic shift. Open sourcing CANN is a crucial step in ecosystem building, but post-open-source community building and operational capabilities will be even greater tests. This requires Huawei to continuously invest and genuinely engage with the global developer community in an open, transparent, and collaborative manner.

As Xu Zhijun said at the Ascend Summit, "Open sourcing is not the end, but the starting point for ecological co-construction."