How Do Autonomous Vehicles Interpret Textual Cues?

![]() 02/10 2026

02/10 2026

![]() 472

472

In contemporary transportation systems, textual cues act as a versatile and essential supplementary tool, conveying a wealth of dynamic traffic regulations. From routine instructions such as "Left-turning vehicles enter the holding area" to temporary alerts like "Construction ahead, slow down and detour," these Chinese character messages provide intuitive and effective guidance for human drivers. However, for autonomous vehicles, interpreting these cues requires a highly intricate chain of perception, comprehension, and decision-making.

How Do Autonomous Vehicles 'See' Text Clearly?

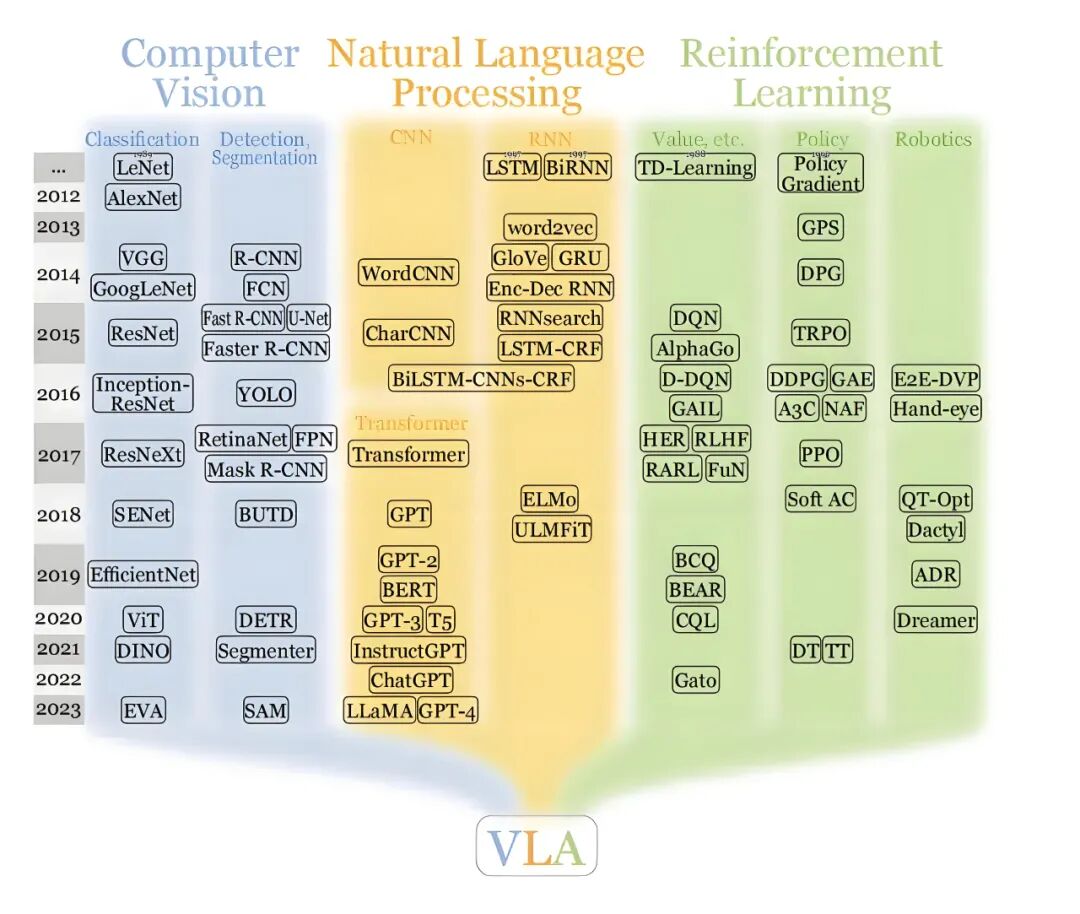

The initial step for autonomous vehicles in perceiving Chinese characters is leveraging scene text recognition technology, which is fundamentally different from document scanning in traditional office settings. In traffic scenarios, text is displayed on carriers with diverse materials, shapes, and reflective properties, such as metal road signs, ground paint, or electronic displays. Raw images captured by onboard cameras are often marred by substantial background noise, including tree shadows, motion blur from vehicles, and localized overexposure due to uneven lighting. Consequently, the autonomous driving system must preprocess these images to denoise and enhance them before entering the text detection phase. The objective of text detection is to accurately pinpoint the regions containing text within complex backgrounds, relying on deep convolutional neural networks that extract pixel features layer by layer to identify candidate boxes with text layout characteristics.

Recognizing Chinese character cues demands significant technical support for the detection module. The stroke structure of Chinese characters is considerably more intricate than that of English letters, and in road scenarios, text can suffer severe perspective distortions due to the camera's pitch angle or vehicle tilt. To mitigate this, spatial transformer networks can be integrated into the text recognition architecture. These networks can geometrically correct detected slanted text regions, akin to humans adjusting their viewing angle, restoring them to flat feature matrices.

Following regional localization, the autonomous driving system transmits the cropped text feature blocks to the recognition module. Convolutional recurrent neural networks are commonly employed, combining convolutional layers for spatial information processing and recurrent layers for temporal information processing. The convolutional layers extract detailed features of each Chinese character segment, while bidirectional long short-term memory networks (LSTMs) capture the contextual relationships between these features. This enables the system to recognize text like "holding area" not merely based on the visual shapes of individual characters but also by referencing the combinatorial logic of surrounding words.

Given the vast Chinese character set, encompassing thousands of commonly used characters, the final layer of the recognition module must attain extremely high classification accuracy. To enhance training efficiency and prediction coherence, the transcription layer can employ Connectionist Temporal Classification (CTC) technology. This algorithm automatically handles character spacing, filters out duplicate characters and blank noise in the predicted sequence, and ultimately outputs structured Chinese character strings. For recognizing long sentences like "Left-turning vehicles enter the holding area," this sequence modeling capability ensures the system outputs complete instructions rather than fragmented character pieces. This pixel-to-character conversion forms the foundational physical perception layer for autonomous driving systems to interpret textual cues.

After completing text recognition, the autonomous driving system does not immediately execute actions but instead converts these characters into machine-understandable logical instructions. For "Left-turning vehicles enter the holding area," the text itself serves merely as a trigger signal. The system must also validate it using the base map information from high-definition maps, which record the static structure of intersections, including the precise geographical coordinates of the holding area. The recognized text information acts as a dynamic enhancement layer, informing the system of the current effective status of this static area. This multimodal fusion of visual perception and map data effectively mitigates the risk of false detections that could arise from relying solely on recognition technology.

How Do Autonomous Vehicles 'Understand' Text?

Merely recognizing characters is insufficient for navigating complex urban traffic. The autonomous driving system must comprehend the traffic regulation implications of combining words like "left-turn," "enter," and "holding area." Traditional rule-based systems achieve this capability primarily through engineers manually writing extensive logical judgment statements, such as "If detected text equals a certain string and traffic light equals a certain state, then execute a certain action." However, this method struggles to make accurate decisions when faced with similarly meaning but differently worded cues like "Left turns may now enter the holding area" or "Prohibited from entering before the left-turn green light." To enhance system generalization, visual language models (VLMs) are being incorporated into the perception architecture of autonomous driving.

The core value of visual language models lies in their ability to map image information and textual semantics into the same high-dimensional feature space for comparison and association. During training, these models learn the correspondence between "textual descriptions" and "physical world objects" by studying vast amounts of road scene images and their corresponding textual descriptions. For example, when the model sees ground-painted text in an image and matches it to the semantics of "enter the holding area," it automatically aligns the language symbol "holding area" with a specific empty lane area ahead of the intersection through a cross-attention mechanism. This alignment is not merely a coincidence of coordinates but also a logical association, enabling autonomous vehicles to search for corresponding physical spaces based on the content of the cues, much like humans do.

In the latest architectures released by automakers like Li Auto, visual language models are assigned the function of "System 2," responsible for logical reasoning and handling long-tail complex scenarios. Unlike "System 1," which handles quick reactions and daily following and steering tasks, the visual language model receives image streams from sensors, undergoes deep logical thinking, and outputs semantic descriptions or decision-making suggestions about the current traffic environment. When a vehicle approaches an intersection with Chinese character cues, the visual language model analyzes the context of the cues: Is it a permanent road sign or a temporary construction notice? Does it apply to all vehicles or just those in specific lanes? This commonsense reasoning ability enables autonomous vehicles to handle extreme cases not encountered in the training data.

To ensure real-time performance during high-speed driving, these models undergo rigorous quantization and pruning before deployment to adapt to the computational limitations of onboard computing platforms. Simultaneously, to enhance robustness, the system utilizes multi-frame image fusion technology. Within a few tens of meters of approaching an intersection, the camera continuously captures dozens of frames containing Chinese character cues. The system compares recognition results from different angles and lighting conditions, using a probabilistic statistical model to calculate the confidence level of the final conclusion. Only when the confidence level exceeds a safety threshold will the semantic understanding results be converted into control inputs for the decision-making layer. This rigorous processing flow ensures that "recognizing Chinese characters" truly serves driving safety without becoming a distraction.

Decision-Making Closed Loop in Dynamic Environments

Taking the specific case of "Left-turning vehicles enter the holding area," when such textual cues appear in the traffic environment, the performance of the autonomous driving system is actually a typical perception-decision-control closed loop. The purpose of the holding area is to improve intersection throughput efficiency, typically requiring vehicles to advance into a preset area in the center of the intersection when the straight-ahead traffic light turns green and the left-turn light is still red. The difficulty of this action lies in its breach of the basic "stop on red" rule, granting higher priority to specific textual cues. When handling this scenario, autonomous vehicles need to synchronize information from three dimensions in real-time: the recognized Chinese character instructions, the current traffic light phase, and the vehicle's precise position within the lane.

After the vehicle confirms the existence of a "left-turn holding area" through the visual system, the decision-making module enters a specific state machine logic. The vehicle closely monitors the traffic light changes. If the straight-ahead traffic light turns green, the recognized Chinese character cue is activated, transforming into a path planning instruction to "allow low-speed advancement to the end of the holding area." During this process, the vehicle utilizes fused perception from radar and cameras to ensure the holding area is not fully occupied by preceding vehicles and continuously detects the position of the ground stop line. This decision-making process is not merely an application of text recognition but also a precise replication of dynamic traffic rules. If the system only possesses the ability to recognize text without understanding traffic flow logic, it may cause the vehicle to stall in the holding area, affecting overall intersection throughput efficiency.

On complex urban roads, Chinese character cues often accompany significant environmental uncertainty. Some intersections may temporarily cancel the holding area due to construction and block it with yellow lines or isolation barriers. In such cases, systems with advanced semantic understanding capabilities demonstrate stronger adaptability. They combine keyword recognition like "construction" and "prohibited entry" from visual language models with physical perception of traffic cones and water-filled barriers to override the original settings in high-definition maps and make judgments that best fit the current reality. This logic, where real-time perception results take precedence over static map data, is a crucial indicator of intelligent driving technology's progression towards all-scenario and all-weather capabilities.

With the evolution of multi-sensor fusion technology, the anti-interference capability of autonomous vehicles in recognizing Chinese characters has significantly improved. In nighttime rainy conditions, ground-painted Chinese characters may become difficult to discern due to road surface reflections. In such cases, the system can utilize the difference in echo intensity from LiDAR to assist in judgment. Since the reflectivity of painted materials differs from that of asphalt road surfaces to lasers, LiDAR can partially outline the ground text contours and complementarily verify them with visual results from cameras. This multi-physical-dimension perception enables autonomous vehicles to understand instructions like "Left-turning vehicles enter the holding area" not merely based on "seeing" but on a comprehensive understanding of the environment, achieving a robust decision-making closed loop.

Cognitive Evolution Under End-to-End Architecture

The processing of Chinese characters and various traffic information by autonomous driving is rapidly evolving towards "perception-planning-control integration." Traditional modular architectures, while logically clear, inevitably incur losses and errors during information transmission. If the text recognition module outputs a character error, it may completely invalidate subsequent rule judgments. With the emergence of end-to-end (E2E) autonomous driving models, which attempt to simulate human neural networks, raw image information is directly transformed into vehicle control instructions. In this architecture, Chinese characters are no longer dissected independent variables but part of the global environmental features directly involved in predicting the driving path.

Under the end-to-end architecture, visual language action models (VLAs) can be used for text recognition. These models not only "understand" Chinese characters and logically deduce their meanings but also directly output specific values for throttle, brake, and steering. When the system sees "Left-turning vehicles enter the holding area," it no longer needs to go through the cumbersome steps of "recognizing characters-consulting maps-judging light colors-generating plans." Instead, it can directly make human-like driving actions based on experiences learned from large-scale, high-quality driving data. Since deep learning networks can capture the subtle and reasonable reaction logics of human drivers when faced with complex textual cues, this evolution significantly enhances the system's ability to handle extreme scenarios.

Image Source: Internet

Since training large models consumes immense computational power and high-quality data, and the black-box nature of models also poses difficulties for safety verification, technical solutions have begun exploring the concept of "world models." World models can simulate billions of traffic scenarios containing complex Chinese character cues in the cloud, allowing autonomous driving algorithms to undergo sufficient reinforcement learning in a virtual world. By repeatedly testing vehicles' understanding and execution of complex cues like "time-limited passage," "bus-only lane," and "holding area" in simulated environments, the algorithm's robustness can be fully validated before mass production and deployment.

Final Remarks

In contemporary times, autonomous vehicles have already acquired the proficiency to precisely discern prompts in Chinese characters and implement the corresponding logic within standard settings. This proficiency is a result of the profound integration of computer vision, natural language processing, and multimodal fusion technologies. With the widespread adoption of visual language models and end-to-end architectures, vehicles' comprehension of road semantics will transcend mere rigid character matching. Instead, it will attain a cognitive level endowed with commonsense reasoning capabilities. When confronted with traffic instructions such as "Left-turning vehicles enter the holding area," autonomous vehicles, through algorithms that are in a state of continual evolution, can grasp not only the individual characters but also the underlying traffic order and civilized behavior hinted at between the lines.

-- END --