Is ByteDance's Blockbuster Another 'DeepSeek Moment' for Chinese AI?

02/13 2026

Disruption in Film and Television Content Production / AI-Generated Imagery

Manual Labor / Edited by Wage / Produced by Jiao Shu / Unicorn Observation

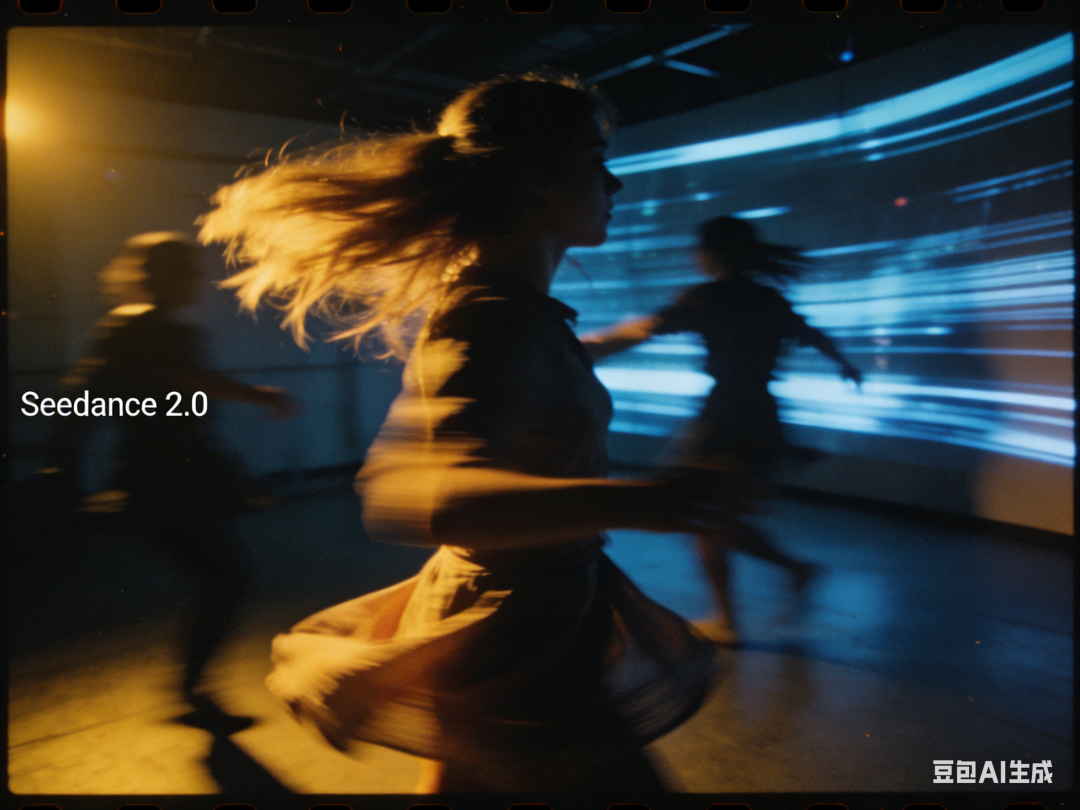

'I'm grateful that at least today's Seedance 2.0 comes from China.' This is how Feng Ji, the producer of 'Black Myth: Wukong,' evaluated the video model Seedance 2.0.

On February 7, ByteDance's Jimeng AI platform officially released the video generation model Seedance 2.0, featuring 60-second 4K multi-shot continuous sequences, millisecond-level audio-visual synchronization, and pixel-level creative control. Within 12 hours of its launch, over one million videos were generated, instantly igniting the AI creation community and capital markets. Associated stocks like ChineseAll and Zhangyue Technology hit their 20% daily limits, while film and television professionals exclaimed that 'content production paradigms have been disrupted.'

Around the same time last year, DeepSeek emerged with its DeepSeek-V3 model, achieving performance comparable to GPT-4o at a much lower cost. Its open-source strategy democratized access to advanced AI technology, rapidly making DeepSeek a core part of the global developer ecosystem and a 'phenomenal event' in China's AI development history.

Could Jimeng's Seedance 2.0 be another 'DeepSeek moment' for Chinese AI?

01 A Stunning Debut That Went Viral

In February, ByteDance did not hold a grand launch event but quietly initiated a small-scale internal test of Seedance 2.0 within products like Jimeng and Doubao. Nevertheless, it quickly triggered a 'technological tsunami' in the AI video field.

Dubbed 'Kill the game' by developers, this model broke industry norms with its all-around capabilities, earning praise as 'director-level AI.' Some even argued that it marked 'the end of AIGC video's infancy.' Its technological breakthroughs and disruptive experience shook the entire AI creation community and film and television industry.

The evaluation from Feng Ji, the producer of 'Black Myth: Wukong,' carried significant weight. He posted late at night, calling it 'undoubtedly the strongest video generation model on the planet today' and declaring that 'the infancy of AIGC has officially ended.'

In his view, Seedance 2.0's core breakthrough lies in its leap in AI's ability to understand and integrate multimodal information. Its leading position, versatility, and low barrier to entry are expected to drive explosive growth in content production and democratize video creation. While he acknowledged concerns behind its technological prowess, he remained highly impressed by its capabilities.

Pan Tianhong (Tim), founder of 'Film Storm,' made Seedance 2.0 go truly viral with his test video, using the word 'terrifying' six times in nine minutes to describe its impact.

The test demonstrated that AI significantly addressed three major industry pain points: camera movement, shot continuity, and audio-visual alignment. Using just Tim's photo, the AI generated his voice and accurately reconstructed the back layout of a building from a front-facing image. Tim emphasized that he had not authorized this, highlighting how the ability to 'reproduce personal features without authorization' showcased technological strength while sparking widespread controversy.

Further tests by tech bloggers confirmed its 'versatility': in basic tests, a scene of 'a girl and a cat meeting under a cherry blossom tree' was successfully generated in one attempt, with details adhering to physical laws. In advanced tests, a 15-second anime fight sequence maintained emotional coherence and smooth special effects, directly usable for short videos. Some bloggers even stitched together four 15-second clips to create a 60-second short drama in just 15 minutes.

Seedance 2.0's stunning performance also captivated global audiences, with demo videos trending frequently on overseas social platforms, where users praised its Hollywood-level effects. Since it was only available for internal testing in China, a surge in requests for tutorials and accounts emerged overseas, with reports even claiming that some earned over $8,000 in two days by selling Jimeng credits. From industry acclaim to real-world performance, from domestic virality to global adoration, Seedance 2.0 proved its leadership and showcased the explosive potential of China's AI video technology.

From strong recognition among domestic industry insiders to frenzied pursuit by overseas users, Seedance 2.0 proved its competitiveness and once again placed China's AI video technology at the center of the global stage.

02 Hidden Concerns Behind the Glory

The stunning debut of Seedance 2.0, like a boulder thrown into a calm lake, triggered a chain reaction in capital markets and the film and television industry. Comparisons with OpenAI's Sora became a focal point of industry debate, while ethical, compliance, and capability bottlenecks emerged as critical obstacles to its sustained development.

In capital markets, Seedance 2.0's popularity directly drove a surge in related sectors, reminiscent of the AI bull market triggered by DeepSeek last year. Netizens joked, 'Last year was the DS moment; this year is the SD moment.'

On February 10, the media sector led gains, with Dook Culture and Rongxin Culture hitting their 20% daily limits, while Enlight Media, ChineseAll, and other stocks rose simultaneously. A research report by Kaiyuan Securities hailed the model as the 'singularity' for AI-driven film and television, arguing that it would expand the content ecosystem's industrial chain and prompt a revaluation of the sector. This enthusiasm essentially reflected dual recognition of its technological strength and industry potential.

The film and television industry faced disruptive changes, with Seedance 2.0's efficiency and cost advantages reshaping industry fundamentals. Traditionally, a 5-second special effects shot required nearly a month of work by senior personnel at a cost exceeding 30,000 yuan, whereas Seedance 2.0 accomplished it in two minutes for less than 3 yuan, a gap exceeding ten thousandfold in both efficiency and cost.
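The 'ten thousandfold' figure can be checked with simple arithmetic. The short sketch below uses only the numbers quoted above. How to count 'nearly a month' is an assumption, so two readings are shown: 30 calendar days, and 22 working days of 8 hours each.

```python
# Figures quoted in the article for a 5-second special effects shot.
TRADITIONAL_COST_YUAN = 30_000   # "exceeding 30,000 yuan"
AI_COST_YUAN = 3                 # "less than 3 yuan"
AI_MINUTES = 2                   # "two minutes"

# "Nearly a month" is not defined in the article; both readings are assumptions.
CALENDAR_MONTH_MINUTES = 30 * 24 * 60   # 30 days, around the clock
WORKING_MONTH_MINUTES = 22 * 8 * 60     # 22 working days, 8 hours each

cost_ratio = TRADITIONAL_COST_YUAN / AI_COST_YUAN
calendar_time_ratio = CALENDAR_MONTH_MINUTES / AI_MINUTES
working_time_ratio = WORKING_MONTH_MINUTES / AI_MINUTES

print(f"cost gap:            {cost_ratio:,.0f}x")           # 10,000x
print(f"time gap (calendar): {calendar_time_ratio:,.0f}x")  # 21,600x
print(f"time gap (working):  {working_time_ratio:,.0f}x")   # 5,280x
```

On these numbers the cost gap sits at ten thousandfold. The time gap clears that mark only when the month is counted in calendar time; counted in working hours it is closer to five thousandfold.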

For a standard 90-minute film, other AI models succeeded in less than 20% of attempts, with actual costs soaring to 10,000 yuan, while Seedance 2.0 achieved over 90% success at a cost below 2,000 yuan.
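One way to see how success rate feeds into cost is a simple retry model. The sketch below is illustrative only: it assumes each generation attempt is independent and priced the same on every model, neither of which the article states. The function name `expected_attempts` is a hypothetical helper, not part of any product.

```python
def expected_attempts(success_rate: float) -> float:
    """Average number of tries needed to get one usable clip,
    assuming independent attempts (geometric distribution)."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return 1 / success_rate


# Success rates quoted in the article, taken at their stated bounds.
other_models = expected_attempts(0.20)   # "less than 20%"
seedance = expected_attempts(0.90)       # "over 90%"

attempt_ratio = other_models / seedance
quoted_cost_ratio = 10_000 / 2_000       # yuan figures quoted in the article

print(f"attempts per usable clip, other models: {other_models:.2f}")  # 5.00
print(f"attempts per usable clip, Seedance 2.0: {seedance:.2f}")      # 1.11
print(f"retry-driven cost ratio: {attempt_ratio:.2f}x")               # 4.50x
print(f"cost ratio quoted in the article: {quoted_cost_ratio:.2f}x")  # 5.00x
```

Under these assumptions, retries alone account for a cost gap of about 4.5 times, which is close to the fivefold difference between the quoted 10,000 yuan and 2,000 yuan figures.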

Short dramas and animated series benefited most significantly, eliminating the need for actors and locations and slashing core costs by over 90%. Meanwhile, intermediaries like animated series agents saw their value diluted, with survival space sharply compressed.

Compared to OpenAI's Sora, both models have strengths and weaknesses: Seedance 2.0 excels in multimodal reference capabilities, high generation success rates, consistent 15-second single-generation outputs, and rapid commercialization. However, its shortcomings include inadequate coherence in long-video stitching, inferior complex scene reconstruction compared to Sora, and limited global influence due to domestic-only availability. This comparison suggests that the AI video race remains unsettled, and Seedance 2.0 requires continuous technological iteration.

Ethical risks arise from its powerful generation capabilities, raising concerns about identity impersonation and information fraud.

Tim's test showed that a single photo was enough to reproduce an individual's voice and appearance. If misused, this could easily lead to a flood of deepfake content, infringing on portrait rights and spreading false information.

Feng Ji also warned that realistic fake videos would become accessible to all, challenging existing review systems. In response, ByteDance urgently suspended real-person reference capabilities, requiring users to complete real-person verification before creating digital avatars, and developed blockchain tracing and digital watermarking technologies to prevent abuse.

Additionally, copyright and data compliance pose another core challenge.

Tim speculated that Seedance 2.0 might have used unauthorized 'Film Storm' videos for training, sparking industry discussions about training data compliance. Currently, no clear guidelines distinguish 'fair use' from 'copyright infringement' when AI models train on publicly available data, nor is the copyright ownership of generated content clear. Balancing technological innovation with copyright protection remains a common industry dilemma. While platforms restrict video generation involving celebrities and famous IPs, compliance risks cannot be entirely avoided.

Technologically, the most prominent bottleneck is its limited capacity for long videos and complex storytelling. Currently, it can generate only 15-second segments at a time, so longer videos require manual stitching, which hurts coherence. Its ability to craft complex narratives and multi-dimensional characters remains weak, making it difficult to convey deep emotions and plots, so it cannot replace traditional long-form filmmaking. Its role leans more toward being a 'tool to assist creators' than a 'replacement.'

With great power comes great responsibility. Beneath the glory, ByteDance faces challenges, with ethical risks and copyright compliance serving as essential thresholds for sustainable and healthy development. This 'half-flame, half-water' situation is inevitable in AI development and tests ByteDance's sense of responsibility. As a frontrunner in the AI race, ByteDance should overcome these hurdles.

03 Another DeepSeek Moment?

Last year brought DeepSeek; this year brings Seedance 2.0—domestic AI continues to surprise.

The essence of the DeepSeek moment was 'breaking monopolies and building confidence.' Amid foreign dominance in language models, DeepSeek achieved breakthroughs with low-cost, high-performance solutions, promoting technological democratization and injecting confidence into China's AI landscape.

The core of Seedance 2.0 lies in 'scenario-based implementation and value creation.' It moves beyond labs into high-frequency application scenarios, unifying technological and commercial value and propelling China's AI from 'technical catch-up' to 'industrial leadership.' Though they operate in different sectors and at different levels, both represent milestones in China's AI journey, driving high-quality industrial development. Seedance 2.0 already possesses the potential to become a 'phenomenal event.'

Its primary value lies in democratizing AI video technology and promoting equal creative rights. Previously, AI video generation demanded strong prompt-engineering expertise and high computational costs, making it inaccessible to ordinary users and small creators. Seedance 2.0, with its strong multimodal reference capabilities, eliminates the need for professional skills or complex prompts, enabling anyone to generate cinematic-quality videos.

This technological democratization inspires mass creativity, allowing small creators to compete with large institutions, enriching the content ecosystem, and returning creativity to its core. It ushers in a new era of diverse and prosperous content production.

Second, it addresses China's AI dilemma of 'prioritizing technology over implementation,' unifying technological and commercial value. For too long, many advanced AI technologies remained confined to labs, failing to translate into commercial value or form virtuous cycles. Seedance 2.0 precisely targets high-frequency scenarios like film, television, and short dramas, resolving industry pain points and establishing a clear membership-based revenue model for rapid monetization, which in turn supports technological R&D.

Simultaneously, it drives development across upstream and downstream industries such as computing power, source materials, and editing, creating jobs and fostering deep integration between AI and the real economy, ensuring AI technologies truly serve industries.

More importantly, it achieves 'reverse export' of China's AI video technology, enhancing global influence.

Previously, foreign giants dominated AI video globally, limiting China's technological influence. Seedance 2.0's impressive performance has drawn spontaneous admiration overseas, with users clamoring for tutorials and accounts. This marks a 'reverse export' of China's AI video technology and refocuses global attention on Chinese AI.

From DeepSeek breaking language model monopolies to Seedance 2.0 leading in video implementation, China's AI is transitioning from 'follower' to 'leader,' showcasing the responsibility of Chinese tech firms and the global competitiveness of China's AI.

Of course, for Seedance 2.0 to truly become a new 'DeepSeek moment,' it must still address ethical, compliance, and technological challenges. However, it has already taken a solid step, achieving major breakthroughs in AI video technology and driving China's AI industrial transformation.

From DeepSeek to Seedance 2.0, China's AI has embarked on a journey from 'breaking monopolies' to 'scenario-based implementation' and from 'technological confidence' to 'industrial confidence.' Seedance 2.0 marks a new, milestone-like starting point for China's AI video field. (End)