ERNIE Bot 4.5 Series Goes Open Source: Baidu's Cost Revolution and Ecosystem Strategy

03/02 2025

On February 28, Baidu announced that ERNIE Bot 4.5 will officially launch on March 16 and be open-sourced on June 30, promising significant advances in foundation-model capability, multimodal functions, and deep thinking. Notably, OpenAI also released GPT-4.5 in the early hours of February 28, but its performance fell short of market expectations.

This "long-distance face-off" shows how large-model competition is changing: technological leadership is no longer the sole determinant. As real-world adoption of AI becomes paramount, the key to success is accelerating application deployment through an open ecosystem. Baidu aims to take the lead in this new phase with a more open strategy.

From Technological Breakthrough to Ecosystem Reconstruction: Baidu's Logic of Openness

Since ChatGPT ignited the global AI race, the large model industry has undergone rapid evolution over the past two years. The competition initially focused on technological advancements, with parameter sizes soaring from 100 billion to 1 trillion, capabilities expanding from single-round dialogue to multimodal and deep thinking, and training costs plummeting by 99% in a year.

However, as technological gaps narrow, the strategic focus is shifting: leaders are now converting technological potential into ecosystem advantages through open source, free access, and open infrastructure.

Baidu's recent actions confirm this trend:

- Free Strategy: Starting April 1, ERNIE Bot will be free for all users, including its advanced capabilities.

- Open-Source Plan: The ERNIE Bot 4.5 series will be open-sourced on June 30, becoming the world's first open-source model benchmarked against GPT-4.5.

- Cost Revolution: Model inference costs have been reduced by 99% within a year, with daily average calls exceeding 1.65 billion.

Behind these actions lies Li Yanhong's profound understanding of the AI industry's underlying logic: "Foundational models only realize their true value when they solve real-world problems on a large scale."

While OpenAI released a high-priced model at $75 per 1M tokens, Baidu chose to redefine competition through "technology inclusivity," lowering barriers for developers and expanding application scenarios to drive a virtuous cycle of "user base → data feedback → model iteration."
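To make the pricing point concrete, the sketch below works out what a flat per-token price means for a monthly bill. The $75 per 1M tokens rate is the figure quoted above; the function name, the workload size, and the assumption of a single flat rate (with no separate input and output pricing) are illustrative only.

```python
def token_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a flat price per 1M tokens."""
    return tokens / 1_000_000 * usd_per_million


# Rate quoted in the article for OpenAI's model.
QUOTED_RATE_USD = 75.0

# Hypothetical workload (an assumption): 2M tokens per day for 30 days.
monthly_tokens = 2_000_000 * 30

print(token_cost_usd(monthly_tokens, QUOTED_RATE_USD))
```

Under these assumptions the hypothetical workload comes to $4,500 a month, which illustrates why per-token price weighs so heavily on developers deciding where to build.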

Technical Strength: ERNIE Bot 4.5's Three Key Advantages

If large models are the operating systems of the AI era, their core competitiveness lies in comprehension, generation, and cost control. ERNIE Bot 4.5's upgrade path fortifies these dimensions:

1. RAG Capability: A Precision Strike from Search Genes

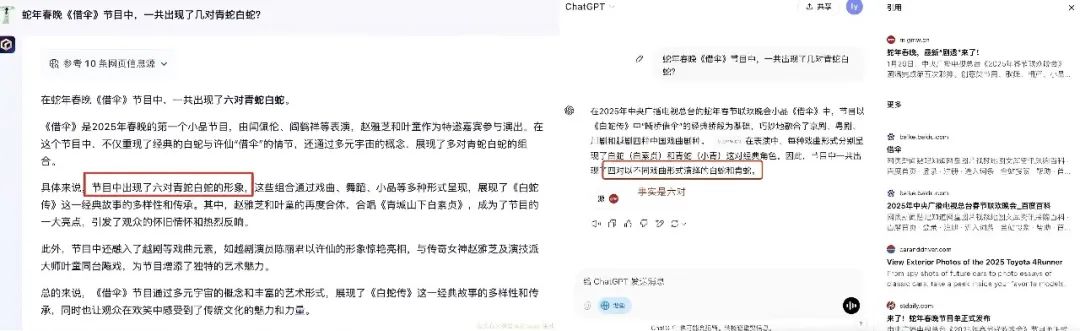

In Retrieval-Augmented Generation (RAG) technology, Baidu excels. Its "comprehension-retrieval-generation" framework integrates its trillion-scale search knowledge base with large models. Test data shows that in time-sensitive scenarios like Spring Festival movies and gala programs, ERNIE Bot provides accurate answers, while ChatGPT often retrieves sources but fails to generate correct responses.

(Left: ERNIE Bot's accurate and detailed answer. Right: ChatGPT's answer, which contains incorrect data and fails to count Guan Yue and Xiao Wan appearing at the beginning and Ye Tong and Zhao Yazhi at the end.)

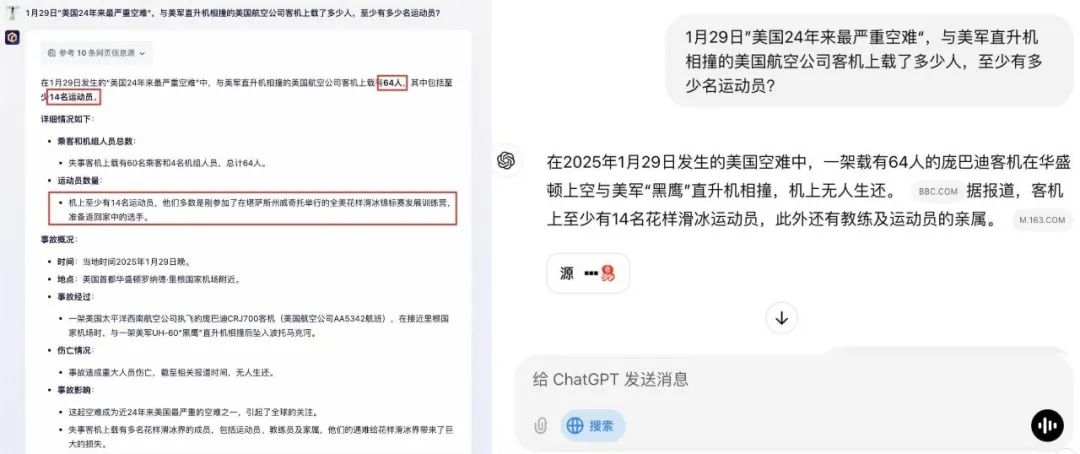

Even for international queries, such as one about a passenger plane collision, ERNIE Bot's structured answer is more accurate and comprehensive than ChatGPT's.

(Left: ERNIE Bot's answer. Right: ChatGPT's answer.)

This capability stems from Baidu's two decades of search expertise, which enables advanced features such as unifying heterogeneous information and reasoning over conflicting data.
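The "comprehension-retrieval-generation" flow described in this section can be illustrated with a toy pipeline. This is only a minimal sketch of the general RAG pattern, not Baidu's implementation: the keyword-overlap retriever, the placeholder corpus, and every name here (`Document`, `retrieve`, `build_prompt`, `generate`) are assumptions, and `generate` is a stub standing in for a real model call.

```python
from dataclasses import dataclass


@dataclass
class Document:
    title: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return {w for w in words if w}


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    """Rank documents by how many query words they share; drop non-matches."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in corpus]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, docs: list[Document]) -> str:
    """Put the retrieved sources in front of the question."""
    context = "\n".join(f"[{i + 1}] {d.title}: {d.text}" for i, d in enumerate(docs))
    return f"Answer using only the sources below.\n{context}\nQuestion: {query}"


def generate(prompt: str) -> str:
    """Stub for the language model call (hypothetical)."""
    return f"(model output for a prompt of {len(prompt)} characters)"


def answer(query: str, corpus: list[Document]) -> str:
    return generate(build_prompt(query, retrieve(query, corpus)))


# Placeholder corpus, invented for illustration only.
corpus = [
    Document("Gala lineup", "The gala program opens with a song and closes with a duet."),
    Document("Box office", "Spring Festival movies set a box office record."),
    Document("Weather", "Rain is expected tomorrow."),
]

query = "Which Spring Festival movies set a record?"
print([d.title for d in retrieve(query, corpus)])
print(answer(query, corpus))
```

A production system would replace the keyword overlap with a search index or embedding-based retrieval and would add the conflict-resolution step mentioned above; the sketch only shows where retrieval sits relative to generation.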

2. iRAG: Revolutionizing Image Generation

ERNIE Bot's iRAG (Image Retrieval-Augmented Generation) technology redefines image generation. Drawing on Baidu Search's library of billions of images, it renders people and scenes that are hard to tell apart from real ones.

iRAG has broad potential in film, comics, serialized content, and poster production, reducing AI-generated image hallucinations and making them more realistic and natural. It can slash brand poster production costs from hundreds of thousands to nearly zero and boost content production efficiency in film, television, and comics by over tenfold.

This technology's groundbreaking significance lies in extending AI generation from artistic creation to more production scenarios, providing infrastructure-level support for cost reduction and efficiency enhancement in the real economy.

3. Cost Control: Leveraging a Four-Layer Technology Stack

Baidu's vertical integration across its four-layer AI architecture (chip-framework-model-application) gives it a competitive edge.

Recently, Baidu Intelligent Cloud brought online its Kunlun Chip III 10,000-card cluster, the first domestically developed 10,000-card cluster to go into operation. Large-scale clusters improve compute utilization, avoid idle computing power, speed up individual tasks, and reduce overall costs through parallel scheduling and elastic compute management.

Using the Baige platform, Baidu has increased GPU utilization to 58% and shortened failure recovery time to minutes through the HPN high-performance network and innovative cooling solutions. Model inference costs have dropped by 99% in a year, ushering enterprises into the "cent era" for invocation costs.

Li Yanhong previously predicted that "large model costs will decrease by over 90% annually," meaning a 100-billion-parameter model's training cost will drop from tens of millions of dollars in 2023 to millions of yuan in 2025.
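The arithmetic behind that prediction is simple compounding, sketched below. The 90% annual decline and the two-year span (2023 to 2025) come from the text; the starting cost of $30 million (one reading of "tens of millions of dollars") and the exchange rate of 7.2 yuan per dollar are assumptions chosen only for illustration.

```python
def projected_cost(initial_cost: float, annual_decline: float, years: int) -> float:
    """Cost after `years` of compounding decline at rate `annual_decline` (0 to 1)."""
    return initial_cost * (1.0 - annual_decline) ** years


START_COST_USD = 30_000_000   # assumption: "tens of millions of dollars" in 2023
ANNUAL_DECLINE = 0.90         # "over 90% annually", taken at exactly 90%
ASSUMED_CNY_PER_USD = 7.2     # assumption: illustrative exchange rate

cost_2025_usd = projected_cost(START_COST_USD, ANNUAL_DECLINE, years=2)
cost_2025_cny = cost_2025_usd * ASSUMED_CNY_PER_USD

print(round(cost_2025_usd), round(cost_2025_cny))
```

Two years at a 90% annual decline leaves 1% of the original cost, so under these assumptions roughly $300,000, or about 2.2 million yuan, which is consistent with the "millions of yuan" figure in the prediction.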

This cost control is not just a matter of commercial viability; it is also reshaping the global AI industry chain. While American companies rely on NVIDIA chips, Chinese players have achieved computing autonomy through hardware-software collaboration.

The Open Ecosystem Strategy: The New Frontier in China-US Competition

If the first half of large model competition was a "technological breakthrough race," the second half is an "ecosystem positioning war." Baidu's and OpenAI's recent actions reveal distinct strategic paths.

Behind this divergence lies the differing underlying logic of the Chinese and US AI industries. Baidu "trades openness for scale," leveraging its 430 million user base and 1.65 billion daily average calls to create a data flywheel effect, which OpenAI struggles to replicate due to commercial constraints.

Li Yanhong's assertion at the AI Summit in Dubai is coming true: "Innovations throughout history have all come from cost reduction." As Baidu pushes large model inference costs into the "cent era," an explosion in the developer ecosystem is imminent. Third-party estimates suggest that open-sourcing ERNIE Bot 4.5 will attract at least one million developers and foster a hundred-billion-yuan AI application market in education, healthcare, manufacturing, and more.

As global developers use Baidu's code to build industry models, a silent battle for "standard-setting power" has begun. This war eschews dazzling parameter confrontations, focusing instead on API call logs and the unheralded moments of industrial efficiency revolution.

Endgame Conjecture: The "Chinese Equation" in the Ecosystem War

Looking back at the two-year journey of large models, the competition's essence has shifted:

The value anchor has moved from "technological astonishment" to "economic conversion rate"; the competitive dimension has expanded from "laboratory indicators" to "industrial penetration depth"; and the decisive factor has changed from "algorithmic advantage" to "ecosystem control."

Under this new paradigm, Baidu's seemingly aggressive open strategy is a pivotal move in the positioning war. As developers worldwide train industry models using ERNIE Bot 4.5, a new power structure is emerging: this is no longer a contest between individual models but a showdown between ecosystems.

Interestingly, Chinese companies are creating new rules in this competition: fortifying moats through open source, trading free access for data flywheels, and bridging technological gaps with scenario advantages.

This "asymmetric tactic" may be the key to unlocking the post-ChatGPT era. While OpenAI grapples with the "bad news" about GPT-4.5, namely that it is too big and too expensive, Baidu keeps tipping the scales with its open ecosystem and high-performance, cost-effective models.