Revolutionizing Autonomous Driving Technology with Large Models

04/21 2025

In recent years, artificial intelligence has been permeating and reshaping many fields at an unprecedented pace. The rapid evolution of large models, including both large language models and multimodal large models, has opened new avenues for innovation in autonomous driving. Traditional autonomous driving systems typically use a modular design in which separate subsystems independently handle environmental perception, decision-making and planning, and vehicle control. In complex traffic scenarios, however, this hierarchical architecture is prone to cumulative errors, information loss between modules, and inadequate real-time performance. Large models, with their large parameter counts, ability to process cross-modal data, and end-to-end learning paradigms, are gradually changing this landscape. At the perception level they enable efficient fusion of multi-sensor data; at the decision-making level, deep semantic understanding and logical reasoning let them devise more rational driving strategies, improving overall safety and robustness.

Advantages of Large Models in Autonomous Driving

Autonomous driving technology has passed through multiple stages, evolving from early driver assistance toward full autonomy. Early systems relied heavily on simple object detection and rule-based control. With the advent of deep learning, methods based on CNNs, RNNs, and even GANs steadily improved environmental perception and decision-making. Combining Bird's Eye View (BEV) representations with Transformer architectures further addressed the weaknesses of traditional methods in spatio-temporal modeling. It can be argued that large models are now fundamentally reshaping the overall architecture of autonomous driving systems, laying a foundation for the future commercialization of L3, L4, and even L5 automation.

Transformer-based architectures use self-attention, in which every element of the input can attend directly to every other element. This lets them capture long-range dependencies and significantly improves the global consistency and accuracy of information processing. Under the pre-training and fine-tuning paradigm, models are first pre-trained on large amounts of unlabeled data and then fine-tuned for specific autonomous driving tasks. This reduces dependence on large labeled datasets and gives the model strong cross-domain transfer capabilities. Multimodal large models can process images, point clouds, radar returns, and other data forms simultaneously, moving the system from merely "seeing" toward "understanding" and giving it cognitive abilities closer to those of a human driver.
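
To make the self-attention idea concrete, here is a minimal single-head sketch of scaled dot-product self-attention in plain Python. It is illustrative only: the toy inputs and the weight matrices `w_q`, `w_k`, `w_v` are hypothetical, and real driving models use learned multi-head projections over image patches or BEV cells, implemented with tensor libraries rather than nested lists.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention over n tokens of dimension d.

    Every token's query is compared with every token's key, so information can
    flow between any two positions in one step (the long-range dependency property).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # n x n similarity matrix
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)  # each output is a weighted mix of all value vectors
```

Because each attention row is a softmax, every output vector is a convex combination of the value vectors; that is why one token's representation can draw on context from anywhere in the input.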

Specific Applications of Large Models in Autonomous Driving

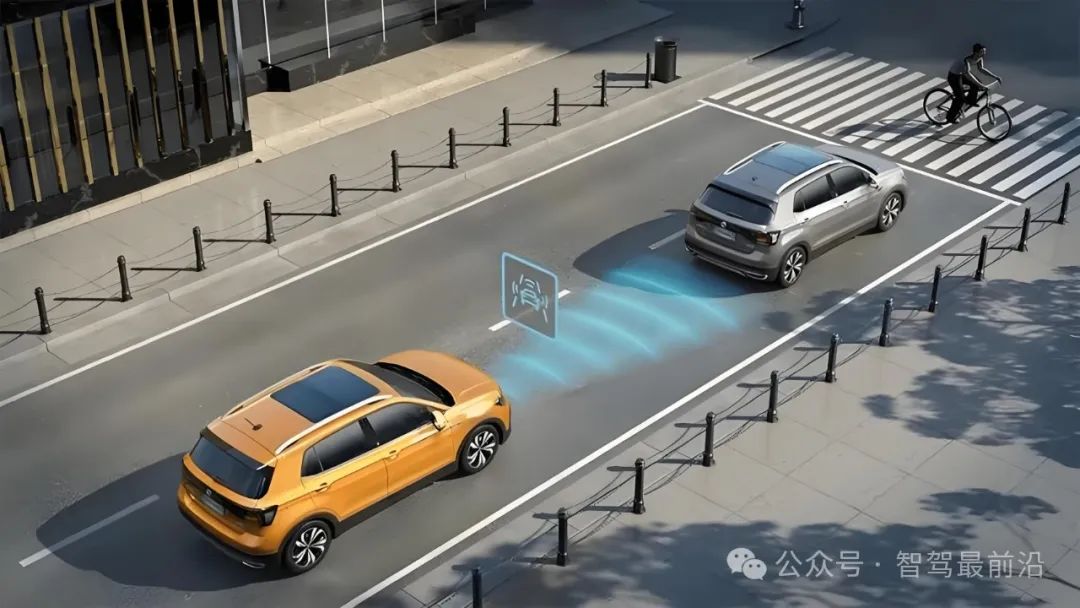

In autonomous driving systems, large models are applied at multiple levels, including environmental perception, decision-making and planning, and vehicle control. In environmental perception, traditional systems mostly rely on data from a single sensor for object detection and semantic segmentation. Because of limitations imposed by lighting, weather, and the sensors themselves, they often struggle in complex scenarios. Large models use multimodal data fusion to integrate cameras, LiDAR, millimeter-wave radar, and high-definition maps into a richer and more accurate representation of the environment. For instance, Vision-Language-Action (VLA) models can extract visual and semantic information from images simultaneously and have shown high accuracy in detecting obstacles, predicting pedestrian behavior, and assessing road conditions. Deeply fusing multi-sensor information with a large model not only makes object detection more robust but also, through time-series analysis, enables prediction of how dynamic scenes will evolve, giving vehicle decision-making more reliable input.
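
Why fusing several sensors makes perception more robust can be illustrated with a much simpler, classical technique than a large model: inverse-variance weighting of independent distance estimates. This is a swapped-in textbook method, not how VLA or other large models fuse data, and it assumes each sensor is unbiased with independent Gaussian noise; the readings and variances below are hypothetical.

```python
from typing import List, Tuple


def fuse_estimates(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) measurements by inverse-variance weighting.

    Assumes each sensor is unbiased with independent Gaussian noise. Returns the
    fused value and its variance; the fused variance is never larger than the
    smallest input variance, so adding a sensor cannot make the estimate less certain.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical distance-to-obstacle readings in metres: (estimate, variance).
# The camera variance is inflated to mimic low light; all numbers are illustrative.
readings = [(20.0, 4.0), (19.0, 0.25), (19.5, 1.0)]  # camera, LiDAR, radar
distance, variance = fuse_estimates(readings)
```

With these numbers the fused distance is about 19.14 m, pulled toward the low-variance LiDAR reading, and the fused variance (about 0.19) is below LiDAR's 0.25, so a degraded camera does not drag the estimate off course.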

At the decision-making and planning level, traditional autonomous driving systems typically rely on preset rules or model-based planning algorithms to convert perception results into path plans and motion decisions. This approach tends to fail in unforeseen, complex traffic conditions, and the rigid interfaces between modules make end-to-end optimization difficult. Within an end-to-end learning framework, large models can extract key information directly from raw sensor data and generate vehicle control commands through internal reasoning. Models such as DriveGPT4 and LanguageMPC have demonstrated the potential of large models for multi-task decision-making: they can devise reasonable driving strategies in complex scenarios and also explain those strategies in detail, improving system interpretability. The advantage of end-to-end decision-making is that it reduces errors introduced as information passes between modules and lets the whole system adapt more readily to new scenarios.

Vehicle control, the final step in autonomous driving, requires not only accurate decisions but also real-time system response. Because large models have many parameters and a heavy computational load, deploying them directly on in-vehicle systems is challenging. The industry has made significant progress in model compression and lightweighting, using knowledge distillation to transfer the essential knowledge of a large model into a smaller, more efficient model that runs well on in-vehicle hardware (such as the NVIDIA DRIVE AGX series). This approach retains much of the large model's performance while keeping response times within real-time control requirements, which makes it pivotal for the commercialization of L3/L4 autonomous driving.
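
A minimal sketch of the distillation objective, assuming the common soft-target formulation of Hinton et al. (2015): the student matches the teacher's temperature-softened output distribution while also fitting the ground-truth label. The temperature and `alpha` defaults are hypothetical, and this is not the specific recipe of any in-vehicle deployment.

```python
import math
from typing import List


def log_softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable log-softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_norm = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_norm for z in scaled]


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    label: int,
    temperature: float = 4.0,
    alpha: float = 0.5,
) -> float:
    """Soft-target knowledge distillation loss for one example.

    alpha weights the teacher-matching term; (1 - alpha) weights ordinary
    cross-entropy against the ground-truth class index `label`.
    """
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    # KL(teacher || student) between the temperature-softened distributions
    kl = sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_p_teacher, log_p_student))
    # Hard-label cross-entropy at temperature 1
    ce = -log_softmax(student_logits)[label]
    # Scaling by T^2 keeps the soft term's gradient magnitude comparable across temperatures
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce
```

A higher temperature exposes more of the teacher's "dark knowledge" (how it ranks the wrong classes), which is what lets a small student recover much of a large model's behavior.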

Large models also show significant advantages in simulation and closed-loop validation. Training on large-scale data and synthetic scenarios makes it possible to build realistic world models, which in turn enable closed-loop testing in virtual environments through digital twin technology. This significantly reduces the risk and cost of extensive real-world testing and allows extreme and long-tail scenarios to be simulated quickly, providing ample data for iterative model optimization. Waymo's EMMA model leverages simulation platforms and large model technology to achieve high-precision trajectory prediction and collision avoidance decisions, outperforming traditional hierarchical systems and offering new insights for the closed-loop validation of future fully autonomous driving systems.

Large models also play a pivotal role in improving system safety and user experience. Autonomous driving is not merely a technical challenge; it also involves human-machine interaction and social trust. Using natural language processing, large models can hold real-time conversations with drivers, offer driving suggestions and emergency prompts, and even provide personalized assistance based on the driver's emotional state. Such interactive design can help build passenger trust, making autonomous driving systems not only technologically advanced but also better aligned with user needs in practice.

Challenges Faced by Large Models in Autonomous Driving

Despite the immense potential of large models in autonomous driving, many issues must still be resolved before they move from laboratory results to commercial products. Real-time performance and computational resources are among the main bottlenecks. Large models often have huge parameter counts and high computational complexity, so in-vehicle computing platforms need substantial computing power to produce decisions within milliseconds. Two complementary responses are to use dedicated AI chips and to compress the models with techniques such as knowledge distillation and quantization, aiming to meet real-time requirements without sacrificing too much performance.
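
As an illustration of quantization, the sketch below stores weights as 8-bit integers plus one floating-point scale, using a minimal symmetric per-tensor scheme. The weight values are hypothetical, and production toolchains typically add calibration data and per-channel scales; this does not reproduce any specific toolchain.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [scale * v for v in q]


# Hypothetical layer weights, for illustration only
weights = [0.42, -1.27, 0.0, 0.9, -0.05]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now occupies 1 byte instead of 4 (float32), so weight storage shrinks roughly fourfold, at the cost of a rounding error of at most half a quantization step (`scale / 2`) per weight; integer arithmetic is also cheaper on many in-vehicle accelerators.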

Safety and robustness are also core challenges. A single decision error by an autonomous vehicle can have severe consequences, so large models must undergo rigorous testing and validation before deployment to ensure they respond correctly across complex and extreme scenarios. Because large models are largely "black boxes," their internal decision-making processes are often hard to explain. Improving interpretability while maintaining high performance has therefore become an urgent issue for regulators and automakers. Going forward, combining reinforcement learning, fine-tuning based on human feedback, and explicit rule constraints is expected to yield decision-making systems that are both efficient and transparent.
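
One way to read "rule constraints" is as an auditable safety layer that sits after a learned policy: the model proposes an action, and explicit rules may override it while recording which rule fired. The sketch below is a hypothetical illustration for longitudinal acceleration only; the class name, limits, and headway rule are assumptions chosen for readability, not values from any standard or product.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class RuleLimits:
    # Hypothetical limits chosen for illustration, not values from any standard
    max_accel: float = 2.0     # m/s^2
    max_decel: float = 6.0     # m/s^2, magnitude of the strongest allowed braking
    min_time_gap: float = 1.5  # s, minimum headway to the lead vehicle


def constrain_acceleration(
    proposed: float, ego_speed: float, gap: float, limits: RuleLimits = RuleLimits()
) -> Tuple[float, List[str]]:
    """Apply explicit rules to a model-proposed longitudinal acceleration.

    Returns the (possibly overridden) acceleration and the names of the rules
    that fired, so every override can be logged and audited.
    """
    accel, fired = proposed, []
    if accel > limits.max_accel:
        accel = limits.max_accel
        fired.append("max_accel")
    if accel < -limits.max_decel:
        accel = -limits.max_decel
        fired.append("max_decel")
    # If the headway rule is already violated, do not allow further acceleration
    if ego_speed > 0 and gap / ego_speed < limits.min_time_gap and accel > 0:
        accel = 0.0
        fired.append("min_time_gap")
    return accel, fired
```

The learned model keeps its flexibility in ordinary driving, while the list of fired rules gives a human-readable trace for exactly the cases where the system overrode it.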

Data privacy and ethics also cannot be overlooked. Autonomous driving systems collect vast amounts of vehicle, environment, and user data, and how that data is stored and used bears directly on user privacy. While making full use of big data, it is crucial to secure data transmission and processing. Regulators should take the lead by establishing strict data protection standards and privacy protection mechanisms, providing an institutional guarantee for the safe use of large models in autonomous driving.
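
One common technical building block for privacy protection is pseudonymizing identifiers before data leaves the vehicle. The sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library), which keeps records from the same vehicle linkable for analysis without exposing the raw identifier, as long as the key stays secret. The field names, record layout, and key are hypothetical, and pseudonymization is only one layer; on its own it does not make data anonymous.

```python
import hashlib
import hmac
from typing import Dict, Iterable


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Map a raw identifier to a stable keyed pseudonym (HMAC-SHA256, hex-encoded)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(
    record: Dict[str, object],
    secret_key: bytes,
    id_fields: Iterable[str] = ("vin", "driver_id"),
) -> Dict[str, object]:
    """Return a copy of a telemetry record with identifying fields pseudonymized."""
    clean = dict(record)
    for field in id_fields:
        if field in clean:
            clean[field] = pseudonymize(str(clean[field]), secret_key)
    return clean


# Hypothetical record and key, for illustration only
record = {"vin": "HYPOTHETICAL-VIN-0001", "driver_id": 42, "speed_mps": 13.9}
scrubbed = scrub_record(record, b"example-key-do-not-use")
```

A keyed hash is used instead of a plain hash because identifiers such as VINs follow a known structure, so an unkeyed digest could be reversed by hashing candidate values.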

Hardware-software co-design is also essential to deploying large models. Their successful application depends not only on algorithmic innovation but also on high-performance hardware. Major vendors continue to release new generations of in-vehicle computing platforms; NVIDIA's DRIVE AGX line, for example, has progressed from Pegasus to DRIVE Thor (which superseded the previously announced Atlan), providing the compute needed for real-time inference and large-scale deployment of large models. Continued advances in sensor technology also supply richer, higher-quality data for multimodal fusion. As the broader autonomous driving ecosystem matures, deep hardware-software integration is likely to carry the industry into a new era of intelligent transportation.

The impact of large models on autonomous driving is evident not only in technical details but also in a paradigm shift: from modular systems to end-to-end systems, and from perceptual intelligence to cognitive intelligence. Future autonomous driving systems built around large models are expected to deliver more precise environmental perception, more flexible decision-making and planning, and safer, more efficient vehicle control, while also advancing human-machine interaction, personalized assistance, and data security.
