April's Grand Finale: Can Alibaba's Qwen3 Conquer the Realm of Open-Source Large Models?

04/30 2025

Alibaba unveiled its Qwen3 series early this morning (April 29th), marking the crescendo of April's large language model releases.

This month has seen a flurry of new large models from Meta, ByteDance, OpenAI, Google, and Baidu. OpenAI, in particular, introduced three models simultaneously, while Baidu announced two during its Create 2025 AI Developer Conference this week.

Amid this flurry of releases, can Alibaba still stand out? It can. Beyond keeping its commitment to open source, Alibaba's flagship Qwen3 series has improved its performance significantly, narrowing the gap with top-tier models.

The Qwen3 series is also a hybrid reasoning model; the official blog post is titled "Qwen3: Think Deeper, Act Faster." In simple terms, a single Qwen3 model supports both thinking and non-thinking modes, unlike DeepSeek, where deep thinking is confined to R1 and non-thinking responses to V3.

Photo/Lei Technology

Regarding hybrid reasoning models, Lei Technology reported on the first such model earlier this year, highlighting its advantages and suggesting that "hybrid reasoning" could become the next standard for large model development.

Back to Alibaba's newly launched Qwen3 series. As China's first hybrid reasoning model and the first open-source one, with improved performance to match, Qwen3 unsurprisingly garnered 17,000 stars on GitHub, the world's largest developer community, within just four hours of its launch.

The question is this: at a time when model benchmarks are themselves contested, can Qwen3 truly deliver on its benchmark results and on the promised advantages of hybrid reasoning?

Qwen3 has caught up with top closed-source models on benchmarks, but how strong is it really?

Undoubtedly, one of Qwen3's standout features is its hybrid reasoning design, enabling the same model to operate in both "thinking" and "non-thinking" modes. Alibaba has seamlessly integrated these two "brain circuits" into a single model, giving users and developers the freedom to choose.

In non-thinking mode, Qwen3 responds quickly and directly, much like a traditional language model. In thinking mode, it reasons step by step: breaking down the problem, working through logical derivations, and then drawing a conclusion.
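The article does not show how the two modes are selected in practice. The following is only a minimal sketch, assuming the prompt-level switches `/think` and `/no_think` and a `<think>...</think>` wrapper around the reasoning trace (conventions from Qwen3's public usage notes, not from this article). The helper names `build_prompt` and `split_reasoning` are hypothetical, and the sample output is hand-written rather than produced by a real model.

```python
import re
from typing import Tuple

# Assumed conventions (not stated in the article): "/think" and "/no_think"
# as prompt-level switches, and <think>...</think> around the reasoning trace.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def build_prompt(question: str, thinking: bool) -> str:
    """Append the assumed soft-switch tag for the requested mode."""
    switch = "/think" if thinking else "/no_think"
    return f"{question} {switch}"


def split_reasoning(raw_output: str) -> Tuple[str, str]:
    """Return (reasoning, answer) from a raw completion string."""
    match = THINK_BLOCK.search(raw_output)
    if match is None:
        # Non-thinking mode: the whole completion is the answer.
        return "", raw_output.strip()
    reasoning = match.group(1).strip()
    answer = raw_output[match.end():].strip()
    return reasoning, answer


# Hand-written sample output, not produced by a real model.
sample = "<think>17 is odd; check divisors up to 4.</think>\nYes, 17 is prime."
reasoning, answer = split_reasoning(sample)
```

Keeping the reasoning trace separate from the final answer lets an application show or hide the "thinking" without changing models, which is the flexibility the hybrid design is meant to offer.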

Photo/Lei Technology

While this architecture isn't novel, Qwen3 is the first model in China to fully implement hybrid reasoning and to be released as open source.

Globally, besides Claude-3.7-Sonnet, which pioneered this design, only Google has made a similar attempt, with Gemini 2.5 Flash, launched in mid-April. OpenAI has long stated "hybrid reasoning" as a goal, but its model is still in development.

Qwen3 is also a series spanning multiple sizes: six dense models ranging from 0.6B to 32B, and two mixture-of-experts (MoE) models, Qwen3-30B-A3B and Qwen3-235B-A22B, aimed at complex tasks. All models support 119 languages and dialects.

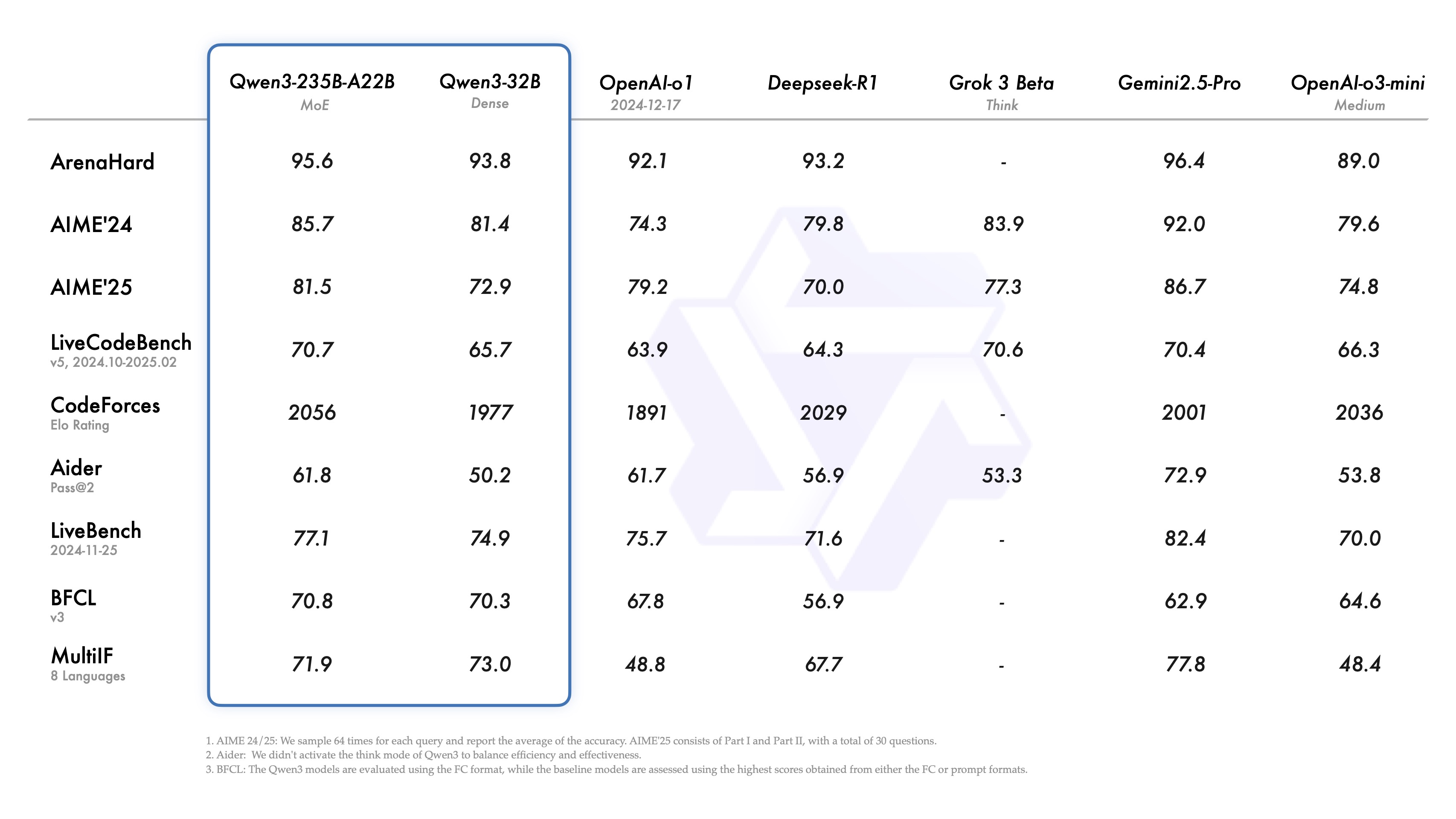

Qwen3 isn't just architecturally "brainy"; its performance is equally impressive. Alibaba claims that smaller models like Qwen3-4B rival the previous generation Qwen2.5-72B-Instruct, while the MoE models have demonstrated capabilities comparable to top closed-source models in benchmark tests.

Photo/Alibaba

Particularly noteworthy is Qwen3-235B-A22B, with 235 billion total parameters, which scored 81.5 on the mathematical reasoning benchmark AIME25, setting a new record for open-source models. It also scored over 70 on the coding benchmark LiveCodeBench, surpassing Grok-3, and 95.6 on the human preference evaluation ArenaHard, exceeding OpenAI o1 and DeepSeek-R1.

These advancements explain Qwen3's warm reception since its release.

The Qwen3 team also emphasized enhanced Agent capabilities and support for MCP. This is a logical extension rather than a breakthrough; for now, it mainly gives AI developers another option for building Agents.

However, Qwen3 isn't perfect. In actual reasoning performance, Qwen3-235B-A22B still lags behind top models. Even at full capacity, it tends to get bogged down in lengthy, irrelevant reasoning when faced with complex problems, yielding unsatisfactory results.

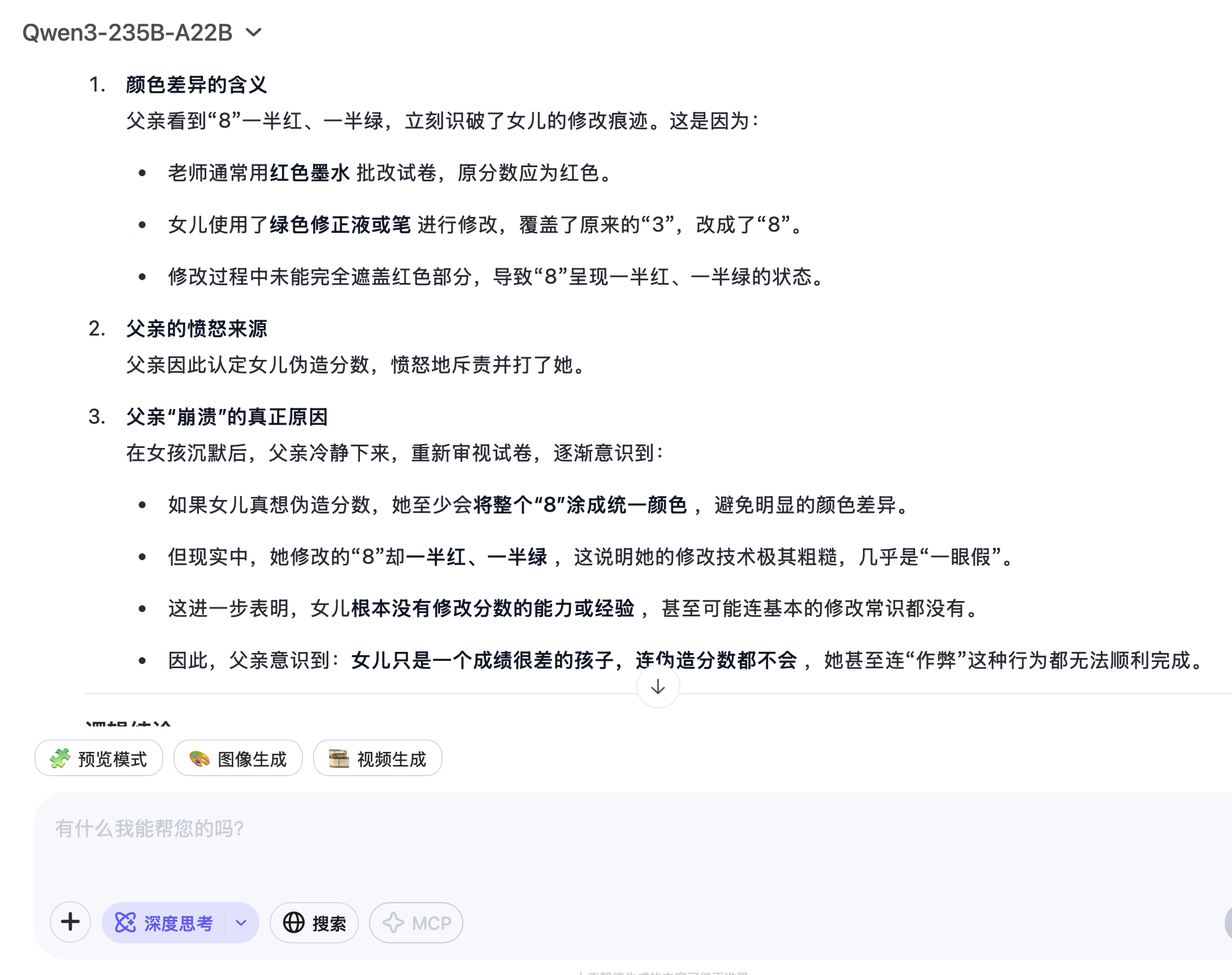

For instance, in a hands-on comparison with OpenAI o3, Lei Technology asked a question about a father's breakdown. While o3 gave a clear, organized answer, Qwen3-235B-A22B ran into the same problems as DeepSeek-R1: it took too long to think, repeatedly changed direction, and failed to grasp the key possibility that "the daughter is colorblind."

Photo/Lei Technology

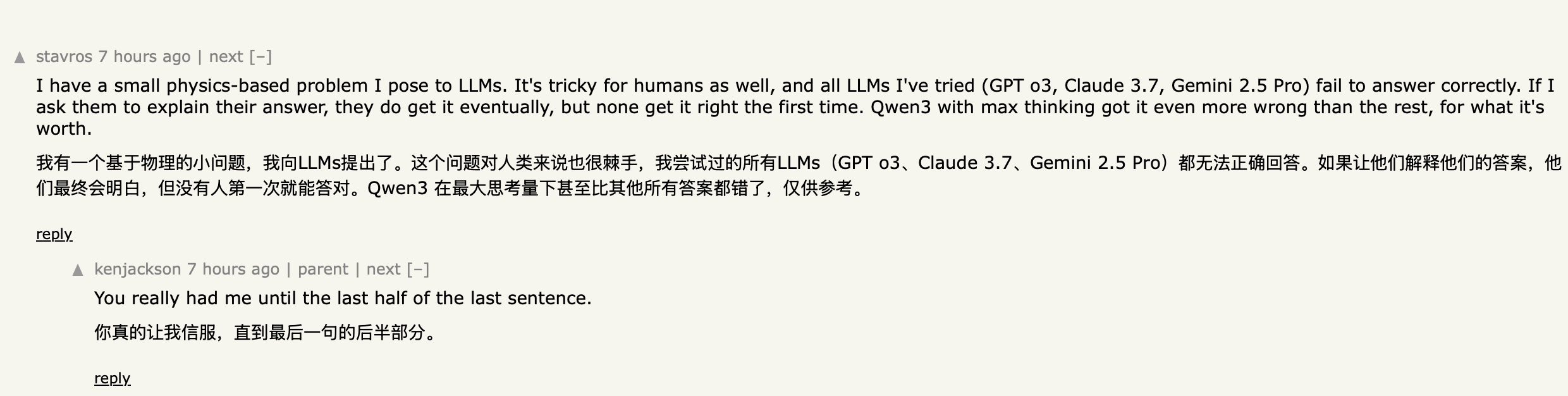

Commenters on Hacker News also pointed out Qwen3-235B-A22B's weak performance on complex problems.

Photo/Hacker News

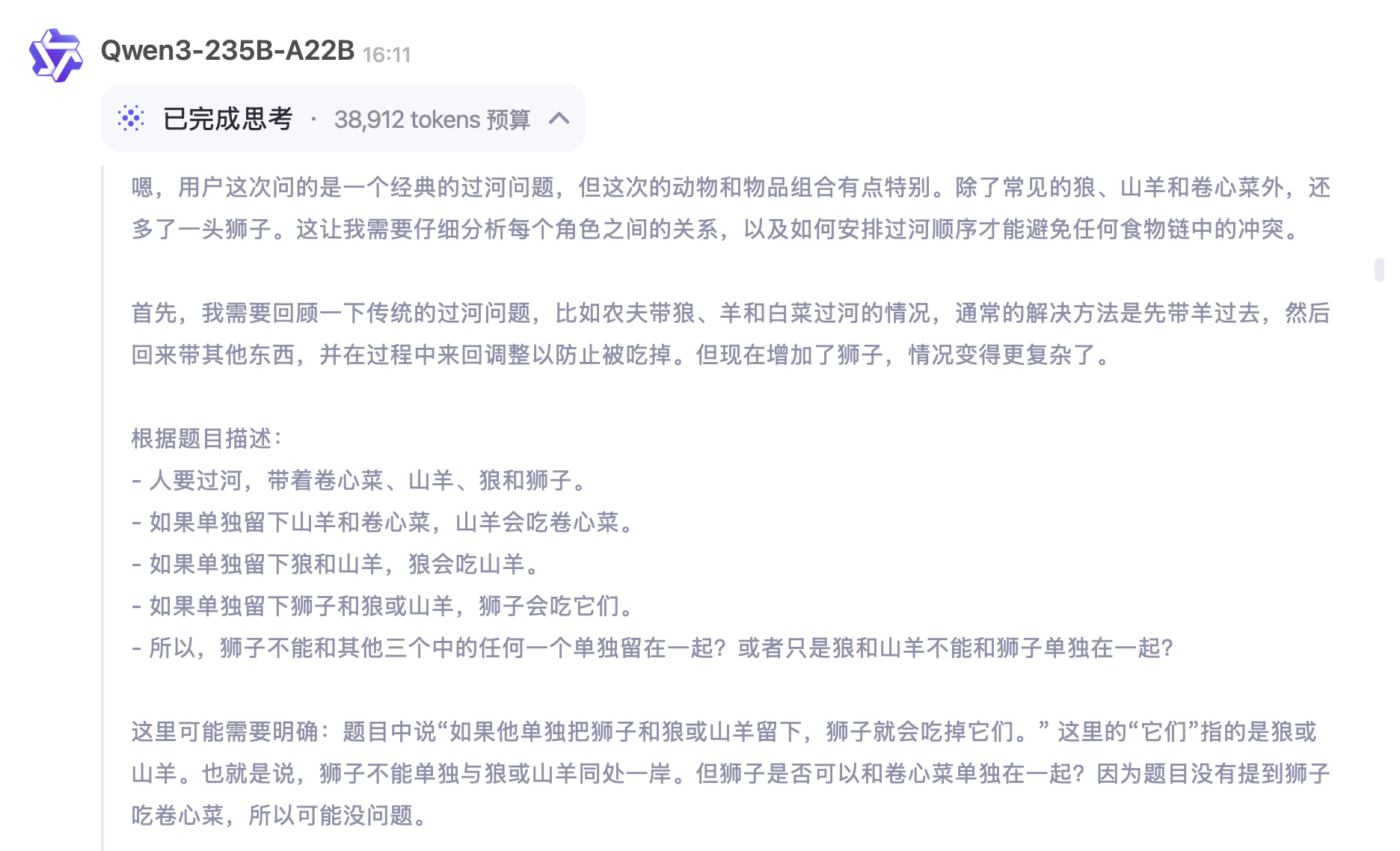

However, when the difficulty was lowered, and the classic river-crossing problem was modified to ask how to safely transport cabbage, a goat, a wolf, and a lion across the river, Qwen3-235B-A22B found a safe path using an exhaustive approach, demonstrating precise rule understanding.
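An "exhaustive approach" to a river-crossing puzzle amounts to a search over every reachable state. The article does not state the rules of the four-item variant with the lion, so the sketch below solves only the classic wolf, goat, and cabbage version with breadth-first search. The rule set, the boat capacity, and the names `UNSAFE`, `BOAT_CAPACITY`, and `solve` are illustrative assumptions, not a reconstruction of what Qwen3 did.

```python
from collections import deque
from itertools import combinations

ITEMS = frozenset({"wolf", "goat", "cabbage"})
# Pairs that must not be left together without the farmer (classic rules).
UNSAFE = [{"wolf", "goat"}, {"goat", "cabbage"}]
BOAT_CAPACITY = 1  # items the farmer can carry per crossing


def is_safe(bank):
    """A bank is safe if it contains no forbidden pair."""
    return not any(pair <= bank for pair in UNSAFE)


def solve():
    """Breadth-first search; returns the cargo carried on each crossing."""
    # State: (items on the left bank, whether the farmer is on the left).
    start = (ITEMS, True)
    goal = (frozenset(), False)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (left, farmer_left), path = queue.popleft()
        if (left, farmer_left) == goal:
            return path
        here = left if farmer_left else ITEMS - left
        for k in range(BOAT_CAPACITY + 1):
            for cargo in combinations(sorted(here), k):
                cargo = frozenset(cargo)
                new_left = left - cargo if farmer_left else left | cargo
                # The bank the farmer leaves behind must stay safe.
                unattended = new_left if farmer_left else ITEMS - new_left
                if not is_safe(unattended):
                    continue
                state = (new_left, not farmer_left)
                if state not in seen:
                    seen.add(state)
                    queue.append((state, path + [tuple(sorted(cargo))]))
    return None  # no safe sequence of crossings exists


path = solve()
```

Because breadth-first search visits states in order of crossing count, the first solution it returns is also a shortest one. Extending the sketch to a lion would mean adding an item to `ITEMS` and the corresponding forbidden pairs to `UNSAFE`, once those rules are known.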

Our brief hands-on test gave a sense of how strong the top Qwen3 model really is. Benchmark scores shouldn't be the sole criterion, but Qwen3 can still be considered the strongest open-source model, and its hybrid reasoning design offers users and developers more flexibility.

On a broader scale, Qwen3's release isn't just a model upgrade but an important reinforcement of Alibaba's AI strategy.

Over the past two years, Alibaba has not been slow in large model development: the Tongyi Qianwen family has improved steadily, and the company moved into open source early. However, it has not truly led in global influence or in open-source community discourse.

In a report that leaked Qwen3's impending April release (which was later delayed), Huxiu noted that the most important evaluation dimension for Alibaba's foundation model team is "model influence," with senior management aiming to establish the perception of "the strongest model" within the industry.

Replicating DeepSeek's influence is challenging. Photo/X

With powerful models from OpenAI, DeepSeek, Google, and Anthropic, Alibaba has mostly played catch-up, struggling to establish a technology-leading position. Qwen3's launch undoubtedly strengthens Alibaba's standing and eases that situation to some extent.

Especially in open-source models, Qwen3 covers a complete system from small to large parameters, dense models to mixture-of-experts models, supporting 119 languages and dialects. It has quickly received positive feedback from developer communities like Hugging Face and GitHub, expanding Alibaba's presence in the open-source ecosystem and laying the foundation for more model applications and toolchain construction.

From a commercialization perspective, Qwen3 directly addresses two major pain points in current model applications: high inference costs and insufficient flexibility in adaptation. By adopting the MoE architecture to cut inference costs while supporting flexible switching between thinking and non-thinking modes, Qwen3 aims for a balance between inference efficiency and cost.
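A rough way to see why an MoE design lowers per-token cost is to compare activated parameters with total parameters. The sketch below reads both figures from the model names, taking "235B-A22B" to mean 235B total with 22B activated per token; the exact counts may differ slightly from these rounded values, and `active_fraction` is a hypothetical helper.

```python
# Parameter counts in billions, read from the model names in the article:
# "235B-A22B" is taken to mean 235B total with 22B activated per token.
MOE_MODELS = {
    "Qwen3-30B-A3B": (30, 3),
    "Qwen3-235B-A22B": (235, 22),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the model's weights used for each generated token."""
    return active_b / total_b


for name, (total_b, active_b) in MOE_MODELS.items():
    share = active_fraction(total_b, active_b)
    print(f"{name}: {share:.1%} of weights active per token")
```

Under these assumptions, roughly a tenth of the weights take part in each token's computation. All of the weights must still be held in memory, so the saving is mainly in compute per token rather than in hardware footprint.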

For Alibaba Cloud's AI service system, especially for government, enterprise, manufacturing, and finance customers, lower deployment thresholds and higher adaptation flexibility can enhance Alibaba's competitiveness in large model commercialization. More importantly, large model capabilities will be crucial in future AI cloud competition.

Photo/Alibaba

However, a more sober look reveals Qwen3's shortcomings. Currently, it is a pure text language model, lacking the multimodal and visual reasoning capabilities found in QvQ-Max. Compared with the strongest models, Qwen3 still has much room for improvement.

Despite the innovation in Qwen3's reasoning mechanism, its stability and robustness in complex reasoning still lag behind top closed-source models from OpenAI and Anthropic.

Especially in tasks requiring long-chain logical reasoning and multiple rounds of rigorous derivation, Qwen3's "thinking" mode is unstable: its reasoning occasionally goes off track or becomes lengthy and unfocused, indicating that the hybrid reasoning design still needs refinement.

In summary, amidst April's fierce large model competition, Qwen3 has indeed brought a necessary and timely upgrade to Alibaba. It not only narrows the performance gap with top models but also explores new possibilities in the reasoning mechanism, potentially helping Alibaba compensate for its AI commercialization shortcomings.

Large model competition will continue to intensify, with performance and cost remaining key "mainlines." Whether Alibaba can maintain its pace and even take the lead in the foreseeable "explosion of intelligent agents" remains to be seen through more technological advancements and product launches.

But today, Qwen3 has indeed positioned Alibaba as a formidable force.

Source: Lei Technology