The Second Half of Large Models: The Growing Need for Open Source in the Agent Era

04/30 2025

Author: Zhuang Zhou, Editor: Evan

"Large models are rewriting the story of Linux."

Eric Raymond, in his book "The Cathedral and the Bazaar," contrasted two ways of building: the bazaar, which grows day by day from nothing into something, and the cathedral, the product of decades of painstaking effort. The story of Linux is that of a cathedral built the bazaar way. Today, in generative AI, a growing number of open source models are adopting this "construction mode."

Alibaba Cloud is a staunch advocate of open source models. Tongyi Qianwen (Qwen) has now surpassed the US-developed Llama, with over 100,000 derivative models, making it the world's leading open source AI model.

On April 29th, Alibaba unveiled the new generation of its Tongyi Qianwen model, Qwen3 (also called Qianwen 3). At 235B parameters, the flagship is roughly one-third the size of DeepSeek-R1, and its cost is substantially lower.

Qwen3 is China's first "hybrid reasoning model," integrating "fast thinking" and "slow thinking" in a single model. For simple tasks it answers with minimal computing power, and it reserves multi-step "deep thinking" for complex problems, which significantly reduces overall compute consumption.

Since 2023, Alibaba's Tongyi team has open-sourced over 200 models, including the large language model Qianwen (Qwen) and the visual generation model Wanxiang (Wan). These models cover text generation, visual understanding and generation, speech understanding and generation, text-to-image, and video, with parameter counts ranging from small to large to suit a variety of devices.

Qwen3 has 235B total parameters, of which only 22B are activated. It was pre-trained on 36T tokens of data and went through multiple rounds of reinforcement learning during post-training, which merged the non-thinking mode into the thinking model.
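The gap between total and activated parameters comes from the Mixture-of-Experts design, in which a router sends each token to only a few experts. The following is a toy sketch of top-k routing, not Qwen3's actual architecture: the expert count, the `top_k` value, the gate scores, and the function names are all assumptions chosen for illustration.

```python
import math

def top_k_route(gate_scores, top_k):
    """Return {expert_index: weight} for the top_k highest-scoring experts.

    Weights are a softmax over the selected scores only, so they sum to 1.
    """
    ranked = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:top_k]
    max_score = max(gate_scores[i] for i in chosen)  # subtract max for numerical stability
    exps = {i: math.exp(gate_scores[i] - max_score) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def moe_forward(x, experts, gate_scores, top_k):
    """Combine the outputs of only the selected experts (scalar toy version)."""
    weights = top_k_route(gate_scores, top_k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Hypothetical toy setup: 8 experts, each a simple scalar function; 2 run per token.
experts = [lambda x, k=k: x * (k + 1) for k in range(8)]
gate_scores = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.3, 1.0]

weights = top_k_route(gate_scores, top_k=2)
output = moe_forward(3.0, experts, gate_scores, top_k=2)
active_fraction = len(weights) / len(experts)

print(f"experts used: {sorted(weights)}, active fraction: {active_fraction:.0%}")
```

In this toy setup only 2 of the 8 experts run for the token, so a quarter of the expert parameters do any work. The same idea, at a much larger scale and with a learned router, is why a model can hold far more parameters than it activates.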

Qwen3's deployment cost has also dropped sharply: the full version requires only 4 H20 GPUs, and its GPU memory usage is just one-third that of comparable models.

What does Alibaba's release of open source models signify for the industry? How capable are open source models? Where will the competition for large models head in the future?

#01 Catching Up: The Capabilities of Open Source Large Models

Open source large models are closing the gap with closed source models.

This is the consensus among AI entrepreneurs, large model developers from major companies, and investors.

While closed source models still lead today, the gap between open source and closed source models is narrowing at a pace that has surprised the industry.

"Closed source models may have been the first to score 90, but open source models can now reach the same score," said a large model developer. The Scaling Law has its limits: the larger the model, the more the cost of each further capability gain rises, exponentially so, which gives open source models time to catch up.

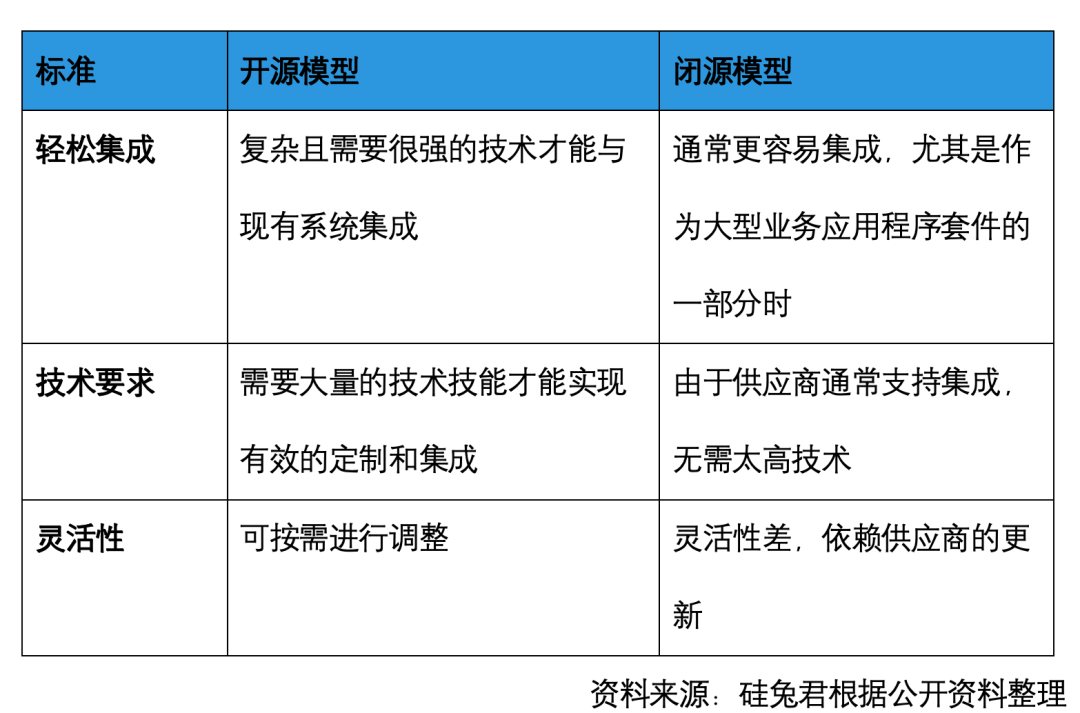

What exactly does an open source model offer? How does it differ from open source software? And what sets it apart from closed source models?

Open source software typically publishes its entire source code for anyone to view and modify, so later developers can readily reproduce its functionality. Open source models, however, generally release only their parameters (weights), which makes it hard to know what data was used or how fine-tuning and alignment were done. Closed source models, by contrast, provide a complete solution. Open source models are like raw ingredients: chefs must prepare their own tools and menus and work out the cooking methods themselves. Closed source models are pre-cooked dishes, ready to heat and serve.

The advantage of open source models is their flexibility, allowing more developers to participate in model development, enhancing performance, and perfecting the ecosystem. This can save model companies significant labor and time costs and provide a cost-effective solution for users.

However, the cost advantage of open source models is most pronounced in the early stages. For instance, the closed source model GPT-4 costs approximately $10 per million input tokens and $30 per million output tokens. The open source model Llama-3-70B, by contrast, costs around 60 cents per million input tokens and 70 cents per million output tokens, making it well over 10 times cheaper with minimal performance differences. Subsequent deployment, however, requires strong technical skills and investment.
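To make the price gap concrete, here is a small sketch that applies the per-million-token prices quoted above to a workload. The workload size and the helper name `cost_usd` are assumptions for illustration; actual provider pricing varies and changes over time.

```python
def cost_usd(input_tokens, output_tokens, input_price, output_price):
    """Cost in USD; prices are per million tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000

# Per-million-token prices quoted in the article (USD).
GPT4 = {"input_price": 10.00, "output_price": 30.00}
LLAMA3_70B = {"input_price": 0.60, "output_price": 0.70}

# Hypothetical workload: 1M input tokens and 1M output tokens.
workload = {"input_tokens": 1_000_000, "output_tokens": 1_000_000}

gpt4_cost = cost_usd(**workload, **GPT4)
llama_cost = cost_usd(**workload, **LLAMA3_70B)
ratio = gpt4_cost / llama_cost

print(f"GPT-4: ${gpt4_cost:.2f}, Llama-3-70B: ${llama_cost:.2f}, ratio: {ratio:.1f}x")
```

The ratio depends on the mix of tokens: on these prices it is about 17x for input alone and about 43x for output alone, so a balanced workload lands in between.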

Alibaba's Qwen3 addresses this investment problem. Its deployment cost is 25% to 35% of that of the full version of R1, a reduction the company puts at 60% to 70%. The flagship Qwen3 model has 235B parameters with 22B activated and requires roughly 4 H20s or equivalent GPUs. By comparison, the full DeepSeek-R1 has 671B parameters with 37B activated. It can run on a single 8-GPU H20 server (around 1 million yuan) but is tight on resources, so a 16-GPU H20 setup, at a total price of about 2 million yuan, is generally recommended.
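A rough back-of-the-envelope calculation shows why the 8-GPU setup is "tight" for the larger model. The sketch below counts only the memory needed to hold the weights and ignores the KV cache and activations. Two inputs are assumptions that the article does not state: 96 GB of memory per GPU and 1 byte per parameter (8-bit weights).

```python
GPU_MEMORY_GB = 96    # assumption: memory per GPU, not stated in the article
BYTES_PER_PARAM = 1   # assumption: 8-bit weights

def weight_memory_gb(total_params_billion, bytes_per_param=BYTES_PER_PARAM):
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return total_params_billion * bytes_per_param

def headroom_fraction(total_params_billion, gpu_count, gpu_memory_gb=GPU_MEMORY_GB):
    """Share of total GPU memory left over after loading the weights."""
    total = gpu_count * gpu_memory_gb
    return (total - weight_memory_gb(total_params_billion)) / total

qwen3_4gpu = headroom_fraction(235, gpu_count=4)
r1_8gpu = headroom_fraction(671, gpu_count=8)
r1_16gpu = headroom_fraction(671, gpu_count=16)

for label, value in [("Qwen3 235B on 4 GPUs", qwen3_4gpu),
                     ("R1 671B on 8 GPUs", r1_8gpu),
                     ("R1 671B on 16 GPUs", r1_16gpu)]:
    print(f"{label}: {value:.0%} of memory left for cache and activations")
```

Under these assumptions, the 4-GPU Qwen3 setup keeps about 39% of its memory free, while R1 on 8 GPUs keeps only about 13%, which is consistent with the recommendation to move to 16 GPUs.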

In terms of inference, Qwen3's hybrid reasoning design lets developers set their own "thinking budget" for fine-grained control over thinking while still meeting performance requirements, which naturally lowers overall inference costs. For reference, the comparable Gemini-2.5-Flash model is priced roughly 6 times higher in reasoning mode than in non-reasoning mode, so users pay about one-sixth as much, a saving of roughly 83%, when they use the non-reasoning mode.
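A 6-fold price gap translates into savings as follows. This is a minimal arithmetic sketch; the 20/80 traffic split and the function names are assumptions for illustration, not figures from any provider.

```python
def saving_fraction(price_ratio):
    """Fraction saved by paying 1/price_ratio of the higher price."""
    return 1 - 1 / price_ratio

def blended_cost(reasoning_share, price_ratio):
    """Relative cost of a workload, where 1.0 means everything runs in reasoning mode.

    reasoning_share is the fraction of traffic billed at the reasoning rate.
    """
    return reasoning_share + (1 - reasoning_share) / price_ratio

PRICE_RATIO = 6  # reasoning vs non-reasoning price gap cited in the article

# All traffic moved to non-reasoning mode.
full_saving = saving_fraction(PRICE_RATIO)

# Hypothetical split: 20% of traffic needs reasoning, 80% does not.
mixed = blended_cost(reasoning_share=0.2, price_ratio=PRICE_RATIO)

print(f"max saving: {full_saving:.1%}, mixed workload cost: {mixed:.1%} of all-reasoning")
```

Moving everything to non-reasoning mode saves about 83%, not 600%. A workload that keeps reasoning for a fifth of its traffic still costs only about a third of running everything in reasoning mode.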

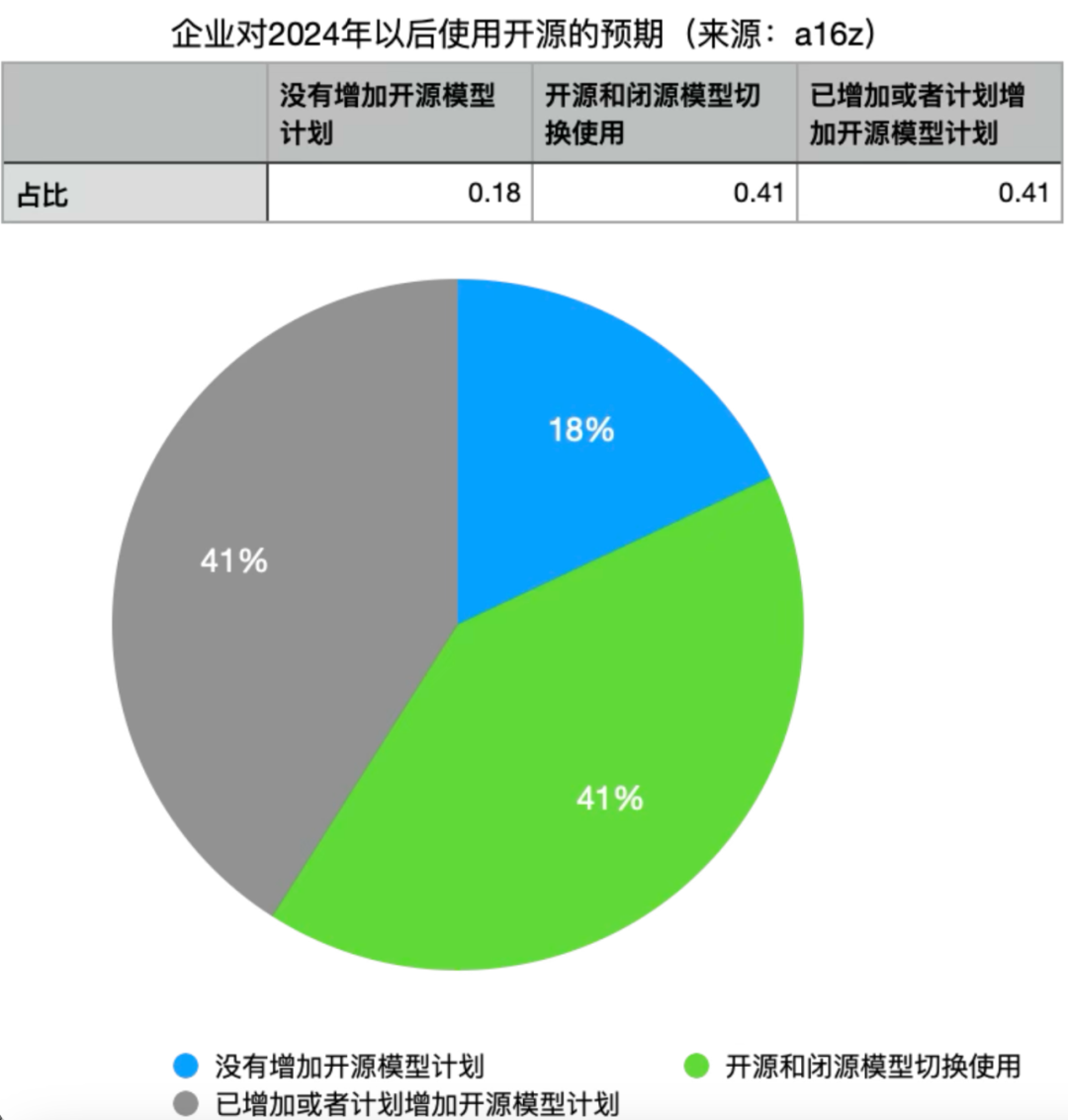

A large model developer from a major company told SiTuJun that open source models are ideal for teams with strong technical skills but limited budgets, such as academic institutions, while closed source models suit companies with fewer personnel but ample funds. As open source models improve, however, 41% of surveyed companies plan to increase their use of them, and another 41% say they will switch if performance is comparable. Only 18% do not plan to increase their use of open source LLMs.

Marc Andreessen, co-founder of a16z, noted that open source lets universities compete again, at a time when researchers worry that they lack the funds to stay relevant in AI. As more capable open source models emerge, universities and small companies can build their research on them without needing substantial funding.

SiTuJun Chart

#02 Eastern Enlightenment for Large Models

DeepSeek has showcased the capabilities of Chinese companies' open source models.

"DeepSeek represents lightweight, low-cost AI products," said an AI investor active in both China and the US. Tuning a Mixture-of-Experts (MoE) model, for instance, demands considerable skill. Mainstream models previously avoided MoE because of its complexity, but DeepSeek successfully tackled this challenge.

However, the ecosystem, meaning how many people actually use a model, is crucial for open source models, and switching between models is costly for users. After DeepSeek emerged, some Silicon Valley users of Meta's large models switched to it. "Latecomers must offer significant advantages over pioneers," said a large model developer. Only then will users write off their initial investment and move to a new open source model.

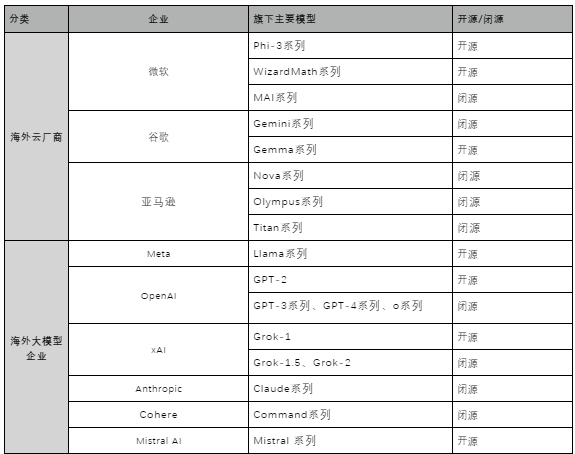

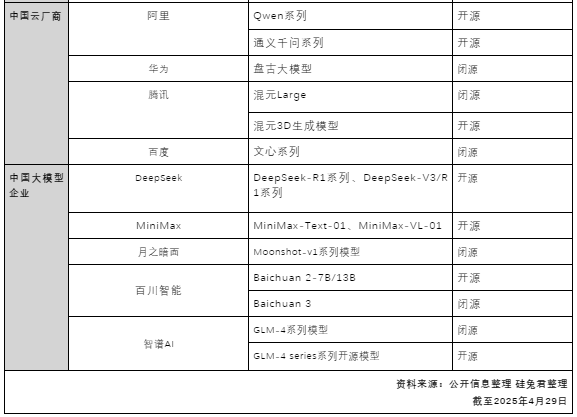

SiTuJun compiled the open source and closed source status of globally renowned models and found that, beyond Amazon, Microsoft, Google, Meta, and OpenAI, many companies have an open source model strategy. Some pursue a purely open source path, while others run open source and closed source in parallel. In China, Alibaba is the major company most committed to open source, having laid the groundwork long before DeepSeek released R1.

Open Source Status of Globally Renowned Models

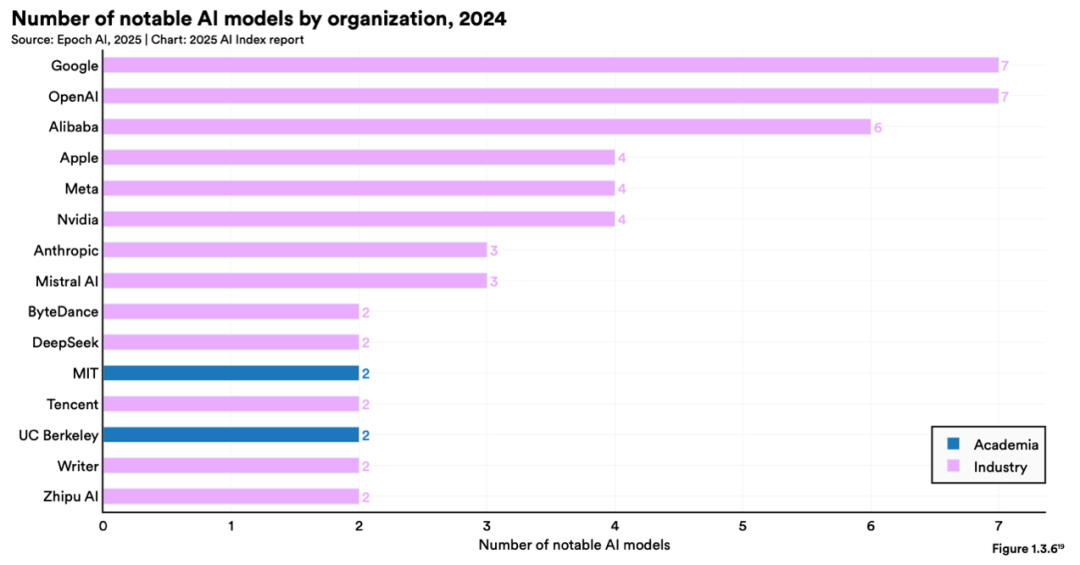

According to Fei-Fei Li's "Stanford AI Report 2025," by mid-2024 Alibaba had released 6 notable large AI models, ranking third globally behind Google and OpenAI, which tied for first with 7 each. That places Alibaba's AI contributions third worldwide in the report's ranking of important large models in 2024.

Source: "Stanford AI Report 2025"

Qwen3, released on April 29th as the latest generation of the Tongyi Qianwen series, offers a range of dense and Mixture-of-Experts (MoE) models. It has made significant progress in reasoning, instruction following, agent capabilities, and multilingual support, featuring:

1) Unique Hybrid Reasoning: Seamlessly switches between thinking mode (for complex logical reasoning, mathematics, and coding) and non-thinking mode (for efficient general dialogues), ensuring optimal performance in various scenarios.

2) Enhanced Reasoning Abilities: Surpasses previous models like QwQ (in thinking mode) and Qwen2.5-Instruct (in non-thinking mode) in mathematics, code generation, and commonsense logical reasoning.

3) Better Alignment with Human Preferences: Excels in creative writing, role-playing, multi-turn dialogues, and instruction following, providing a more natural, engaging, and immersive dialogue experience.

4) Outstanding Agent Capabilities: Precisely integrates external tools in both thinking and non-thinking modes, leading among open source models in complex agent-based tasks.

5) Powerful Multilingual Capabilities: Supports 119 languages and dialects, with strong multilingual instruction following and translation abilities.

Hybrid reasoning folds a top-tier reasoning model and a non-reasoning model into a single model, which demands intricate design and training. Among popular models, only Qwen3, Claude 3.7, and Gemini 2.5 Flash currently achieve this.

Specifically, in "thinking mode," the model performs more intermediate steps like problem decomposition, step-by-step derivation, and answer verification, providing thoughtfully considered answers. In "non-thinking mode," it directly generates answers. The same model accomplishes both "fast thinking" and "slow thinking," mirroring human behavior where simple questions are quickly answered based on experience or intuition, and complex problems require deep thought.

Qwen3 also lets developers set a "thinking budget" (the expected maximum number of thinking tokens) through the API, so the model thinks to varying degrees and developers and institutions can balance performance against cost.
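The effect of such a budget can be sketched with a small simulation. This is not the Qwen3 or Alibaba Cloud API: the names `generate_with_budget` and `GenerationResult` are hypothetical, and the sketch assumes that thinking tokens are billed like ordinary output tokens and that a budget of 0 stands in for non-thinking mode.

```python
from dataclasses import dataclass

@dataclass
class GenerationResult:
    thinking_tokens: int   # thinking tokens actually produced
    answer_tokens: int     # tokens in the final answer
    truncated: bool        # True if the budget cut the thinking short

def generate_with_budget(needed_thinking_tokens, answer_tokens, thinking_budget):
    """Simulate one request whose thinking is capped at thinking_budget tokens."""
    used = min(needed_thinking_tokens, thinking_budget)
    return GenerationResult(used, answer_tokens, truncated=used < needed_thinking_tokens)

def billed_output_tokens(result):
    """Assumption: thinking tokens are billed like ordinary output tokens."""
    return result.thinking_tokens + result.answer_tokens

# Hypothetical hard question that would use 5,000 thinking tokens if unconstrained.
results = {
    budget: generate_with_budget(5000, answer_tokens=300, thinking_budget=budget)
    for budget in (0, 1024, 8192)
}

for budget, result in results.items():
    print(budget, billed_output_tokens(result), "truncated" if result.truncated else "complete")
```

A small budget keeps the bill predictable at the risk of cutting off reasoning on hard problems, while a large budget lets the model finish thinking and costs more. That is the trade-off the budget exposes.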

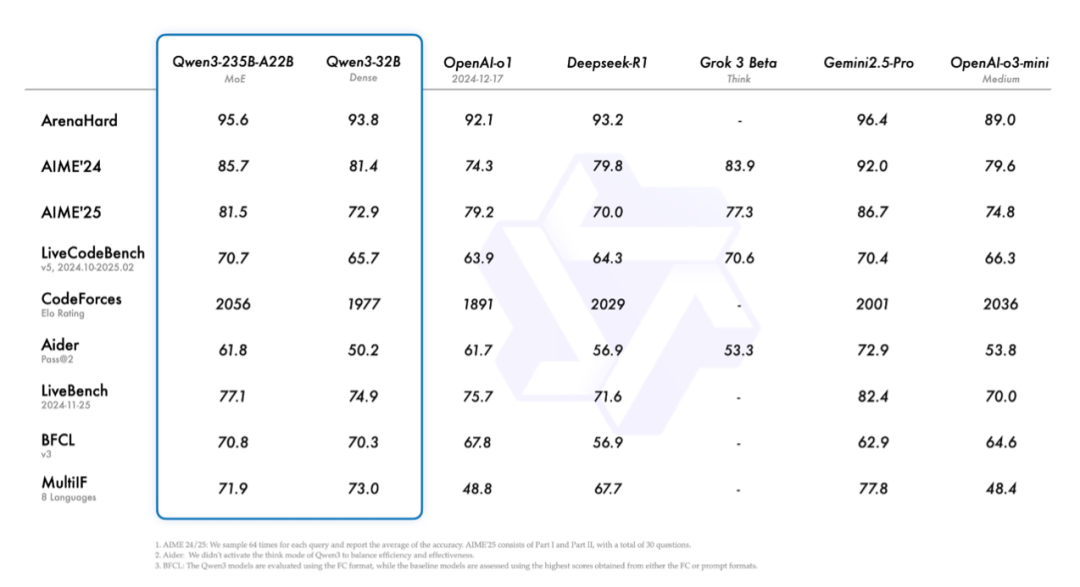

Qwen3 Performance

For China, open source models can attract more customers than closed source ones: closed source models primarily serve the domestic market, while open source models are also accessible to foreign companies. For example, users can run DeepSeek R1 through Perplexity, a US-based company, with the model hosted entirely in US data centers.

#03 The Second Half of Large Models

In March 2023, at the Exploratorium in San Francisco, an open source AI event featured alpacas wandering around, paying homage to Meta's open source large language model "LLaMA".

Since 2023, generative AI has evolved rapidly, and public attention has shifted from foundational models to AI-native applications. At YC W25's Demo Day, 80% of projects were AI applications.

"Open source models will propel the deployment of more agents," multiple industry insiders told SiTuJun. For one thing, open source lowers both the cost and the barrier to entry.

Qwen3, for instance, has robust tool-calling capabilities, scoring a new high of 70.76 on the Berkeley Function-Calling Leaderboard (BFCL), which significantly lowers the barrier for agents to invoke tools. It also works with the open source Qwen-Agent framework, which brings out Qwen3's full agent potential. Qwen-Agent is a framework for developing LLM applications that builds on Qwen's instruction following, tool use, planning, and memory capabilities. It encapsulates tool-calling templates and parsers and ships with example applications such as a browser assistant, a code interpreter, and a custom assistant, which greatly reduces coding complexity.

Qwen3 also natively supports the MCP protocol. Developers can define the available tools in an MCP configuration file, use the tools built into Qwen-Agent, or integrate their own, and so quickly build agents with custom settings, a knowledge base (RAG), and tool use.
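The parse-and-dispatch step that an agent framework wraps can be sketched in a few lines. This is a generic illustration, not Qwen-Agent's actual interface: the JSON call format, the `tool` decorator, the `dispatch` function, and the two example tools are all hypothetical.

```python
import json

TOOLS = {}  # registry mapping tool names to Python functions

def tool(name):
    """Decorator that registers a function under a tool name."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register

@tool("add")
def add(a, b):
    return a + b

@tool("word_count")
def word_count(text):
    return len(text.split())

def dispatch(tool_call_json):
    """Parse a model-emitted tool call and run the matching function.

    Expects JSON such as {"name": "...", "arguments": {...}} and returns a JSON
    string that would be passed back to the model as the tool result.
    """
    try:
        call = json.loads(tool_call_json)
        fn = TOOLS[call["name"]]
        result = fn(**call.get("arguments", {}))
        return json.dumps({"ok": True, "result": result})
    except json.JSONDecodeError:
        return json.dumps({"ok": False, "error": "malformed tool call"})
    except KeyError as exc:
        return json.dumps({"ok": False, "error": f"unknown tool or field: {exc}"})
    except TypeError as exc:
        return json.dumps({"ok": False, "error": f"bad arguments: {exc}"})

print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
print(dispatch('{"name": "search", "arguments": {}}'))
```

Returning errors as structured results rather than raising them lets the model see what went wrong and retry, which is one reason function-calling accuracy matters for agents.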

Moreover, Alibaba's Qianwen 3 comes in a range of model sizes, which makes it easier to deploy on smart devices and in scenarios such as mobile phones, smart glasses, intelligent driving, and humanoid robots. Any enterprise can download the Qianwen 3 series models for free and use them commercially, which will significantly accelerate the deployment of large AI models on end devices.

Some practitioners argue that closed source models do not adequately address trust issues in the enterprise (To B) segment. Many large enterprises hesitate to connect their businesses to third-party large model APIs for fear that their core data could end up in those models' training data. This creates an opportunity for open source models.

One perspective views open sourcing as a marketing strategy for early-stage products before beta testing. When the future is uncertain, releasing the source code first can attract developers. As people begin using it, best practices emerge, gradually forming an ecosystem.

However, industry insiders suggest that because the commercial chain of open source models is longer and less clear than that of closed source models, open source is better suited to resource-rich "second-generation" companies, meaning those backed by a wealthy parent business. Meta's open source models, for instance, mainly serve to build an ecosystem that supports Meta's other business segments. Alibaba's open source logic centers on cloud services: with robust cloud infrastructure, Alibaba can train large models and deploy them on its own cloud, and can even customize exclusive large models for individual users' deployments, which makes for a clear business logic.

"My model is to let large companies, small companies, and open source compete with each other. This is the norm in the computer industry," said Marc Andreessen. As large models increasingly become standardized products akin to water, electricity, and coal, open source may well be the future direction.