Understanding the World Model in Autonomous Driving

06/27 2025

As autonomous driving technology matures, vehicles must navigate complex and ever-changing road environments safely. This necessitates a system that not only "sees" but also "understands" and "predicts" future changes. The world model is a technology that abstracts and models the external environment, enabling the autonomous driving system to describe and predict the real world within a concise internal representation, thereby supporting key aspects such as perception, decision-making, and planning.

What is a world model?

Imagine the "world model" as a fusion of a "digital map" and a "future oracle." Traditional maps provide static information like location and road shapes, but the world model goes further by recording current road conditions and simulating potential changes in the next few seconds or minutes. For instance, an autonomous vehicle on an urban road continuously captures data on pedestrians, other vehicles, and traffic lights using cameras, LiDAR, and other sensors. The world model converts this input data into a smaller, more abstract internal "state," akin to compressing a high-resolution street view into digital codes.

When judging whether the vehicle ahead is decelerating or accelerating, or whether a pedestrian might cross, the system simulates different outcomes in this "digital space" and picks the safest option. Predicting directly on raw camera, LiDAR, or radar data would be slow and computationally intensive. By first "compressing" the environment into a low-dimensional representation and reasoning in that space, the system runs more efficiently and copes better with sensor noise.
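The idea of "simulating different outcomes and choosing the safest" can be sketched with a toy car-following example. Everything here is an assumption made for illustration: the function names, the 5 m safety gap, and above all the hand-written kinematic update, which stands in for the learned latent dynamics a real world model would use.

```python
from typing import Sequence


def min_gap_during_rollout(gap_m: float, ego_speed: float, lead_speed: float,
                           ego_accel: float, lead_accel: float,
                           horizon_s: float = 3.0, dt: float = 0.1) -> float:
    """Roll the toy dynamics forward and return the smallest gap seen (metres)."""
    smallest = gap_m
    for _ in range(int(round(horizon_s / dt))):
        # Speeds are clamped at zero: vehicles brake to a stop, not into reverse.
        ego_speed = max(0.0, ego_speed + ego_accel * dt)
        lead_speed = max(0.0, lead_speed + lead_accel * dt)
        gap_m += (lead_speed - ego_speed) * dt
        smallest = min(smallest, gap_m)
    return smallest


def choose_acceleration(gap_m: float, ego_speed: float, lead_speed: float,
                        lead_accel_hypotheses: Sequence[float],
                        candidate_accels: Sequence[float],
                        safe_gap_m: float = 5.0) -> float:
    """Return the largest candidate acceleration whose worst-case gap stays safe.

    Each candidate is evaluated against every hypothesis about the lead
    vehicle. If no candidate is safe, fall back to the hardest braking option.
    """
    for accel in sorted(candidate_accels, reverse=True):
        worst_gap = min(
            min_gap_during_rollout(gap_m, ego_speed, lead_speed, accel, hypothesis)
            for hypothesis in lead_accel_hypotheses
        )
        if worst_gap >= safe_gap_m:
            return accel
    return min(candidate_accels)


if __name__ == "__main__":
    hypotheses = [-4.0, 0.0, 1.0]          # lead brakes, holds speed, speeds up (m/s^2)
    candidates = [-6.0, -3.0, 0.0, 1.0]    # ego options (m/s^2)
    print(choose_acceleration(20.0, 15.0, 15.0, hypotheses, candidates))
```

Evaluating each candidate against the worst hypothesis is a deliberately conservative design choice; a production planner would more likely weight hypotheses by probability.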

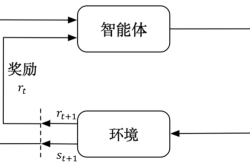

This "abstraction and simulation" is achieved through neural networks. The process involves three steps: "compression," converting high-dimensional sensory data into a concise vector; "prediction," learning how the environment changes over time in this vector space; and "restoration," decoding the predicted vector back into images or other visual information to assess simulation accuracy.
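To make the three steps concrete, here is a minimal sketch in which each step is a hand-written function. In a real world model all three would be learned neural networks; the pair-averaging encoder, the constant-velocity predictor, and the duplicating decoder are assumptions chosen only so the example stays short and runnable.

```python
from typing import List

Vector = List[float]


def encode(observation: Vector) -> Vector:
    """Compression: average neighbouring pairs, halving the dimension."""
    return [(observation[i] + observation[i + 1]) / 2.0
            for i in range(0, len(observation) - 1, 2)]


def predict(latent: Vector, latent_velocity: Vector, dt: float = 0.1) -> Vector:
    """Prediction: advance the latent state, assuming a constant rate of change."""
    return [z + v * dt for z, v in zip(latent, latent_velocity)]


def decode(latent: Vector) -> Vector:
    """Restoration: duplicate each latent value to recover the original size."""
    restored: Vector = []
    for z in latent:
        restored.extend([z, z])
    return restored


def mean_squared_error(a: Vector, b: Vector) -> float:
    """Compare a decoded prediction with what the sensors actually reported."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    frame_now = [1.0, 3.0, 2.0, 2.0]     # stand-in for a sensor frame
    frame_next = [2.5, 2.5, 2.0, 2.0]    # the frame observed one step later
    latent = encode(frame_now)
    latent_next = predict(latent, latent_velocity=[1.0, 0.0], dt=0.5)
    print(mean_squared_error(decode(latent_next), frame_next))
```

The final error is the "assess simulation accuracy" step: if the decoded prediction drifts from the real next frame, the prediction model needs more training.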

In academia and industry, this encoding-prediction-decoding approach is often implemented with a Variational Autoencoder (VAE) or with the Recurrent State Space Model (RSSM), which builds on the same latent-variable idea. A VAE compresses camera images into "latent vectors" and reconstructs similar images from these vectors. An RSSM adds a temporal dimension, using recurrent neural networks (such as LSTM or GRU) to capture dynamic changes between frames. This allows the world model to maintain a stable digital representation of the current environment and make both short- and long-term predictions.
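Two ingredients of a VAE's latent vector can be shown without any deep learning library: drawing a latent sample from the encoder's predicted mean and log-variance (the reparameterization trick), and the KL penalty that keeps the latent distribution close to a standard normal. The function names are illustrative, and the encoder outputs are passed in as plain lists rather than produced by a network.

```python
import math
import random
from typing import List


def sample_latent(mu: List[float], log_var: List[float],
                  rng: random.Random) -> List[float]:
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: List[float], log_var: List[float]) -> float:
    """KL divergence between N(mu, diag(sigma^2)) and N(0, I)."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))


if __name__ == "__main__":
    rng = random.Random(0)
    mu, log_var = [0.3, -1.2], [-0.5, 0.1]   # made-up encoder outputs
    print(sample_latent(mu, log_var, rng))
    print(kl_to_standard_normal(mu, log_var))
```

In an RSSM-style model, a sample like this would be combined with a recurrent hidden state at every time step; that part is omitted here.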

Why does autonomous driving need a world model?

Applying the world model to autonomous driving simulation training allows computers to "practice" before real-world deployment. Traditional "model-free training" requires extensive trial and error in real or high-fidelity simulated environments, consuming significant resources and time. The "model-based training" approach instead uses real driving data to train a model that closely replicates the real world. The driving algorithm then undergoes reinforcement learning and policy optimization inside this model, reducing reliance on real vehicles and roads. This is akin to pilots training in simulators before flying: it improves safety and reduces costs. Once the world model accurately reflects real traffic rules and dynamics, the autonomous system can use it to learn hazard avoidance, car following, overtaking, and emergency response faster, without needing constant road testing.

Due to varying traffic conditions, algorithms trained in fixed scenarios may perform poorly in new environments. The world model simulates various scene changes in latent space, including urban roads during peak hours, dimly lit suburban roads at night, flooded roads during rain, and extreme situations like sudden accidents. By integrating different scenarios within a single model, autonomous driving algorithms can practice various extreme conditions during "internal simulation," improving adaptability and robustness when facing real-world scenarios. The world model serves as a versatile training ground, helping algorithms "practice" under complex situations and enhancing their generalization capabilities.

Deploying the world model on automotive hardware raises its own technical questions. On-board computing units (such as ECUs) have limited computing power and memory, so the trained model must be pruned, quantized, or compressed with techniques like knowledge distillation to keep latency low during real-time operation. Manufacturers often rely on specialized hardware acceleration platforms, such as NVIDIA DRIVE or the Xavier module, to run deep neural network models on dedicated chips. In this integrated hardware-software architecture, the vehicle can run the world model's encoding and prediction steps within milliseconds, providing fast and reliable "future scenario" information to the decision-making module. If a pedestrian is predicted to cross within the next three seconds, the vehicle can quickly calculate the optimal braking or steering plan to ensure safety.
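Of the compression techniques mentioned, quantization is the easiest to illustrate. The sketch below applies symmetric per-tensor int8 quantization to a short list of weights. It is an illustrative assumption about one common scheme, not a description of any particular vendor toolchain, and the function names are made up for this example.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.013]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst_error = max(abs(w - r) for w, r in zip(weights, restored))
    print(q, scale, worst_error)
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale per weight. Whether that error is acceptable has to be checked against the model's prediction accuracy.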

Difficulties in deploying the world model

Implementing the world model successfully and realizing its advantages presents several challenges. The first major challenge is data collection and diversity. To replicate the real world accurately, the model requires a vast amount of high-quality data covering a wide range of roads, weather conditions, and traffic densities. However, extreme or risky scenarios, such as road flooding during heavy rain or vehicles losing control, rarely occur often enough in real environments to yield sufficient samples. One proposed remedy is to combine real data with simulation data: first generate "supplementary samples" of extreme conditions with virtual simulators, then fine-tune on real data. Domain adaptation techniques are also employed to reduce the performance gap when the model moves between different data sources.

The second major challenge is the accumulation of errors in long-term predictions. Because the world model predicts each step from the result of the previous step, small errors compound and the prediction can drift significantly from the real environment. This is manageable for short-term predictions but needs attention in longer-term planning. One remedy is to combine autoregressive rollout with "teacher forcing" during training, so that the model learns from its own predicted outputs while being corrected with real data from time to time. Penalties for multi-step prediction errors are also added to the loss function, making the model more sensitive to stability over long horizons. During real-world testing, if the model's prediction deviates significantly from real observations, an online calibration mechanism is activated to correct the model state and prevent long-term error accumulation.
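The mix of teacher forcing and self-fed rollout can be sketched on a one-dimensional state. This resembles what the literature calls scheduled sampling, though the source does not name a specific method, so treat the details as assumptions: `rollout_loss`, `recalibrate`, and the threshold are hypothetical names and values chosen for illustration.

```python
import random
from typing import Callable, Sequence


def rollout_loss(step_model: Callable[[float], float],
                 observed: Sequence[float],
                 teacher_forcing_prob: float,
                 rng: random.Random) -> float:
    """Mean squared multi-step error over one observed trajectory.

    After each step the next input is the real observation with probability
    `teacher_forcing_prob`, otherwise the model's own prediction.
    """
    if len(observed) < 2:
        raise ValueError("need at least two observations")
    state = observed[0]
    total = 0.0
    for t in range(1, len(observed)):
        prediction = step_model(state)
        total += (prediction - observed[t]) ** 2
        use_truth = rng.random() < teacher_forcing_prob
        state = observed[t] if use_truth else prediction
    return total / (len(observed) - 1)


def recalibrate(predicted: float, observed: float, threshold: float) -> float:
    """Online calibration: snap back to the observation if the drift is too large."""
    return observed if abs(predicted - observed) > threshold else predicted


if __name__ == "__main__":
    rng = random.Random(0)
    slightly_wrong_model = lambda x: 1.1 * x   # overestimates by 10% per step
    trajectory = [1.0, 1.0, 1.0, 1.0]
    print(rollout_loss(slightly_wrong_model, trajectory, 1.0, rng))  # always corrected
    print(rollout_loss(slightly_wrong_model, trajectory, 0.0, rng))  # errors compound
```

With a probability of 1.0 the per-step error never compounds, while at 0.0 the same 10% bias grows at every step. Training on a mixture exposes the model to its own drift without letting rollouts diverge entirely.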

The third major challenge is ensuring interpretability and safety in the world model. Autonomous driving is a safety-critical system. If the model's "latent vectors" are unintelligible, it's difficult to trace the root cause of abnormal decisions. Furthermore, the model could be disrupted by adversarial attacks, causing it to output drastically different predictions for the same road condition. To address this, interpretability designs can be incorporated, such as having latent vectors specifically correspond to lane lines, traffic signs, or other geometric information, making part of the model a "white box" component. Additionally, large-scale adversarial sample testing is conducted before deployment to assess robustness under noise or tampering, and security checks are performed on the latent vector space to ensure timely emergency braking or safety warnings under abnormal inputs.
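One simple form a "security check on the latent vector space" could take is an out-of-distribution test: record per-dimension statistics of latent vectors seen during validation, then flag any runtime vector that falls far outside them. This is a sketch under assumptions. The z-score rule, the threshold of 4.0, and the function names are illustrative choices, not something the source specifies.

```python
import statistics
from typing import List, Sequence, Tuple


def fit_latent_stats(latents: Sequence[Sequence[float]]
                     ) -> Tuple[List[float], List[float]]:
    """Per-dimension mean and sample standard deviation of reference latents."""
    columns = list(zip(*latents))
    means = [statistics.mean(column) for column in columns]
    # A small floor avoids division by zero for dimensions that never vary.
    stdevs = [max(statistics.stdev(column), 1e-8) for column in columns]
    return means, stdevs


def is_anomalous(latent: Sequence[float], means: Sequence[float],
                 stdevs: Sequence[float], z_threshold: float = 4.0) -> bool:
    """True if any dimension lies more than `z_threshold` deviations from its mean."""
    return any(abs(z - m) / s > z_threshold
               for z, m, s in zip(latent, means, stdevs))


if __name__ == "__main__":
    reference = [[0.0, 1.0], [0.2, 1.2], [-0.2, 0.8], [0.1, 0.9]]
    means, stdevs = fit_latent_stats(reference)
    print(is_anomalous([0.05, 1.0], means, stdevs))
    print(is_anomalous([5.0, 1.0], means, stdevs))
```

A flagged vector would then trigger the fallback behavior described above, such as a safety warning or emergency braking, rather than being passed to the planner.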

Future trends of the world model

With the development of self-supervised learning and multi-source data fusion technologies, the world model will be further optimized. Currently, most world models require labeled or weakly labeled data; in the future, models will mine temporal and spatial patterns from unlabeled driving videos using contrastive learning, ensuring latent representations remain consistent across different times or perspectives, enabling continuous improvement without manual annotations. Moreover, future world models are expected to integrate symbolic reasoning, expressing traffic rules, road network topology, and driving intentions using logical symbols that complement neural network representations. This "hybrid" world model will be more stable and reliable, facilitating regulatory and safety certifications. With the prevalence of Vehicle-to-Everything (V2X) technology, the world model can collaboratively perceive with the cloud and other vehicles, enabling real-time online updates. When large-scale congestion or accidents occur, road condition information from other vehicles and cloud-based high-precision map updates can be immediately fed back to each vehicle's world model, allowing them to quickly adjust predictions and enhance sensitivity to extreme situations.
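The contrastive learning idea, keeping latent representations consistent across times or viewpoints, is commonly expressed with an InfoNCE-style loss. The sketch below computes it for one anchor vector in plain Python. Treat it as an illustration of the general technique rather than the loss any specific world model uses; the temperature of 0.1 and the example vectors are assumptions.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(anchor: Sequence[float], positive: Sequence[float],
             negatives: Sequence[Sequence[float]],
             temperature: float = 0.1) -> float:
    """Low when the anchor is closer to the positive than to every negative."""
    positive_term = math.exp(cosine_similarity(anchor, positive) / temperature)
    negative_terms = sum(math.exp(cosine_similarity(anchor, n) / temperature)
                         for n in negatives)
    return -math.log(positive_term / (positive_term + negative_terms))


if __name__ == "__main__":
    frame_t = [1.0, 0.0]            # latent of a frame
    frame_t_next = [0.9, 0.1]       # latent of the next frame, same scene
    other_scenes = [[0.0, 1.0], [-1.0, 0.0]]
    print(info_nce(frame_t, frame_t_next, other_scenes))
```

Minimizing this loss pulls latents of adjacent frames together and pushes latents of unrelated scenes apart, which is how structure can be learned from unlabeled driving video.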

The world model equips the autonomous driving system with the ability to "simulate in the mind," enabling vehicles to make multi-step predictions of the future environment within a smaller, more efficient internal space. This accelerates decision-making, reduces misjudgment risks, and enhances performance in diverse and complex road scenarios. However, to maximize these benefits, continuous optimization and breakthroughs are needed in data collection, long-term prediction stability, interpretability, safety, and deployment efficiency on the vehicle side. With advancements in deep learning, hardware acceleration, and V2X technologies, the world model will play an increasingly critical role in autonomous driving, helping us achieve safer and more intelligent self-driving experiences.
