Rivian's 2025 Autonomy & AI Day: America's Rising Star Unveils a Self-Developed 800 TOPS Chip and an L4 "Eyes-Free" Autonomous Driving Roadmap

12/15 2025

In China, nearly everyone working on assisted driving focuses on the technical and product approach of Tesla's FSD. Recently, however, Rivian, a rising star in the United States, dropped a bombshell at its Autonomy & AI Day.

It not only introduced the Autonomy+ driver-assistance package at a highly competitive $2,500 but, more importantly, unveiled a comprehensive and aggressive roadmap that relies heavily on in-house hardware.

This is no longer a race of software upgrades but a battle of underlying architectures: AI chips, three-dimensional world models, and data flywheel efficiency.

From developing the 800 TOPS RAP1 chip in-house, to adopting LiDAR for the first time, to building an end-to-end Large Driving Model (LDM), Rivian is turning itself into a true "AI-defined car" player.

This in-depth analysis walks through the technical and product details: Rivian's Gen 3 hardware platform, its end-to-end software model, and its ambitious goals of "point-to-point autonomous driving" starting in 2026 and, after that, "hands-free and eyes-free" driving.

Overall Technical and Product Roadmap

Rivian's Autonomy+ driver-assistance package costs $2,500 as a one-time payment or $49.99 per month. It includes a general hands-free driving feature usable on more than 3.5 million miles of roads in the United States and Canada. For comparison, Tesla FSD costs $8,000 in North America, or $99 per month by subscription.
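To make the price comparison concrete, the short calculation below works out how many months of subscription add up to the one-time price. It uses only the four prices quoted above; the function name `breakeven_months` is illustrative, and taxes, promotions, and price changes are ignored.

```python
# Break-even between a one-time purchase and a monthly subscription,
# using only the prices quoted in this article.

def breakeven_months(one_time_usd: float, monthly_usd: float) -> float:
    """Months of subscription that cost as much as the one-time price."""
    return one_time_usd / monthly_usd

rivian = breakeven_months(2500, 49.99)  # Rivian Autonomy+
tesla = breakeven_months(8000, 99)      # Tesla FSD, as quoted above

print(f"Rivian Autonomy+: {rivian:.1f} months")  # 50.0 months
print(f"Tesla FSD:        {tesla:.1f} months")   # 80.8 months
```

On these numbers, buying Autonomy+ outright pays off after a little over four years of subscription, versus nearly seven years for FSD.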

Point-to-Point Navigation (P2P, starting in 2026): The vehicle can fully autonomously navigate from one address to another.

Fully Eyes-Free: The next major step after P2P, where you can take your hands off the wheel and your eyes off the road, looking at your phone or reading a book, without needing to actively participate in driving.

Personal L4 Autonomous Driving: In subsequent iterations, the vehicle will operate fully autonomously, able to pick up and drop off children or pick you up from the airport.

Overall, from a macro perspective, the product roadmap starts from today's L2 experience and upgrades step by step toward L4 autonomous driving. This is in line with the current mainstream consensus.

In-Depth Analysis of Rivian Gen 3 Hardware System

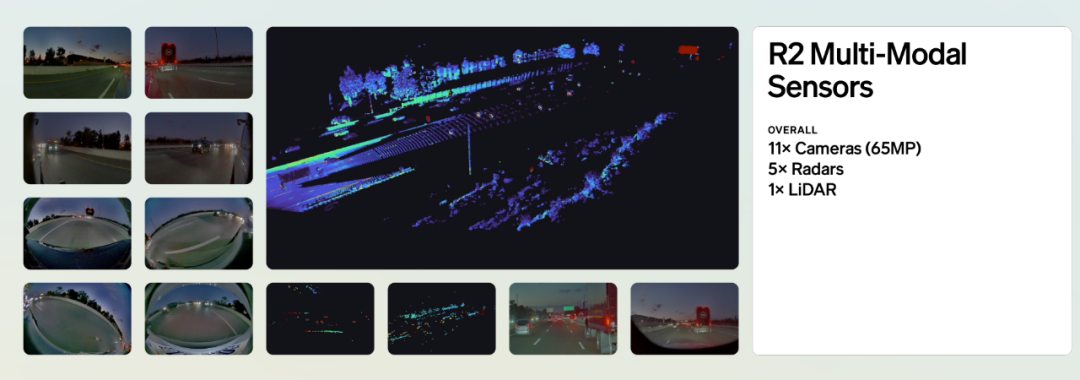

Rivian's Gen 3 Autonomy system will launch on the R2 vehicle platform at the end of next year (2026). The hardware centers on three areas: sensors, a self-developed chip, and overall product integration.

Rivian adopts a multimodal sensor strategy to provide AI models with rich and diverse datasets.

Cameras: Rivian's third-generation assisted driving hardware platform is equipped with 11 cameras, providing a total of 65MP of data, 10MP more than the R1. Cameras are the mainstay of the sensor suite, generating most of the data required by the model.

Radar: Rivian's third-generation assisted driving hardware retains 5 radars (1 high-resolution front radar and 4 corner radars), compensating for the weaknesses of cameras under non-ideal lighting conditions (low light, bright light, or fog). Because radar works at radio frequencies, it can see in complete darkness, and it measures the range and speed of objects. Rivian's corner radars support both short-range and long-range modes with high spatial resolution, which allows Rivian to eliminate ultrasonic sensors.

LiDAR: Added for the first time in Rivian's third-generation assisted driving hardware. It is an optical sensor with an active light source, capable of seeing better in the dark. Another advantage is that it provides a 3-dimensional view of the world, while cameras provide a 2-dimensional view (requiring AI models to infer depth with lower accuracy).

Rivian states that radar and LiDAR are highly beneficial in filling the "gaps" in camera perception that would otherwise trigger long-tail issues.

The LiDAR in Rivian's third-generation assisted driving hardware platform is well-integrated into the front design of the vehicle, avoiding the common abrupt "taxi roof" structure.

Reportedly, Rivian's LiDAR may come from an American supplier such as Luminar. Chinese LiDAR companies such as Robosense, which had been discussed previously, are highly unlikely in the current geopolitical environment.

Gen 3 Autonomous Driving Computing Platform and RAP1 Self-Developed Chip

Previously, Rivian used NVIDIA's Orin series chips for its assisted driving system, as many Chinese automakers do.

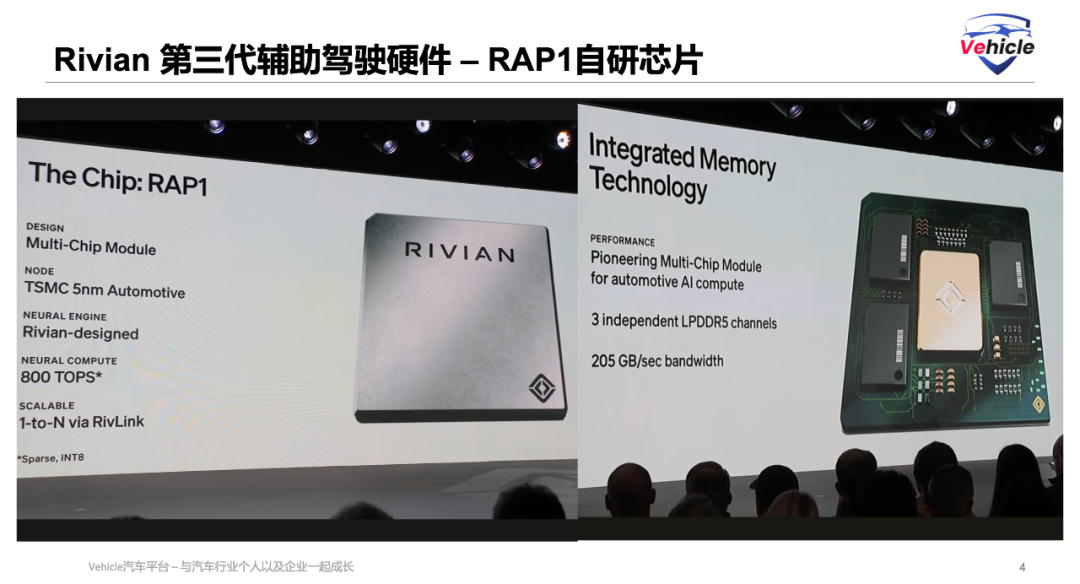

The newly introduced Gen 3 autonomous driving chip is called the Rivian Autonomy Processor (RAP1). It is the first in a series of chips built for physical AI and uses a Multi-Chip Module (MCM) architecture that integrates Rivian's silicon with memory.

Regarding computing power and energy consumption, Rivian claims it achieves 4 times the peak performance of the Gen 2 computing platform (i.e., NVIDIA Orin) while improving power efficiency by 2.5 times.

Core Specifications: Produced on TSMC's 5nm automotive-grade process. The core is a Rivian-designed AI neural engine delivering 800 TOPS of sparse INT8 compute.

Rivian says it is one of the first OEMs to bring MCM packaging to high-compute automotive applications. Tightly coupling the chip and memory yields very high memory bandwidth, with a net bandwidth of 205 GB/s. The MCM design also simplifies the PCB, making it smaller and simpler with fewer layers, which reduces cost.

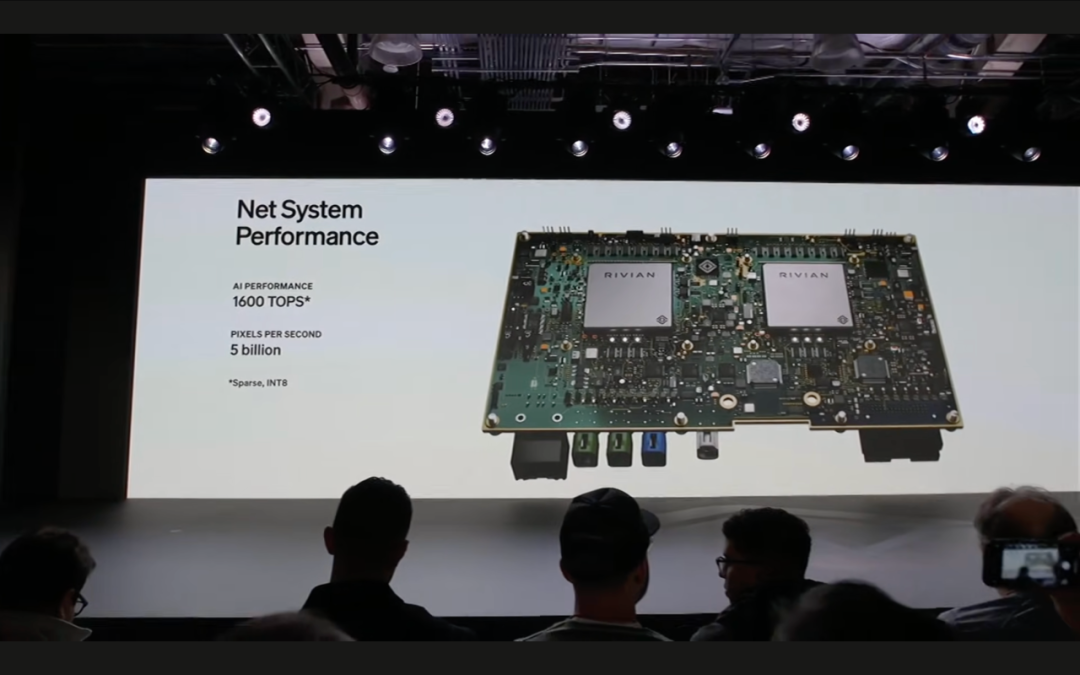

The Gen 3 autonomous driving computing platform uses two RAP1 chips, totaling 1600TOPS.

About the SoC

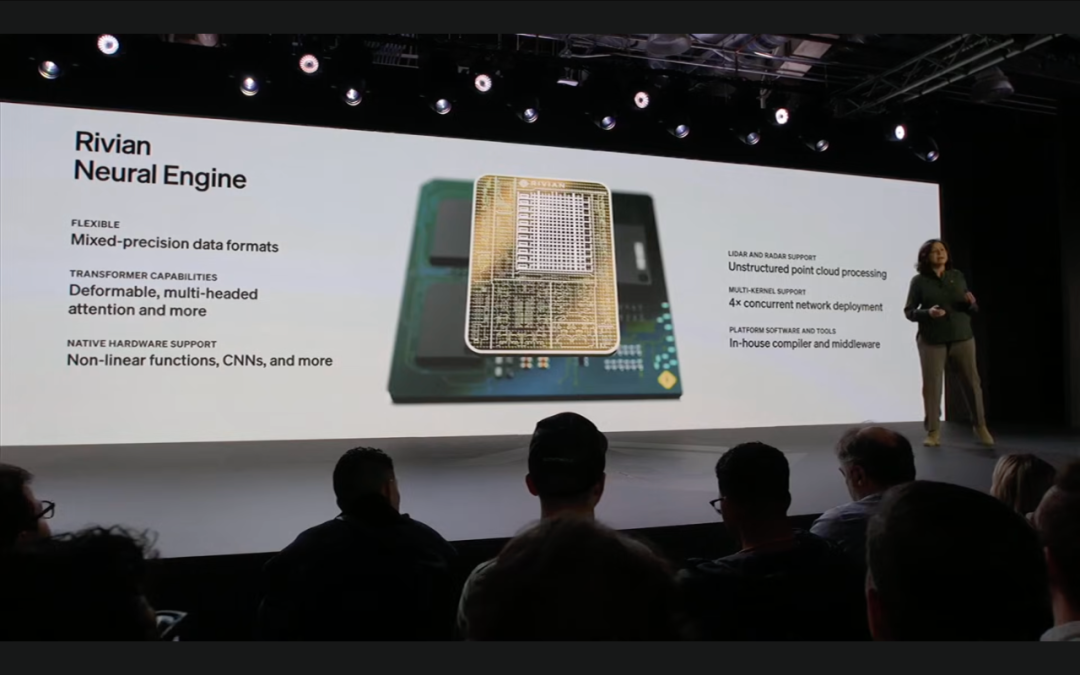

Structure: In addition to the neural engine, the SoC includes 14 energy-efficient Arm Cortex-A720AE cores (application processors) and 8 Arm Cortex-R52 cores for functional safety and real-time computing (the safety island and real-time processing unit), as well as an ISP, encoder, GPU, and other blocks.

AI Support: The neural engine supports state-of-the-art deep learning models, with native hardware support for Transformers and all types of attention mechanisms (such as multi-head attention and deformable attention). It also includes dedicated hardware hooks for LiDAR and radar processing.
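For readers unfamiliar with the operation this hardware is said to accelerate, here is a minimal pure-Python sketch of scaled dot-product attention split across heads. It is a textbook illustration, not Rivian's implementation: the learned projection matrices of real multi-head attention are omitted for brevity, and the toy `tokens` input is made up.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Each output vector is a weighted average of the value vectors.
        out.append([sum(w * v[d] for w, v in zip(weights, values))
                    for d in range(len(values[0]))])
    return out

def multi_head(queries, keys, values, num_heads):
    """Split the feature dimension into heads, attend per head, concatenate.
    Simplification: the learned input/output projections are left out."""
    dim = len(queries[0])
    assert dim % num_heads == 0
    step = dim // num_heads
    heads = []
    for h in range(num_heads):
        sl = slice(h * step, (h + 1) * step)
        heads.append(attention([q[sl] for q in queries],
                               [k[sl] for k in keys],
                               [v[sl] for v in values]))
    # Concatenate the per-head outputs for each query token.
    return [sum((head[i] for head in heads), []) for i in range(len(queries))]

# Toy self-attention over three 4-dimensional tokens (made-up values).
tokens = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
out = multi_head(tokens, tokens, tokens, num_heads=2)
print(len(out), len(out[0]))
```

The inner loops are dominated by dot products and weighted sums, which is the kind of dense arithmetic a dedicated neural engine is built to run in parallel.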

On software and safety, Rivian says it has invested significant internal resources in software tools and a middleware stack, including an in-house compiler, to make full use of RAP1. RAP1 has been designed with functional safety in mind from the outset, in line with ISO 26262 ASIL ratings.

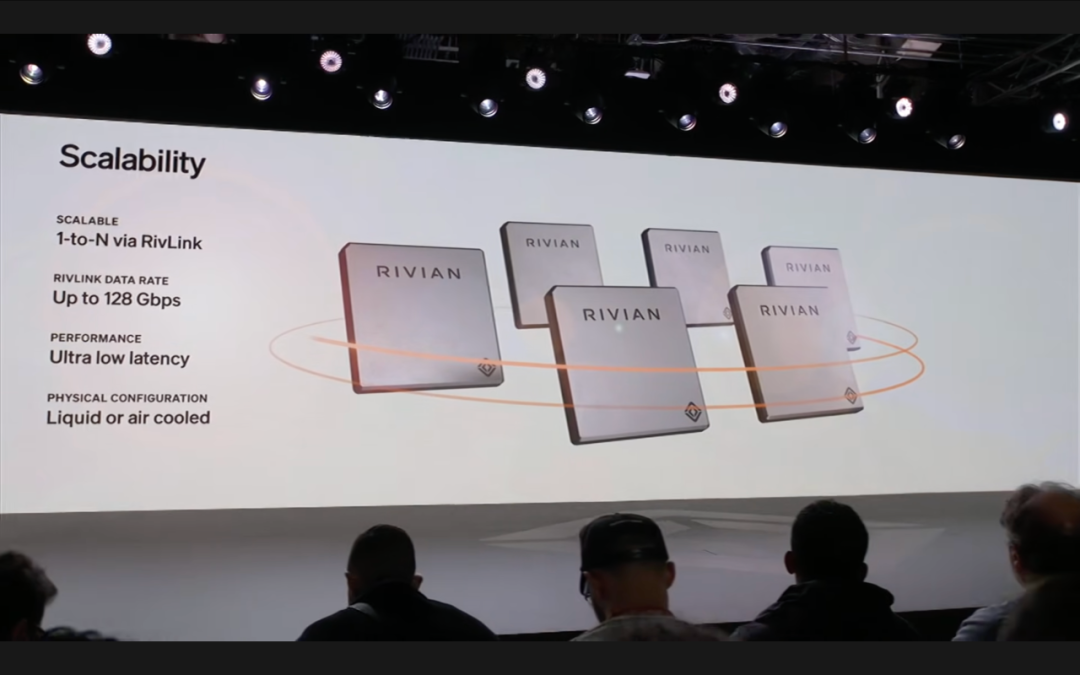

Finally, Rivian claims that its RAP1 chip design is scalable. It can achieve multi-chip connectivity through RivLink (up to 128Gbps), allowing seamless sharing of sensor data between SoCs, thus achieving higher performance.

The system deployed in the first R2 is liquid-cooled, and Rivian states that air cooling is also supported. The Gen 3 autonomous driving hardware system can process 5 billion pixels of sensor data per second.
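The headline figures can be related to one another with some back-of-the-envelope arithmetic. The sketch below uses only numbers quoted in this article (800 TOPS per chip, two chips, 65 MP of camera resolution, 5 billion pixels per second). It assumes, purely for illustration, that the whole pixel budget is camera data, so the results are rough upper bounds rather than statements about Rivian's actual frame rates or utilization.

```python
# Figures quoted in the article
TOPS_PER_CHIP = 800        # sparse INT8 peak per RAP1
CHIPS = 2                  # RAP1 chips in the Gen 3 computer
CAMERA_MEGAPIXELS = 65     # total across all 11 cameras
PIXELS_PER_SECOND = 5e9    # stated sensor throughput

total_tops = TOPS_PER_CHIP * CHIPS

# ASSUMPTION: treat the entire pixel budget as full-resolution camera frames.
max_full_frames_per_s = PIXELS_PER_SECOND / (CAMERA_MEGAPIXELS * 1e6)

# Peak (sparse) operations available per ingested pixel.
ops_per_pixel = total_tops * 1e12 / PIXELS_PER_SECOND

print(f"Total peak compute: {total_tops} TOPS")                                   # 1600 TOPS
print(f"Upper bound on full camera sweeps: {max_full_frames_per_s:.1f} per second")  # 76.9
print(f"Peak ops per pixel: {ops_per_pixel:,.0f}")                                # 320,000
```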

Rivian Software Platform and Data Flywheel Operations

Rivian calls its assisted driving software model the Large Driving Model (LDM) and states that it is developed entirely in-house and trained end-to-end, generating trajectories directly from pixels, radar echoes, and LiDAR points.

LDM uses algorithmic techniques based on Transformers, autoregressive prediction, and reinforcement learning, as well as large language models (LLMs).

The multimodal sensor inputs are integrated into a single world model through early fusion. The sensors complement one another, improving accuracy and prediction confidence.

Inside the network, each sensor input is encoded, projected, and then combined in a shared geometric feature space. A Transformer-based decoder then generates a complete world model from this fused tensor, including pedestrians, vehicles, dense 3D occupancy probabilities for short-range maneuvers, and local maps generated in real time.
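The "encode, project, combine" pipeline can be pictured with a toy example. In the sketch below, each sensor's samples are projected onto a shared 2D grid and the per-sensor values are stacked into one feature vector per cell before any decoding happens, which is what makes the fusion "early". Everything here is a hypothetical stand-in: real encoders are neural networks, and the grid size, sample format, and function names are assumptions, not Rivian's design.

```python
SENSORS = ("camera", "radar", "lidar")

def project_to_grid(points, cell_size=1.0):
    """Project (x, y, value) samples into grid cells, averaging values per cell."""
    sums, counts = {}, {}
    for x, y, value in points:
        cell = (int(x // cell_size), int(y // cell_size))
        sums[cell] = sums.get(cell, 0.0) + value
        counts[cell] = counts.get(cell, 0) + 1
    return {cell: sums[cell] / counts[cell] for cell in sums}

def early_fusion(sensor_points):
    """Stack per-sensor grid values into one feature vector per cell.
    A sensor with no data in a cell contributes 0.0, so every cell
    has the same (camera, radar, lidar) layout."""
    grids = {name: project_to_grid(sensor_points.get(name, []))
             for name in SENSORS}
    cells = set().union(*(g.keys() for g in grids.values()))
    return {cell: [grids[name].get(cell, 0.0) for name in SENSORS]
            for cell in sorted(cells)}

# Made-up samples: (x metres, y metres, confidence-like value)
frame = {
    "camera": [(2.3, 0.4, 0.9)],
    "radar":  [(2.6, 0.1, 0.7), (9.5, 3.2, 0.4)],
    "lidar":  [(2.1, 0.8, 1.0), (2.9, 0.2, 0.8)],
}
fused = early_fusion(frame)
for cell, features in fused.items():
    print(cell, features)
```

In cell (2, 0) all three sensors agree that something is present, while cell (9, 3) is seen by radar alone; a downstream decoder gets to weigh that evidence jointly instead of merging three separate object lists after the fact.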

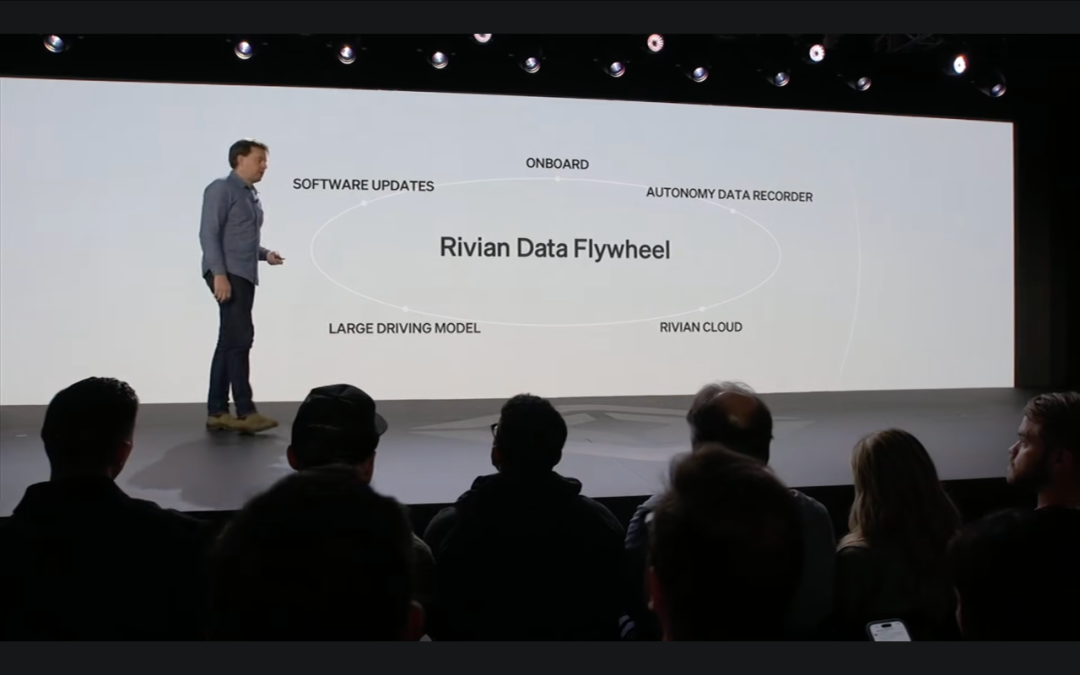

With an end-to-end model in place, the next requirement is a data flywheel, or closed data loop, that converts real-world driving into data and continuously iterates the model.

Rivian has built a queryable dynamic driving scenario database.

Trigger code for ADR, deployed on the vehicle, can start a recording based on any signal seen by the world model, such as a pedestrian running a red light or a gap between the model-predicted trajectory and the human-driven trajectory. ADR is highly selective: most "boring" driving data is never captured. Once triggered, all sensor data from before and after the event is captured, labeled, compressed, and uploaded, and is then immediately available for model training, evaluation, or simulator playback.

Furthermore, once LiDAR is on board, every R2 becomes a "ground truth" vehicle. Rivian presents this as a force multiplier for training data, several orders of magnitude beyond what other OEMs have. In addition, because all sensor modalities are stored, most training data can be labeled automatically by large offline models.
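Capturing data from both before and after a trigger is commonly done with a ring buffer. The sketch below shows one way such a recorder could work, using a trajectory-gap trigger like the one described above. The class name, the 1.0 m threshold, and the frame counts are all hypothetical; the source does not describe ADR's internals.

```python
from collections import deque
import math

class EventRecorder:
    """Keeps the last `pre` frames; on a trigger, saves them plus the
    trigger frame and the next `post` frames as one clip."""

    def __init__(self, pre=3, post=2):
        self.buffer = deque(maxlen=pre)  # rolling window of recent frames
        self.post = post
        self.remaining = 0
        self.current = None              # clip being recorded, if any
        self.clips = []

    def push(self, frame, triggered):
        if self.current is None and triggered:
            self.current = list(self.buffer)  # frames before the event
            self.remaining = self.post + 1    # event frame + `post` more
        if self.current is not None:
            # Triggers that arrive while a clip is open are ignored.
            self.current.append(frame)
            self.remaining -= 1
            if self.remaining == 0:
                self.clips.append(self.current)
                self.current = None
        self.buffer.append(frame)

def trajectory_gap(predicted, human):
    """Largest point-wise distance (metres) between two equal-length paths."""
    return max(math.dist(p, h) for p, h in zip(predicted, human))

THRESHOLD_M = 1.0  # hypothetical trigger threshold
recorder = EventRecorder(pre=3, post=2)
human = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]

for t in range(10):
    offset = 1.5 if t == 5 else 0.1  # the model disagrees with the driver at t=5
    predicted = [(x, y + offset) for x, y in human]
    recorder.push(frame=t,
                  triggered=trajectory_gap(predicted, human) > THRESHOLD_M)

print(recorder.clips)  # [[2, 3, 4, 5, 6, 7]]
```

Only six of the ten frames are kept: three before the disagreement, the frame itself, and two after. The uneventful frames fall out of the buffer and are never uploaded.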

Training and Release of Algorithmic Models

LDM is built on the same technology as large language models (LLMs): Transformers and tokens, where each token represents a small portion of a trajectory. It is trained with GRPO (Group Relative Policy Optimization) reinforcement learning, but the objective is safe, high-performance, smooth driving rather than alignment with human values.

During training, the model samples multiple trajectories, and road-rule rankers rank them, which trains the model to follow the correct road rules.
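The core of GRPO is that each sampled candidate is scored relative to the other candidates in its own group. The sketch below shows that step for a group of trajectories: a made-up rule scorer assigns rewards, and each reward is normalized by the group mean and standard deviation to give an advantage. The scorer, its penalties, and the trajectory format are hypothetical; the policy-gradient update that would consume these advantages is omitted.

```python
import statistics

def rule_score(trajectory, speed_limit=15.0):
    """Hypothetical road-rule ranker: penalize speeding and red-light running.
    Each step is (speed in m/s, ran_red_light)."""
    score = 0.0
    for speed, ran_red_light in trajectory:
        if speed > speed_limit:
            score -= 1.0
        if ran_red_light:
            score -= 5.0
    return score

def group_relative_advantages(rewards):
    """GRPO-style advantage: (reward - group mean) / group std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0] * len(rewards)  # all candidates tied: no learning signal
    return [(r - mean) / std for r in rewards]

# One group of trajectories sampled for the same scene (made-up values).
group = [
    [(12.0, False), (14.0, False)],  # fully legal
    [(16.0, False), (17.0, False)],  # speeding on both steps
    [(12.0, True),  (13.0, False)],  # runs a red light
    [(14.0, False), (16.0, False)],  # speeding on one step
]
rewards = [rule_score(t) for t in group]
advantages = group_relative_advantages(rewards)

print(rewards)                            # [0.0, -2.0, -5.0, -1.0]
print([round(a, 2) for a in advantages])  # [1.07, 0.0, -1.6, 0.53]
```

Trajectories with positive advantage would be made more likely and those with negative advantage less likely, so the legal trajectory is reinforced and the red-light run is suppressed without needing a separate value network.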

Rivian validates new models and decides whether to release them using two methods:

Cloud Simulator: For each release, the entire autonomous driving stack is run through millions of miles of real-world scenarios to measure safety, comfort, and performance metrics in a statistically significant manner.

Apprentice Mode: Before features are released to customers, new software versions run in the background, where they are monitored and compared against human driving and against older versions, accumulating tens of millions of miles of evaluation.
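A background comparison of this kind can be summarized with a simple mileage-weighted metric. The sketch below scores an old and a new software version by how far their planned paths sit from what the human driver actually did. The metric (average displacement), the field names, and the sample segments are assumptions for illustration; the source does not say which metrics Rivian uses.

```python
import math

def average_displacement(plan, human):
    """Mean point-wise distance (metres) between a planned and a driven path."""
    return sum(math.dist(p, h) for p, h in zip(plan, human)) / len(human)

def shadow_report(segments):
    """Mileage-weighted average displacement from human driving, per version.
    Each segment is a dict with 'miles', 'human', 'old', and 'new' paths."""
    total_miles = sum(s["miles"] for s in segments)
    report = {}
    for version in ("old", "new"):
        weighted = sum(average_displacement(s[version], s["human"]) * s["miles"]
                       for s in segments)
        report[version] = weighted / total_miles
    return report

# Made-up logged segments: neither version controls the car.
segments = [
    {"miles": 2.0,
     "human": [(0.0, 0.0), (1.0, 0.0)],
     "old":   [(0.0, 0.4), (1.0, 0.4)],
     "new":   [(0.0, 0.1), (1.0, 0.1)]},
    {"miles": 1.0,
     "human": [(0.0, 0.0), (1.0, 1.0)],
     "old":   [(0.0, 0.2), (1.0, 1.2)],
     "new":   [(0.0, 0.3), (1.0, 1.3)]},
]
report = shadow_report(segments)
print({k: round(v, 3) for k, v in report.items()})  # {'old': 0.333, 'new': 0.167}
```

In this toy data the new version is worse on the second segment but better overall once mileage is taken into account, which is why aggregate comparisons over very large mileage matter more than any single segment.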

In this way, the end-to-end model is deployed to the vehicle, data is collected on the vehicle, and the model is retrained in the cloud and redistributed, forming a data flywheel that continuously improves each software version.

Rivian Unified Intelligence (RUI) and Applications Such as the Smart Cockpit

Rivian has combined its AI applications to form the concept of RUI. It states that RUI is a common AI foundation that understands Rivian's products and operations and personalizes customer experiences.

Rivian has revamped its vehicle operating system to support AI. It has internally developed a multi-agent, multi-LLM, and multimodal intelligence platform. RUI spans both the cloud and the edge.

For edge AI, the R2 will feature nearly 100 TOPS of edge AI dedicated to the cockpit experience. This will shift most intelligent workloads from the cloud to the edge, ensuring a full cockpit AI experience even when the vehicle is offline.

The most important application is the Rivian Assistant, a voice assistant with AI-agent capabilities. It is fully integrated into the user experience and the in-vehicle operating system. It understands the user, the vehicle, and the user's environment, and it will be available to all Gen 1 and Gen 2 customers in early 2026.

The Rivian Assistant is built on an agentic framework, allowing it to integrate into larger ecosystems and personal digital contexts.

Personal context integration: The assistant can integrate with Google Calendar. For example, it can inquire about schedules or manage them through natural language instructions (such as 'Reschedule my conference call with Tim to 5 PM').

Vehicle context integration: The assistant integrates with all vehicle applications, including EV route planning and driving modes. For example, you can request navigation to an appointment location and inquire about the remaining battery level upon arrival. It can also automatically switch to 'Conserve Mode' without specifying a particular mode (such as 'Switch to a more efficient driving mode').

Complex task control: The assistant can execute complex tasks with a single natural language command, such as 'Heat all seats except mine.'

Messaging: The assistant provides a text messaging experience. Because it is fully integrated into the in-vehicle operating system, it can access all applications and controls. It understands context (for example, that you are navigating to a location), can search for nearby restaurants based on the conversation, and can combine the options and your estimated time of arrival (ETA) into a single text message sent to the contact.

The assistant possesses memory and context awareness capabilities, representing a new foundation for Rivian's digital system. AI is exponentially widening the gap between software-defined vehicles and traditional vehicle architectures, and Rivian is uniquely positioned to transition from software-defined vehicles to AI-defined vehicles.

Factory production: RUI's diagnostic agent connects instantly to real-time telemetry data on the assembly line, allowing Rivian to verify quality before vehicles leave the factory.

Service and maintenance: The RUI platform is trained on real vehicle data, empowering technicians to instantly identify and determine the root cause of faults, reducing repair time by several hours. The platform also supports customers in intelligently performing self-service troubleshooting through the vehicle or mobile app.

In Conclusion

Rivian's 'Autonomy & AI Day' clearly laid out its firm commitment to Level 4 autonomous driving, building a highly redundant and efficient system from a self-developed chip (RAP1) plus multimodal sensors (vision, radar, LiDAR).

The multi-sensor fusion camp, which includes Waymo and the NVIDIA supply-chain solution discussed in our previous article 'The VLA Large Model Algorithm Behind Wu Xinzhou Leading NVIDIA's Charge Towards L4 Autonomous Driving,' has now been joined by Rivian, a new force from the United States.

This sets up the sharpest technological divide in automotive AI, against Tesla's persistent 'pure vision' approach.

Now, a core question lies before us:

Will Rivian's aggressive hardware solution and more competitive Autonomy+ pricing force Tesla to reconsider its hardware and pricing strategies?

In the ultimate race to Level 4 autonomy, which technological paradigm do you believe will win consumers' trust earlier and more safely: the multimodal heavyweight solutions of Waymo/NVIDIA/Rivian or Tesla's pure vision minimalism?

References and Images

Rivian AI day .pdf

Reproduction and excerpting are strictly prohibited without permission.