In the AI Era, a Single Misstep Can Unleash a Tsunami from a Butterfly's Flap

12/31 2025

MOMO in the Yangtze River Delta

If a paper blindly trusts an "AI hallucination," it can set off a domino effect in which the same data error is repeated again and again. How can we break this vicious cycle? By fighting magic with magic: using AI tools to check what AI produces.

Let me kick things off with two anecdotes.

The first story revolves around the widespread adoption of AI.

Today, students and teachers alike are embracing AI with open arms. A few days ago, I visited a relative's home and found him scolding his daughter for not writing her essays herself, insisting that she do the work by hand. To his astonishment, she tearfully explained, "The teacher told us to write it first and then submit it to AI for revision suggestions."

Some time ago, I participated in a forum attended by university professors and independent media practitioners. Curious about AI usage, I posed the question, and the consensus was that teachers are extensively utilizing AI to craft course materials.

The second story delves into the realm of accuracy.

Not long ago, the Liangzhu water supply incident stirred up a commotion, only to be suddenly overshadowed by a claim that Nongfu Spring had a factory in Liangzhu. The news spread like wildfire. Fortunately, the incident was swiftly investigated, and the Weibo user who posted the false information promptly deleted it and issued an apology, admitting it was AI-generated and incorrect. One false move nearly spelled disaster for the company.

Clearly, AI has demonstrated over the past two years its ability to provide answers of higher quality than traditional searches, leading people to place blind trust in AI's responses. This trust permeates academia, media, education, science, and public administration. But have we pondered the repercussions if AI makes a blunder akin to the Liangzhu Nongfu Spring incident in serious academic domains?

I believe it would trigger the butterfly effect.

A media report or a paper relying on incorrect data can set off a chain reaction of data errors, ultimately leading AI into cognitive pitfalls and misleading everyone thereafter. This is a domino effect in action.

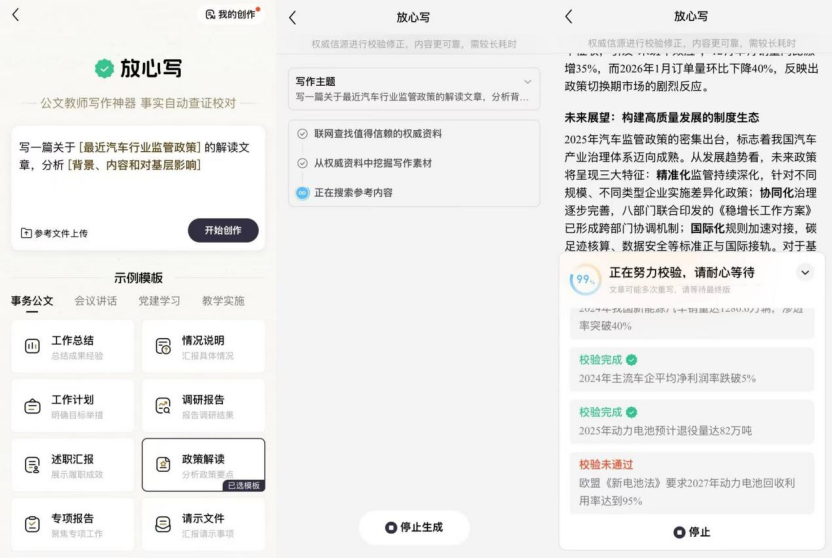

Baidu Wenxin recently unveiled the "Write with Confidence" feature, which significantly bolsters the reliability of AI use in media and education. Frankly, I used to ensure data accuracy by cross-verifying information across multiple AI tools and against authoritative media reports. Now I can upload a finished article directly to Wenxin, which flags any issues so that I can revise online and run a second review.

This adds an extra layer of risk control for every writer by checking for data inaccuracies, policy misinterpretations, and logical flaws. Within the company, I joke that our industry's proofreaders can finally retire. In fact, our own proofreaders have been out of work for quite some time.

Why focus on academia and media? Because a significant portion of AI's data originates from these sources. Errors here can contaminate data across the board, so Baidu Wenxin's "Write with Confidence" helps block this cross-contamination and serves as a potent weapon against AI hallucinations.

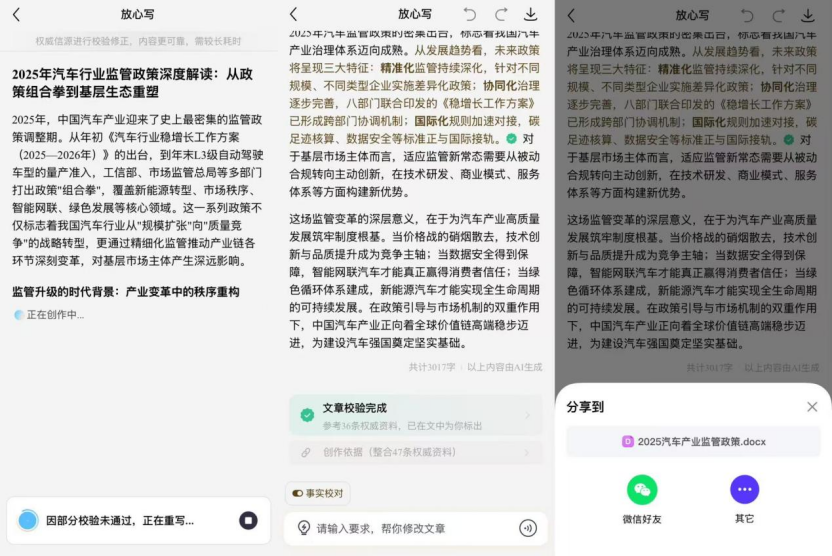

I also gave it a whirl, generating an article on "Interpretation of Recent Automotive Industry Regulatory Policies." I won't go into the details or the final text, but will simply say this: the result is highly usable. Through a plain conversational assistant, it reaches the level of the intelligent agents I previously used with certain large models.

The verification process was intriguing. When one check failed, I asked the AI specifically why it had rejected the statement "The EU's New Battery Law mandates a 95% recycling and utilization rate of power batteries by 2027." It turned out that the law sets different requirements for different materials, so a blanket statement is imprecise. That is rigorous indeed.

After rewriting, it passed with flying colors, and downloading and sharing were seamless.

One pro tip: remember to activate "Fact Check" at the bottom left.

Recently, I saw news that LMArena, the large-model arena, had released its latest rankings. Wenxin's new model, ERNIE-5.0-Preview-1203, scored 1451 and took the top spot in China in the LMArena text rankings, excelling particularly in creative writing.

This deserves accolades. In both models and applications, Wenxin shines in Chinese writing. The latest model capabilities are now available in the newest version of the Wenxin app. I learned that the accuracy of "Write with Confidence" in Chinese writing has surpassed 99%, with a significantly reduced hallucination rate.

Overall, I believe "Write with Confidence" is a viable path toward keeping AI from generating excessive hallucinations in the future.

First, how should the database be built?

A writing tool designed to help users avoid AI hallucinations needs a proprietary, segmented database, and that database cannot be open-ended. "Write with Confidence" relies on authoritative, trustworthy media and government websites, so it does not need to sift through vast amounts of internet data. Hallucinations often arise when incorrect information outweighs correct information, and for now this interference is manageable.

Thus, the secondary verification tool's database must not consist of open-ended web data.
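To make the idea of a closed source database concrete, here is a minimal sketch of allowlist filtering. It is only an illustration of the principle, not how "Write with Confidence" is actually built: the domain names, `TRUSTED_DOMAINS`, `is_trusted`, and `filter_sources` are all hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist. The article does not name the real sources,
# so these reserved ".example" domains are placeholders.
TRUSTED_DOMAINS = {"agency.example", "trusted-news.example"}


def is_trusted(url: str, trusted: set[str] = TRUSTED_DOMAINS) -> bool:
    """Return True if the URL's host is a trusted domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in trusted)


def filter_sources(urls: list[str]) -> list[str]:
    """Keep only the URLs that come from allowlisted domains, preserving order."""
    return [u for u in urls if is_trusted(u)]


candidates = [
    "https://data.agency.example/report",     # subdomain of a trusted domain
    "https://agency.example.evil.test/page",  # look-alike host, not trusted
    "https://random-blog.test/post",          # open-web source, not trusted
    "https://trusted-news.example/article",   # exact trusted domain
]
kept = filter_sources(candidates)
```

Matching on the full host or a dot-prefixed suffix, rather than a plain substring, keeps look-alike hosts such as `agency.example.evil.test` out of the verified set.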

Second, iterate within small, specific domains.

Currently, Wenxin's "Write with Confidence" offers strong review capabilities for official documents, educational course materials, industry reports, and meeting minutes. This capability caters mainly to office work and serious topics rather than entertainment or personal needs. In such specific scenarios the data stays cleaner, and that cleanliness can then extend to larger external databases.

Third, it aids novices in swiftly adapting to work.

For many office newcomers and even new teachers, standardizing their writing and course materials is a daunting task, as these often adhere to fixed formats requiring experience. Besides detecting errors, Wenxin's "Write with Confidence" can also adjust formats based on reference documents you provide.

Over the past year, AI has earned a new moniker in many companies: the "scapegoat."

Previously, when errors occurred in office work, people would point fingers at suppliers. Now they have found that AI can take the blame too, which itself shows how pervasive AI hallucinations have become.

Countless experts worry about our society's future information security and about whether humans will truly be able to tell real information from AI-fabricated information. This year, AI depicted a peanut crisp from Bestore as growing on trees, and the episode turned into a farce. Such incidents are bound to multiply.

Baidu's "Write with Confidence" represents an attempt from the opposite direction: AI can be not just a provider of information but also a corrector of it. Hallucinations may be spawned by AI, but they can also be rectified by AI.

As we step into 2026, AI competition will undoubtedly intensify, and functional differences will become increasingly negligible. What will everyone compete on in the second half? If every AI gives a similar answer to a question, any of them will do. But an AI that can give a distinctive answer with a very low or even zero error rate has the winning formula.

In my opinion, staying firmly tied to the physical world, avoiding hallucinations, and taking responsibility for what it delivers will be the linchpin of AI's future success.

In 2026, AI should not morph into the scapegoat for human errors but emerge as the hero of error correction.