OpenAI's Multi-Billion-Dollar Gamble: A Web of AI Infrastructure Deals Built on Shared Interests, or Just Another Internet Bubble?

09/29 2025

When Sam Altman spoke to reporters outside the expanding data center in Abilene, Texas, the sprawling server halls behind him made a vivid backdrop. His company, a private firm now valued at $500 billion, is building a vast network spanning chips, cloud computing, and data centers at breakneck speed, and in the process has helped push the S&P 500 to new highs.

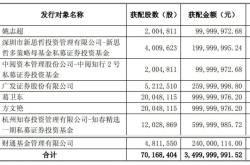

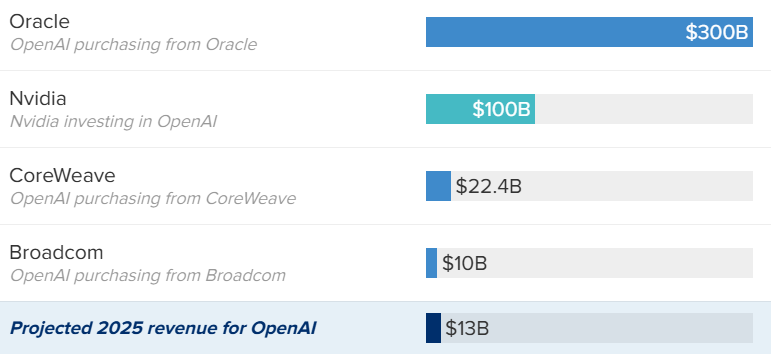

In late September, Nvidia's announcement of a $100 billion investment in OpenAI propelled the Nasdaq and the S&P 500 to record highs. It came just two months after OpenAI's $300 billion "Stargate" partnership with Oracle, and shortly before CoreWeave nearly doubled its AI infrastructure supply commitment, from $11.9 billion to $22.4 billion. In this frenzied race for computing power, OpenAI's deal pipeline keeps filling with multi-billion-dollar transactions.

A Closed-Loop Network of Mutual Benefits

OpenAI's web of transactions has moved beyond simple commercial partnerships into an ecosystem of deeply intertwined interests. From chip giants to cloud service providers, every participant is both a contributor and a beneficiary, together forming a distinctive "risk community."

This symbiosis is most evident in Nvidia's deals: the money the chip giant invests ultimately flows back to it as GPU sales revenue once it is spent on building data centers. Similarly, OpenAI's early investment in CoreWeave secured it priority access to computing power, and this "invest-then-procure" model has since become an industry norm.

Can $13 Billion in Revenue Support a Trillion-Dollar Ambition?

Behind the frenzied expansion lies OpenAI's lopsided balance sheet. CFO Sarah Friar has said that 2025 revenue is projected at just $13 billion, a small figure next to the deals the company has recently signed, some of which run into the hundreds of billions.

Bain & Company's 2025 Technology Report sounds an even louder alarm. It projects that by 2030, global AI computing demand will reach 200 gigawatts, requiring $500 billion a year for data center construction alone. Covering those costs would require $2 trillion in annual industry revenue, far more than AI companies currently earn combined. Even if tech companies commit everything they can, an $800 billion funding gap would remain.

OpenAI's leadership, however, refuses to be constrained by short-term financials. "People said the same about overbuilding internet infrastructure in the early days. Look at the world now," Friar argued, pointing to history. Altman was more direct, saying in an August CNBC interview that he is willing to accept sustained losses for the sake of growth and investment. The same conviction runs through his September 23 blog post, "Abundant Intelligence," in which he wrote that "access to AI will become a fundamental economic driver, possibly even a basic human right."

The Clash Between Bubble Warnings and Infrastructure Demand

OpenAI's aggressive strategy is drawing growing skepticism. The sharpest critics compare its model to the "vendor financing" that preceded the bursting of the internet bubble in 2000, in which upstream suppliers fund downstream customers, who then repay the favor by buying the suppliers' products. The result is a self-reinforcing cycle that is highly vulnerable to collapse.

"Even without questioning AI's technical potential, this field has become overly self-referential," Bespoke Investment Group said bluntly. "If Nvidia must prop up its customers to sustain growth, the entire ecosystem may prove unsustainable." Peter Boockvar, CIO of One Point BFG Wealth Partners, drew parallels to the dot-com era of the late 1990s: "The only difference is that the scale is orders of magnitude larger this time."

Alibaba Chairman Joseph Tsai's warning deserves equal attention. At the HSBC Global Investment Summit, he noted that AI data center construction in the U.S. is already showing signs of a bubble: "Many projects start fundraising without identifying clear clients." Separately, Goldman Sachs has cut its 2025 forecast for AI training server shipments from 31,000 to 19,000 units.

Still, OpenAI's strategy has strong defenders. IDC data points to a 40% shortfall in high-end computing power, and teams training large models often wait months for GPU capacity. An OpenAI spokesperson described the investments as "ambition matching a once-in-a-century opportunity," while Altman stressed: "This is the cost of realizing AI. Unlike previous tech revolutions, AI demands infrastructure on an unprecedented scale."

The Real Dilemma Behind the Computing Arms Race

When OpenAI, Oracle, and SoftBank announced plans to build five new data centers across the U.S., raising the Stargate project's total power capacity to 7 gigawatts, the infrastructure frenzy led by a single company appeared to have gained irreversible momentum. Micron Technology's 46% jump in fiscal fourth-quarter revenue seems to confirm strong demand for computing. Yet DeepSeek's 97% reduction in inference costs, achieved through technical innovation, points to another possibility: the computing race may not have to depend on scale alone.

The outcome of this high-stakes gamble remains uncertain. If AI applications achieve internet-like explosive growth, OpenAI's current investments may become tomorrow's infrastructure cornerstone. But if demand falls short, this carefully woven transaction network could transform into an inescapable debt trap.

As Gai Hong, CEO of Xuanrui Fund, observed, the debate over whether AI computing is in surplus or shortage is essentially a race between technological innovation and the industry's capacity to absorb it. Will OpenAI's multi-billion-dollar web of deals become the bridge to the AI era, or the final frenzy before the bubble bursts? The answer may not be clear until the computing demand projected for 2030 either materializes or fails to. What is already certain is that the entire tech industry has been drawn into a gamble from which there is no turning back.